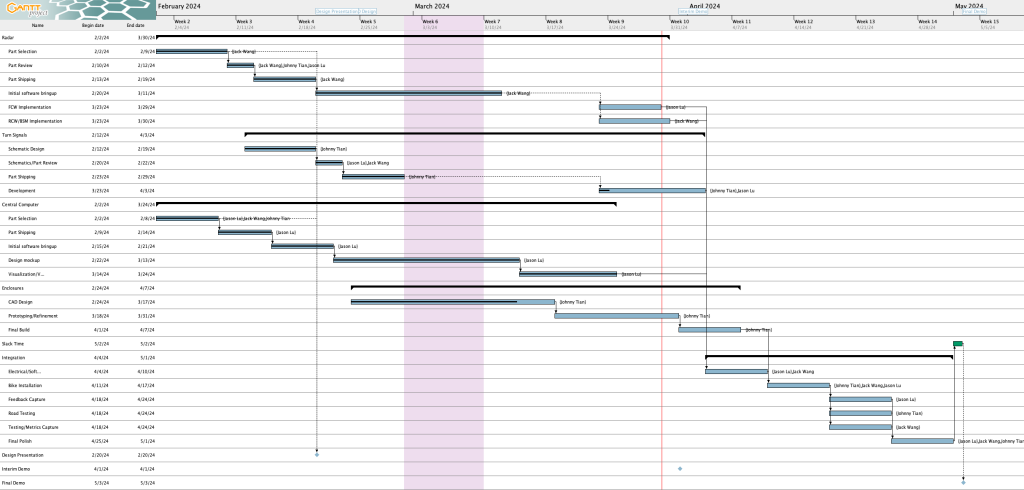

Personal Accomplishments:

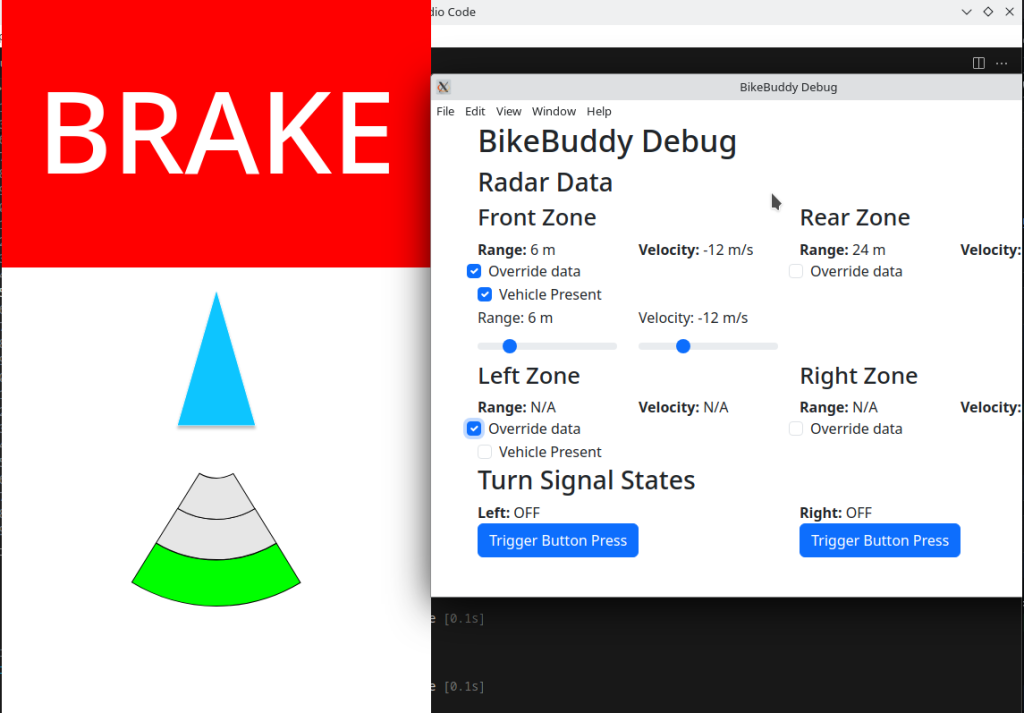

- Interim Demo Setup (2 hrs): I spent some time this week setting up the radar portion of the interim demo. In addition to plotting the detected points, I printed the possible warning type (collision or blind spot) based on the location of each target. I presented the setup on Monday.

- Radar Tuning and UI Integration (10 hrs): I spent the majority of my time last week processing the radar signals into a form useful for our needs and integrating the radar with the UI that Jason has been building. Jason and I discussed which data should be sent to the UI and how to pack it, and we agreed that I would handle most of the data processing. For the rear radar, I will divide the region into three sectors, as discussed before. For the blind-spot regions, I will send a positive flag if the radar detects an object within the threshold, since the UI only needs to know whether an object is approaching the blind spot and does not require detailed information. For the rear-collision region, I will send the object with the smallest absolute distance to the bike, and the UI will use its distance and velocity to alert the rider of a possible collision. I found that we could use a named pipe to send information between my Python radar processing script and Jason's JavaScript UI. I packed the data into JSON format and ran some basic tests with Jason to verify the communication. As of Friday, we were able to transfer data from the radar detection script to the UI.
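The rear-radar processing described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not our actual script: the three sectors are assumed to be left blind spot, center (rear collision), and right blind spot, and the detection fields (`x`, `y`, `velocity`), the sector thresholds, the JSON keys, and the pipe path `/tmp/radar_pipe` are all hypothetical placeholders. The sketch reduces each blind-spot sector to a boolean, keeps only the closest object in the center sector, and serializes the result as one JSON line for the named pipe.

```python
import json
import math
import os
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical sector boundaries in meters; the real thresholds come from tuning.
LANE_HALF_WIDTH = 1.0    # |x| <= this falls in the center (rear-collision) sector
BLIND_SPOT_MAX_X = 3.5   # lateral extent of each blind-spot sector
BLIND_SPOT_MAX_Y = 5.0   # how far behind the bike a blind-spot object still counts


@dataclass
class Detection:
    x: float         # assumed: lateral offset in meters, negative = left of the bike
    y: float         # assumed: distance behind the bike in meters
    velocity: float  # assumed: radial velocity in m/s, negative when approaching


def sector_of(d: Detection) -> Optional[str]:
    """Assign a detection to one of three hypothetical rear sectors, or None."""
    if abs(d.x) <= LANE_HALF_WIDTH:
        return "center"
    if abs(d.x) <= BLIND_SPOT_MAX_X and d.y <= BLIND_SPOT_MAX_Y:
        return "left" if d.x < 0 else "right"
    return None


def build_message(detections: List[Detection]) -> str:
    """Reduce one radar frame to the small JSON payload the UI needs."""
    left = right = False
    closest: Optional[Detection] = None
    for d in detections:
        sector = sector_of(d)
        if sector == "left":
            left = True
        elif sector == "right":
            right = True
        elif sector == "center":
            # Keep only the object with the smallest absolute distance to the bike.
            if closest is None or math.hypot(d.x, d.y) < math.hypot(closest.x, closest.y):
                closest = d
    payload = {
        "blind_spot_left": left,
        "blind_spot_right": right,
        "rear_object": None if closest is None else {
            "distance": round(math.hypot(closest.x, closest.y), 2),
            "velocity": closest.velocity,
        },
    }
    return json.dumps(payload)


def send_over_pipe(message: str, path: str = "/tmp/radar_pipe") -> None:
    """Write one newline-terminated JSON message to a named pipe (POSIX only).

    Opening a FIFO for writing blocks until a reader (e.g. the UI process) opens it.
    """
    if not os.path.exists(path):
        os.mkfifo(path)
    with open(path, "w") as pipe:
        pipe.write(message + "\n")
```

Writing one JSON object per line lets the reader on the UI side split the stream on newlines. That framing is a choice made for this sketch and is not necessarily how our pipeline delimits messages.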

Progress:

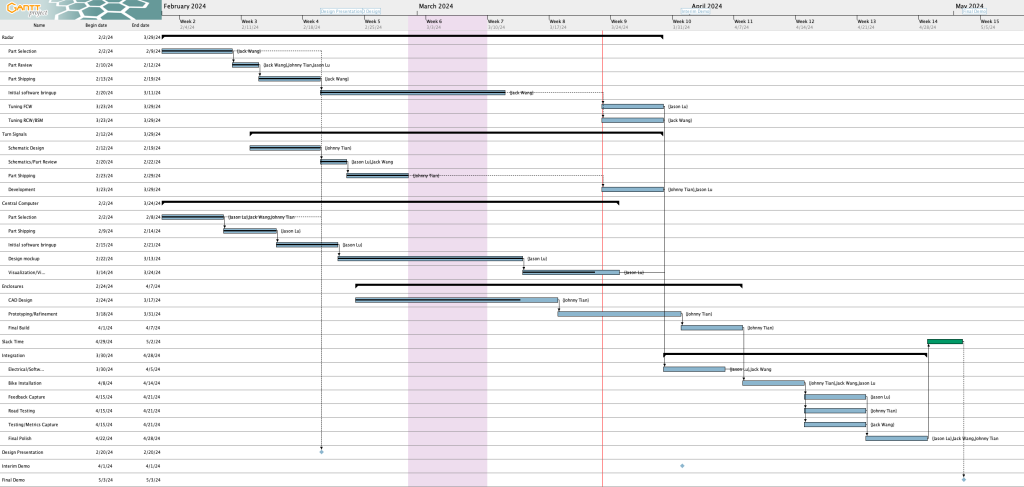

I have finished the radar tuning and some basic UI integration, so I am currently on schedule. Because the forward radar works much like the rear radar, I will take over Jason's task of tuning it to keep us on track for system integration. The latest schedule in our group report reflects this change.

Verification:

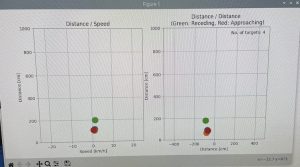

- Basic Radar Detection: I ran tests to benchmark the basic functionality of the radar. I set up the radar in a controlled environment and had people run or walk toward it from different angles to simulate incoming traffic. Using the generated plot, I verified that the radar accurately detected the approaching people and reported reasonable distances. I analyzed the results by recording the x-y distance reported by the radar and comparing it with each person's actual location, measured with meter sticks. This data is used to benchmark the metrics in the Radar Accuracy Baseline section of the design report. This test confirmed the basic functionality of my radar implementation.

- Integration Verification: I verified that the radar output was correctly communicated to the UI by printing the raw data in the radar processing script and comparing it with the information the UI received. This confirms that the system updates data correctly and that the communication pipeline works.

- Radar Detection in Real-World Environment: I will run this verification soon, now that I have finished tuning the rear radar. Its goal is to make sure the radar provides the desired detection results in a real traffic environment. I will mount the radar on the bike, drive a car toward the bike in a parking lot, and then analyze whether the radar output makes sense. This includes comparing the distance and velocity reported by the radar with ground truth measured by tape measure and speedometer.
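The comparison against ground truth described in the verification items could be summarized with a small helper like the one below. This is a hedged sketch, not the analysis we actually ran: the function names `position_errors` and `mean_abs_error`, the choice of mean and max Euclidean error as summary metrics, and the assumption that the speedometer reads in mph (converted with the exact factor 1 mph = 0.44704 m/s) are all illustrative. The metrics that actually matter are the ones defined in the Radar Accuracy Baseline section of the design report.

```python
import math
from statistics import mean
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in meters

# Assumption: the speedometer reads in mph; 1 mph is exactly 0.44704 m/s.
MPH_TO_MPS = 0.44704


def position_errors(reported: List[Point], measured: List[Point]) -> Dict[str, float]:
    """Compare radar-reported (x, y) positions with measured ground truth."""
    if len(reported) != len(measured) or not reported:
        raise ValueError("need equal-length, non-empty lists of paired samples")
    pairs = list(zip(reported, measured))
    dists = [math.dist(r, m) for r, m in pairs]  # Euclidean error per sample
    return {
        "mean_error": mean(dists),
        "max_error": max(dists),
        "mean_abs_x": mean(abs(r[0] - m[0]) for r, m in pairs),
        "mean_abs_y": mean(abs(r[1] - m[1]) for r, m in pairs),
    }


def mean_abs_error(reported: Sequence[float], measured: Sequence[float]) -> float:
    """Mean absolute error between paired scalar readings, e.g. speeds in m/s."""
    if len(reported) != len(measured) or not reported:
        raise ValueError("need equal-length, non-empty sequences")
    return mean(abs(r - m) for r, m in zip(reported, measured))
```

For the parking-lot test, the speed check would look like `mean_abs_error(radar_speeds, [s * MPH_TO_MPS for s in speedometer_mph])`, where `radar_speeds` holds speed magnitudes in m/s. Because the radar may report signed radial velocity, this sketch assumes the caller takes absolute values first.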

Next Week:

- Integration

- FCW (forward collision warning) radar tuning