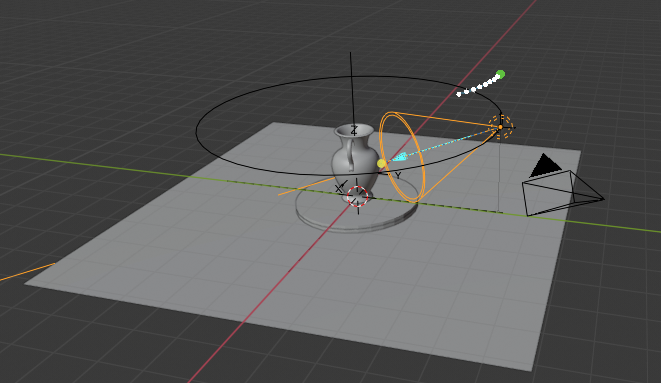

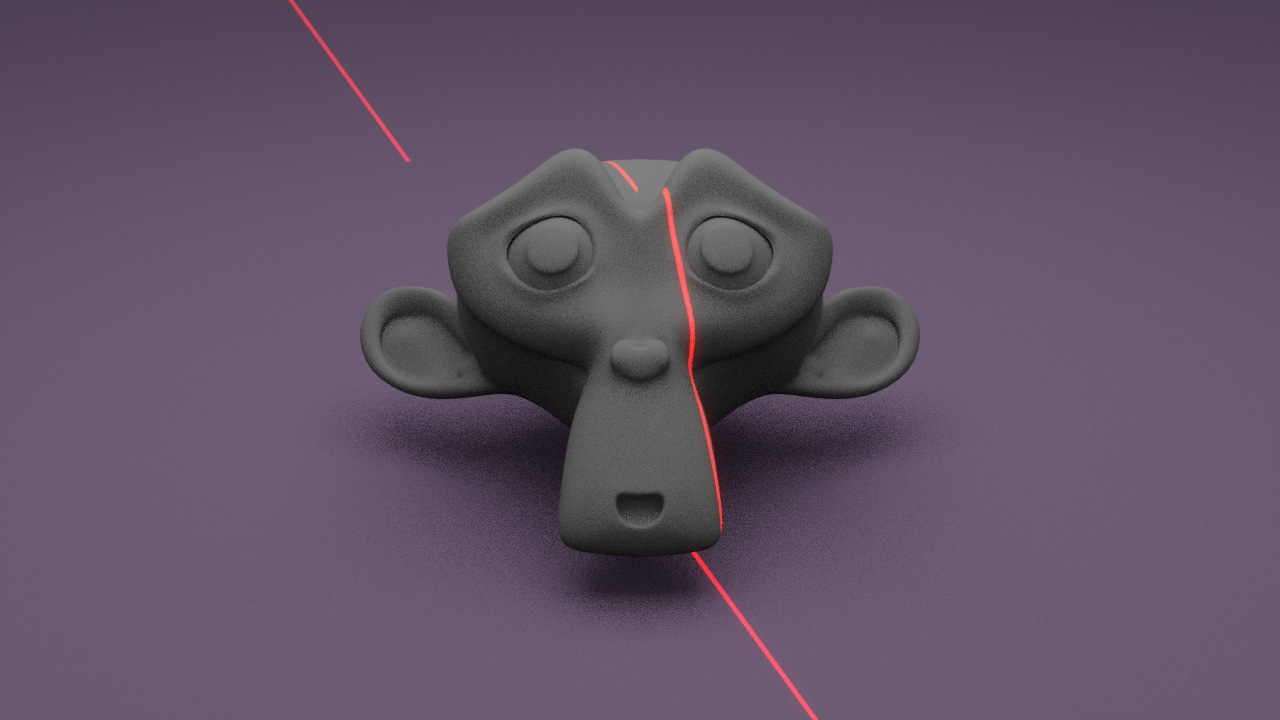

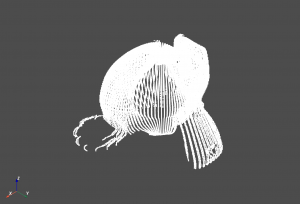

This week, besides working with the rest of the team to prepare for the in-lab demo and to plan the tests we could run, I mainly worked on improving the triangulation algorithm. As mentioned in last week’s report, the meshes produced by the different triangulation algorithms were not satisfactory. The Screened Poisson Reconstruction proposed in Kazhdan and Hoppe’s paper looked the most promising, so I decided to try to fix it.

I first ran the algorithm on example PCD files from the web, and Poisson reconstruction worked perfectly on them.

I looked deeper into the PCD files, and the only main difference was that the example files also contain the FIELDS normal_x, normal_y, and normal_z, which hold the normals information. This supported my speculation that the problem lay in the normals.
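A minimal sketch of how this comparison could be automated, assuming an ASCII PCD header that is already loaded as text. The helper names `pcd_fields` and `has_normals` are hypothetical and not part of any library.

```python
def pcd_fields(header_text):
    """Return the field names listed on the FIELDS line of a PCD header."""
    for line in header_text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "FIELDS":
            return parts[1:]
        if parts[0] == "DATA":
            # The header ends at the DATA line; stop looking.
            break
    return []


def has_normals(fields):
    """True if all three normal components are present in the field list."""
    return {"normal_x", "normal_y", "normal_z"} <= set(fields)


# Two illustrative headers: one without normals, one with normals.
ours = "VERSION .7\nFIELDS x y z\nDATA ascii\n"
example = "VERSION .7\nFIELDS x y z normal_x normal_y normal_z\nDATA ascii\n"

print(has_normals(pcd_fields(ours)))
print(has_normals(pcd_fields(example)))
```

A file that fails this check needs its normals estimated before Poisson reconstruction can use it.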

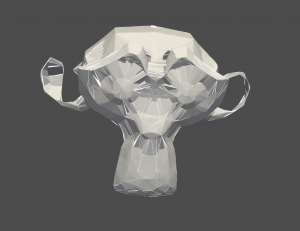

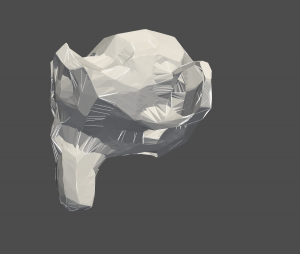

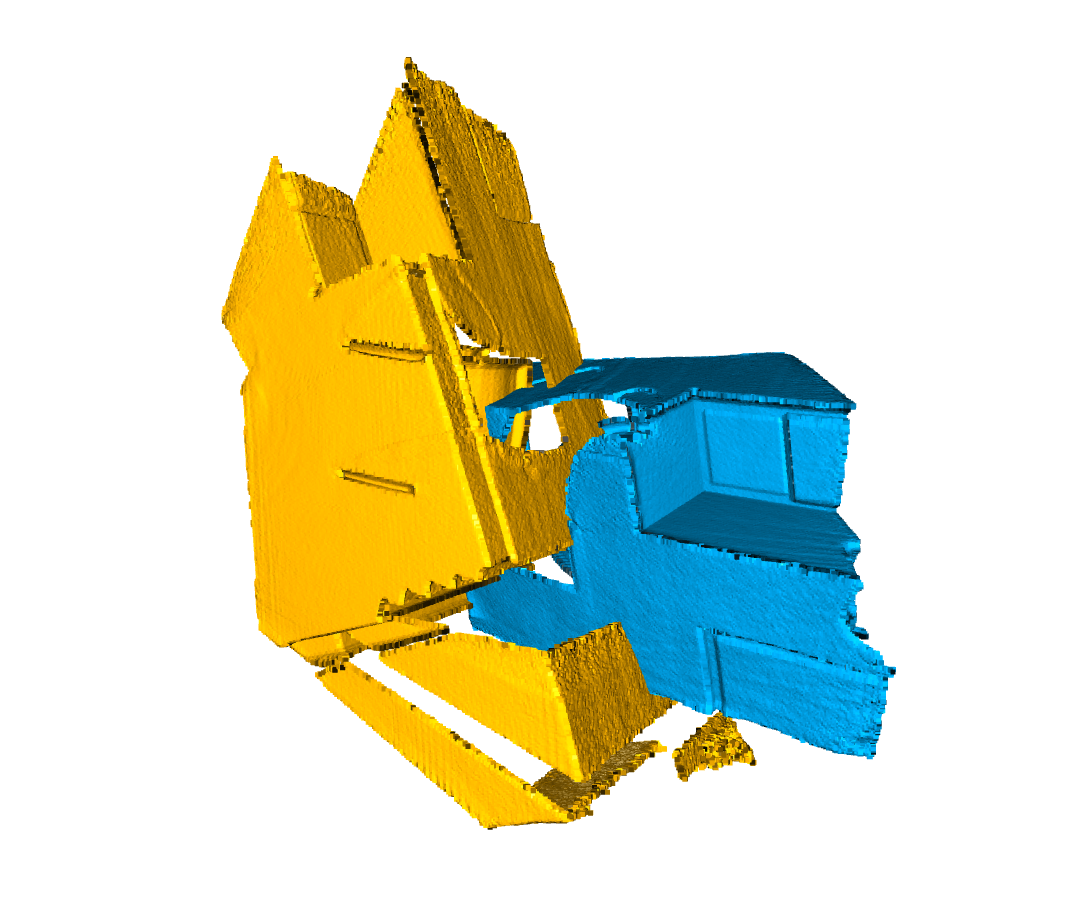

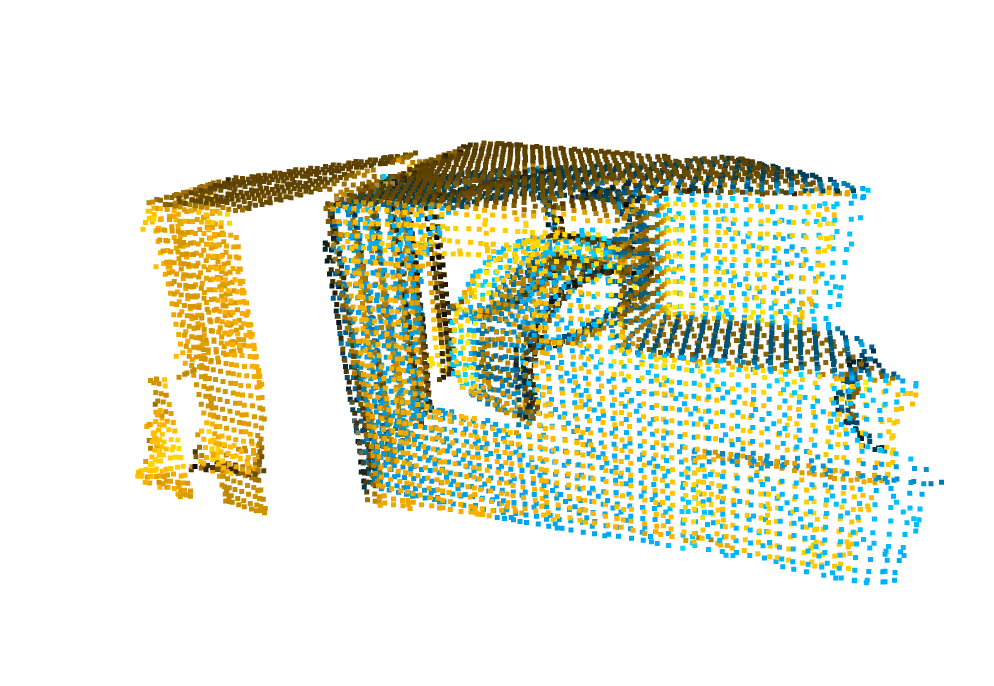

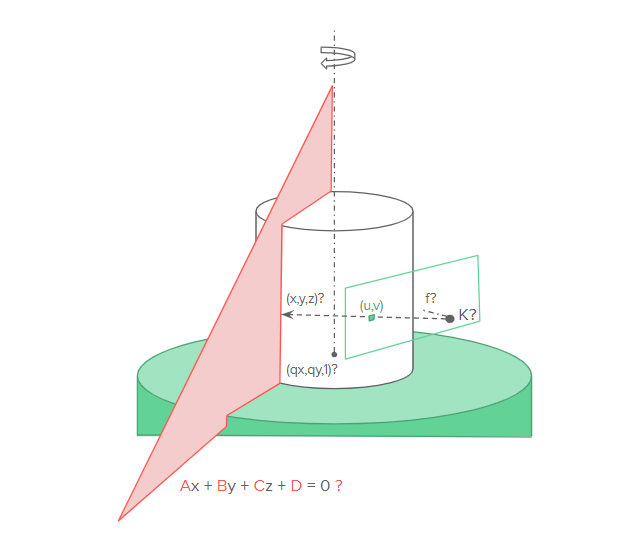

I then tried orienting the normals toward the camera position and toward the laser position, but the reconstruction still did not work.

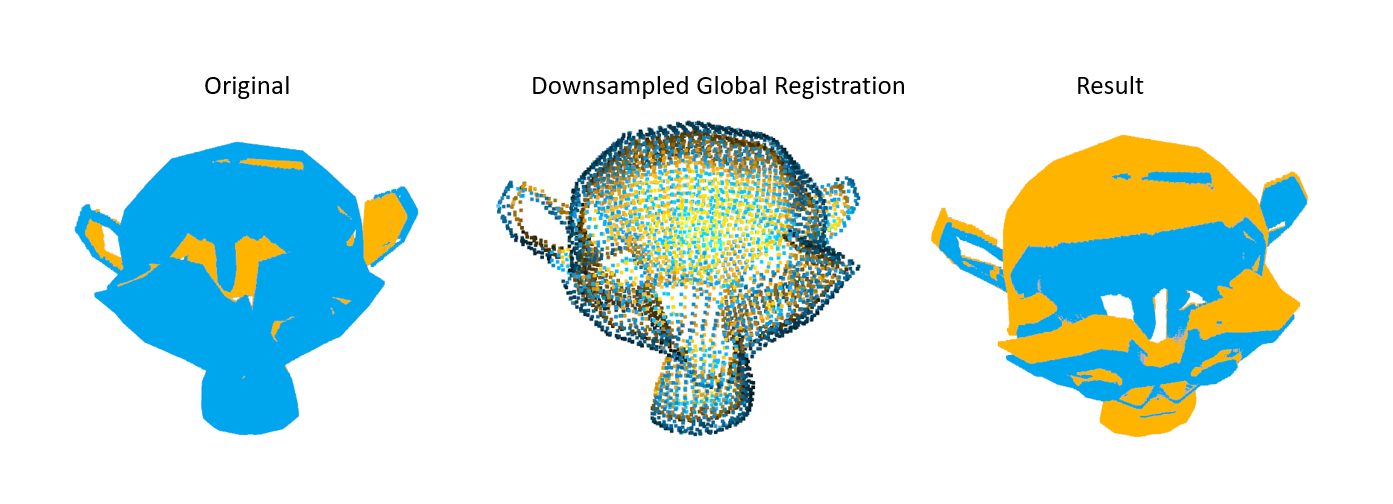

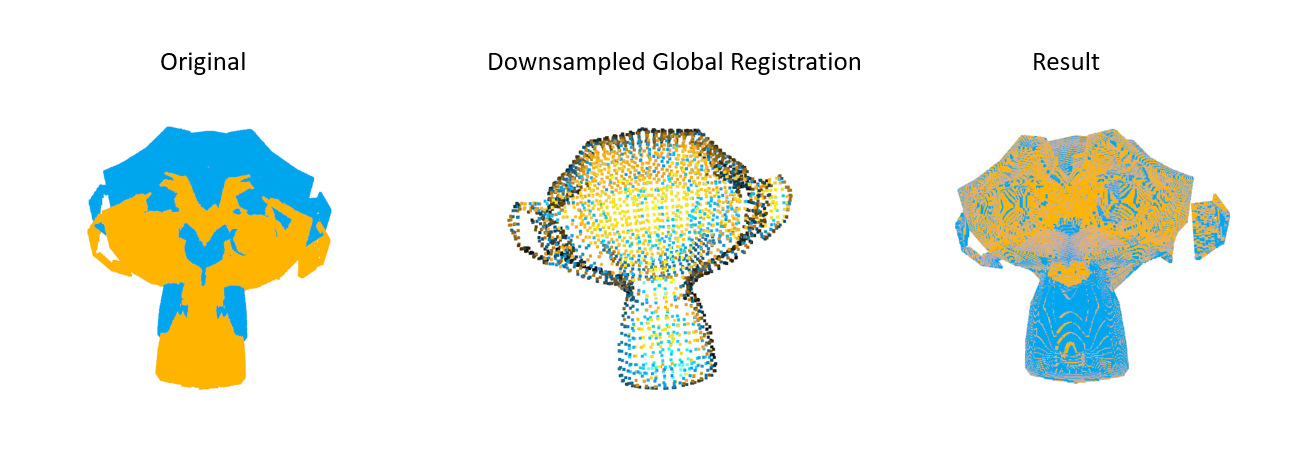

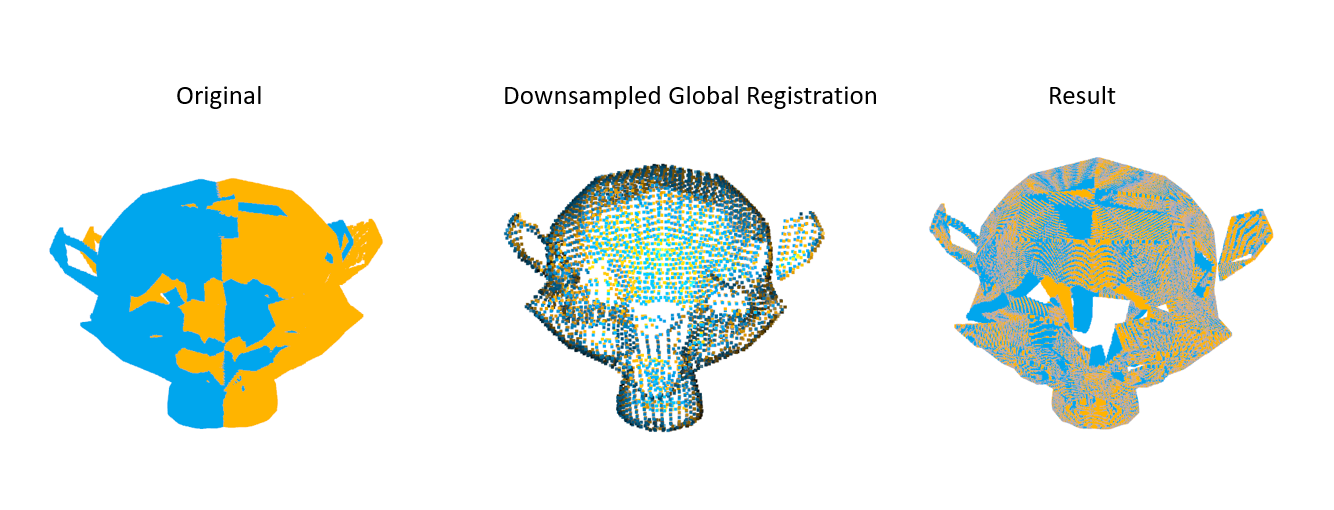

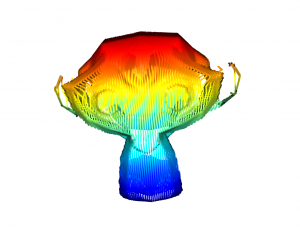

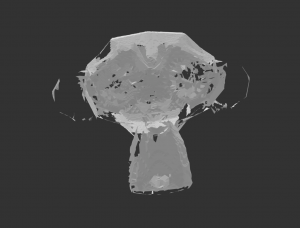

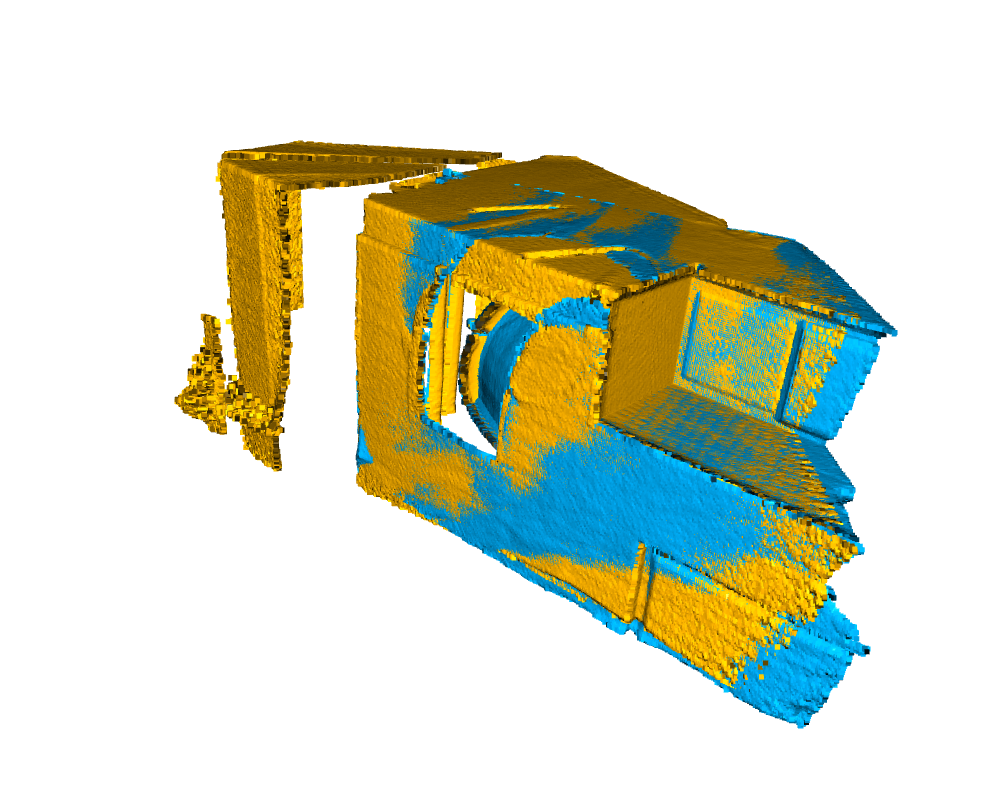

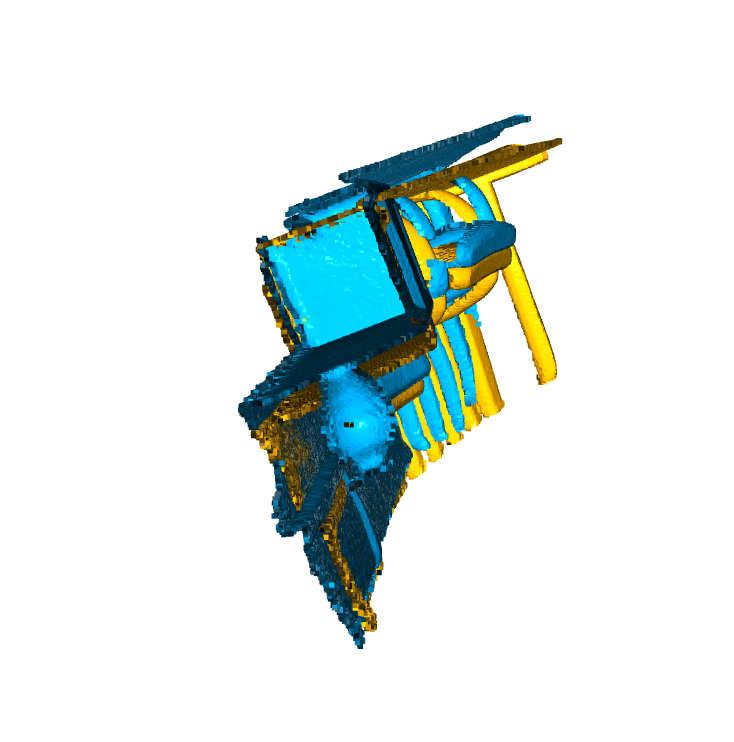

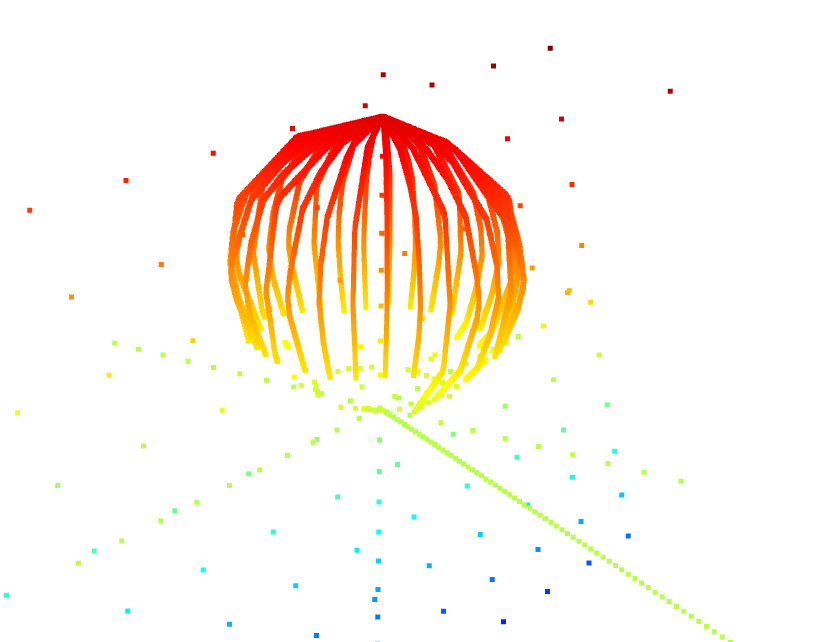

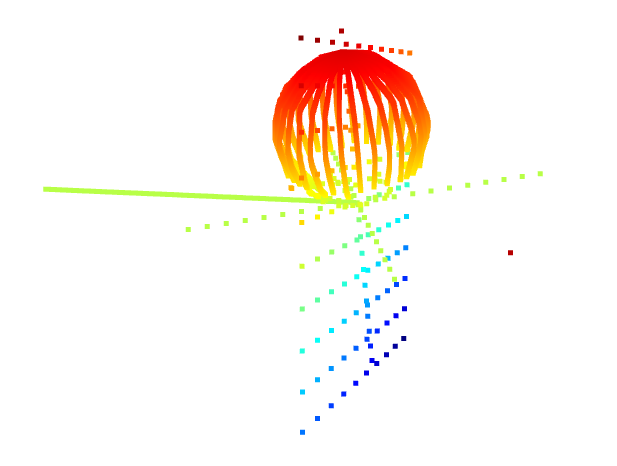

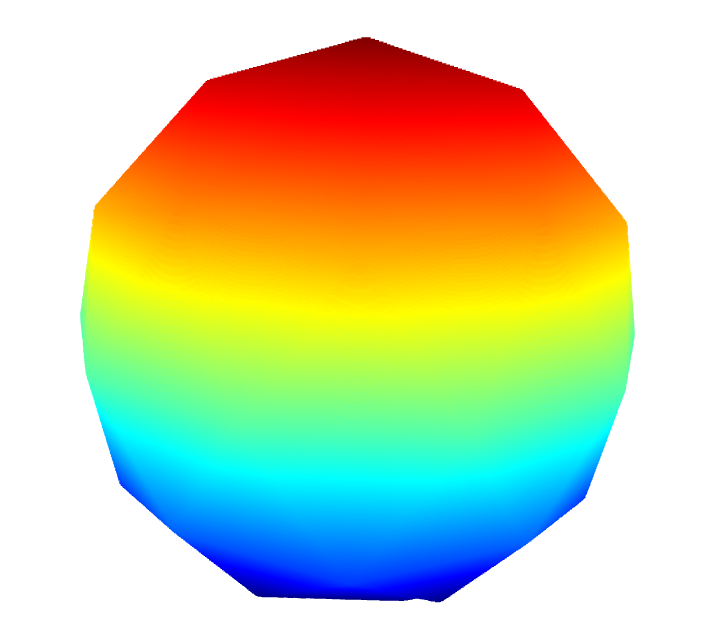

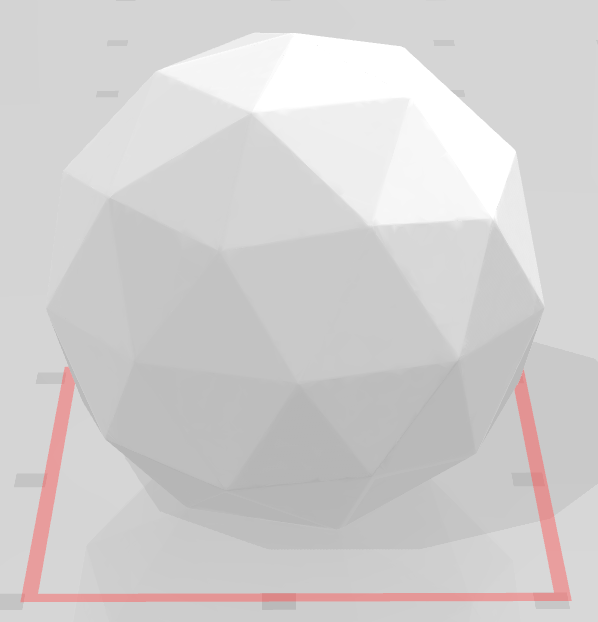

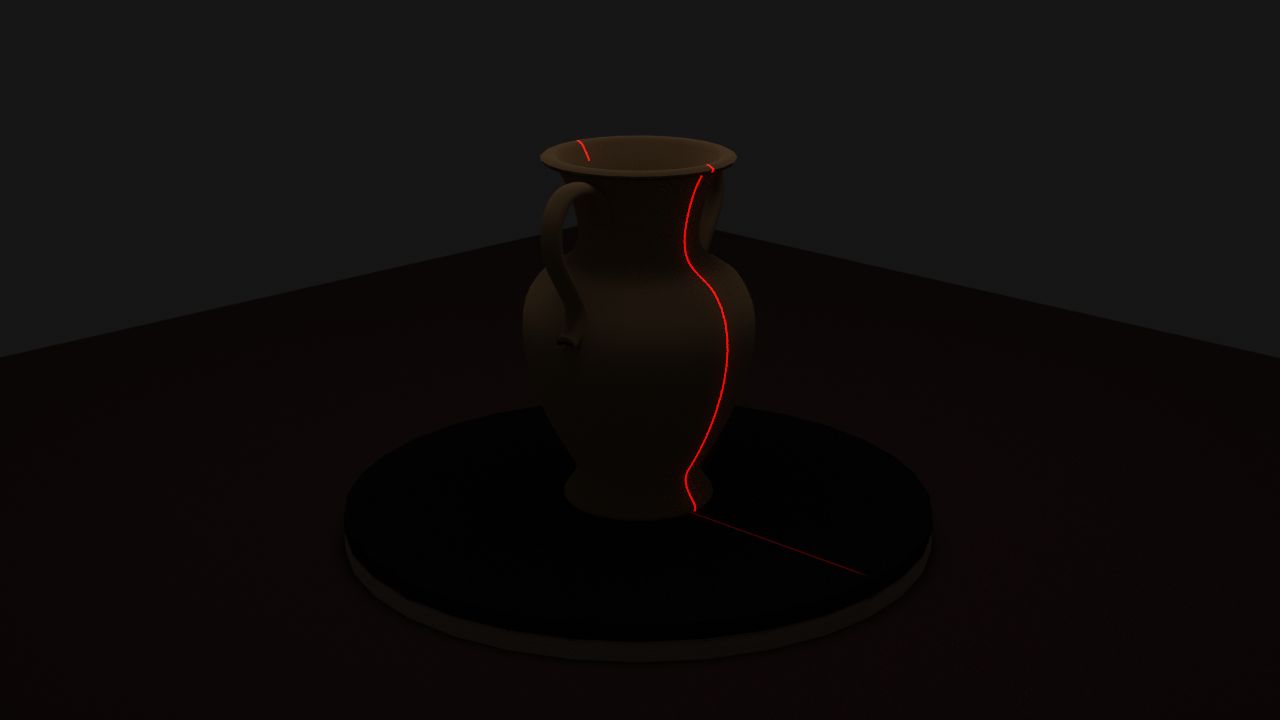

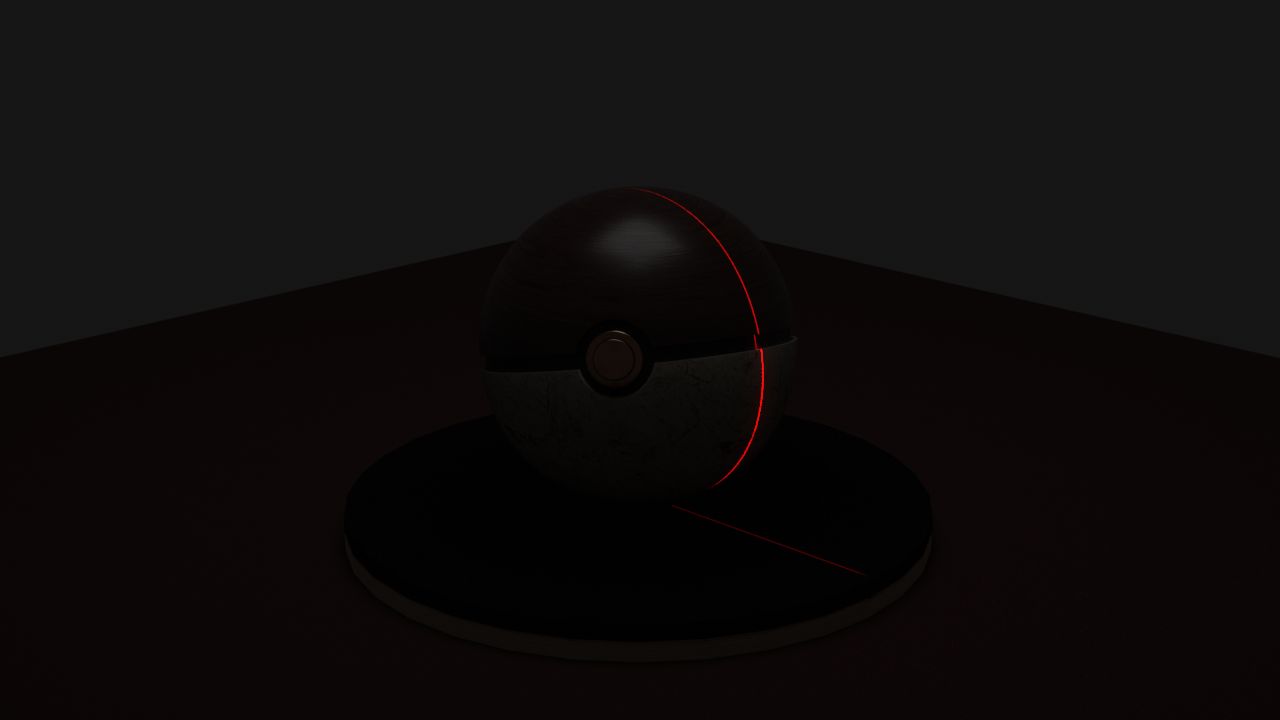

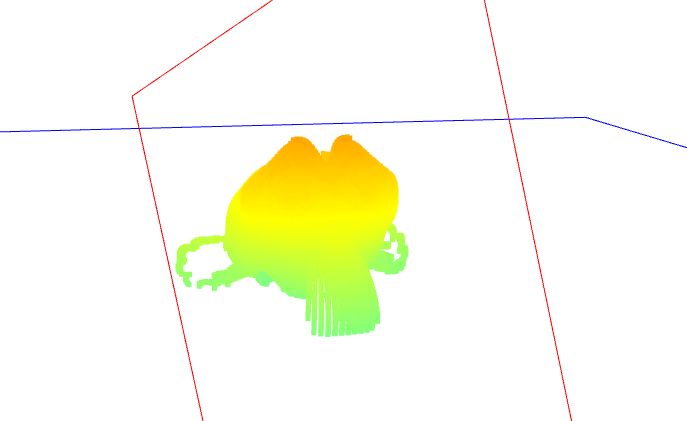

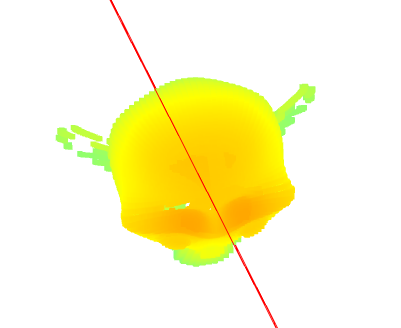

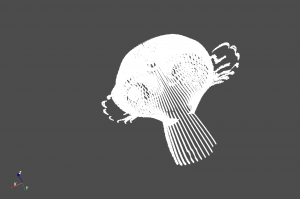

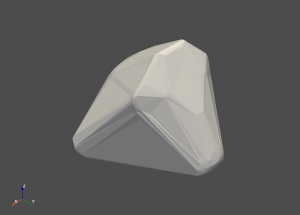

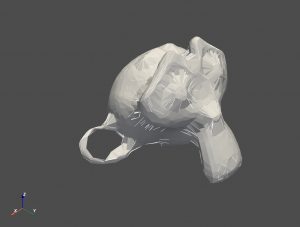

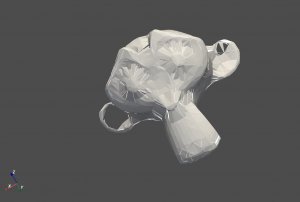

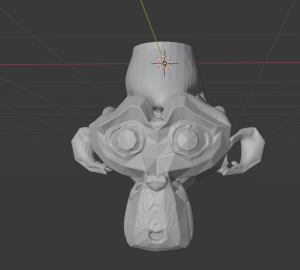

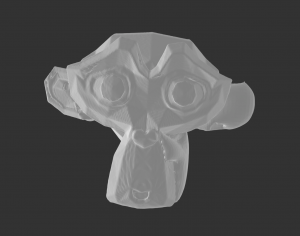

After that, I tried adjusting the search parameter used when estimating the normals. I switched from the default search tree to o3d.geometry.KDTreeSearchParamHybrid, which lets me set both the number of neighbors and the search radius considered for each point in the point cloud. After estimating the normals, I orient them so that they all point inward and then invert them, so that every normal points outward from the surface. The results are much more promising. The smoothed results were less accurate, so I decided to skip smoothing.

I then realigned the normals to get rid of the odd vase shape by making sure that the z-axis alignment of the normals was taken at the center of the point cloud.

After that, I helped Alex with verification by writing a function that computes the accuracy percentage. Building on Alex’s work on computing the distances between the target and the source, I wrote a simple function that checks whether 90% of the points are within 5% of the longest axis and 100% of the points are within 2% of the longest axis.
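A minimal sketch of such a check, assuming the per-point distances are already available as a list of numbers. The function names are hypothetical, and the thresholds are passed in as parameters whose defaults mirror the numbers quoted above.

```python
def fraction_within(distances, tolerance):
    """Fraction of distances that are at most `tolerance`."""
    if not distances:
        raise ValueError("distances must not be empty")
    return sum(d <= tolerance for d in distances) / len(distances)


def meets_accuracy(distances, longest_axis,
                   requirements=((0.90, 0.05), (1.00, 0.02))):
    """Check every (required_fraction, relative_tolerance) pair.

    Each relative tolerance is scaled by the length of the longest axis.
    """
    return all(
        fraction_within(distances, rel_tol * longest_axis) >= required
        for required, rel_tol in requirements
    )


# Example: object with a longest axis of 10 units.
good = [0.1] * 10
bad = [0.1] * 9 + [0.4]
print(meets_accuracy(good, 10.0))
print(meets_accuracy(bad, 10.0))
```

Keeping the thresholds as a parameter makes it easy to report the measured fraction for each tolerance separately, not just a pass or fail.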

I am currently on schedule. For next week, I hope to fully finish verification and help the team prepare for the final presentation and demo videos.