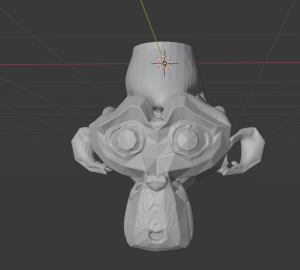

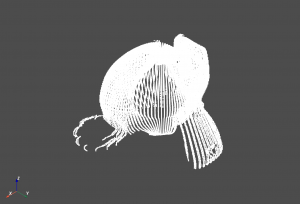

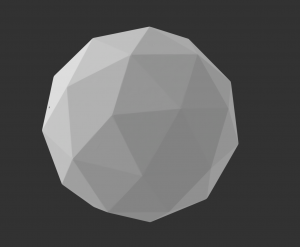

This week, after Alex made fixes to the point cloud construction algorithm, I tested the new pcd files with our current triangulation algorithm. The rendered object looks perfect.

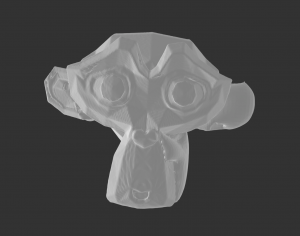

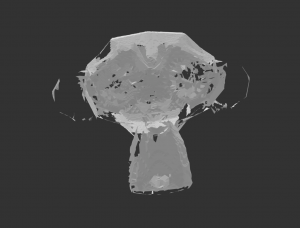

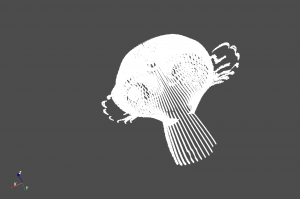

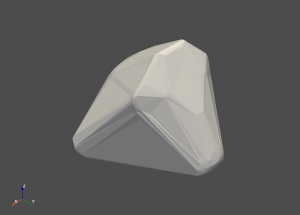

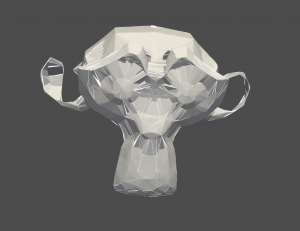

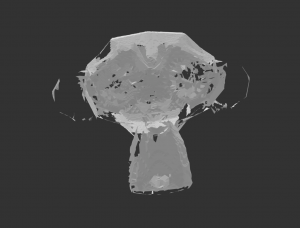

However, when I tested a more complicated object, such as a monkey, the triangle meshes looked a little rough and might not meet our accuracy requirements.

I looked into the point cloud and it is very detailed, so the problem lies with the Delaunay technique we are currently using. I tried changing different parameters, but the results were still not satisfactory.

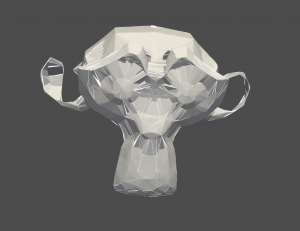

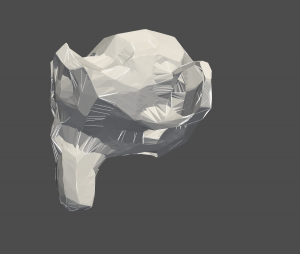

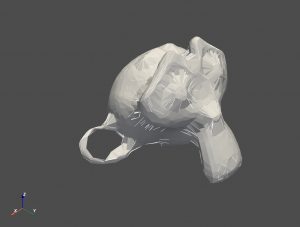

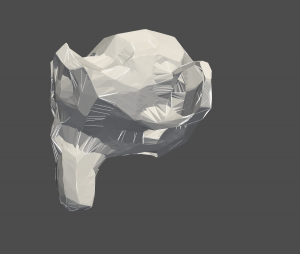

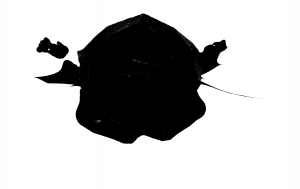

Thus, I started looking into other libraries that might offer other techniques, and I ended up trying Open3D this week. The first technique I tried was to compute the convex hull of the point cloud and generate a mesh from it. The result was far from satisfactory, since the convex hull of a set of points is defined as the smallest convex polyhedron that encloses all of the points in the set. Thus, it only creates a rough outer surface of the monkey.
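To illustrate why a convex hull cannot capture concave detail, here is a minimal 2D analogue (Andrew's monotone chain). It is only a sketch for intuition, not the call used in the project; in Open3D the 3D hull comes from `PointCloud.compute_convex_hull()`. The function names and the L-shaped test data are made up for this example.

```python
def cross(o, a, b):
    """Z-component of (a - o) x (b - o); positive means a left (counter-clockwise) turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull_2d(points):
    """Return the hull vertices in counter-clockwise order (monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        # Drop the last vertex while it would make a right turn or a straight line.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other one.
    return lower[:-1] + upper[:-1]


# Hypothetical L-shaped outline: (1, 1) is the inner, concave corner.
l_shape = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
hull = convex_hull_2d(l_shape)
print(hull)
```

The concave corner `(1, 1)` does not appear in the hull, which is the same effect that flattens the monkey's facial features in 3D.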

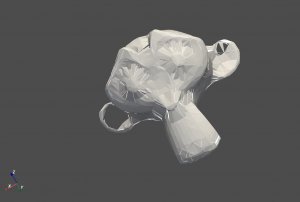

After that, I tried the ball pivoting technique, which implements the Ball Pivoting algorithm proposed by F. Bernardini et al. The surface is reconstructed by rolling a ball of a given radius over the point cloud; whenever the ball touches three points, a triangle is created. I could adjust the radii of the balls used for the reconstruction. I computed the radii by taking the norm of the nearest-neighbor distances of every point in the point cloud and multiplying it by a constant. With a constant smaller than 5, the results were not satisfactory. The results got more accurate as I increased the constant; however, a constant above 15 takes longer than 5 minutes to compute on my computer, which would not pass our efficiency requirement, and the results were still not as good as I had hoped. I tried different smoothing techniques, but they did not help much.
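The radius selection described above could be sketched roughly as follows. This is an illustration under assumptions, not the exact code from the project: the helper names are hypothetical, the mean nearest-neighbor distance is used as the summary statistic, and the second radius (twice the first) is an arbitrary choice for the example.

```python
from statistics import fmean


def radii_from_spacing(nn_distances, constant):
    """Scale the mean nearest-neighbor distance by `constant` (mean is an assumption)."""
    base = constant * fmean(nn_distances)
    # A second, larger ball can bridge sparser regions (illustrative choice).
    return [base, 2.0 * base]


def ball_pivot_mesh(pcd, constant=5.0):
    """Run Open3D's ball pivoting on `pcd` with radii derived from its point spacing."""
    # Imported lazily so the helper above can be used without Open3D installed.
    import open3d as o3d

    if not pcd.has_normals():
        pcd.estimate_normals()  # ball pivoting requires per-point normals
    distances = list(pcd.compute_nearest_neighbor_distance())
    radii = radii_from_spacing(distances, constant)
    return o3d.geometry.TriangleMesh.create_from_point_cloud_ball_pivoting(
        pcd, o3d.utility.DoubleVector(radii)
    )


# Tiny numeric check of the radius helper with made-up distances.
print(radii_from_spacing([1.0, 2.0, 3.0], 5))
```

Larger constants give the ball more points to touch at each step, which is consistent with the longer run times observed above.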

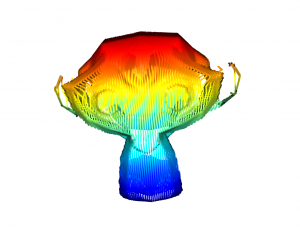

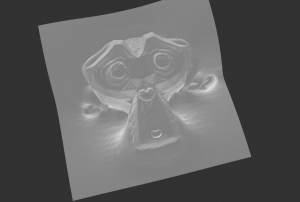

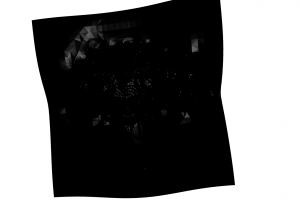

The next technique I tried was Poisson reconstruction, which implements the Screened Poisson Reconstruction proposed by Kazhdan and Hoppe. With this method, I can vary the depth, width, scale, and linear fit of the algorithm. The depth is the maximum depth of the octree used for surface reconstruction. The width specifies the target width of the finest-level octree cells. The scale specifies the ratio between the diameter of the cube used for reconstruction and the diameter of the samples’ bounding cube. The linear fit option determines whether the reconstructor uses linear interpolation to estimate the positions of iso-vertices.
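A minimal sketch of how these four parameters map onto Open3D's `create_from_point_cloud_poisson` call is shown below. The wrapper and helper names are hypothetical, and the default values are simply the library defaults as I understand them, not the settings used for the renders in this post.

```python
def max_grid_resolution(depth):
    """An octree of depth d resolves at most 2**d cells along each axis."""
    return 2 ** depth


def poisson_mesh(pcd, depth=8, width=0, scale=1.1, linear_fit=False):
    """Reconstruct a mesh from `pcd`; returns (mesh, per-vertex densities)."""
    # Imported lazily so the helper above can be used without Open3D installed.
    import open3d as o3d

    if not pcd.has_normals():
        pcd.estimate_normals()  # Poisson reconstruction needs oriented normals
    pcd.normalize_normals()     # rescale every normal to unit length
    mesh, densities = o3d.geometry.TriangleMesh.create_from_point_cloud_poisson(
        pcd, depth=depth, width=width, scale=scale, linear_fit=linear_fit
    )
    return mesh, densities


# Each extra level of depth doubles the finest grid resolution per axis.
for d in (6, 8, 10):
    print(d, max_grid_resolution(d))
```

Because the resolution grows as a power of two, small increases in depth add detail quickly but also raise memory use and run time.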

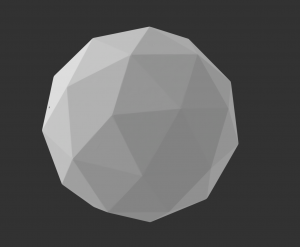

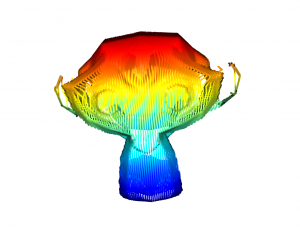

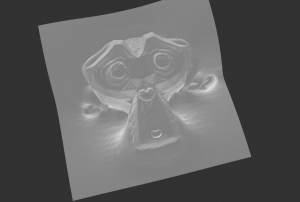

The results are accurate and look smooth once I normalize the normals, but there is a weird extra surface. Looking at the pcd file and a voxel grid (below), there are no points where this weird rectangular surface lies.

My current assumption is that the weird surface comes from the directions in which the normals are oriented, since the location of the surface changes when I orient the normals differently.
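One possible follow-up, which I have not verified on this data, is to use the per-vertex densities that Open3D's Poisson reconstruction returns and drop the vertices with the least point support, since a surface with no nearby points should score low. The sketch below is an assumption about how that could look; the helper names and the 5% fraction are hypothetical.

```python
def low_density_mask(densities, fraction=0.05):
    """Flag roughly the `fraction` of vertices with the lowest density values."""
    values = list(densities)
    cutoff_index = int(len(values) * fraction)
    if cutoff_index == 0:
        return [False] * len(values)
    threshold = sorted(values)[cutoff_index]
    # Strict comparison: ties at the threshold are kept.
    return [d < threshold for d in values]


def poisson_without_low_density(pcd, depth=9, fraction=0.05):
    """Poisson reconstruction followed by removal of weakly supported vertices."""
    # Imported lazily so the helper above can be used without Open3D installed.
    import open3d as o3d

    # Assumes `pcd` already has normals.
    mesh, densities = o3d.geometry.TriangleMesh.create_from_point_cloud_poisson(
        pcd, depth=depth
    )
    mesh.remove_vertices_by_mask(low_density_mask(densities, fraction))
    return mesh


# Made-up densities: the two lowest values are flagged when fraction is 0.5.
print(low_density_mask([4.0, 1.0, 3.0, 2.0], fraction=0.5))
```

If the extra surface survives this filter, that would point back toward normal orientation as the cause rather than missing point support.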

I’m currently a little behind schedule, since I had hoped to fully finish triangulation, but luckily our team allocated enough slack time for me to fix this. If I finish early, I hope to help the team work on the testing benchmarks and on adding noise.

For next week, I hope to fix this issue, either by applying other techniques on top of the Poisson technique or by switching to the marching cubes algorithm, which also seems viable.