This week I finished the prototype code that generates the point cloud from a set of scan images. Last week, I implemented laser detection in the images and the transformation of pixels from screen space to world space. This week I implemented the final two components of the point cloud generation pipeline:

- Ray-plane intersection: a ray is cast from the camera origin through each pixel's location in world space and intersected with the laser plane in world space. The intersection point is the point of contact with the object in world space.

- Reverse rotation about the turntable's center axis. Once we find the intersection points on the object in world space, they must be rotated back about the turntable's axis of rotation to find the corresponding points in object space. These object-space points are the final points of the point cloud.
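Written as equations, the two steps look roughly like the sketch below. The symbols are labels chosen for illustration, not names from the prototype: $\mathbf{o}$ is the camera origin, $\mathbf{q}$ a pixel's world-space position, $\mathbf{p}_0$ and $\mathbf{n}$ a point on the laser plane and its normal, $\theta$ the turntable angle for the frame, and $\mathbf{c}$ a point on the turntable axis. The sign of $\theta$ (counterclockwise rotation about $+Z$) is an assumed convention.

$$
\mathbf{r}(t) = \mathbf{o} + t\,\mathbf{d}, \qquad \mathbf{d} = \mathbf{q} - \mathbf{o}
$$

Substituting the ray into the plane equation $(\mathbf{x} - \mathbf{p}_0)\cdot\mathbf{n} = 0$ and solving for $t$:

$$
t = \frac{(\mathbf{p}_0 - \mathbf{o})\cdot\mathbf{n}}{\mathbf{d}\cdot\mathbf{n}}, \qquad \mathbf{x}_w = \mathbf{o} + t\,\mathbf{d}, \qquad \text{valid when } \mathbf{d}\cdot\mathbf{n} \neq 0 \text{ and } t > 0
$$

Undoing the turntable rotation about the vertical axis through $\mathbf{c}$:

$$
\mathbf{x}_o = R_z(-\theta)\,(\mathbf{x}_w - \mathbf{c}) + \mathbf{c}, \qquad
R_z(\theta) = \begin{pmatrix} \cos\theta & -\sin\theta & 0 \\ \sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{pmatrix}
$$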

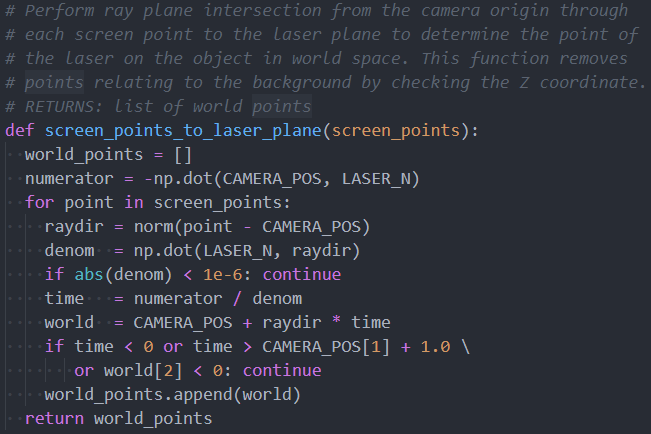

As last week, I had to write a few scripts in Blender to extract parameters such as the transformation between laser space and world space. With this transformation, and knowing that the laser plane passes through the origin of laser space, the plane can be represented simply by its normal vector, which points along -X in laser space. Transforming that normal (together with the laser-space origin, which lies on the plane) into world space gives the laser plane in world space. The plane can then be used in a simple ray-plane intersection, which needs only a few dot products and some basic arithmetic. The code for ray-plane intersection to find world-space points on the object:

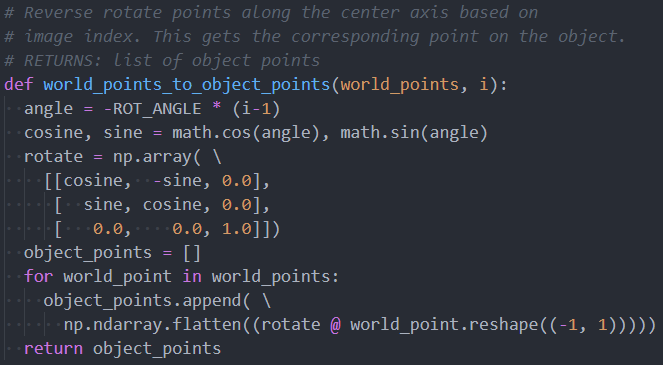
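For readers who cannot see the screenshot, here is a minimal plain-Python sketch of the same idea: building the world-space laser plane from the laser-to-world transform, then intersecting a camera ray with it. It is not the prototype's code. The function names, the row-major 4x4 matrix layout with translation in the last column, and the assumption that the transform is rigid (rotation plus translation, no scale or shear) are all illustrative assumptions.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def laser_plane_world(laser_to_world: Sequence[Sequence[float]]) -> Tuple[Vec3, Vec3]:
    """Return (point, normal) of the laser plane in world space.

    Assumes laser_to_world is a row-major 4x4 rigid transform with the
    translation in the last column, and that the plane passes through the
    laser-space origin with normal -X in laser space.
    """
    m = laser_to_world
    # The laser-space origin maps to the translation column.
    point = (m[0][3], m[1][3], m[2][3])
    # Rotating (-1, 0, 0) by the 3x3 rotation block negates its first column.
    # For a pure rotation this is also the correct way to transform a normal.
    normal = (-m[0][0], -m[1][0], -m[2][0])
    return point, normal


def intersect_ray_plane(origin: Vec3, through: Vec3, plane_point: Vec3,
                        plane_normal: Vec3, eps: float = 1e-9) -> Optional[Vec3]:
    """Intersect the ray from `origin` through `through` with a plane.

    Returns None if the ray is (nearly) parallel to the plane or if the
    intersection lies behind the camera.
    """
    d = sub(through, origin)  # ray direction through the pixel's world position
    denom = dot(d, plane_normal)
    if abs(denom) < eps:
        return None  # ray runs parallel to the laser plane
    t = dot(sub(plane_point, origin), plane_normal) / denom
    if t <= 0:
        return None  # hit would be behind the camera
    return (origin[0] + t * d[0], origin[1] + t * d[1], origin[2] + t * d[2])


# Example: laser plane x = 1 (laser placed at x = 1, no rotation), camera at the origin.
plane_point, plane_normal = laser_plane_world(
    [[1, 0, 0, 1], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]])
hit = intersect_ray_plane((0.0, 0.0, 0.0), (0.5, 0.1, 0.2), plane_point, plane_normal)
```

In practice the same arithmetic is usually vectorized with NumPy over all detected laser pixels in a frame rather than looping point by point.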

And the code for transforming from world space to object space (the reverse rotation). It uses an Euler rotation matrix about the Z axis, since that is the turntable's axis of rotation:

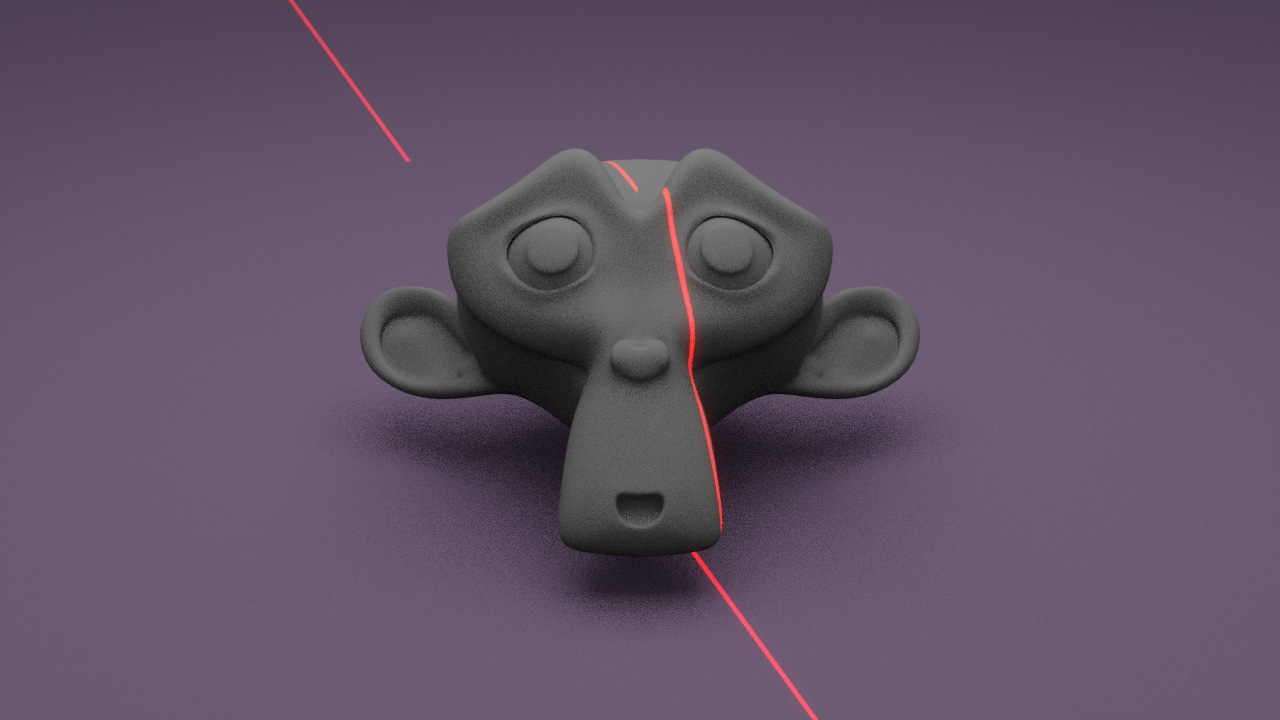
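A minimal sketch of the reverse rotation, again in plain Python and not taken from the prototype. It assumes the turntable rotates counterclockwise about +Z through a point `center`, and the helper `frame_angle` is hypothetical: it assumes `num_frames` evenly spaced captures over one full revolution, which may not match the actual scan setup.

```python
import math
from typing import Iterable, List, Tuple

Vec3 = Tuple[float, float, float]


def rotation_z(theta: float) -> Tuple[Vec3, Vec3, Vec3]:
    """Row-major 3x3 matrix for a counterclockwise rotation of theta radians about +Z."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def world_to_object(point: Vec3, theta: float, center: Vec3 = (0.0, 0.0, 0.0)) -> Vec3:
    """Undo a turntable rotation of theta radians about the vertical axis through `center`."""
    r = rotation_z(-theta)  # the inverse of a rotation by theta is a rotation by -theta
    rel = [p - c for p, c in zip(point, center)]  # shift the axis to the origin
    rotated = [sum(r[i][j] * rel[j] for j in range(3)) for i in range(3)]
    return tuple(v + c for v, c in zip(rotated, center))  # shift the axis back


def frame_angle(frame_index: int, num_frames: int) -> float:
    """Hypothetical: turntable angle for a frame, assuming evenly spaced captures."""
    return 2.0 * math.pi * frame_index / num_frames


def accumulate_cloud(frames: Iterable[Tuple[int, List[Vec3]]], num_frames: int,
                     center: Vec3 = (0.0, 0.0, 0.0)) -> List[Vec3]:
    """Merge per-frame world-space hit points into a single object-space point cloud."""
    cloud: List[Vec3] = []
    for frame_index, hits in frames:
        theta = frame_angle(frame_index, num_frames)
        cloud.extend(world_to_object(p, theta, center) for p in hits)
    return cloud
```

Rotating about an arbitrary `center` by translating to the origin and back means the turntable axis does not have to coincide with the world origin; if it does, the default `center` reduces this to a plain Z rotation.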

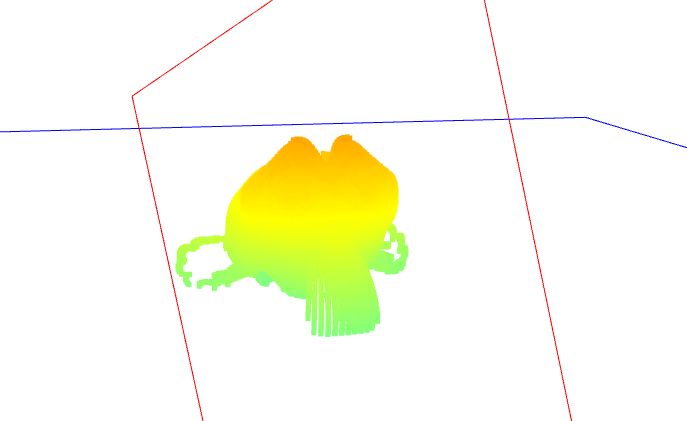

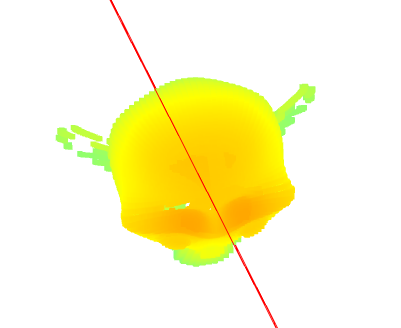

Below is the generated point cloud for a scan of a monkey head, along with one example scan image.

The blue plane is the plane of the rotating platform, and the red plane is the laser plane.

Currently, I believe I am on track for my portion of the project. Tomorrow, we plan to prepare the demo video for Monday using the work I did this past week. After the demo, we plan to refine and optimize the prototype code until it meets our requirements. After that, we hope to eventually implement ICP (Iterative Closest Point) to meet our single-object, multiple-scan goal.