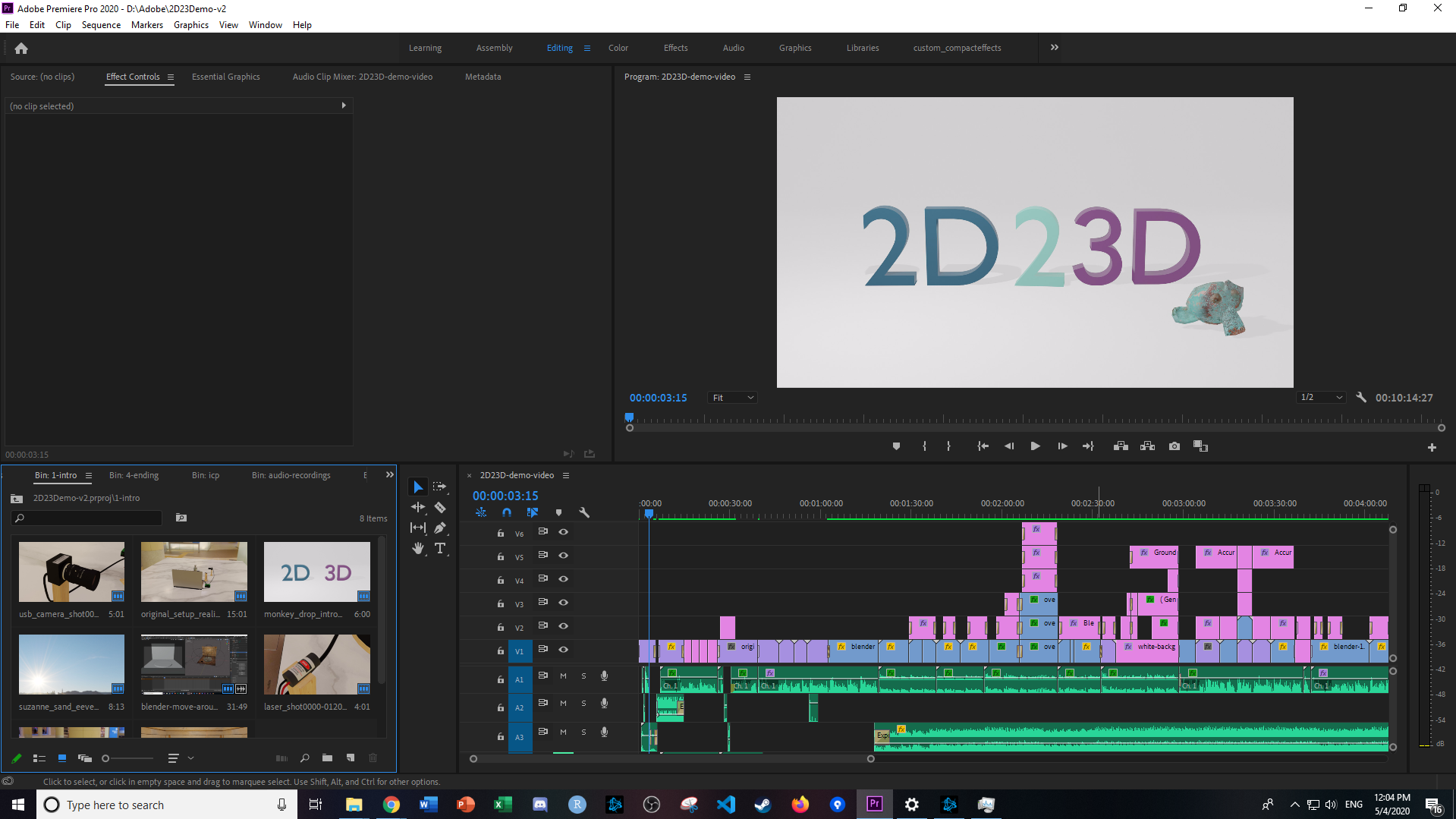

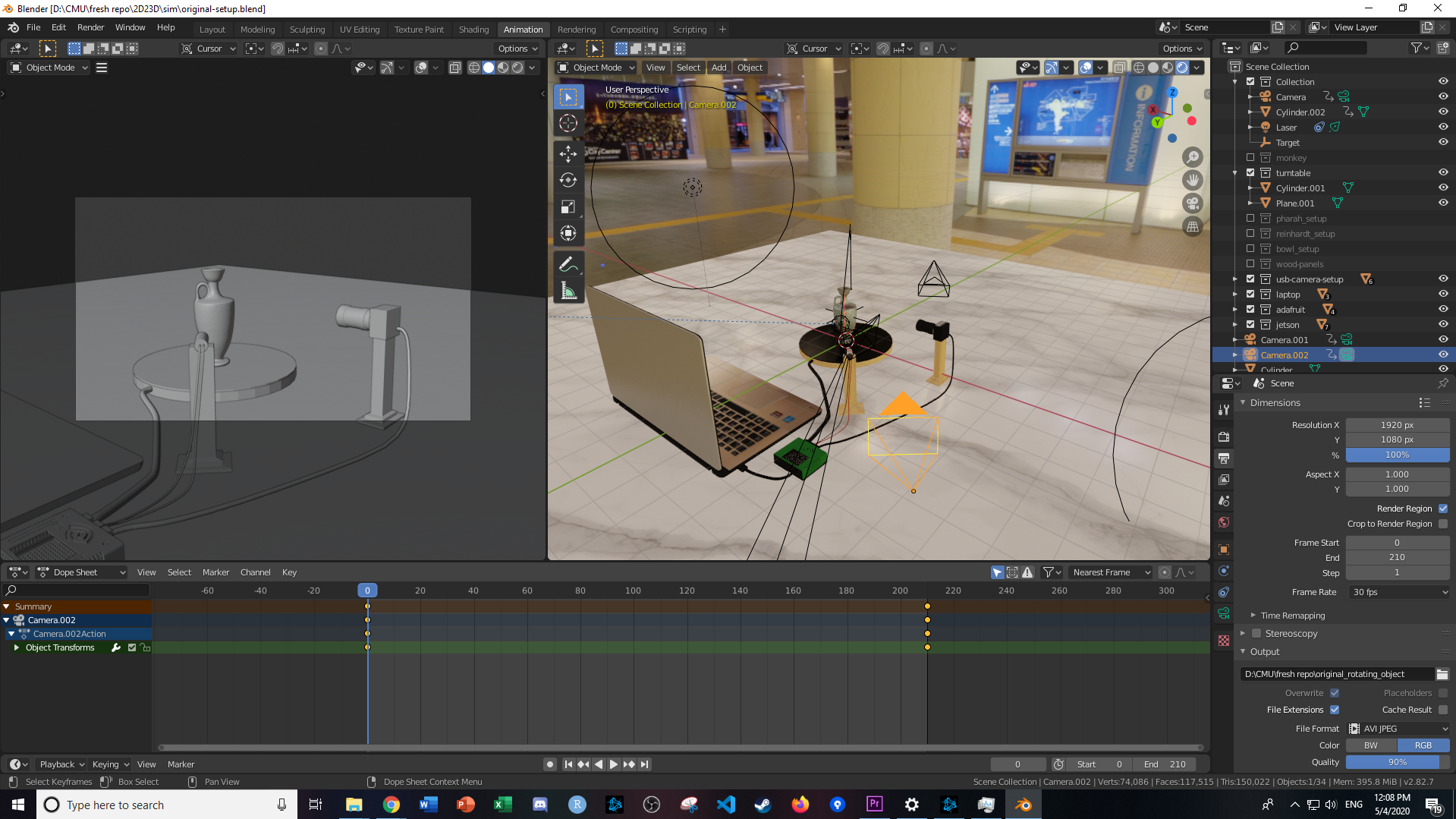

At the start of this week I finished implementing the point cloud generation algorithm. With ICP for single-object multiple-scans completed by Jeremy and mesh triangulation completed by Chakara, I could begin writing a program to verify our accuracy requirement on simulated 3D scans.

Our accuracy requirement is as follows: 90% of non-occluded points must lie within a distance of 2% of the longest axis from the ground truth model, and 100% of non-occluded points must lie within a distance of 5% of the longest axis from the ground truth model.

The first step is to find the distance between the scanned points and the ground truth mesh. Before any distances can be measured, the two meshes must be aligned, which we do with the ICP algorithm we already implemented. The procedure is as follows:

- Sample both the scanned mesh and the ground truth mesh uniformly to generate point clouds of their surfaces.

- Iterate ICP to generate a transformation matrix from the scanned mesh to the ground truth mesh.

- Apply the transformation to each vertex of the scanned mesh to align it to the same rotation/translation as the ground truth mesh.
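The last step of this procedure can be sketched in a few lines. This is only an illustration, assuming that ICP produces a 4x4 homogeneous transformation matrix and that vertices are plain `(x, y, z)` tuples; the function name `apply_transform` and the example matrix are hypothetical and not taken from our code.

```python
def apply_transform(matrix, vertices):
    """Apply a 4x4 homogeneous transform (row-major nested lists) to 3D points."""
    transformed = []
    for x, y, z in vertices:
        # Only the top three rows matter for a rigid rotation + translation.
        transformed.append(tuple(
            row[0] * x + row[1] * y + row[2] * z + row[3]
            for row in matrix[:3]
        ))
    return transformed


# Hypothetical transform: rotate 90 degrees about z, then translate by (1, 0, 0).
T = [
    [0.0, -1.0, 0.0, 1.0],
    [1.0,  0.0, 0.0, 0.0],
    [0.0,  0.0, 1.0, 0.0],
    [0.0,  0.0, 0.0, 1.0],
]
aligned = apply_transform(T, [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)])
```

In practice the same matrix would be applied to every vertex of the scanned mesh, leaving the triangle index data untouched.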

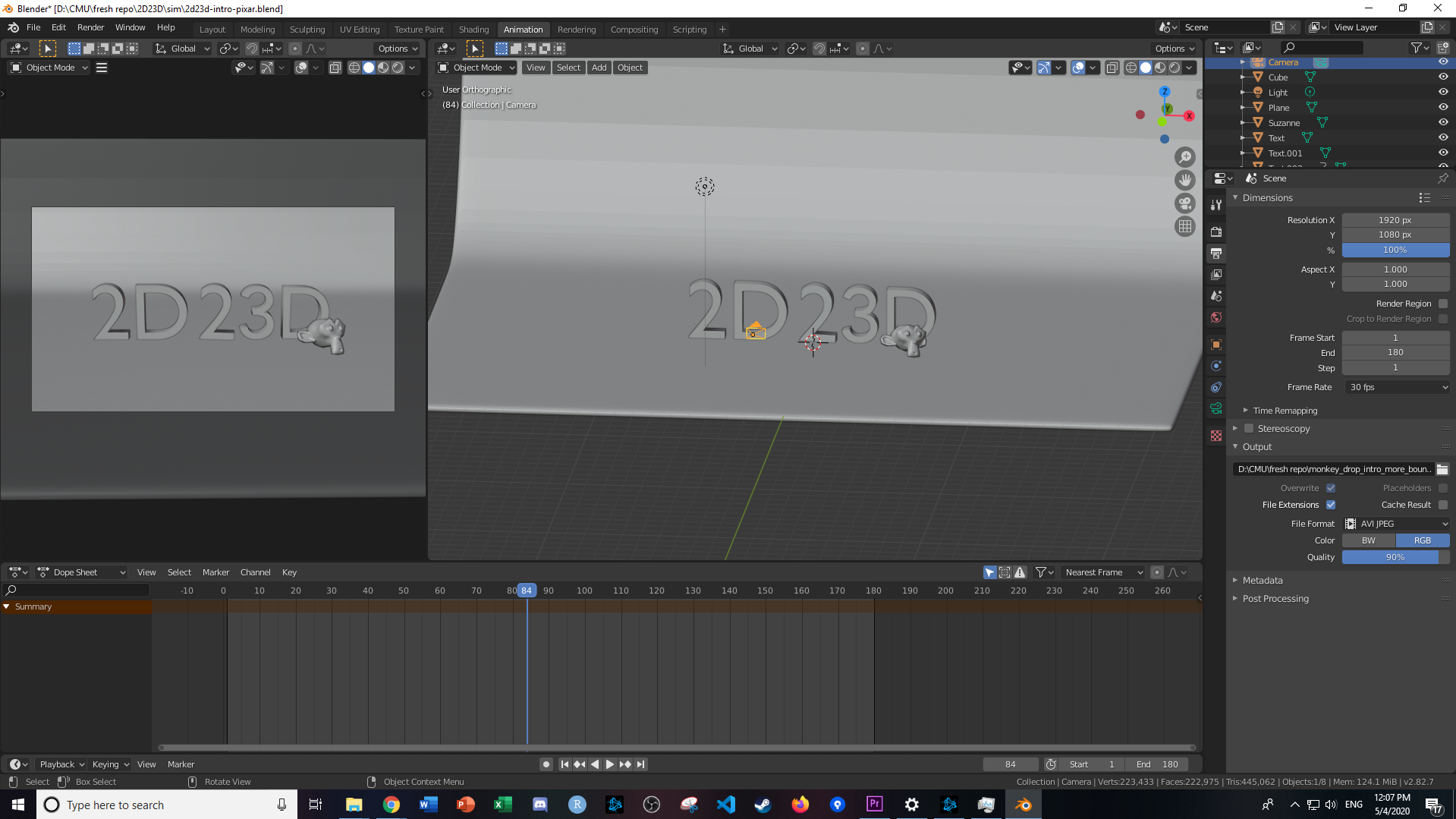

This alignment is needed because the ground truth mesh can be oriented differently from our scanned copy, and the meshes must be overlaid on each other before we can evaluate how different they are. The following image demonstrates the success of this mesh alignment:

Here the grey represents the ground truth mesh, and the white is the closely overlaid scanned mesh. The surface triangles of each mesh weave in and out of the other, which shows that the two are closely aligned.

Now that both meshes are aligned, we need to compute the distance from each vertex of the scanned mesh to the ground truth mesh as a whole. The algorithm to compute these distances and check them against the requirement is as follows:

- For each vertex of the scanned mesh

  - For each triangle of the ground truth mesh

    - Determine the distance to the closest point of that triangle

  - Determine the minimum distance to the ground truth mesh out of all the triangles

- Determine the number of distances farther than 2% of the longest axis

- Determine the number of distances farther than 5% of the longest axis

- If the number of distances farther than 2% of the longest axis is more than 10% (100% – 90%) of the number of points, accuracy requirement has failed

- If any distances are farther than 5% of the longest axis, accuracy requirement has failed.

- Otherwise, verification has passed successfully, and accuracy requirements are met for the scan. Note that occlusion points are not considered since they can be accounted for by performing single-object multiple-scans using ICP.
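The threshold checks at the end of this list can be sketched as follows. This is a minimal illustration rather than our actual verification code: the names `longest_axis` and `meets_accuracy` are hypothetical, and measuring the longest axis as the longest side of the axis-aligned bounding box is an assumption.

```python
def longest_axis(vertices):
    """Longest side of the axis-aligned bounding box (an assumed definition)."""
    return max(
        max(v[i] for v in vertices) - min(v[i] for v in vertices)
        for i in range(3)
    )


def meets_accuracy(distances, axis_length):
    """Check per-vertex distances against the 2% / 5% accuracy requirement."""
    soft_limit = 0.02 * axis_length
    hard_limit = 0.05 * axis_length
    beyond_soft = sum(1 for d in distances if d > soft_limit)
    beyond_hard = sum(1 for d in distances if d > hard_limit)
    # Fail if more than 10% of the points exceed the 2% limit.
    # The integer comparison avoids floating-point rounding on the 10% figure.
    if beyond_soft * 10 > len(distances):
        return False
    # Fail if any point at all exceeds the 5% limit.
    return beyond_hard == 0
```

For example, with a longest axis of 100 units, a scan in which 9 of 10 vertices are 0.5 units from the ground truth and one is 3 units away would pass, while a single vertex 6 units away would fail regardless of the others.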

This procedure is correct, but the nested loop over every vertex and every triangle incurs a significant performance cost. We are ignoring this cost for now because the speed of verification is not part of any of our requirements. It could be alleviated by partitioning the triangles into a spatial hierarchy such as a kd-tree.

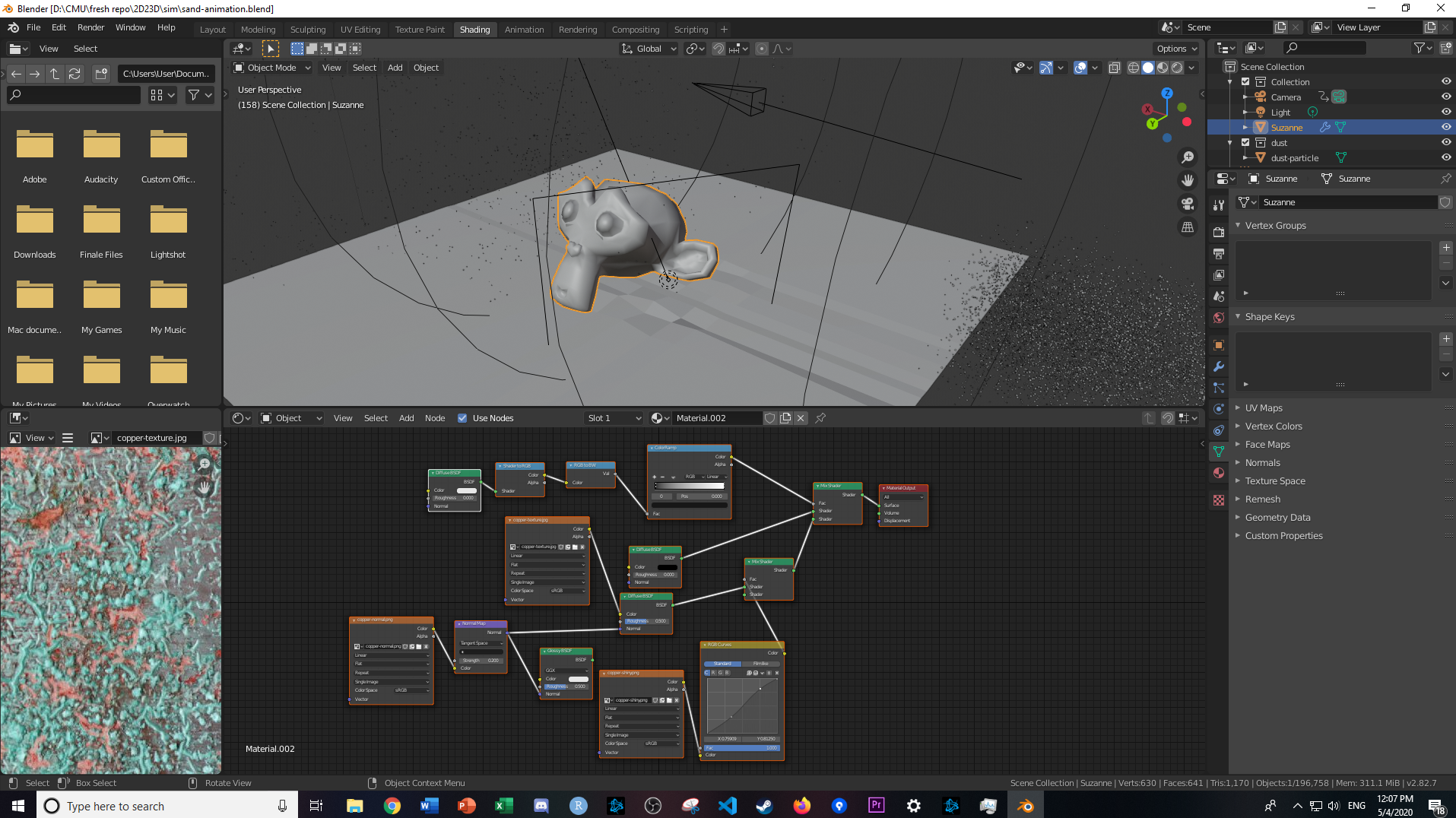

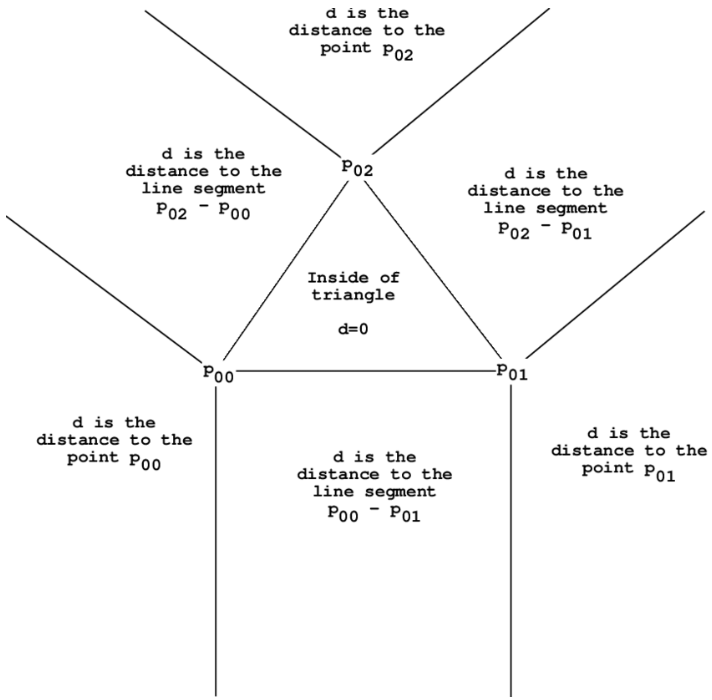

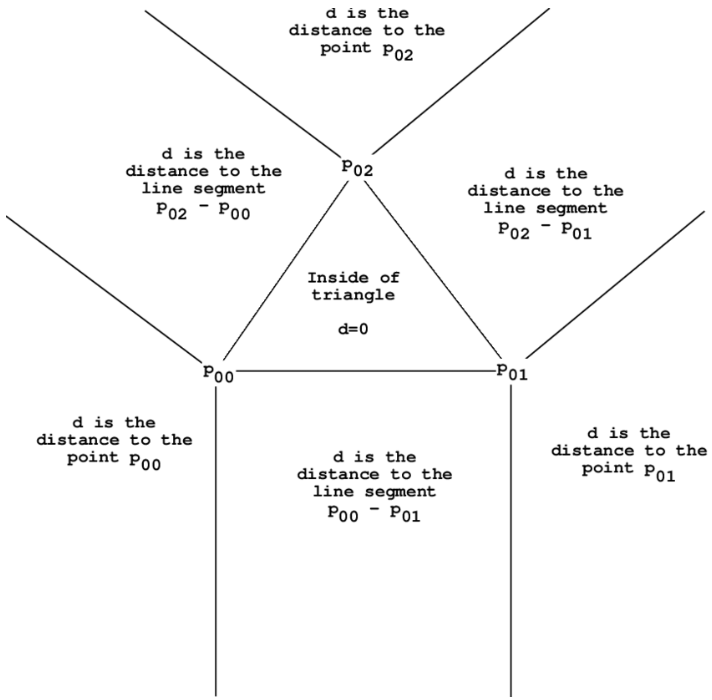

Finally, we are left with the question of how to determine the distance from a point to the closest point on a triangle. The procedure is to project the point onto the plane of the triangle, determine which region of that plane the projected point falls in relative to the triangle, and then perform the computation appropriate to that region. The regions are illustrated below for the 2D case:

The computation is as follows for each of these cases:

- Inside the triangle, the distance is simply the distance from the point to the plane.

- In a region closest to an edge, the distance is the hypotenuse of a right triangle whose legs are the distance to the plane and the in-plane distance from the projected point to that line segment. The in-plane distance is computed by taking the vector from the projected point to a vertex of the segment and subtracting its component parallel to the segment.

- In a region closest to a vertex, the distance is the hypotenuse of a right triangle whose legs are the distance to the plane and the in-plane distance from the projected point to that vertex of the triangle.
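For readers who want something concrete, here is a sketch of a point-to-triangle distance. Note that this is not the projection-based code described above. It swaps in the well-known region test from Ericson's *Real-Time Collision Detection*, which classifies the same vertex, edge, and interior regions using dot products and returns the closest point directly. The straight-line distance to that closest point equals the hypotenuse described in the cases above. All function names are hypothetical, and the triangle is assumed to be non-degenerate.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin, direction, t):
    """Return origin + t * direction."""
    return tuple(o + t * d for o, d in zip(origin, direction))


def closest_point_on_triangle(p, a, b, c):
    ab, ac, ap = _sub(b, a), _sub(c, a), _sub(p, a)
    d1, d2 = _dot(ab, ap), _dot(ac, ap)
    if d1 <= 0 and d2 <= 0:
        return a                                   # region of vertex a
    bp = _sub(p, b)
    d3, d4 = _dot(ab, bp), _dot(ac, bp)
    if d3 >= 0 and d4 <= d3:
        return b                                   # region of vertex b
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return _along(a, ab, d1 / (d1 - d3))       # region of edge ab
    cp = _sub(p, c)
    d5, d6 = _dot(ab, cp), _dot(ac, cp)
    if d6 >= 0 and d5 <= d6:
        return c                                   # region of vertex c
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return _along(a, ac, d2 / (d2 - d6))       # region of edge ac
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return _along(b, _sub(c, b), w)            # region of edge bc
    # Projection falls inside the triangle: use barycentric coordinates.
    denom = 1.0 / (va + vb + vc)
    return _along(_along(a, ab, vb * denom), ac, vc * denom)


def point_triangle_distance(p, a, b, c):
    return math.dist(p, closest_point_on_triangle(p, a, b, c))
```

For a point directly above the interior of the triangle, the result reduces to the point-to-plane distance, matching the first case above.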

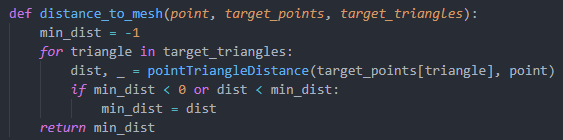

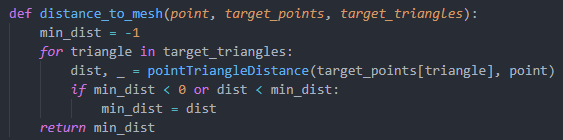

There were a couple of implementation hurdles, such as figuring out how to extract triangle data from the mesh object. Specifically, the triangles property is an array of 3-tuples in which each element is an index into the vertices array, so triangles can be reconstructed by using both arrays together. The point-to-triangle computation is quite complicated and is not posted here, but below is a sample of code that performs the distance computation from a point to a mesh:
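A minimal sketch of such a brute-force point-to-mesh loop, written independently of the project code, might look like the following. It assumes `vertices` is a list of `(x, y, z)` tuples and `triangles` is a list of index 3-tuples as described above. The point-to-triangle routine is passed in as a parameter, and `nearest_corner_distance` is only a crude hypothetical stand-in (distance to the nearest corner) so that the example runs on its own.

```python
import math


def point_to_mesh_distance(point, vertices, triangles, point_triangle_distance):
    """Minimum distance from a point to any triangle of an indexed mesh."""
    best = math.inf
    for i, j, k in triangles:
        # Each triangle stores three indices into the vertices array.
        a, b, c = vertices[i], vertices[j], vertices[k]
        best = min(best, point_triangle_distance(point, a, b, c))
    return best


def nearest_corner_distance(p, a, b, c):
    """Placeholder only: distance to the nearest corner, not the true triangle distance."""
    return min(math.dist(p, a), math.dist(p, b), math.dist(p, c))


# A unit square split into two triangles that share the diagonal (0, 2).
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
triangles = [(0, 1, 2), (0, 2, 3)]
d = point_to_mesh_distance((0.0, 1.0, 2.0), vertices, triangles, nearest_corner_distance)
```

Passing the triangle routine as an argument keeps the loop independent of how the per-triangle distance is actually computed.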

With all of this now implemented, we can verify that our scans meet the accuracy requirements. However, the verification process takes a significant amount of time, so next week I may optimize it to use a tree data structure.

My part of the project is on schedule for now, and at the moment we are preparing some examples for our in-lab demo on Monday. Next week I hope to optimize the verification process, test on larger datasets of multiple objects, and streamline the scanning and verification processes overall. I do not see significant risks at the moment, as our scans so far are meeting the accuracy requirements. I also plan to gather substantial data on how accurate our scans are and compile it into a form that we can analyze to determine what weaknesses our current system has.