This week we all worked on making the final video and poster.

Next week, we will continue to fine-tune the video and poster and prepare for presenting on Thursday.

Carnegie Mellon ECE Capstone, Spring 2021 [Alvin Shek, Vedant Parekh, Siddesh Nageswaran]

This week I worked with the team to make the final video and poster. I also reviewed for the final presentation.

Next week, I plan to refine the video and poster and prepare for the poster session.

This week, I worked with Sid and Alvin on integrating all the systems and debugging the issues that came with integration. We faced a lot of strange setbacks: the drone lost communication with both the remote controller and the drone radio, and flight commands sent from code stopped working even though we had tested them successfully before. In the previous week, we had been able to control the drone easily with the remote control and even via code. Debugging and gathering the data needed for our requirements took up all of my time this week. After taking our aerial shot, I helped write the code that calculates the false positives and false negatives of the object detection code.
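The false positive and false negative calculation could be sketched roughly as follows. This is only an illustration, not our actual script: it assumes each frame of the recorded video has a hand-labeled ground truth (target present or not) and a detector output (target detected or not), and the function name `count_detection_errors` is made up for this example.

```python
from typing import Sequence


def count_detection_errors(truth: Sequence[bool], detected: Sequence[bool]) -> dict:
    """Compare per-frame ground truth labels with per-frame detector output.

    Assumption: one boolean per frame in each sequence, in the same order.
    """
    if len(truth) != len(detected):
        raise ValueError("truth and detected must cover the same frames")

    # False positive: detector fired on a frame with no target.
    fp = sum(1 for t, d in zip(truth, detected) if d and not t)
    # False negative: detector missed a frame that contains the target.
    fn = sum(1 for t, d in zip(truth, detected) if t and not d)

    positives = sum(1 for t in truth if t)
    negatives = len(truth) - positives
    return {
        "false_positives": fp,
        "false_negatives": fn,
        "false_positive_rate": fp / negatives if negatives else 0.0,
        "false_negative_rate": fn / positives if positives else 0.0,
    }
```

For example, `count_detection_errors([True, True, False], [True, False, True])` reports one false positive and one false negative.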

For next week, I am swamped with class work, so I will most likely only work a little on debugging why the flight commands sent from code are not working.

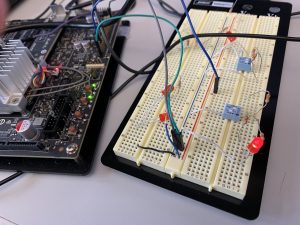

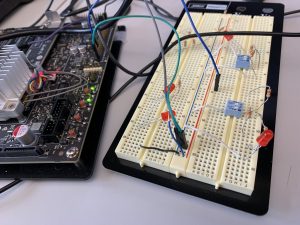

This week involved a lot of integration and working as a team. I got the switches working and coded up with the TX1 to start/stop flight. The LED lit in red indicates start, and the LED being off indicates stop:

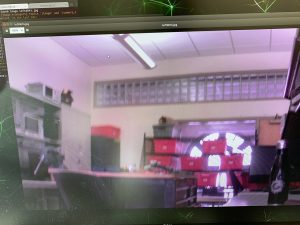

I also helped with debugging while we got our first autonomous test flight up, without the remote control. The debugging included fixing miscommunication between the TX1 and the Pi (in both directions), adjusting the camera filtering, and calibrating the camera. Here are a couple of images from one of our flights:

This week we focused on getting all the remaining parts integrated for our test flight. We completed the calibration and firmware setup for all the sensors and got the streaming to work. This allowed us to have our first test flight, which will serve as our interim demo.

We are on target. Next week, we plan to address some of the issues we saw in our test flight, the biggest being latency while streaming. We also have to improve our communication with the drone via the button, whose signal is having some issues being streamed via ROS.

This week I worked on getting the streaming to work between the RPi and TX1. Rather than using the raspicam_node package in ROS, which was giving too many errors on the Raspberry Pi, I decided to use cv_bridge. Alvin and I wrote the script that converts the Pi camera image to an OpenCV image and then to a ROS image message using cv_bridge. Here is a picture of an image that was taken on the camera and streamed to the TX1:

There is a 2-3 second delay between capturing a frame and streaming it to the TX1. One of the problems we are having is that the TX1 is not receiving good WiFi signal strength. I also worked with the team on various other tasks, such as testing the buck converter that will be used to power the RPi. I also worked on the camera calibration so that the images captured by the camera can be converted to real-world coordinates.
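To illustrate what the calibration is for, here is a minimal sketch of mapping a pixel to ground coordinates with the pinhole camera model. It assumes the camera points straight down from a known height and that lens distortion has already been removed; the function name `pixel_to_ground` and the intrinsic values in the usage note are hypothetical, not our calibration results.

```python
def pixel_to_ground(u, v, height_m, fx, fy, cx, cy):
    """Map pixel (u, v) to ground-plane offsets in meters.

    Assumptions: downward-facing camera at height_m above flat ground,
    undistorted image, focal lengths (fx, fy) and principal point (cx, cy)
    in pixels, as obtained from a camera calibration.
    """
    # Similar triangles: offset on the ground / height = pixel offset / focal length.
    x_m = (u - cx) * height_m / fx
    y_m = (v - cy) * height_m / fy
    return x_m, y_m
```

With made-up intrinsics `fx = fy = 500`, `cx = 320`, `cy = 240` and a height of 6 m, the image center maps to the point directly below the camera, and a pixel 500 columns to the right of center maps to a point 6 m away along the x axis.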

I am on schedule. Next week, I plan to assess the issue with the WiFi connectivity of the TX1 and reduce the latency between capturing and receiving the image. I also plan to assess how running the algorithms on these frames will impact the latency when images are sent back to the RPi.

This week I worked on making the button circuit which will be used to start/stop flight and start/stop video streaming/recording:

I also worked on the RPi camera. I tried using the 12MP camera with the Pi 4, but that did not work either, so we have decided to use a 5MP camera that Alvin had from a previous project. Here is a sample image from the camera:

I wrote a script to save the video to the SD card, as we plan on verifying some of our requirements on the recorded video. I also wrote a script to start/stop the video capture with switches, since that is easier than bringing a computer and keyboard/mouse every time we want to start/stop flying.
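The switch logic can be sketched without any hardware as below. This is a simplified illustration rather than the script itself: it assumes the switch is sampled as a boolean (on/off) and starts in the off position, and it replaces the actual GPIO reads with a plain list of samples. The name `switch_events` is made up for this example.

```python
def switch_events(readings):
    """Turn a sequence of sampled switch states into start/stop events.

    Assumption: each reading is True (switch on) or False (switch off),
    and the switch is off before the first sample.
    """
    events = []
    previous = False
    for index, current in enumerate(readings):
        if current and not previous:
            events.append((index, "start"))  # off -> on: begin capture
        elif previous and not current:
            events.append((index, "stop"))   # on -> off: end capture
        previous = current
    return events
```

Only transitions produce events, so holding the switch in one position does not restart or re-stop the capture.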

The ROS connection on the Pi was not working, and I am in the process of understanding why. A “connection” is being made, but when testing with turtlesim, pressing the keyboard arrows does not move the turtle.

I am still on schedule. By next week, I want to resolve the ROS bug and get the Pi and TX1 communicating. I want to be able to stream the video captured by the camera connected to the Pi and feed it into my object detection code on the TX1. Finally, I hope to write code to integrate the switches I have prototyped with the TX1 so that a start/stop signal is recognized. I spent too much time trying to make the 12MP camera work, so I intend to spend more time this week on these goals so that hopefully we can fly by the end of next week.

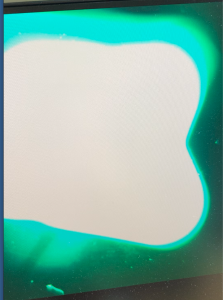

This week most of the time was spent debugging the camera with the Raspberry Pi 3. The camera doesn’t capture the image properly:

This is supposed to be a white ceiling. After extensive debugging, we figured out that a Raspberry Pi 4 will be necessary, as the camera needs kernel 5.4. We tried updating the kernel to 5.4 on the Raspberry Pi 3, but that was not stable. Besides raspistill, we tried many libraries from Arducam (https://github.com/ArduCAM/MIPI_Camera/tree/master/RPI) and one other library. None of these solved the problem. Therefore, we have ordered a Pi 4 and the necessary cables. I also got the wearable display working with the TX1; here is an image of our test video shown on the wearable display:

I am not behind schedule. Next week, the plan is to get the camera working with the Raspberry Pi 4 and stream the captured video to the TX1 using ROS so that I can integrate my object detection code.

We continued to work on our individual tasks this week. We have a completed computer vision algorithm that has been tested (though it still needs to be run on the TX1) and a state estimation algorithm. Further, we have the drone simulator working, which allows us to test the state estimation algorithm first to see how the drone reacts before we physically fly it.

Next week, we plan to test the state estimation algorithm with the test video used for the computer vision. We also plan on focusing on the external interfaces like designing the camera mounting and getting the drone to fly an arbitrary path in simulation and physically. All these targets will help us get closer to integrating the drone with the computer vision and state estimation algorithm. We also plan on making the button circuitry for the wearable.

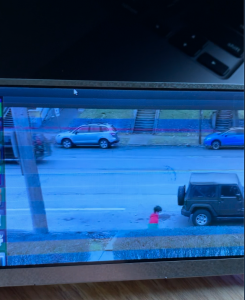

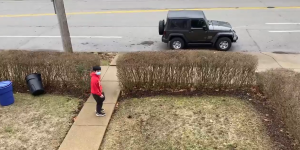

This week I worked on testing the computer vision algorithm I implemented last week. The test setup was a stationary 12MP camera (to simulate the one we will use) at an approximate height of 20 ft, recording a person wearing a red hoodie (our target) walking and running under sunny and cloudy conditions:

Sunny condition

Cloudy condition

The algorithm did not work well in the cloudy condition (where the target is walking), as it picked up the blue trash can and the parked car:

Note that the blue dot in the center of the red bounding box is the center of the tracked object. In the first picture, the algorithm switches from tracking the person to tracking the parked blue car.

Under the sunny conditions (where the target is jogging), the algorithm was perfect in tracking the person:

As seen from the above picture, the blue car and garbage can are filtered out.

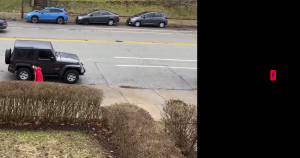

To improve the algorithm in poorly lit conditions, I used RGB filtering rather than converting to HSV space:

In the first picture, the new algorithm is still tracking the person and has filtered out the blue vehicle in the background. In the second picture, the new algorithm also filters out the blue garbage can unlike the old algorithm.

The new algorithm also worked in the sunny conditions:

Therefore, the algorithm using RGB filtering will be used. I also calculated the x, y position of the center of the target’s frame, which will be used by Sid for his state estimation algorithm.
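The idea of RGB filtering followed by a center calculation can be sketched in plain Python as below. This is a simplified stand-in for the real OpenCV-based code: the image is a nested list of `(r, g, b)` tuples, the thresholds `r_min` and `margin` are made-up values, and the bounding box is taken over all matching pixels rather than over a single detected object.

```python
def is_target_red(pixel, r_min=150, margin=60):
    """Return True if the pixel looks like the red target.

    Assumption: a pixel counts as red when its red channel is bright and
    clearly dominates both green and blue (thresholds are illustrative).
    """
    r, g, b = pixel
    return r >= r_min and r - g >= margin and r - b >= margin


def target_center(image):
    """Return the (x, y) center of the box around all red pixels, or None."""
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if is_target_red(pixel):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # no target-colored pixels in this frame
    # Center of the axis-aligned bounding box around the matching pixels.
    return ((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2)
```

Because the test requires red to dominate the other two channels, a strongly blue pixel such as `(20, 30, 200)` is rejected, which is the behavior we wanted for the blue car and garbage can.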

We are on track with our schedule. Next week, I will shift my focus to developing the circuitry for the buttons, and if I get the camera we ordered, I will run the computer vision on the TX1.