(This status report covers the last two weeks, since no report was due last week.)

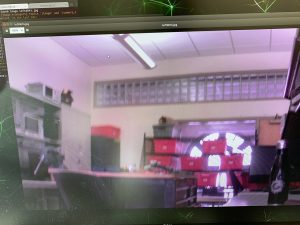

At the start of the two-week period, we all met in the lab. We were struggling to get the drone flying, and our camera image was tinted entirely red. Apparently, the camera's auto white balance was badly broken. I wrote a main Python function on the Jetson that automatically receives the images streamed by the Raspberry Pi, runs object detection, and displays the results on the monitor. I then modified it so we could manually send white balance values to the Raspberry Pi, which applies them to the camera on the fly. Even so, the image stayed extremely desaturated no matter what we tried.
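A minimal sketch of how a manual white-balance override could be passed from the Jetson to the RPi. The JSON message layout and both function names are hypothetical. The `AwbEnable`/`ColourGains` control names are the ones the picamera2 library uses, assuming the RPi runs picamera2 (this report does not say which camera library we used).

```python
import json


def build_wb_message(red_gain: float, blue_gain: float) -> bytes:
    """Jetson side: encode a manual white-balance request as one JSON line.

    The {"cmd": "set_wb", ...} layout is a hypothetical protocol.
    """
    if red_gain <= 0 or blue_gain <= 0:
        raise ValueError("colour gains must be positive")
    msg = {"cmd": "set_wb", "red_gain": red_gain, "blue_gain": blue_gain}
    return (json.dumps(msg) + "\n").encode("utf-8")


def wb_message_to_controls(raw: bytes) -> dict:
    """RPi side: turn a received message into picamera2-style controls.

    AWB is switched off explicitly so the manual gains are not overridden.
    On the RPi the result would be applied with picam2.set_controls(controls).
    """
    msg = json.loads(raw.decode("utf-8"))
    if msg.get("cmd") != "set_wb":
        raise ValueError(f"unexpected command: {msg.get('cmd')!r}")
    return {
        "AwbEnable": False,
        # picamera2 expects the gains as a (red, blue) tuple.
        "ColourGains": (float(msg["red_gain"]), float(msg["blue_gain"])),
    }
```

Keeping the camera-specific dictionary on the RPi side means the Jetson only ever sends two numbers, so the message format would not need to change if the camera library did.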

Eventually, we decided to get a new camera (and a Wi-Fi radio we could hook up to the drone to receive error messages while attempting to fly). While waiting, Alvin and I tackled drone motion planning, each using a separate approach. The premise is that we already have a target detection algorithm and a target state estimator that can predict the target's future motion. We now need to model the drone's future motion and create a motion plan such that:

- The target stays close to the center of the frame.

- The drone doesn’t have to make extreme movements.
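The planner consumes a predicted target trajectory from the state estimator. The estimator's actual model is not described in this report, so as an illustrative stand-in, here is a hypothetical constant-velocity predictor that produces target positions over a planning horizon.

```python
import numpy as np


def predict_target_cv(position, velocity, dt: float, horizon: int) -> np.ndarray:
    """Constant-velocity prediction of the target over `horizon` steps.

    Returns an array of shape (horizon, 3) whose row k is the predicted
    position at time (k + 1) * dt. Illustrative stand-in only, not our
    actual state estimator.
    """
    p0 = np.asarray(position, dtype=float)
    v = np.asarray(velocity, dtype=float)
    steps = np.arange(1, horizon + 1)[:, None] * dt  # shape (horizon, 1)
    return p0 + steps * v  # broadcasts to (horizon, 3)
```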

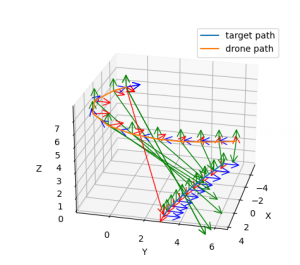

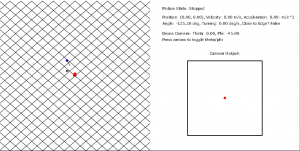

For my approach, I created a 3D simulator that models the target's motion, the drone's motion (from our motion plan), and the drone camera's output (from the camera's intrinsic matrix). The simulator is pictured above.
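The camera-output part of such a simulator amounts to a pinhole projection. A minimal sketch, assuming a standard 3×3 intrinsic matrix `K` and a world-to-camera rotation `R` and translation `t`; the intrinsic values below are hypothetical, not our camera's calibration.

```python
import numpy as np


def project_point(K, R, t, point_world):
    """Project a 3D world point to pixel coordinates with a pinhole model.

    K: 3x3 intrinsic matrix; R, t: world-to-camera rotation and translation.
    Returns (u, v) in pixels, or None if the point is behind the camera.
    """
    p_cam = np.asarray(R, float) @ np.asarray(point_world, float) + np.asarray(t, float)
    if p_cam[2] <= 0:  # camera looks along +z; non-positive depth is not visible
        return None
    uvw = np.asarray(K, float) @ p_cam
    return uvw[0] / uvw[2], uvw[1] / uvw[2]  # divide by depth


# Hypothetical intrinsics for a 640x480 image: fx = fy = 500, centre (320, 240).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
```

A point straight ahead on the optical axis lands on the principal point (320, 240), which is the "center of frame" the planner tries to keep the target near.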

My approach to motion planning was to numerically optimize an objective function, minimizing the weighted sum of the following two terms:

- The negative dot product between the unit vector of the camera's orientation and the unit vector from the drone to the target. This dot product is the cosine of the angle between the two vectors, so the higher it is, the closer the target is to the center of the frame. Since the optimizer minimizes, I negated it.

- A regularization term equal to the sum of the squared velocities in the drone's motion plan, which pushes the drone to move as slowly as possible while still accomplishing our goals.
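A minimal sketch of this two-term objective, assuming the plan's decision variables are a per-step drone velocity and a per-step camera pointing direction, that drone positions come from integrating the velocities, and that the predicted target positions are given. The function name, its arguments, and the weight `lam` are hypothetical; the real planner's parameterization may differ.

```python
import numpy as np


def planning_cost(velocities, cam_dirs, drone_start, target_traj, dt, lam):
    """Tracking term plus velocity regularization for a motion plan.

    velocities:  (N, 3) planned drone velocities, one per step
    cam_dirs:    (N, 3) camera pointing directions (need not be unit length)
    drone_start: (3,)   current drone position
    target_traj: (N, 3) predicted target positions at each step
    Assumes the drone never coincides with the target (zero-length vector).
    """
    v = np.asarray(velocities, float)
    # Drone position at each step, integrated from the planned velocities.
    drone_pos = np.asarray(drone_start, float) + np.cumsum(v * dt, axis=0)
    # Unit vectors from drone to target.
    to_target = np.asarray(target_traj, float) - drone_pos
    to_target /= np.linalg.norm(to_target, axis=1, keepdims=True)
    # Unit vectors along the camera's optical axis.
    c = np.asarray(cam_dirs, float)
    c = c / np.linalg.norm(c, axis=1, keepdims=True)
    # Term 1: negative cosine between optical axis and line of sight, summed.
    tracking = -np.sum(np.einsum("ij,ij->i", c, to_target))
    # Term 2: sum of squared velocities, weighted by lam.
    smoothness = lam * np.sum(v ** 2)
    return tracking + smoothness
```

To actually plan, one would flatten `velocities` and `cam_dirs` into a single vector and pass a wrapper around `planning_cost` to a general-purpose optimizer such as `scipy.optimize.minimize`. Raising `lam` trades tracking accuracy for smoother motion.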

The relative weighting of these two terms can be tuned. In our Google Drive folder, I’ve uploaded videos of two cases: minimizing only the first (centering) term, and minimizing both terms. The first case tracks more accurately, but the drone’s movements are jerky and unrealistic. The second case tracks slightly less accurately, but the motion is much smoother and physically achievable.

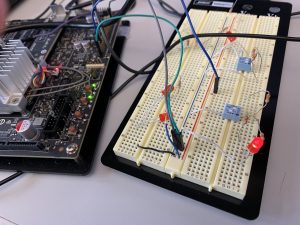

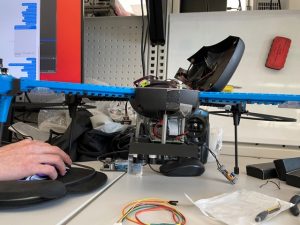

Finally, the new camera and Wi-Fi radio arrived, and we began to lay the groundwork for autonomous flight. First, we met in the lab and got the drone flying under manual control. We recorded test video, and to make things easier, I modified the main functions on the Jetson and the RPi so that the Jetson can send the RPi commands such as starting or stopping the camera. I then modified the RPi’s config files so that the video streaming program runs at boot. This made starting the video stream easy: as soon as we connect the RPi to the drone’s battery, it starts the program headlessly, and we can then send it a command from the Jetson to begin streaming.
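A minimal sketch of the RPi-side command handling, assuming newline-terminated text commands such as `start_camera` and `stop_camera` arrive from the Jetson over a socket. The command names, the `CameraController` class, and the reply strings are all hypothetical; in the real program the start and stop methods would launch and halt the video stream.

```python
class CameraController:
    """Tracks whether the (hypothetical) video stream is running."""

    def __init__(self):
        self.streaming = False

    def start(self) -> str:
        # In the real program this would start capturing and streaming frames.
        self.streaming = True
        return "ok: streaming"

    def stop(self) -> str:
        self.streaming = False
        return "ok: stopped"


def handle_command(line: str, camera: CameraController) -> str:
    """Dispatch one newline-terminated command and return a reply string."""
    handlers = {
        "start_camera": camera.start,
        "stop_camera": camera.stop,
    }
    cmd = line.strip()
    handler = handlers.get(cmd)
    if handler is None:
        return f"error: unknown command {cmd!r}"
    return handler()
```

On the RPi, a loop could iterate over the lines of `conn.makefile("r")` for an accepted socket and write each reply back. A lookup table of handlers keeps adding new events (for example, changing white balance) to a one-line change.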

After getting the drone to fly manually, I helped set up MAVROS on the RPi so we could connect to the drone over serial and finally start sending it autonomous commands. Today, we sent our first basic autonomous commands and had the drone fly to a set position and hover there.