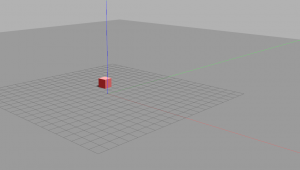

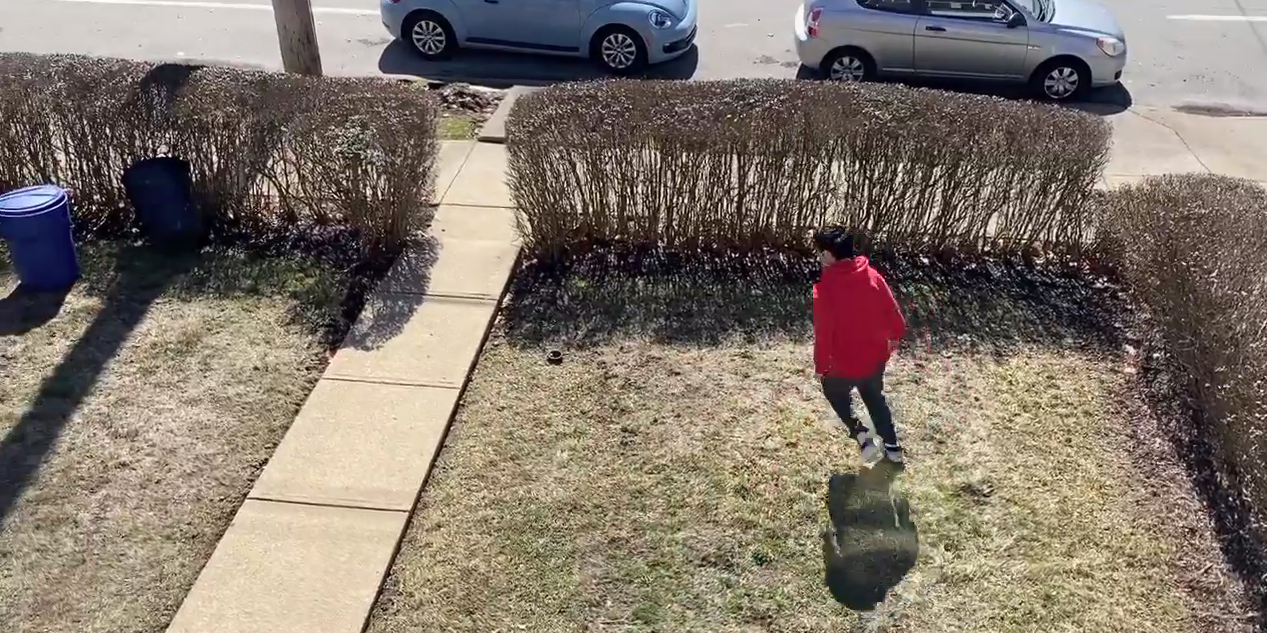

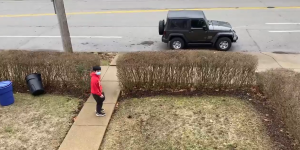

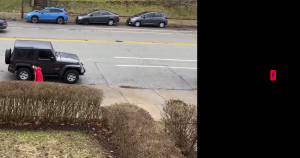

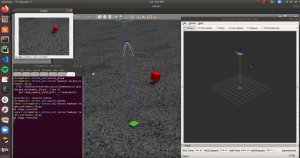

Over the past two weeks, I have built a functional launch sequence for the drone and verified its behavior in simulation. The sequence begins by arming the drone and having it take off to a set altitude of 3.2 meters (arbitrarily chosen for now) using high-level position commands: I send a desired position, and the drone's flight controller handles orientation. The drone then switches to lower-level control, where I output desired roll, pitch, and yaw, to allow for more dynamic maneuvers.

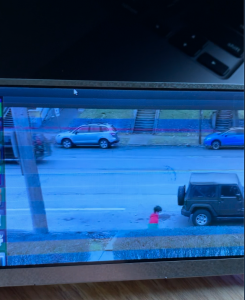
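The launch sequence is essentially a small state machine: arm, climb on position setpoints, then hand over to attitude setpoints. The sketch below is a hypothetical illustration, not the code running on the drone. The phase names, the 0.1 m altitude tolerance, and the setpoint tuples are assumptions, and a real attitude setpoint would usually also carry thrust, which is omitted here for brevity.

```python
from enum import Enum, auto

# Hypothetical phases of the launch sequence (names are illustrative).
class Phase(Enum):
    IDLE = auto()
    ARMING = auto()
    TAKEOFF = auto()   # high-level position setpoints
    ATTITUDE = auto()  # low-level roll/pitch/yaw setpoints

TAKEOFF_ALTITUDE_M = 3.2     # from the text (arbitrarily chosen for now)
ALTITUDE_TOLERANCE_M = 0.1   # assumption: how close counts as "reached"

def next_phase(phase: Phase, armed: bool, altitude_m: float) -> Phase:
    """Advance the launch sequence one step given the latest vehicle state."""
    if phase is Phase.IDLE:
        return Phase.ARMING
    if phase is Phase.ARMING:
        return Phase.TAKEOFF if armed else Phase.ARMING
    if phase is Phase.TAKEOFF:
        if abs(altitude_m - TAKEOFF_ALTITUDE_M) <= ALTITUDE_TOLERANCE_M:
            return Phase.ATTITUDE
        return Phase.TAKEOFF
    return phase  # ATTITUDE is the last phase of the launch sequence

def setpoint_for(phase: Phase, x: float, y: float):
    """Return the kind of command sent to the flight controller in each phase."""
    if phase is Phase.TAKEOFF:
        # Position setpoint: hold (x, y) and climb; the flight controller
        # works out the orientation needed to get there.
        return ("position", (x, y, TAKEOFF_ALTITUDE_M))
    if phase is Phase.ATTITUDE:
        # Attitude setpoint (roll, pitch, yaw in radians); level placeholder.
        # Thrust is deliberately omitted in this sketch.
        return ("attitude", (0.0, 0.0, 0.0))
    return ("none", None)
```

Keeping the transition logic separate from the command generation makes it easy to drive `next_phase` with logged or simulated states and check that the handover to attitude control happens only once the takeoff altitude is reached.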

In addition, I spent this last week implementing the transformation from the target's 2D pixel location to an estimated 3D position in the world. This combines back-projection (transforming the 2D pixel location into a 3D ray in the camera frame) with intersecting that ray with the ground plane, which supplies the depth that a single image cannot. More details can be found in this document: https://drive.google.com/file/d/1Tc6eirIluif-NBqA5EThOGmiCBtPO4DY/view?usp=sharing
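The two steps can be written compactly. Assuming a pinhole camera with intrinsic matrix $K$ and no lens distortion, a camera-to-world rotation $R_{wc}$, a camera center $\mathbf{t}_{wc}$ in world coordinates, and a flat ground plane at height $z_g$:

$$\mathbf{d}_c = K^{-1}\begin{bmatrix}u \\ v \\ 1\end{bmatrix},\qquad \mathbf{d}_w = R_{wc}\,\mathbf{d}_c,\qquad s = \frac{z_g - t_{wc,z}}{d_{w,z}},\qquad \mathbf{p} = \mathbf{t}_{wc} + s\,\mathbf{d}_w$$

A minimal NumPy sketch under those assumptions follows. The function name `pixel_to_ground` and its arguments are hypothetical, the camera frame is assumed to follow the common computer-vision convention (x right, y down, z along the optical axis), and in practice $R_{wc}$ and $\mathbf{t}_{wc}$ would come from composing the drone's pose with the camera mount.

```python
import numpy as np

def pixel_to_ground(u, v, K, R_wc, t_wc, ground_z=0.0):
    """Back-project pixel (u, v) and intersect the ray with the plane z = ground_z.

    K     : 3x3 pinhole intrinsic matrix (lens distortion assumed already removed)
    R_wc  : 3x3 rotation taking camera-frame vectors into the world frame
    t_wc  : camera center in world coordinates, shape (3,)
    Returns the 3D world point, or None if the ray does not hit the plane
    in front of the camera.
    """
    # Back-projection: ray direction through the pixel, in the camera frame.
    # Solving K d = [u, v, 1] avoids forming K^-1 explicitly.
    d_cam = np.linalg.solve(K, np.array([u, v, 1.0]))
    # Rotate the ray into the world frame; it starts at the camera center.
    d_world = R_wc @ d_cam
    t_wc = np.asarray(t_wc, dtype=float)
    if abs(d_world[2]) < 1e-9:
        return None  # ray is parallel to the ground plane
    s = (ground_z - t_wc[2]) / d_world[2]
    if s <= 0:
        return None  # plane is behind the camera (e.g. pixel above the horizon)
    return t_wc + s * d_world
```

For a camera 10 m above the ground looking straight down, the principal point maps to the spot directly beneath it, and pixels offset from it map to proportionally offset ground points. The `s <= 0` check matters whenever the camera is tilted: pixels above the horizon produce rays that would only meet the ground behind the camera.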

Full results can be seen in this video: https://drive.google.com/file/d/1Tc6eirIluif-NBqA5EThOGmiCBtPO4DY/view?usp=sharing

Now that I have the target's 3D position, I can start generating 3D trajectories for the drone to follow that keep the camera fixed on the target as much as possible (our objective function). As a first step, I will build a very simple baseline planner and stress-test the system so that it is ready for physical testing next week.
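For reference, one very simple kind of baseline is to hold a fixed horizontal standoff from the target at constant altitude, yaw so that a forward-facing camera points at the target, and approach that goal in bounded steps. This is a hypothetical sketch, not the planned design: the function names, the 4 m standoff, reusing the 3.2 m takeoff altitude, and the assumption of a fixed, forward-facing camera are all illustrative choices.

```python
import math

def baseline_setpoint(drone_xy, target_xyz, standoff_m=4.0, altitude_m=3.2):
    """Hypothetical baseline goal: fixed horizontal standoff, yaw toward the target.

    Returns (x, y, z, yaw), with yaw in radians measured counterclockwise
    from the world x-axis.
    """
    dx = drone_xy[0] - target_xyz[0]
    dy = drone_xy[1] - target_xyz[1]
    dist = math.hypot(dx, dy)
    if dist < 1e-6:
        # Directly above the target: pick an arbitrary bearing (+x) to back off along.
        ux, uy = 1.0, 0.0
    else:
        ux, uy = dx / dist, dy / dist
    # Goal: standoff_m from the target along the drone's current bearing.
    x = target_xyz[0] + standoff_m * ux
    y = target_xyz[1] + standoff_m * uy
    # Yaw so that a forward-facing camera points at the target.
    yaw = math.atan2(target_xyz[1] - y, target_xyz[0] - x)
    return x, y, altitude_m, yaw

def step_toward(current, goal, max_step_m):
    """Move in a straight line from current toward goal by at most max_step_m."""
    delta = [g - c for c, g in zip(current, goal)]
    norm = math.sqrt(sum(d * d for d in delta))
    if norm <= max_step_m:
        return tuple(goal)
    scale = max_step_m / norm
    return tuple(c + d * scale for c, d in zip(current, delta))
```

Yawing toward the target only centers it horizontally in the image; with a fixed camera, keeping it vertically centered also depends on altitude, standoff, and pitch, which a more capable planner could fold into the objective function.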