This week, we focused on filming our video and polishing the project as much as we could. I met with Vedant and Alvin in the lab, where we gathered a lot of footage of the project in action and collected logs of all the ROS communications for later processing in simulation. We planned the length of the video and how we would break down each component of the design. Finally, I recorded my segments at home.

Siddesh’s Status Report- 5/1/21

This week, I worked with the team on the final stages of integration. At the start of the week, I helped Alvin with the motion planner. I downloaded the Embotech Forces Pro license and software for the Jetson TX1. I then tried to debug the Forces Pro solver, which was not respecting constraints and was producing motion plans with odd quirks (a reluctance to move backwards or to yaw beyond a certain angle). We tried simplifying the model and switching to scipy.optimize.minimize. However, the simplified models were very inaccurate, and the minimize method took upwards of 17.5 seconds to solve a motion plan. Eventually, we switched to the preliminary motion planner I had come up with two weeks ago, which only changes the x and y position of the drone while keeping yaw and elevation fixed. This worked well in simulation. More importantly, the drone's motion was relatively stable, which eased some of our safety concerns.

I also worked with the rest of the team in the lab on the local position issues. I collected local position data for a variety of test paths to understand how the drone's position coordinates mapped to real-world coordinates. I also helped with the camera calibration to debug issues in the conversion from pixel coordinates to real-world distances. While dealing with these issues, I helped the team reflash the drone's flight controller with the ArduPilot firmware. The goal was to add an optical flow camera and make the position data more accurate. However, we eventually ran into problems with the GPS fix in ArduPilot and decided to switch back to the Pixhawk firmware.

The rest of our lab time went into debugging an assortment of miscellaneous flight controller issues, such as a loss of binding with the radio transmitter and a refusal to switch to auto mode mid-flight. Eventually, despite the turbulent weather, we got the drone airborne and hovering in place. We decided against running our full motion planning stack, however, because of the drone's erratic behavior. Instead, we focused on collecting as many metrics as we could from our actual setup (target precision and recall, video FPS, streaming latency) and obtaining the rest from simulation.
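As a rough illustration of how target detection precision and recall can be tallied from labeled camera frames, here is a minimal Python sketch. It assumes each recorded frame has a hand-labeled target pixel (or none) and the detector's output pixel (or none). The `Frame` type, the `precision_recall` function and the 20-pixel match tolerance are all hypothetical choices made for illustration; this is not the team's actual evaluation script.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Frame:
    truth: Optional[Point]      # hand-labeled target pixel, None if the target is not visible
    detection: Optional[Point]  # detector output pixel, None if nothing was detected


def precision_recall(frames, tol_px=20.0):
    """Count true/false positives and misses, then return (precision, recall)."""
    tp = fp = fn = 0
    for f in frames:
        if f.detection is not None and f.truth is not None:
            dx = f.detection[0] - f.truth[0]
            dy = f.detection[1] - f.truth[1]
            if (dx * dx + dy * dy) ** 0.5 <= tol_px:
                tp += 1
            else:
                fp += 1  # the detector fired on the wrong spot...
                fn += 1  # ...and missed the real target
        elif f.detection is not None:
            fp += 1      # detection with no target in frame
        elif f.truth is not None:
            fn += 1      # target in frame but not detected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

A detection that lands far from the labeled target is counted both as a false positive and as a miss. This is one common convention, but other scoring rules are possible.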

Team Status Report- 5/1/21

This week we aimed to complete our integration and run our full stack successfully on the drone. However, several issues came up along the way. First, while we could issue positional commands to move the drone to certain positions, we needed to understand how its own internal local position coordinates mapped to real-world coordinates. We ran tests that moved the drone along different axes and sent the local position estimates through the RPi to the TX1, and these revealed some startling issues. Even while the drone was stationary, the position estimates constantly drifted within +/- 3m in the x and y directions. In addition, the initial x, y and z estimates seemed completely random. The flight controller is supposed to initialize (x, y, z) to (0, 0, 0) at the location where it is turned on. Instead, each coordinate seemed to be initialized to anywhere between -2 and 2 meters.
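A small helper like the following could reduce a stationary log of local position estimates to the two symptoms described above: the initial offset and the x/y drift range. It is a sketch under assumptions. `summarize_stationary_log` is a hypothetical name, and it assumes the log is a list of (x, y, z) tuples in meters captured while the drone sat still.

```python
import math


def summarize_stationary_log(samples):
    """Summarize (x, y, z) local-position estimates (meters) logged while the drone was stationary."""
    if not samples:
        raise ValueError("empty log")
    x0, y0, z0 = samples[0]
    xs = [s[0] for s in samples]
    ys = [s[1] for s in samples]
    return {
        # Ideally (0, 0, 0): the origin should be where the flight controller was powered on.
        "initial": (x0, y0, z0),
        "initial_offset_m": math.sqrt(x0 ** 2 + y0 ** 2 + z0 ** 2),
        # Peak-to-peak spread; for a drone sitting still this should be close to zero.
        "x_range_m": max(xs) - min(xs),
        "y_range_m": max(ys) - min(ys),
    }
```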

We tried to correct these inaccuracies by flashing a different firmware onto the drone's flight controller. Our current Pixhawk firmware relied solely on GPS and IMU data to estimate local position. The ArduPilot firmware, on the other hand, let us configure an optical flow camera for a more accurate local position estimate. An added benefit was that, without needing GPS, we could even test the drone indoors. This mattered because the weather this week was very rainy. Even when the skies were clear, there was considerable wind that could have been interfering with the position estimates. Unfortunately, we ran into many bugs with the ArduPilot firmware. Most notably, arming failed with a GPS 3D fix error even though we had disabled the GPS requirement for arming. After troubleshooting this issue, we decided to switch back to the Pixhawk firmware and see whether we could fly despite the inaccurate position estimates. During this process, the radio transmitter used for manual override somehow lost its binding with the drone's flight controller, but we managed to fix that issue.

Besides the local position issues, the other major problem we needed to debug this week was our motion planner. The core issue was the tradeoff between the speed and accuracy of the planner. Using scipy.optimize.minimize produced very accurate motion plans, but solving for a plan took upwards of 18 seconds. By reducing the number of iterations and relaxing constraints, we brought this down to 3 seconds, at the cost of much less accurate plans. However, this was still too much lag to accurately follow an object. Another approach was to acquire a license for Embotech Forces Pro, a cutting-edge solver library marketed for its speed. Forces Pro solved for a motion plan in less than 0.15 seconds using the same constraints and objective function, but its results were far from ideal. For reasons we could not determine, the solver's plans were reluctant to move the drone backwards or to yaw beyond +/- 40 degrees. Eventually, we created a reliable motion planner by reducing the complexity of the problem and reverting to a simple model we had made a couple of weeks earlier. This model kept the yaw and elevation of the drone fixed, changing only the x and y position. The results of this motion planning and full tests of our drone in simulation can be found in the "recorded data" folder in our Google Drive folder.
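The sketch below is not the team's planner, which the report only describes at a high level. It is a hypothetical, greedy stand-in that shows what fixing yaw and elevation means in practice. Every setpoint keeps the same `z` and `yaw`, and only x and y move toward a goal, capped at an assumed maximum speed `v_max`. The 0.5 s step assumes the 2Hz command rate mentioned in Alvin's report. `plan_xy` and `Setpoint` are illustrative names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Setpoint:
    x: float
    y: float
    z: float    # held fixed for the whole plan
    yaw: float  # held fixed for the whole plan


def plan_xy(start, goal_xy, z_fixed, yaw_fixed, v_max=2.0, dt=0.5, horizon=10):
    """Greedy x/y-only plan: step toward goal_xy at most v_max * dt meters per setpoint."""
    x, y = start
    gx, gy = goal_xy
    step = v_max * dt
    plan = []
    for _ in range(horizon):
        dx, dy = gx - x, gy - y
        dist = math.hypot(dx, dy)
        if dist <= step:
            x, y = gx, gy  # close enough: land exactly on the goal
        else:
            x += dx / dist * step
            y += dy / dist * step
        plan.append(Setpoint(x, y, z_fixed, yaw_fixed))
    return plan
```

Holding z and yaw constant removes two degrees of freedom from the problem. That is the same simplification that made the team's x/y model more stable than the full model.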

Unfortunately, despite our success with motion planning and a working solution in simulation, we were not able to run the same solution on the actual drone. We tried pressing forward despite the inaccuracies in local position, but ran into safety concerns. In one simple test, we manually guided the drone to cruising altitude, switched off manual control and sent the drone a command to hold its pose. Rather than holding its pose, the drone swung wildly around in a circle. Because the local position estimate drifted even while the drone was stationary, the flight controller believed the drone was moving and overcompensated while trying to return to its setpoint. We therefore landed the drone out of concern for safety and decided to use simulation data to measure our tracking accuracy. For target detection precision and recall, however, we used data collected from the drone's camera while we flew it manually.
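To see why a drifting estimate makes a hold-pose command move the drone, consider a toy one-dimensional proportional position controller. This is an assumption: the real flight controller's position loop is considerably more complex. The controller drives the *estimated* position to the setpoint, so whatever error the estimator carries ends up in the drone's true position instead. `simulate_hold` is a hypothetical name.

```python
def simulate_hold(drift, k=1.0, dt=0.1, setpoint=0.0):
    """Toy 1D hold-position loop. drift[i] is the estimator error (m) at control step i.

    Returns the drone's true position after each step. Requires k * dt < 2 for stability.
    """
    p = setpoint  # the drone starts exactly at the setpoint
    history = []
    for d in drift:
        estimate = p + d                  # what the flight controller believes
        v = -k * (estimate - setpoint)    # proportional velocity command
        p += v * dt                       # the true position moves in response
        history.append(p)
    return history
```

With a constant 2m estimator error, the true position settles 2m from the setpoint, on the opposite side of the error. An error that wanders over time makes the drone wander with it, which is consistent with the circling we observed.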

Alvin’s Status Update – 5/1/21

This week, I worked with Sid and Vedant on integrating everything and debugging issues with drone communications. We faced a lot of strange setbacks. The previous week, we could easily fly the drone by remote control and even control it through code. This week, both of these broke down: remote control suddenly stopped working, and the drone ignored the flight commands we sent through code.

Besides debugging, Sid and I set up a thread-based motion planner to cope with slow planning times. Our drone requires motion commands at 2Hz, or one message every 0.5 seconds. Our motion plan originally took upwards of 17 seconds to solve. After various tricks, such as shortening the planning horizon and reducing floating-point precision from 64-bit to 16-bit, we got planning down to roughly 0.7 seconds. With thread-based plan generation, the main planner can stream a previously generated trajectory at the required rate while a separate thread solves for a new one.
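The threading pattern described above can be sketched with Python's standard `threading` module. In this sketch, one thread repeatedly calls a slow solver and swaps in each new plan under a lock. The main loop publishes the next setpoint of whatever plan is current at a fixed rate, holding the last setpoint if the plan runs out. The `ThreadedPlanner` class, the `solve`/`publish`/`get_state` callables and the hold-last-setpoint behavior are all assumptions for illustration, not the team's code. In the real system, `publish` would wrap a ROS publisher.

```python
import threading
import time


class ThreadedPlanner:
    def __init__(self, solve, publish, rate_hz=2.0):
        self._solve = solve        # slow: state -> list of setpoints
        self._publish = publish    # fast: setpoint -> None
        self._period = 1.0 / rate_hz
        self._lock = threading.Lock()
        self._plan = []
        self._index = 0
        self._stop = threading.Event()

    def _solver_loop(self, get_state):
        # Keep re-planning from the latest state; swap the new plan in atomically.
        while not self._stop.is_set():
            new_plan = list(self._solve(get_state()))
            with self._lock:
                self._plan = new_plan
                self._index = 0

    def run(self, get_state, duration_s):
        solver = threading.Thread(target=self._solver_loop, args=(get_state,), daemon=True)
        solver.start()
        deadline = time.monotonic() + duration_s
        while time.monotonic() < deadline:
            with self._lock:
                setpoint = None
                if self._plan:
                    # Stream the current plan; hold its last setpoint if it runs out.
                    setpoint = self._plan[min(self._index, len(self._plan) - 1)]
                    self._index += 1
            if setpoint is not None:
                self._publish(setpoint)  # publish outside the lock
            time.sleep(self._period)
        self._stop.set()
        solver.join()
```

One caveat: if the solver is CPU-heavy pure Python, the global interpreter lock may starve the publishing loop. In that case, a separate process (or a solver that releases the GIL) may be a better fit.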

Overall, the plans produced with these flat system dynamics still led to somewhat shaky behavior, so we eventually switched to Sid's more stable planner over x/y space.

For the upcoming week, I am completely booked with other classes, so we may end up sticking with what we have: all systems functional in simulation, but only manual flight on the physical drone. If we get lucky and the drone accepts our autonomous control, we may yet have a full product.

Alvin’s Status Report – 4/10/21

This week, I spent most of my time working with the team to set up various components for integration. We aimed to get the drone flying with at least video recording, but various unexpected issues popped up.

Our Raspberry Pi 4 suddenly broke, so we were unable to get the drone flying with video recording. Only today (4/10) were we able to borrow a new one. With it, I calibrated the extrinsic and intrinsic matrices for our camera using OpenCV and a chessboard printout. At the same time, I helped Vedant set up ROS video streaming from the Raspberry Pi to the Jetson TX1. I also spent a long time with Sid trying to connect our LIDAR range-finder and PX4Flow optical flow camera. Unfortunately, we had issues compiling the firmware for our version 1 Pixhawk flight controller given its limited 1MB of flash.

This upcoming week, I will focus on getting our drone up in the air. This involves solidifying our flight sequence from manual position control to autonomous mode and hammering out other odd bugs. Beyond that, basic autonomy means flying up to a specific height and turning to face a specific direction. This upcoming week will be extremely busy with other projects and exams, so full motion planning may not be finished.

Team Status Report- 4/3/21

This week we focused on getting everything in order before integration. First, we mounted the drone's sensors and camera on the adjustable mount. We then adjusted the hardware and tolerances until everything fit properly, the wires could connect safely and the sensors had a proper line of sight.

Then, we focused on other miscellaneous tasks in preparation for full integration. We got the camera configured and working with the new RPi4 and started prototyping the passive circuitry for button control. In addition, we calibrated the camera in simulation, measuring the actual position/pose of the real camera from our CAD design. Using this, we were able to perfect the transformation from 2D pixel coordinates to 3D coordinates in simulation and integrate the state estimator into the simulation. With the state estimator integrated into the simulation, our drone tracking pipeline was finally complete.
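One common way to turn a 2D pixel into a 3D point is to back-project the pixel through the pinhole intrinsics, rotate the ray into the world frame and intersect it with the ground. The sketch below does this under stated assumptions. The ground is flat at z = 0 with z pointing up. Pixels are already undistorted. The camera frame follows the OpenCV convention (x right, y down, z forward). `R_wc` (camera-to-world rotation, e.g. from the CAD-measured mount and the drone's attitude) and `cam_pos` are inputs. `pixel_to_ground` is a hypothetical name, not the team's function.

```python
def pixel_to_ground(u, v, fx, fy, cx, cy, R_wc, cam_pos):
    """Back-project pixel (u, v) and intersect the ray with the ground plane z = 0.

    R_wc: 3x3 camera-to-world rotation (nested lists); cam_pos: camera (x, y, z) in world.
    Returns the (x, y, 0.0) ground point, or None if the ray never reaches the ground.
    """
    # Ray direction in the camera frame from the pinhole intrinsics.
    ray_c = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate the ray into the world frame.
    ray_w = tuple(sum(R_wc[i][j] * ray_c[j] for j in range(3)) for i in range(3))
    if ray_w[2] >= 0:
        return None  # the ray points at or above the horizon
    s = -cam_pos[2] / ray_w[2]  # scale at which the ray reaches z = 0
    return (cam_pos[0] + s * ray_w[0], cam_pos[1] + s * ray_w[1], 0.0)
```

For example, with a camera pointing straight down from 10m, the principal point maps to the spot directly below the camera. Pixels one focal length off-center map 10m away on the ground.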

Finally, we started calibrating the LIDAR and optical flow sensor for drone flight. Next week, we plan to get the drone up in the air and perform rudimentary tracking. To prepare, we plan to write the ROS scripts that communicate between the RPi and the TX1, fully calibrate the drone's sensors, and implement the button control to start and stop the main tracking program on the TX1.

Vedant’s Status Report 4/3/21

This week, I worked on building the button circuit that will be used to start/stop flight and start/stop video streaming/recording:

I also worked on the RPi camera. I tried to get the 12MP camera working with the Pi 4, but that did not work either, so we decided to use a 5MP camera Alvin had from a previous project. Here is a sample image from the camera:

I wrote a script to save the video to the SD card, as we plan to verify some of our requirements on the recorded video. I also wrote a script to start/stop video capture with switches. This will be easier than hooking up a computer with a keyboard and mouse every time we want to start/stop flying.
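Mechanical switches bounce, so a start/stop toggle usually needs debouncing. Otherwise one press can register as several. The sketch below shows the logic independently of the hardware. It assumes the switch is polled periodically and that each reading is passed in with a timestamp. The `DebouncedToggle` class and the 50ms window are hypothetical choices. On the Pi, a library such as gpiozero (its `Button` class accepts a `bounce_time` argument) can handle debouncing directly instead.

```python
class DebouncedToggle:
    """Toggle recording on each debounced press of a momentary switch.

    Call update(pressed, now) on every poll; `now` is a timestamp in seconds.
    """

    def __init__(self, debounce_s=0.05):
        self.debounce_s = debounce_s
        self.recording = False
        self._last_raw = False     # last raw reading
        self._last_change = 0.0    # when the raw reading last changed
        self._stable = False       # debounced switch state

    def update(self, pressed, now):
        if pressed != self._last_raw:
            # Raw reading changed: restart the debounce timer.
            self._last_raw = pressed
            self._last_change = now
        elif pressed != self._stable and now - self._last_change >= self.debounce_s:
            # Reading has been steady long enough: accept it as the new state.
            self._stable = pressed
            if pressed:  # toggle only on the debounced press, not the release
                self.recording = not self.recording
        return self.recording
```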

The ROS connection on the Pi is not working, and I am still investigating why. A "connection" appears to be made, but when I test it with turtlesim, pressing the keyboard arrows does not move the turtle.

I am still on schedule. By next week, I want to resolve the ROS bug and get the Pi and TX1 communicating. I want to stream the video captured by the camera connected to the Pi and feed it into my object detection code on the TX1. Finally, I hope to write code that integrates the switches I have prototyped with the TX1 so that a start/stop signal is recognized. I spent too much time trying to make the 12MP camera work, so I intend to spend more time this week on these goals so that hopefully we can fly by the end of next week.

Siddesh’s Status Report- 3/27/2021

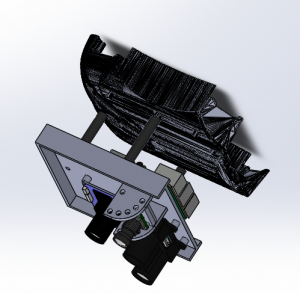

Over these weeks, I first started the CAD design process for the drone mounts. I had to design a mount that attaches to the underside of the drone. It needed to house the Raspberry Pi, the drone's sensors (a LIDAR and a PX4Flow camera) and our camera for taking video. The camera position had to be adjustable so that we could change its angle between test flights and find the optimal one. I also had to make sure there was enough clearance for each of the ports on the sensors and Pi, with ample room for the connecting wires. The .stl files are in the shared folder. Here is a picture of a mockup assembly of the mount attached to the underside of the drone:

After confirming that the design worked in the assembly, I scheduled the parts to be printed, along with a few drone guards we found online. The parts printed successfully and I started the assembly:

In addition, I modified the state estimation algorithm to handle asynchronous data points. Previously, it assumed that updates to the drone's state and the target's detected position arrive together at a set fps. Now it handles asynchronous updates, each tagged with the timestep at which it was sent. The algorithm needs drone and target updates at the same time, so when one arrives without the other, I predict the missing one's state from its last known state and the elapsed time delta t. The algorithm then uses this pair to update its model of the target's and drone's movement.
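The pairing step described above can be sketched as follows. The report does not say which motion model the estimator uses for this prediction, so this sketch assumes a simple constant-velocity model. When an update arrives for one source, the other source's last known state is propagated forward by the elapsed time, and the pair is returned for the estimator's update step. `Track` and `AsyncPairer` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Track:
    t: float    # timestamp of the last known state (s)
    pos: Vec3   # last known position (m)
    vel: Vec3   # last known velocity (m/s)

    def predict(self, t: float) -> Vec3:
        # Assumed constant-velocity model: pos + vel * (t - t_last).
        dt = t - self.t
        return tuple(p + v * dt for p, v in zip(self.pos, self.vel))


class AsyncPairer:
    def __init__(self, drone: Track, target: Track):
        self.tracks = {"drone": drone, "target": target}

    def on_update(self, source: str, t: float, pos: Vec3, vel: Vec3):
        """Store the new measurement; return (drone_pos, target_pos) aligned at time t."""
        self.tracks[source] = Track(t, pos, vel)
        other = "target" if source == "drone" else "drone"
        paired = {source: pos, other: self.tracks[other].predict(t)}
        return paired["drone"], paired["target"]
```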

Finally, I also helped Vedant try to get the camera working with the Raspberry Pi 3. We found that the Raspberry Pi 3's kernel version did not support the IMX477 camera. We tried an experimental method to update the Pi 3's kernel, but the update left the Pi 3 unable to boot, so we decided to get a Raspberry Pi 4 instead.

For next week, I intend to start integration with the team. We will finish assembling the drone mount and I will help create the main function on the TX1 that integrates my state estimator and Vedant’s target detection. After this, we will test the communication between the TX1 and RPi and hopefully try a few test flights.

Team Status Report- 3/6/21

This week, we started setting up the Jetson TX1. We first tried installing the SDK for the TX1 from a Windows laptop, but this did not work because we were using WSL rather than an actual Ubuntu installation. So, we met up in the lab to download and configure JetPack and install ROS on top of it. During the process, we ran out of space on the internal memory. We then had to research how to copy the flash memory over to an SD card and boot the Jetson from an external SD card. We also started working on color filtering and blob detection to identify targets, using a red Microsoft t-shirt as the identifier. In addition, we set up the drone flight controller and calibrated its sensors. Finally, we designed a case for the TX1 to be 3D-printed and worked on the design presentation.
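As a minimal, dependency-free illustration of color filtering for a red target, the sketch below converts each pixel to HSV with Python's `colorsys` and keeps pixels whose hue is near red. Red sits at both ends of the hue circle, so both ends are accepted. It then returns the centroid of the kept pixels. This is a simplification: it assumes a single red target in frame and replaces true blob detection with one centroid. The thresholds and the `red_centroid` name are hypothetical. A real pipeline would more likely use OpenCV (e.g. `cv2.inRange` followed by connected components), where 8-bit hue runs 0 to 179 rather than 0 to 1.

```python
import colorsys


def red_centroid(image, min_pixels=1, sat_min=0.5, val_min=0.3, hue_tol=0.05):
    """image: rows of (r, g, b) tuples with 0-255 channels.

    Returns the (u, v) centroid of red-ish pixels, or None if fewer than min_pixels match.
    """
    su = sv = n = 0
    for v, row in enumerate(image):
        for u, (r, g, b) in enumerate(row):
            h, s, val = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # Hue wraps around at 0/1, so red shows up near both ends of the range.
            is_red_hue = h <= hue_tol or h >= 1 - hue_tol
            if is_red_hue and s >= sat_min and val >= val_min:
                su += u
                sv += v
                n += 1
    if n < min_pixels:
        return None
    return (su / n, sv / n)
```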

For next week, we will continue working on target identification, using test video captured from a two-story building to simulate aerial video from 20 feet up. In addition, we will start preliminary target state estimation using handcrafted test data and design a part to mount the camera to the drone. We will also install the necessary communication APIs on the RPi and set up an initial software pipeline for motion planning.

Alvin 3/6/21

I met up with Sid to help install the JetPack SDK and ROS on the TX1, but we weren't able to finish the full setup due to a memory shortage. I also helped build the design review presentation for this upcoming week.

This week was extremely busy for me, and as a result I didn't accomplish the goals I set last week: setting up a software pipeline for motion planning and testing it in the simulator. Instead, I set up the drone's flight controller and double-checked that my existing drone hardware was ready for use. The drone and flight controller already contain the bare minimum sensors needed for autonomous mode:

- gyroscope

- accelerometer

- magnetometer (compass)

- barometer

- GPS

I used the open-source QGroundControl software to calibrate these sensors. The accelerometer calibration is shown as an example below:

I also wired up other sensors to the drone’s flight controller:

- downward-facing Optical Flow camera

- downward-facing Lidar Lite range-finder

Next week, I will finish installing the communication APIs on our Raspberry Pi so that it can communicate with the flight controller, and verify the setup with ROS by sending an "Arm" command to the drone. I will also finish last week's task of setting up an initial software pipeline for motion planning.