Group:

I worked with my teammates to build a proposal presentation as well as a Gantt chart describing our semester schedule and general task division. We also worked together to decide which materials we need to buy and which we already own.

Personal:

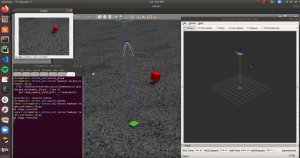

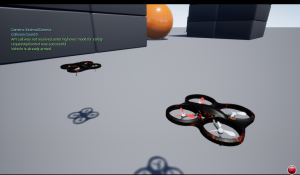

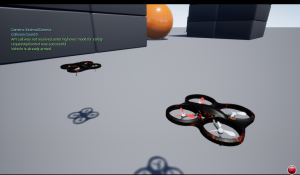

On my own task, I finished setting up the AirSim quadrotor simulator and familiarized myself with its API. The API provides both C++ and Python interfaces, but I will stick with Python, since many computer vision and trajectory optimization packages are Python-based. I chose AirSim because it simulates accurate nonlinear quadrotor dynamics under the hood. AirSim can also simulate wind, which is important for testing the robustness of our motion planning and controls. Most importantly, AirSim interfaces with the PX4 flight controller, which is exactly what runs on our real drone. This means I can develop and test all of my motion planning and controls in simulation, and they should run on the drone without modification.
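A minimal sketch of the kind of Python API calls involved, assuming the `airsim` pip package and a simulator already running on the same machine. The helpers `square_waypoints_ned` and `fly_waypoints` are hypothetical and only for illustration; the client methods are AirSim's own. AirSim uses a North-East-Down (NED) frame, so flying upward means a negative z value. The `simSetWind` call exists only in recent AirSim releases and should be dropped on older versions.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float, float]


def square_waypoints_ned(side_m: float, altitude_m: float) -> List[Waypoint]:
    """Corners of a square at a fixed altitude, in AirSim's NED frame.

    NED means +x north, +y east, +z down, so altitude becomes a negative z.
    """
    z = -altitude_m
    return [(side_m, 0.0, z), (side_m, side_m, z), (0.0, side_m, z), (0.0, 0.0, z)]


def fly_waypoints(waypoints: List[Waypoint], speed_mps: float = 3.0,
                  wind_ned: Waypoint = (0.0, 0.0, 0.0)) -> None:
    """Take off, visit each waypoint in order, then land (hypothetical helper)."""
    # Imported here so the waypoint helper above works without AirSim installed.
    import airsim

    client = airsim.MultirotorClient()  # connects to a simulator on localhost
    client.confirmConnection()
    client.enableApiControl(True)
    client.armDisarm(True)
    # Constant wind in the world NED frame, in m/s (recent AirSim releases only).
    client.simSetWind(airsim.Vector3r(*wind_ned))
    client.takeoffAsync().join()
    for x, y, z in waypoints:
        # *Async calls return a future; join() blocks until the move completes.
        client.moveToPositionAsync(x, y, z, speed_mps).join()
    client.landAsync().join()
    client.armDisarm(False)
    client.enableApiControl(False)
```

With the simulator running, `fly_waypoints(square_waypoints_ned(10.0, 5.0), wind_ned=(3.0, 0.0, 0.0))` should fly a 10 m square at 5 m altitude with a 3 m/s wind along +x, which is a quick way to eyeball how much a disturbance pushes the drone off its path.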

Next, I verified that one of our Wi-Fi modules works by connecting it to a Raspberry Pi and accessing the internet. I then confirmed that Wi-Fi can support ROS communication between the ground computer and the drone. I set up my laptop as the ROS “Master”, which registers publishers and subscribers so they can find each other, and the Raspberry Pi as a node. Because both devices were connected to my home Wi-Fi network, the nodes could communicate as long as they could reach the Master’s local IP address (and the laptop could reach the Pi’s address in return, since ROS nodes exchange messages directly once the Master has introduced them). To demonstrate this, I had the Raspberry Pi publish a “Hello World” string and verified that the laptop received these messages over the network. This was just a proof of concept; Sid will handle the details of the communication software and compare its bandwidth against other platforms.
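A minimal sketch of the Pi-side “Hello World” publisher, assuming ROS 1 with `rospy`. The helper names (`master_uri`, `configure_network`, `run_talker`), the node name `talker`, and the topic name `chatter` are illustrative, not our actual code. `ROS_MASTER_URI` tells a node where the Master lives (port 11311 by default), and `ROS_IP` sets the address the node advertises so the laptop can connect back to it.

```python
import os

MASTER_PORT = 11311  # default port that roscore listens on


def master_uri(master_ip: str, port: int = MASTER_PORT) -> str:
    """Build the ROS_MASTER_URI value that every node must point at."""
    return f"http://{master_ip}:{port}"


def configure_network(master_ip: str, own_ip: str) -> None:
    """Set ROS networking variables; must run before rospy is imported."""
    # Where to find the Master (running on the laptop).
    os.environ["ROS_MASTER_URI"] = master_uri(master_ip)
    # Address this node advertises, so subscribers can connect to it directly.
    os.environ["ROS_IP"] = own_ip


def run_talker(rate_hz: float = 1.0) -> None:
    """Publish "Hello World" on the chatter topic until shutdown."""
    # Imported here so the environment is configured first and the helpers
    # above can be loaded without a ROS install.
    import rospy
    from std_msgs.msg import String

    rospy.init_node("talker")
    pub = rospy.Publisher("chatter", String, queue_size=10)
    rate = rospy.Rate(rate_hz)
    while not rospy.is_shutdown():
        pub.publish(String(data="Hello World"))
        rate.sleep()
```

To try it, start the Master on the laptop with `roscore`, call `configure_network("<laptop-ip>", "<pi-ip>")` followed by `run_talker()` on the Pi, and run `rostopic echo /chatter` on the laptop; the messages should appear there. The laptop itself may also need `ROS_IP` set to its own address if its hostname does not resolve from the Pi.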

Next Steps:

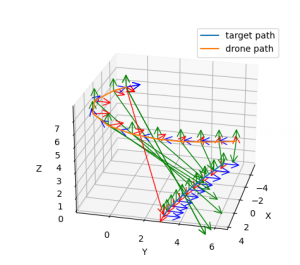

Overall, both our team and I are on schedule. I’ve already shipped the drone from my house, and it should arrive within the next few days so the others can begin prototyping hardware on it. Since I can initially do all of my testing in AirSim, this coming week I will begin building our motion planning software pipeline, assuming I am given a predicted future trajectory of the human target’s 3D positions. I will develop an initial implementation of trajectory generation and verify that it works in simulation. In the meantime, we will prepare for our design presentation.
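One simple way to turn predicted human positions into a smooth drone reference is sketched below. It assumes the predictions arrive as samples with strictly increasing timestamps (at least two of them) and a hypothetical fixed follow offset. It uses piecewise cubic Hermite interpolation with finite-difference velocities, a technique chosen here for illustration rather than the planner this report commits to; an optimization-based method such as minimum-snap could replace it later. All function names are hypothetical.

```python
from bisect import bisect_right
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def follow_waypoints(human: Sequence[Vec3], offset: Vec3) -> List[Vec3]:
    """Desired drone positions: each predicted human position plus a fixed offset."""
    return [tuple(h[i] + offset[i] for i in range(3)) for h in human]


def finite_difference_velocities(times: Sequence[float],
                                 points: Sequence[Vec3]) -> List[Vec3]:
    """Velocity estimate at each waypoint: one-sided at the ends, central inside."""
    n = len(points)
    vels = []
    for k in range(n):
        lo, hi = max(k - 1, 0), min(k + 1, n - 1)
        dt = times[hi] - times[lo]
        vels.append(tuple((points[hi][i] - points[lo][i]) / dt for i in range(3)))
    return vels


def hermite_sample(times: Sequence[float], points: Sequence[Vec3],
                   vels: Sequence[Vec3], t: float) -> Vec3:
    """Evaluate the piecewise cubic Hermite trajectory at time t.

    t is clamped to [times[0], times[-1]], so sampling past the prediction
    horizon returns the last waypoint.
    """
    t = min(max(t, times[0]), times[-1])
    k = min(bisect_right(times, t) - 1, len(times) - 2)  # segment index
    h = times[k + 1] - times[k]
    s = (t - times[k]) / h  # normalized time within the segment, in [0, 1]
    # Cubic Hermite basis functions.
    h00 = 2 * s**3 - 3 * s**2 + 1
    h10 = s**3 - 2 * s**2 + s
    h01 = -2 * s**3 + 3 * s**2
    h11 = s**3 - s**2
    p0, p1, v0, v1 = points[k], points[k + 1], vels[k], vels[k + 1]
    return tuple(h00 * p0[i] + h10 * h * v0[i] + h01 * p1[i] + h11 * h * v1[i]
                 for i in range(3))
```

For example, with an offset of `(-2.0, 0.0, -3.0)` in NED (2 m behind a target walking north, 3 m above it), the resulting curve passes through every offset waypoint with continuous velocity and can be sampled at the controller rate to produce position setpoints.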