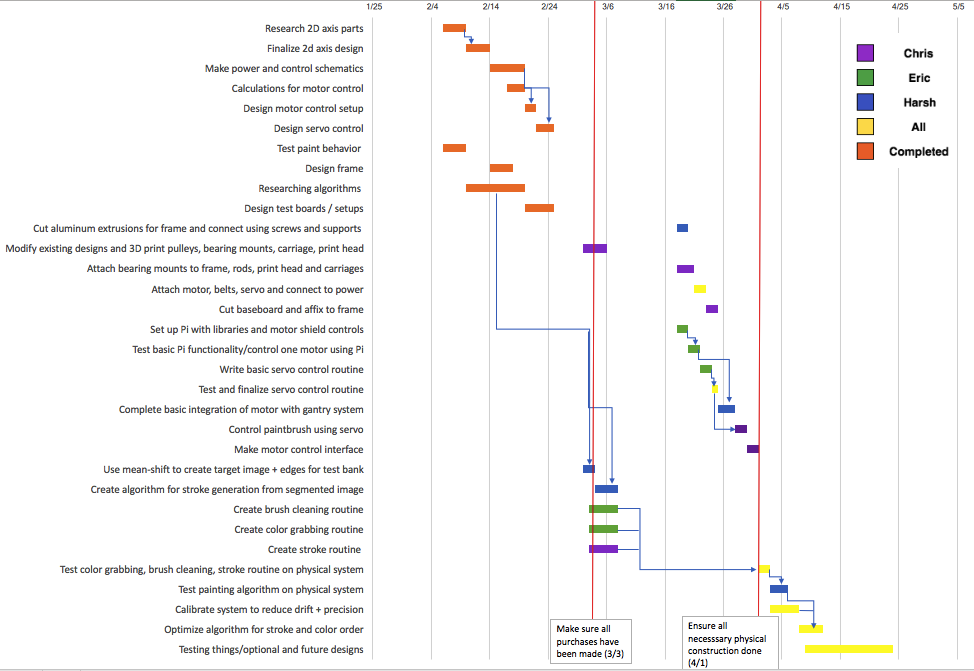

At the beginning of this week, I spent most of my time working on the team’s design review report. In addition to writing my contribution to the report content, I compiled and formatted the final document. The Raspberry Pi order has still not arrived, so I am unable to work directly with the Pi. I created a GitHub repository for the team’s code and continued working on the code for the motor controls. I am encountering some difficulties using the library, which I may need to solve by installing Linux on my computer.

My progress is a bit behind schedule because I was unable to complete most of the motor control code before Spring Break began. To make up for it, I will push myself to complete the code soon after break ends. The arrival of the Pi should also speed up the process, since I will finally be able to test the code on real hardware. In the week after break, I plan to finish the majority of the motor control code and test it on the Pi once it arrives.