This week our group made progress on schedule and began preparing for the upcoming interim demo.

Ray and Jerry are collaborating on the Kivy (UI) design pipeline. They ran into various implementation issues, including a switch of development environment (from Linux to Windows) and learning Kivy's methodology, which differs from traditional Python programming. Kivy also provides various widgets that seemed easy to implement and apply to our program, but they turned out to be far more complex than we had estimated. Fortunately, during our usual Friday meeting we worked together to debug and discuss elements of the UI design, which fixed most of the issues we had encountered in development. Ray also worked on file management, creating a basic file management system for storing reference and user poses.

In addition to the work mentioned above, Jerry has made significant progress on his pipeline for single-file uploads. His code has been integrated into the main program developed by Ray. This integration is a crucial step forward in the application's functionality, since it allows the application to accept and process single images. With this foundation in place, Jerry's next focus will be extending the application to accept and use pose sequences.

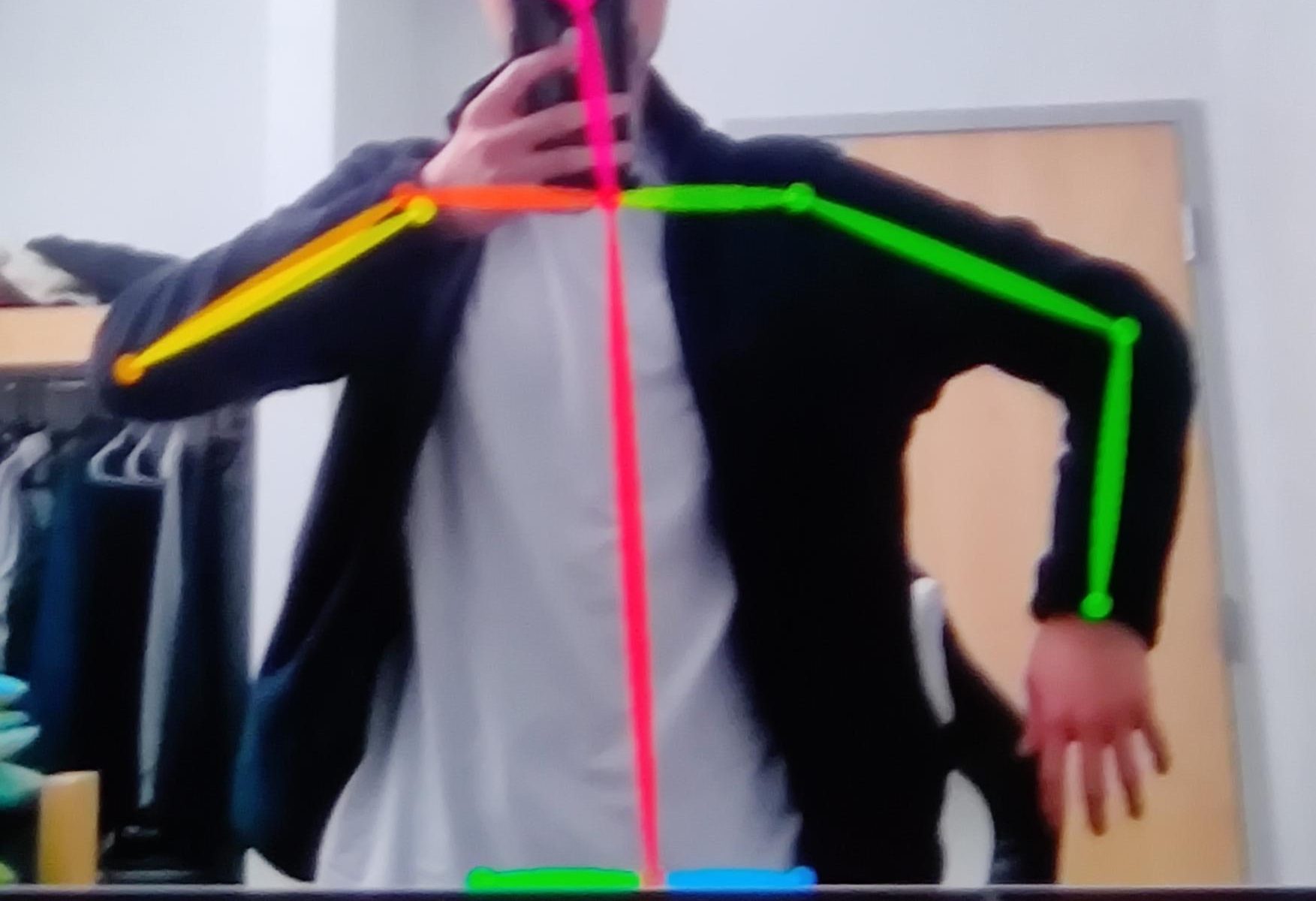

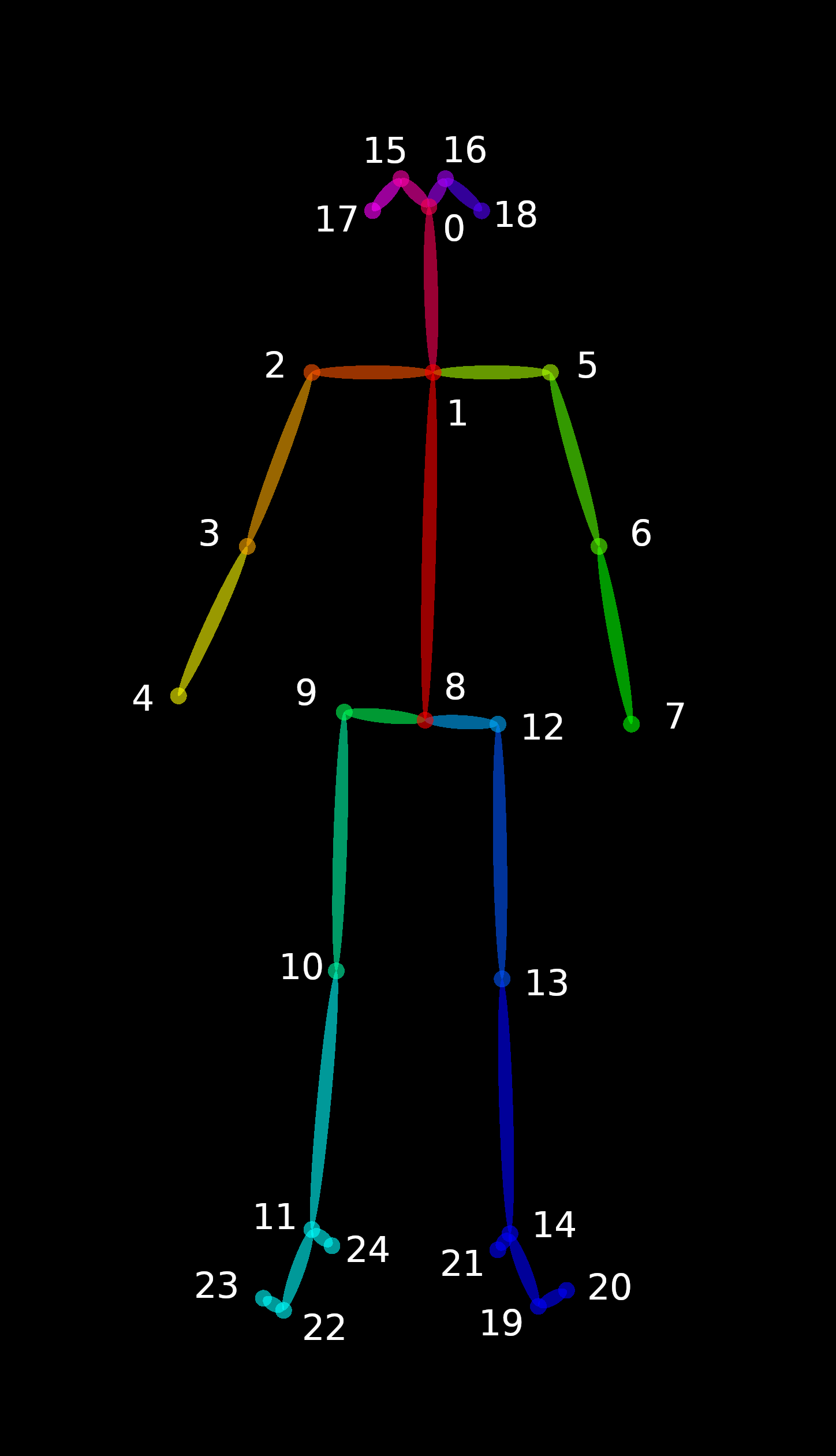

Eric (Hongzhe) continues to work on the OpenPose system and its integration into our application. He is also learning Kivy alongside Ray and Jerry to speed up application development and the integration of pose recognition. Continuing his progress from last week, Eric tested OpenPose extensively on various Taichi pose pictures so that the generated skeleton overlays the original picture. He is also setting up the output directory for the JSON files and working with Shiheng so that the comparison algorithm reads its inputs from our designated directory. Eric also helped with debugging throughout development, both in Kivy and in coordinating requirements with Shiheng's comparison algorithm, and he readily provides postures for Shiheng to use in unit testing the algorithm.
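Below is a minimal sketch of how the comparison side might read the designated output directory. It assumes OpenPose is run with its `--write_json` option. That option writes one `*_keypoints.json` file per image, containing a `people` list whose entries hold a flat `pose_keypoints_2d` array of (x, y, confidence) triples. The function names `parse_openpose` and `load_directory` are hypothetical and not part of our codebase. The sketch keeps only the first detected person.

```python
import json
from pathlib import Path


def parse_openpose(data):
    """Return the first person's keypoints as (x, y, confidence) tuples, or None if nobody was detected."""
    people = data.get("people", [])
    if not people:
        return None
    flat = people[0]["pose_keypoints_2d"]
    # The flat array stores consecutive [x, y, confidence] triples, one per keypoint.
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def load_directory(output_dir):
    """Map each *_keypoints.json file stem in output_dir to its parsed keypoints."""
    results = {}
    for path in sorted(Path(output_dir).glob("*_keypoints.json")):
        with open(path, "r", encoding="utf-8") as f:
            results[path.stem] = parse_openpose(json.load(f))
    return results
```

Keeping only the first person suits single-practitioner photos. Images with several people would need an explicit rule for choosing whose pose to compare.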

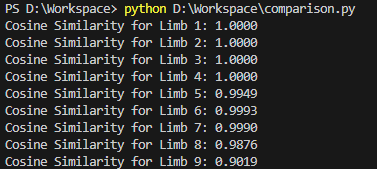

Shiheng worked independently on the comparison algorithm for our single-image input design. During our Friday meeting, however, the rest of the group reviewed his code and pointed out several parts to optimize, since some sections had originally been hardcoded for testing. After Shiheng fixed and improved the script based on the team's advice, it achieves most of the features we initially planned, including scoring, outputting joint angles, and invoking our voice engine. Since we have not yet decided how to integrate the voice module into our program, Shiheng will continue working with the rest of the group on playing the generated voice instructions. Polishing these details will be key to achieving a robust Taichi instructor tool.
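To illustrate what "outputting joint angles" and "scoring" can mean on OpenPose output, here is a hypothetical Python sketch. It is not Shiheng's script. It assumes keypoints in OpenPose's default BODY_25 ordering as (x, y, confidence) triples and computes the angle at each chosen joint from three keypoints. Joints with low confidence are skipped. A user pose is scored as the percentage of shared joints whose angle lies within a tolerance of the reference. The joint set, the 0.1 confidence threshold and the 15-degree tolerance are all illustrative assumptions.

```python
import math

# Hypothetical joint set; indices follow OpenPose's default BODY_25 ordering.
# Each joint angle is measured at the middle keypoint of the triple.
JOINTS = {
    "right_elbow": (2, 3, 4),    # RShoulder, RElbow, RWrist
    "left_elbow": (5, 6, 7),     # LShoulder, LElbow, LWrist
    "right_knee": (9, 10, 11),   # RHip, RKnee, RAnkle
    "left_knee": (12, 13, 14),   # LHip, LKnee, LAnkle
}


def angle_at(a, b, c):
    """Angle in degrees at vertex b between rays b->a and b->c, or None if a ray has zero length."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return None
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against floating-point drift just outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def joint_angles(keypoints, min_conf=0.1):
    """Compute angles for every joint whose three keypoints meet the (assumed) confidence threshold."""
    angles = {}
    for name, (i, j, k) in JOINTS.items():
        pts = (keypoints[i], keypoints[j], keypoints[k])
        if any(p[2] < min_conf for p in pts):
            continue  # OpenPose reports undetected keypoints with confidence 0
        angle = angle_at(*pts)
        if angle is not None:
            angles[name] = angle
    return angles


def score(reference, user, tolerance=15.0):
    """Hypothetical rule: percentage of shared joints within `tolerance` degrees, plus signed differences."""
    ref, usr = joint_angles(reference), joint_angles(user)
    shared = ref.keys() & usr.keys()
    if not shared:
        return 0.0, {}
    diffs = {name: usr[name] - ref[name] for name in shared}
    hits = sum(1 for d in diffs.values() if abs(d) <= tolerance)
    return 100.0 * hits / len(shared), diffs
```

Comparing angles rather than raw coordinates makes the score independent of where the person stands in the frame and of image scale. The signed values in `diffs` indicate which way each joint is off, which is the kind of information a voice instruction could be built from.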