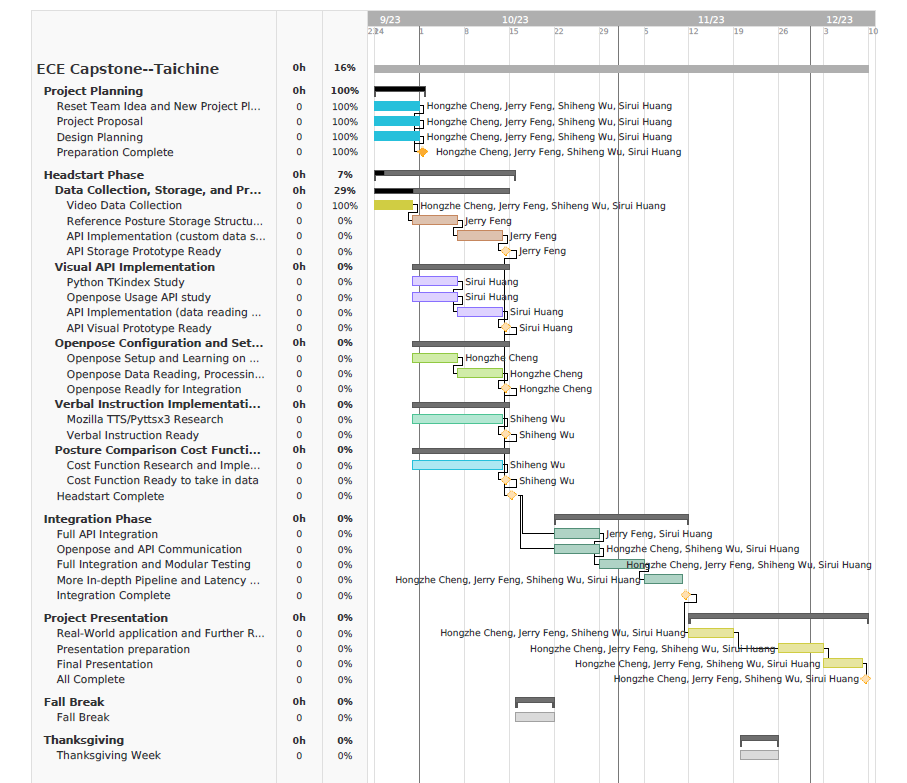

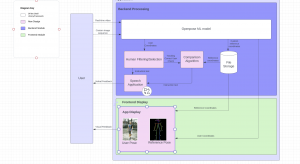

This week, a new member joined our team. We held two or three meetings to discuss the division of labor and the technical details of the project, and we made solid progress on our implementation design. On my part, I researched the structure of the OpenPose API and the packages we could use to implement our user interface.

OpenPose's API supports both Python and C++ for exchanging data with the model. After discussing it with my teammates (specifically Jerry), we decided to use Python, since it offers more options for implementing the user interface. The most time-consuming computation in our system happens inside OpenPose itself, while the API layer is only responsible for reading OpenPose's outputs, so choosing Python should not cost us much runtime efficiency.
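To illustrate how thin the Python layer on top of OpenPose can be, here is a minimal sketch that only reads OpenPose's results. It assumes we run OpenPose with its `--write_json` option, which writes one JSON file per frame where each entry in `people` carries a flat `pose_keypoints_2d` list of x, y, confidence triplets, instead of calling the Python bindings directly. The helpers `parse_openpose_json` and `visible` and the confidence threshold are hypothetical names and values of my own.

```python
import json
from typing import List, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)


def parse_openpose_json(text: str) -> List[List[Keypoint]]:
    """Parse one per-frame JSON file written by OpenPose's --write_json option.

    Each detected person has a flat "pose_keypoints_2d" list of
    x, y, confidence triplets; regroup it into (x, y, c) tuples.
    """
    data = json.loads(text)
    people = []
    for person in data.get("people", []):
        flat = person.get("pose_keypoints_2d", [])
        people.append([
            (flat[i], flat[i + 1], flat[i + 2])
            for i in range(0, len(flat) - 2, 3)
        ])
    return people


def visible(person: List[Keypoint], min_conf: float = 0.05) -> List[Keypoint]:
    """Keep only keypoints above a (hypothetical) confidence threshold.

    Undetected keypoints are typically reported as (0, 0, 0), so this drops them.
    """
    return [kp for kp in person if kp[2] > min_conf]


if __name__ == "__main__":
    # Hand-written sample in the assumed format: one person, two keypoints.
    sample = '{"version": 1.3, "people": [{"pose_keypoints_2d": [100.0, 200.0, 0.9, 0.0, 0.0, 0.0]}]}'
    people = parse_openpose_json(sample)
    print(len(people))          # 1
    print(people[0][0])         # (100.0, 200.0, 0.9)
    print(visible(people[0]))   # [(100.0, 200.0, 0.9)]
```

All the heavy pose estimation stays inside OpenPose; Python only does cheap parsing like this, which is why the language choice should not noticeably affect runtime.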

The GUI package I plan to use is Tkinter. Based on my research, it has great potential as the tool for implementing our system: it supports drawing graphics and offers extensive support for GUI elements. (Below is the tutorial I’m watching.)
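As a rough idea of where the prototype could start, here is a sketch of drawing pose keypoints on a Tkinter `Canvas`. The keypoints follow OpenPose's (x, y, confidence) triplet layout, but the helper names `oval_bbox` and `draw_keypoints`, the confidence threshold, and the demo coordinates are hypothetical choices for illustration. Tkinter is imported inside `main()` so the drawing helpers can be exercised without a display.

```python
from typing import Iterable, Tuple


def oval_bbox(x: float, y: float, r: float = 4.0) -> Tuple[float, float, float, float]:
    """Bounding box (x0, y0, x1, y1) of a circle of radius r centred on (x, y)."""
    return (x - r, y - r, x + r, y + r)


def draw_keypoints(canvas, keypoints: Iterable[Tuple[float, float, float]],
                   min_conf: float = 0.05, r: float = 4.0) -> int:
    """Draw each sufficiently confident keypoint as a filled dot; return how many were drawn."""
    drawn = 0
    for x, y, conf in keypoints:
        if conf > min_conf:
            canvas.create_oval(*oval_bbox(x, y, r), fill="red", outline="")
            drawn += 1
    return drawn


def main() -> None:
    # Imported here so the helpers above can be used and tested without a display.
    import tkinter as tk

    root = tk.Tk()
    root.title("Pose prototype")
    canvas = tk.Canvas(root, width=640, height=480, bg="white")
    canvas.pack()
    # Hypothetical hard-coded keypoints standing in for real OpenPose output;
    # the last one has zero confidence and is skipped.
    demo = [(320.0, 100.0, 0.9), (320.0, 180.0, 0.8), (0.0, 0.0, 0.0)]
    draw_keypoints(canvas, demo)
    tk.Button(root, text="Quit", command=root.destroy).pack(pady=4)
    root.mainloop()
```

Calling `main()` on a machine with a display should open a 640×480 window with two red dots and a Quit button. Keeping the drawing logic separate from window setup should make it easy to swap the demo points for parsed OpenPose frames later.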

I was briefly taught how OpenPose works in Computer Graphics (15-462) and had read about it before. However, I had never integrated the model into a full system, so this past week I read about OpenPose's API usage on its GitHub page. The official OpenPose repository has some helpful tutorials to refer to; I read through some of them but have not yet had the chance to run them. I plan to install and build OpenPose on my laptop this weekend.

I am back on schedule this week. Next week, I plan to get familiar with the Tkinter package and the OpenPose API and start building a prototype UI for our system. I look forward to seeing how it turns out!