During the week of 9/30, I focused on researching the main design tradeoffs for our project. This covered two areas: evaluating text-to-speech (TTS) options available in Python, and understanding how to implement cosine-similarity-based posture analysis. Each TTS option has its own pros and cons in terms of internet dependence, voice quality, and language support. For cosine similarity, I looked at how it applies to posture analysis, with particular attention to choosing an appropriate similarity threshold. This research will inform our design decisions in the upcoming phases of the project.

In Python, I examined three TTS solutions: the gTTS API, pyttsx3, and Mozilla TTS. gTTS offers flexibility in preprocessing and tokenizing text and supports multiple languages with customizable accents. However, it requires internet access because it is API-based. Conversely, pyttsx3 provides greater customization options but sounds less natural than gTTS. Mozilla TTS is high-quality and works offline, but it requires research into voice training and choosing a voice engine ourselves. Based on this comparison, I determined that Mozilla TTS is the best option of the three. I also made a backup plan in case we move to C++ and found TTS engines that fit that approach.
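To make the online/offline tradeoff concrete, here is a minimal sketch of how the two simpler options might be called. It is not our project code: the function names `speak_offline` and `save_online`, the default file name, and the speaking rate are assumptions for illustration. Both packages are third-party and are imported inside the functions so that only the one actually used needs to be installed. Mozilla TTS is left out because its setup depends on the model we end up choosing.

```python
def speak_offline(text, rate=150):
    """Speak text through the local speech engine with pyttsx3 (no internet needed)."""
    import pyttsx3  # third-party: pip install pyttsx3

    engine = pyttsx3.init()
    engine.setProperty("rate", rate)  # speaking rate, roughly words per minute
    engine.say(text)
    engine.runAndWait()  # blocks until the utterance has finished


def save_online(text, path="feedback.mp3", lang="en", tld="com"):
    """Synthesize text to an MP3 file with gTTS (needs internet access)."""
    from gtts import gTTS  # third-party: pip install gTTS

    # `tld` selects the Google domain used, which changes the accent,
    # e.g. tld="co.uk" for a British English voice.
    gTTS(text=text, lang=lang, tld=tld).save(path)
    return path
```

For example, `speak_offline("Straighten your back")` would speak the phrase immediately, while `save_online("Straighten your back")` would write an MP3 file that still has to be played back by something else.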

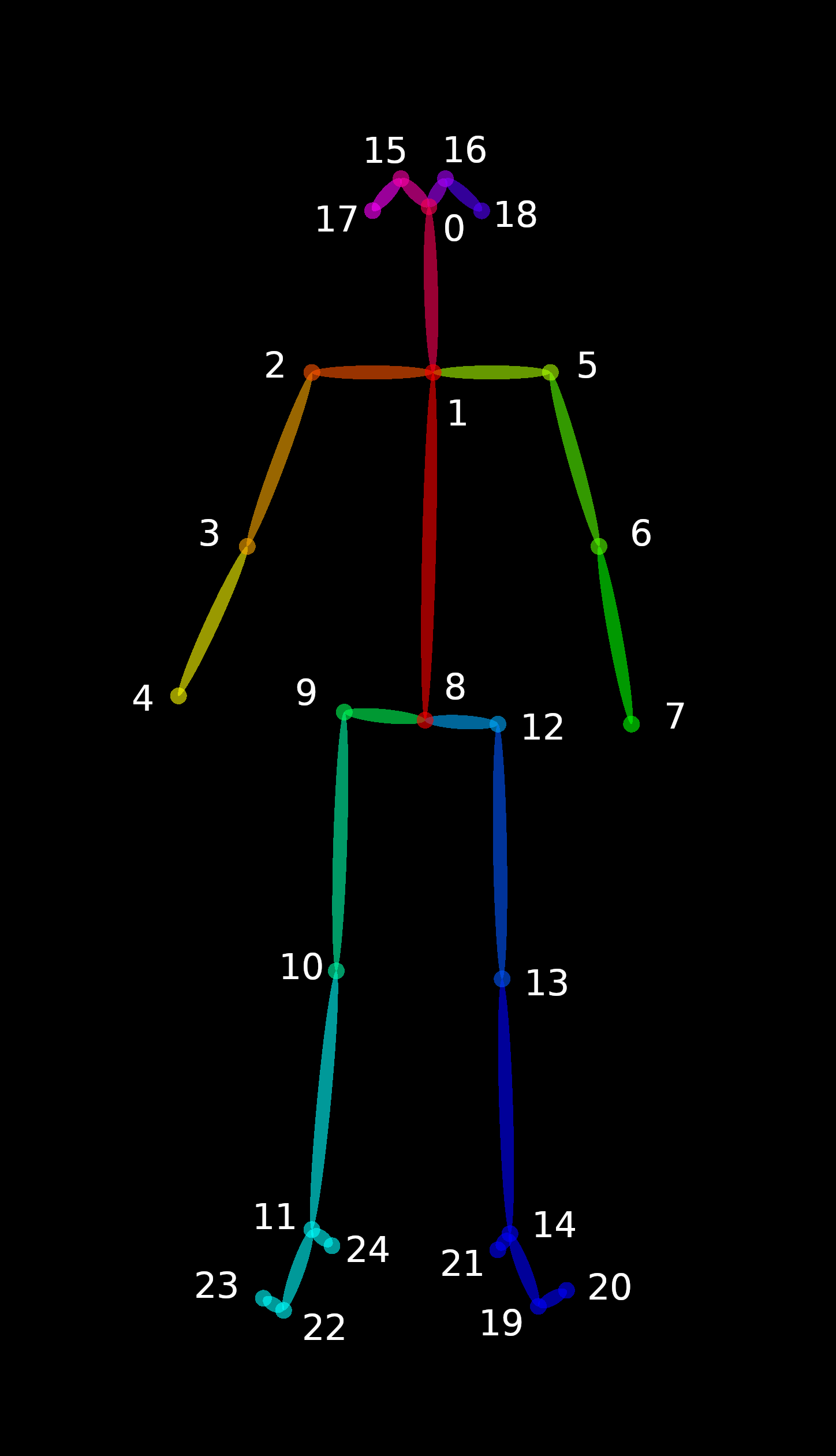

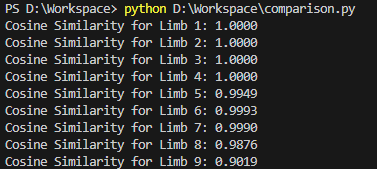

In parallel, I studied the math behind cosine similarity for posture analysis. Cosine similarity compares the angle between two vectors and is scale-invariant, which makes it a good fit for body pose analysis. A key design decision is the similarity threshold, possibly somewhere between 80% and 90%. This threshold determines whether two postures count as sufficiently similar or not. Understanding these tradeoffs helps us make informed choices in developing our posture analysis system, balancing accuracy against the flexibility to accommodate varying body sizes and orientations.
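The threshold check described above can be sketched in a few lines of Python. This is a minimal illustration, not our final implementation: it assumes each posture has already been flattened into a list of numbers (for example, limb direction components), and the names `cosine_similarity` and `postures_match` as well as the 0.85 default are placeholders picked from the middle of the 80% to 90% range.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def postures_match(a, b, threshold=0.85):
    """Treat two posture vectors as similar if they meet the threshold."""
    return cosine_similarity(a, b) >= threshold


# Hypothetical posture vectors: the second is the first scaled by 2,
# standing in for the same pose held by a larger person.
reference = [1.0, 2.0, 3.0]
scaled = [2.0, 4.0, 6.0]
different = [3.0, -1.0, 0.5]

print(postures_match(reference, scaled))     # same direction, so they match
print(postures_match(reference, different))  # very different direction
```

Because only the angle between the vectors matters, scaling one vector does not change the score, which is the scale-invariance property that makes the metric tolerant of different body sizes.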

The comprehensive evaluation of TTS tools has provided insights into the advantages and disadvantages of each option, enabling us to make an informed choice aligning with our project’s goals. These efforts represent significant progress toward the successful execution of our project, empowering us to make well-informed design decisions moving forward.

ABET: For cosine similarity, I drew on the vector comparison and algebra concepts from 18-290 and 18-202.

For the Python coding, I drew on previous CS courses and my personal experience with Python. This week I researched TTS packages by reading their GitHub documentation and developer websites.