My team and I have made considerable progress on the project over the past two weeks. Apart from some minor bugs, our application generally works as intended, and we are actively refining it to make it more convenient for our users.

To briefly summarize my work over the past two weeks:

a. Training page posture sequencing implemented

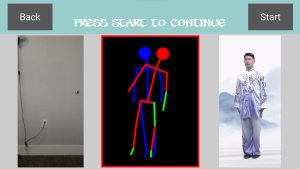

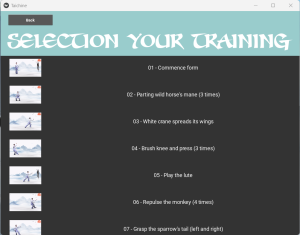

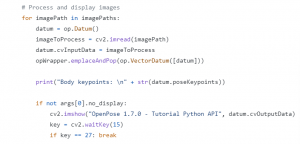

Last Saturday and Sunday, I worked on training page posture sequencing, following the implementation plan I had prepared in advance. Since I finished the posture drawing functionality ahead of schedule, I implemented the posture sequencing functionality as well.

Now, for a Taichi posture sequence, the training page goes through all subposes in the sequence so that users can train on the full Taichi posture. When the user correctly performs a subpose, the training automatically switches to the next subpose.

I also added a new user-configurable parameter, “move-on time”, to the settings screen; it controls the interval between subposes. It differs from the “preparation time” parameter, which is the interval between the moment the start button is pressed and the evaluation of the first subpose.
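The sequencing behaviour described above, including the two timing parameters, can be sketched as a small timer-driven state machine. This is a minimal Python sketch under stated assumptions, not our app's actual code: the class `PoseSequencer`, its `start`/`update` methods, and the idea that a pose evaluator reports a per-frame `pose_correct` verdict are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PoseSequencer:
    """Hypothetical sketch: steps through the subposes of one Taichi posture."""
    subposes: List[str]
    preparation_time: float  # seconds from pressing start to evaluating the first subpose
    move_on_time: float      # seconds between finishing one subpose and evaluating the next
    index: int = 0
    _evaluate_after: Optional[float] = None  # timestamp when evaluation may (re)start

    def start(self, now: float) -> None:
        # Start button pressed: wait `preparation_time` before evaluating the first subpose.
        self.index = 0
        self._evaluate_after = now + self.preparation_time

    @property
    def finished(self) -> bool:
        return self.index >= len(self.subposes)

    @property
    def current(self) -> Optional[str]:
        return None if self.finished else self.subposes[self.index]

    def update(self, now: float, pose_correct: bool) -> None:
        """Call once per camera frame with the (assumed) pose evaluator's verdict."""
        if self.finished or self._evaluate_after is None or now < self._evaluate_after:
            return  # not started, already done, or still inside a waiting interval
        if pose_correct:
            # Subpose done: advance, then wait `move_on_time` before evaluating the next one.
            self.index += 1
            self._evaluate_after = now + self.move_on_time


# Usage with made-up subpose names and timings.
seq = PoseSequencer(["raise arms", "step left"], preparation_time=3.0, move_on_time=1.0)
seq.start(now=0.0)
seq.update(now=1.0, pose_correct=True)  # ignored: still within preparation time
seq.update(now=3.5, pose_correct=True)  # first subpose done, move on
print(seq.current)                      # step left
seq.update(now=4.0, pose_correct=True)  # ignored: within move-on time (until 4.5)
seq.update(now=5.0, pose_correct=True)  # last subpose done
print(seq.finished)                     # True
```

Passing timestamps in explicitly, rather than reading a clock inside the class, keeps the timing logic easy to test independently of the camera loop.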

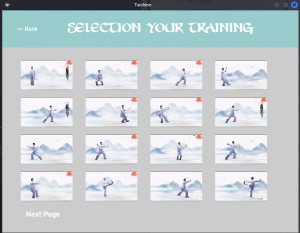

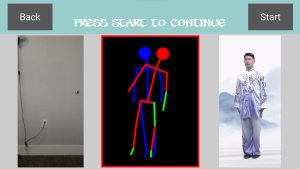

Below is an image of the new training page (image provided by Shiheng (Roland)).

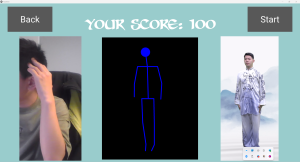

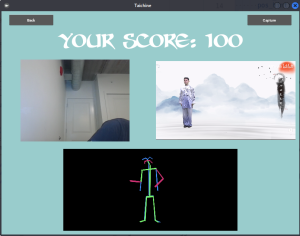

b. Result page implemented

When training on a Taichi posture ends, the training screen now switches to a result screen showing the average score, the total time, and a random tip on how to use our application correctly.
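The result screen's numbers can be computed from data collected during training. This is a minimal Python sketch, assuming the average score is the mean of the per-subpose scores and the total time is measured from the start of training to its end; the `summarize_training` function, the `TrainingResult` type, and the tip list are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class TrainingResult:
    """Hypothetical container for the values shown on the result screen."""
    average_score: float
    total_time: float  # seconds
    tip: str


def summarize_training(scores: Sequence[float], start_time: float, end_time: float,
                       tips: Sequence[str],
                       rng: Optional[random.Random] = None) -> TrainingResult:
    """Assumes one score per subpose and timestamps in seconds."""
    if not scores:
        raise ValueError("at least one subpose score is required")
    rng = rng or random.Random()
    return TrainingResult(
        average_score=sum(scores) / len(scores),  # mean of the subpose scores
        total_time=end_time - start_time,         # elapsed training time
        tip=rng.choice(list(tips)),               # one tip picked uniformly at random
    )


# Usage with placeholder scores and tips.
result = summarize_training([80, 90, 70], start_time=10.0, end_time=52.5,
                            tips=["placeholder tip A", "placeholder tip B"])
print(result.average_score, result.total_time)  # 80.0 42.5
```

Accepting an optional `random.Random` instance lets tests pass a seeded generator so the chosen tip is reproducible.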

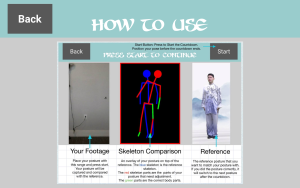

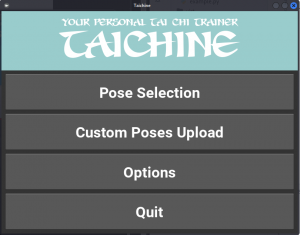

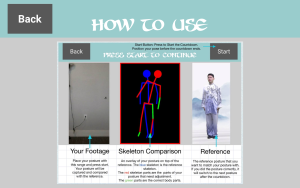

c. Tutorial page implemented

I created a new page with a tutorial on how to use our application correctly; it is accessible from the menu page. Below is the tutorial page.

d. Minor fixes for better user experience

The selection screen now has much larger pose items, making the image previews easier to see. The back buttons on all screens have been enlarged. I also fixed a few small timer display issues on the training screen.

Overall, my team and I are back on schedule and are finalizing our project. Next week, I will fix the bugs and issues I found during testing and brainstorm extra features to add to our application.