This week I mostly focused on comparative user testing. During our final demo, the professors asked to see user testing that compares how accurately users match the limb angles of the reference pose when training with a video versus training with our system.

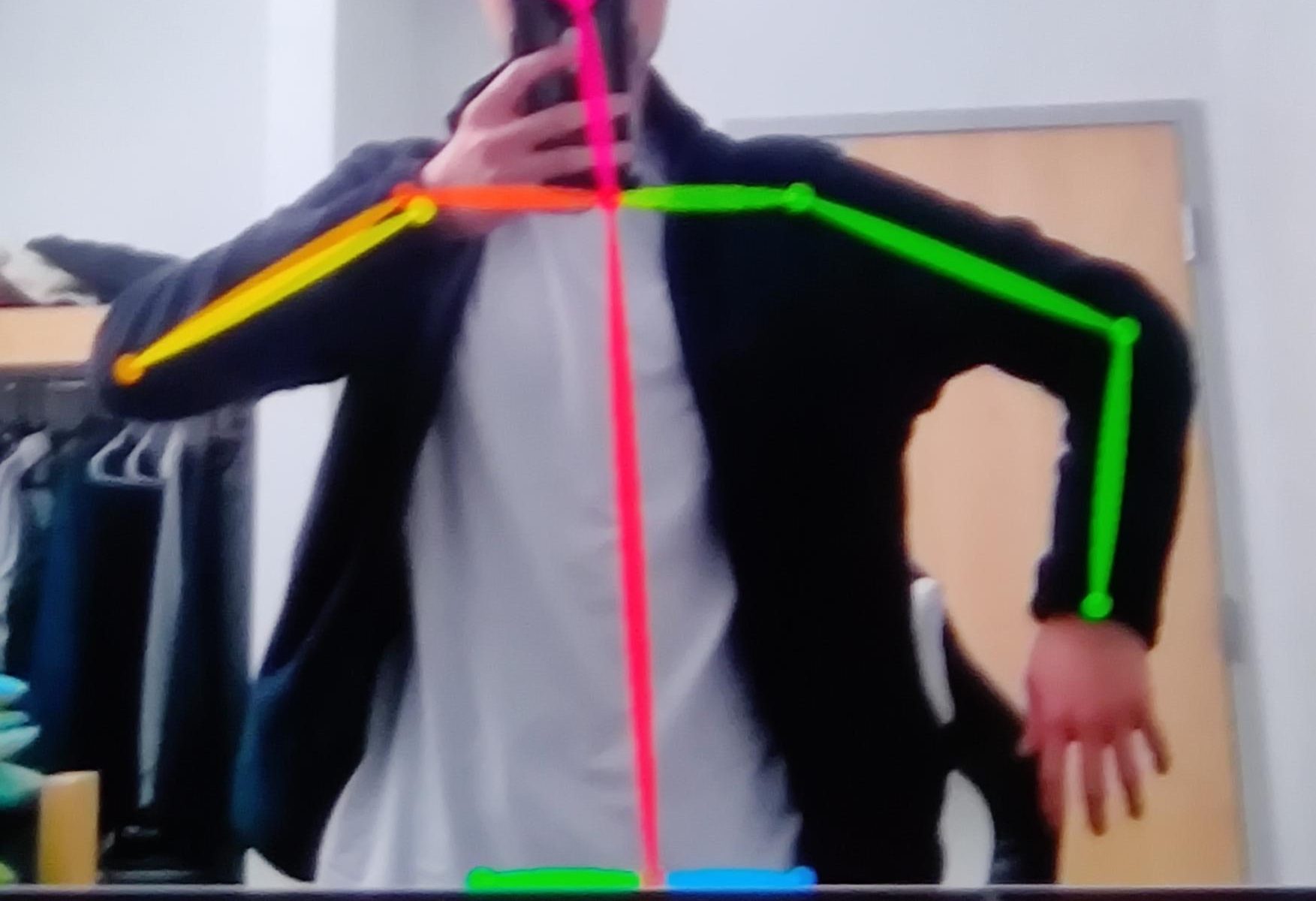

Users first trained with a side-by-side setup: the video Ray gathered the reference poses from, with a webcam feed next to it to mimic a mirror, so they could look at their own pose and adjust. I asked the users to train on the “repulse the monkey” pose sequence from the video. They trained with the video for 5 minutes, and then I took a picture of the pose they felt they did best. Next, the users trained on our system for 5 minutes with a tolerance of 5 degrees, and I used the latest training image and keypoint-coordinates JSON file the system saved for evaluation.

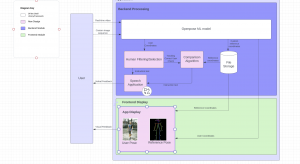

I fed the picture of each user's pose from the video session into OpenPose to get a JSON file of keypoint coordinates, and then fed that file, together with the reference pose’s JSON file, into the comparison algorithm to get a list of similarities. I did the same with the JSON file of the users’ keypoints from training with our system. I am still analyzing the files, so the findings here are preliminary. Averaged over all of our similarities, the users did better with the video than with our system. However, when we split the similarities up by joint angle type, we see that this is due to one main outlier: the angle between the right calf/shin and the right foot. Other joint angles also show large differences in favor of training without our app, but none are nearly as dramatic as the angle between the right calf/shin and the right foot.
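To make the pipeline above more concrete, here is a minimal sketch of how one joint angle could be read out of an OpenPose JSON file and compared against the reference pose. It is not our actual comparison algorithm: the function names are hypothetical, it assumes OpenPose's default BODY_25 keypoint layout, and it uses a plain absolute difference in degrees as a stand-in for the similarity measure.

```python
import json
import math

# BODY_25 keypoint indices (assumption: OpenPose's default body model is used).
R_KNEE, R_ANKLE, R_BIG_TOE = 10, 11, 22


def load_keypoints(json_text):
    """Return (x, y, confidence) tuples for the first person in an OpenPose JSON."""
    data = json.loads(json_text)
    flat = data["people"][0]["pose_keypoints_2d"]
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def joint_angle(a, b, c):
    """Unsigned angle in degrees at point b, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    return abs(math.degrees(math.atan2(cross, dot)))


def angle_difference(user_kp, ref_kp, joint):
    """Absolute difference in degrees between the user's and reference joint angle.

    Hypothetical stand-in for the comparison algorithm's similarity measure.
    """
    a, b, c = joint
    user = joint_angle(user_kp[a], user_kp[b], user_kp[c])
    ref = joint_angle(ref_kp[a], ref_kp[b], ref_kp[c])
    return abs(user - ref)
```

With this layout, the right calf/shin to right foot angle would be `angle_difference(user_kp, ref_kp, (R_KNEE, R_ANKLE, R_BIG_TOE))`. A real comparison would probably also want to skip keypoints with low confidence.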

The full plot of the similarity differences can be found below. The y axis is the similarities of the users’ joint angles when training without our app minus the similarities of the users’ joint angles when training with our app. Thus a negative value means the users showed better similarity to the reference pose when training without our app, and a positive value means the users showed better similarity to the reference pose when training with our app.
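As a rough sketch of the bookkeeping behind that plot, the snippet below computes the per-joint difference (without our app minus with our app) and shows how a single joint can dominate the overall average. The joint names and numbers are made-up placeholders for illustration, not our measured results, and the helper names are hypothetical.

```python
from statistics import mean


def similarity_differences(without_app, with_app):
    """Per-joint difference: value without the app minus value with the app."""
    return {joint: without_app[joint] - with_app[joint] for joint in without_app}


def mean_excluding(differences, excluded_joint):
    """Average of the differences with one joint left out."""
    return mean(v for joint, v in differences.items() if joint != excluded_joint)


# Placeholder values only, NOT measured data.
without_app = {"right_elbow": 0.5, "left_knee": 0.25, "right_ankle": -4.0}
with_app = {"right_elbow": 0.25, "left_knee": 0.5, "right_ankle": 4.0}

differences = similarity_differences(without_app, with_app)
overall = mean(differences.values())                      # pulled down by one joint
trimmed = mean_excluding(differences, "right_ankle")      # the rest roughly cancel
```

Looking at `differences` joint by joint, rather than only at `overall`, is what lets an outlier such as the right calf/shin to right foot angle stand out.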

I also helped make the poster and final presentation this week, including a short gif of the custom upload pipeline in action.

We are well on schedule: we are currently testing and evaluating our system, and nothing remains to be done with our project in terms of functionality.

For deliverables, we plan to finish making the video and writing the final report so we can turn them in on time.

ABET Question 6:

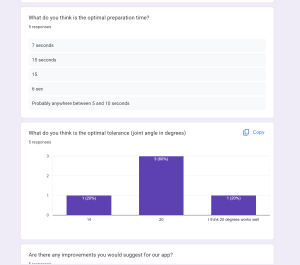

In terms of tests, I ran numerous unit tests on my custom file upload feature, checking its functionality, making sure widgets were ordered correctly, and confirming that the feature was robust under continuous use. For full system testing, our team ran many full system tests during the integration phase of our project, and the user testing and comparative user testing also doubled as full system tests. From these tests, we found that users overall felt the app was a bit too slow. One user who mentioned it was very slow had run into a bug in our system where OpenPose kept running in the training loop even after the user exited the training screen. I fixed this bug, and our user scores for the speed of our app went up.
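For readers curious about this class of bug, below is a generic sketch of one common way to make sure a background loop stops when the user leaves a screen. It is not the actual fix in our code: `TrainingLoop` and `process_frame` are hypothetical names, and the real loop would call OpenPose where this sketch calls a stand-in function.

```python
import threading
import time


class TrainingLoop:
    """Runs process_frame repeatedly on a background thread until stop() is called."""

    def __init__(self, process_frame, interval=0.01):
        self._process_frame = process_frame  # stand-in for the pose-estimation step
        self._interval = interval
        self._stop_event = threading.Event()
        self._thread = None

    def start(self):
        self._stop_event.clear()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        # Check the stop flag on every iteration so the loop cannot outlive the screen.
        while not self._stop_event.is_set():
            self._process_frame()
            self._stop_event.wait(self._interval)

    def stop(self):
        # Call this from the handler that runs when the user exits the training screen.
        self._stop_event.set()
        if self._thread is not None:
            self._thread.join()
            self._thread = None

    @property
    def running(self):
        return self._thread is not None and self._thread.is_alive()
```

The key design choice is that `stop()` both sets the flag and joins the thread, so once it returns no further frames are processed.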