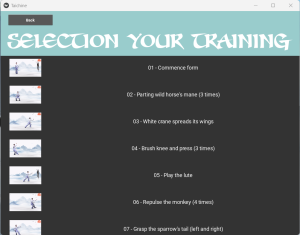

This week I am excited to announce that I have finished pose sequence support for the custom image upload page. Users can now dynamically remove and reorder images in a sequence before uploading. They can also name the pose they would like to use; by default, the pose takes the name of the first image in the sequence. Users upload a sequence by selecting multiple files from their computer's native file manager, which opens as a popup. Full functionality can be seen in the video below:

https://youtu.be/ZXbXMUYbDSE (In the video I accidentally say "custom image upload support"; I meant custom pose sequence upload support.)
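
For readers who want a concrete picture of the behavior described above, here is a minimal sketch of how a pose sequence could be modeled. It is not our actual page code: the `PoseSequence` type and the helper functions `poseName`, `removeImage`, `moveImage`, and `renamePose` are hypothetical names made up for illustration. It also assumes that the default name follows whichever image is currently first until the user types a custom name.

```typescript
// Hypothetical model of a pose sequence while it is being assembled for upload.
interface PoseSequence {
  files: string[];           // image file names, in the order they will be uploaded
  customName: string | null; // null until the user types their own pose name
}

// The pose name defaults to the name of the first image in the sequence.
// Assumption: the default tracks the current first image after edits.
function poseName(seq: PoseSequence): string {
  if (seq.customName !== null) return seq.customName;
  return seq.files.length > 0 ? seq.files[0] : "";
}

// Remove the image at `index`; out-of-range indices leave the sequence unchanged.
function removeImage(seq: PoseSequence, index: number): PoseSequence {
  if (index < 0 || index >= seq.files.length) return seq;
  return { ...seq, files: seq.files.filter((_, i) => i !== index) };
}

// Move the image at position `from` to position `to`, shifting the others.
function moveImage(seq: PoseSequence, from: number, to: number): PoseSequence {
  const n = seq.files.length;
  if (from < 0 || from >= n || to < 0 || to >= n) return seq;
  const files = [...seq.files];
  const [moved] = files.splice(from, 1);
  files.splice(to, 0, moved);
  return { ...seq, files };
}

// Record a user-chosen pose name, overriding the default.
function renamePose(seq: PoseSequence, name: string): PoseSequence {
  return { ...seq, customName: name };
}
```

Each helper returns a new object instead of mutating the old one, which tends to make it easier to re-render the image previews after every change. For example, starting from `["a.png", "b.png", "c.png"]`, calling `moveImage(seq, 2, 0)` gives the order `c.png, a.png, b.png`, and the default pose name becomes `c.png`.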

I believe I am on track with the adjusted schedule my team put together, under which we wanted to finish custom pose sequence support before Thanksgiving. The meat of that work is now done: users can make dynamic changes to a pose during the upload phase, and the images upload in the correct order.

In terms of deliverables, I hope to finish tweaking the button sizes so that the buttons do not overlap the image when only one image is being uploaded.

ABET Question:

One of the main ways we have grown as a team is that our communication has steadily improved the more we have worked together. Originally, before I joined, the team communicated predominantly through WeChat, but they were very gracious in switching to Slack to better accommodate me. We have also gotten better at using Slack's features to organize the questions we ask each other as we coordinate the integration of our project. Finally, we have gotten better at actively maintaining an open atmosphere in our team meetings so that everyone can voice their opinions and have a say in the direction of the project. Initially, I think we were a little reticent to speak up, but now everyone is comfortable sharing their opinions and discussing differences in viewpoint, which makes for a better project.