This week I researched (1) how our remote web app server can communicate with the Pi (how alarm data is passed from one to the other), and (2) how alarm data is stored. As a result, I decided to make two adjustments to our current system design: (1) AWS SQS will be used to send data from the remote server to the Pi, and (2) cron jobs will be scheduled on the Pi instead of on the remote web app server.

My reason for change (1) is that MongoDB alone is not sufficient for real-time communication between the Pi and the remote server. When a user creates a new alarm on the frontend interface, the data can be sent to the backend and stored in MongoDB immediately, but in our setup MongoDB only stores the data; nothing tells the Pi that a new alarm exists or which one it is, so the newly created alarm cannot reach the Pi in real time. This means that instead of the Python Requests library, the Pi will use the AWS SDK for Python (boto3) to read from SQS, and the remote server will use the AWS SDK for JavaScript (Node.js) to write to it.
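Since an SQS message body is a plain string, the alarm has to be serialized on the server and parsed again on the Pi. Here is a minimal sketch of that round trip, assuming JSON as the format and the two fields used in the pseudocode (`time` and `ringtone`). The function names `encode_alarm` and `decode_alarm` are hypothetical and not part of any SDK.

```python
import json


def encode_alarm(time, ringtone):
    """Serialize an alarm into a string that can be used as a message body."""
    return json.dumps({"time": time, "ringtone": list(ringtone)})


def decode_alarm(body):
    """Parse a message body back into an alarm dict, rejecting malformed input."""
    alarm = json.loads(body)
    if not isinstance(alarm, dict):
        raise ValueError("alarm payload must be a JSON object")
    if not isinstance(alarm.get("time"), str):
        raise ValueError("alarm is missing a 'time' string")
    if not isinstance(alarm.get("ringtone"), list):
        raise ValueError("alarm is missing a 'ringtone' list")
    return alarm
```

Validating on the Pi side means a malformed message is rejected before it ever reaches the scheduling step.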

While change (1) is absolutely necessary, change (2) is more up for debate and can be reverted later. I made this change because it eliminates the potential latency between the web app and the Pi when an alarm starts. We did not initially plan to schedule cron jobs on the Pi because we wanted to make sure the Pi has sufficient computing power to run the CV code efficiently. I checked that a cron job does not use many resources (nor does the connection to SQS), and since only one cron job is scheduled per regular alarm, the Pi should probably be fine.
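To make the scheduling idea concrete, here is a rough sketch of how an alarm could be turned into a crontab entry on the Pi. It rests on several assumptions that are not settled yet: the alarm time is a 24-hour `"HH:MM"` string, a regular alarm repeats daily, and the ringtone is passed to a hypothetical player script (`/home/pi/play_alarm.py`) as a comma-separated argument. The helper name `build_cron_line` is also made up for illustration.

```python
import shlex


def build_cron_line(time_str, ringtone, script="/home/pi/play_alarm.py"):
    """Return a crontab entry that runs `script` every day at time_str ("HH:MM")."""
    hour_str, minute_str = time_str.split(":")
    hour, minute = int(hour_str), int(minute_str)
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError(f"invalid alarm time: {time_str!r}")
    # Pass the ringtone as one comma-separated argument, e.g. "1,2,3".
    notes = ",".join(str(int(n)) for n in ringtone)
    command = f"python3 {shlex.quote(script)} {shlex.quote(notes)}"
    # crontab fields: minute hour day-of-month month day-of-week command
    return f"{minute} {hour} * * * {command}"


print(build_cron_line("07:30", [1, 2, 3]))
# prints: 30 7 * * * python3 /home/pi/play_alarm.py 1,2,3
```

This only builds the line; installing it into the Pi's crontab is a separate step that is left out here.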

I have some pseudo code for this approach at the end of the update.

Since I spent more time on research and on the ethics readings, and also had big assignments from other classes, I have only written pseudocode instead of actual code. As a result, I am running a little behind schedule this week, but I will make up for it next week.

My plan for next week is to turn the pseudocode into actual code, with the exception of the addToCron() call on the last line of the Pi pseudocode.

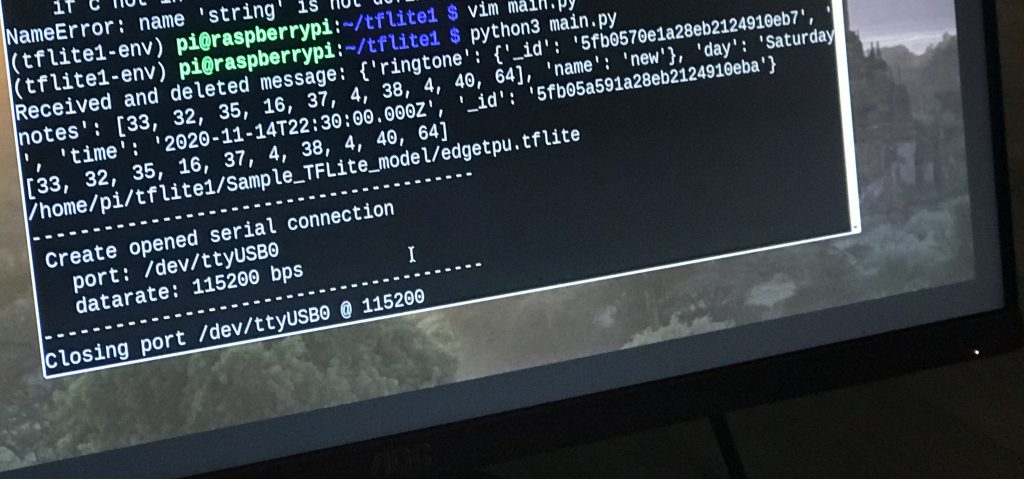

On Pi:

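    import json
    import logging

    import boto3

    logging.basicConfig(level=logging.INFO)

    sqs = boto3.resource('sqs')
    q = sqs.get_queue_by_name(QueueName='name')

    while True:
        # Long-poll so the loop waits for messages instead of spinning.
        for message in q.receive_messages(WaitTimeSeconds=20):
            # Assumes the server sends the alarm as a JSON string in the message body.
            alarmData = json.loads(message.body)
            logging.info(alarmData)
            message.delete()
            addToCron(alarmData["time"], alarmData["ringtone"])  # not implemented yet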

...

On remote server (web app backend):

    // Express setup
    var express = require("express");
    var app = express();
    app.use(express.json()); // parse JSON request bodies

    // MongoDB init
    var mongoose = require("mongoose");
    mongoose.Promise = global.Promise;
    mongoose.connect("mongodb://<LOCAL HOST>");

    var alarmSchema = new mongoose.Schema({
        time: String,
        ringtone: [Number]
    });
    var Alarm = mongoose.model("Alarm", alarmSchema);

    // SQS setup
    var AWS = require('aws-sdk');
    AWS.config.update({region: 'REGION'});
    var sqs = new AWS.SQS({apiVersion: '2012-11-05'});

    // Build the SQS request; the alarm travels as a JSON string in the message body.
    const buildMsg = (alarmData) => {
        return {
            MessageBody: JSON.stringify({
                time: alarmData.time,
                ringtone: alarmData.ringtone
            }), // payload
            QueueUrl: "SQS_QUEUE_URL"
        };
    };

    app.post("/add-alarm", (req, res) => {
        var alarmData = new Alarm(req.body);
        alarmData.save() // alarm saved for permanent storage
            .then(item => {
                // alarm added to queue, which Pi can read from
                return sqs.sendMessage(buildMsg(item)).promise()
                    .catch(err => { throw new Error('Alarm data is not passed to Pi'); });
            })
            .then(() => {
                res.send("alarm created");
            })
            .catch(err => {
                // reached if either the save or the SQS send fails
                res.status(400).send("unable to create the alarm");
            });
    });

...