Over Thanksgiving I decided to keep using a NoSQL database instead of switching to SQL. NoSQL leaves room for future development. For example, we might want to create a “big ringtone”: one long ringtone made up of 2-4 original ringtones, since the Create2 Open Interface limits a single ringtone to at most 16 notes. That would be much harder to model with a fixed data schema. The dynamic schema also lets us change direction during development. Besides, we don't need any advanced filtering or querying. The most “advanced” filter is probably selecting alarms by user ID to get a user's private alarm data; other than that, it is usually just “get all ringtones” or “get all alarms”.
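To make the flexibility argument concrete, here is a minimal sketch of how a big ringtone could live in a schemaless store. The actual field names and stack aren't stated here, so `make_ringtone`, `make_big_ringtone`, the `(note, duration)` pairs, and fields like `parts` and `owner` are all hypothetical. The idea is that a long melody is split into 16-note parts, and those parts are simply nested inside one document, with no schema migration needed.

```python
from typing import Any, Dict, List, Optional, Tuple

# The Create2 Open Interface caps a single song (ringtone) at 16 notes.
MAX_NOTES_PER_SONG = 16
# A "big ringtone" chains 2-4 original ringtones.
MIN_PARTS, MAX_PARTS = 2, 4

Note = Tuple[int, int]  # hypothetical (note_number, duration) pair


def make_ringtone(name: str, notes: List[Note], owner: Optional[str] = None) -> Dict[str, Any]:
    """Build a hypothetical ringtone document holding 1-16 notes."""
    if not 1 <= len(notes) <= MAX_NOTES_PER_SONG:
        raise ValueError("a ringtone must have 1-16 notes")
    return {"type": "ringtone", "name": name, "notes": list(notes), "owner": owner}


def make_big_ringtone(name: str, notes: List[Note], owner: Optional[str] = None) -> Dict[str, Any]:
    """Split a long melody into 16-note parts nested in a single document."""
    parts = [notes[i:i + MAX_NOTES_PER_SONG] for i in range(0, len(notes), MAX_NOTES_PER_SONG)]
    if not MIN_PARTS <= len(parts) <= MAX_PARTS:
        raise ValueError("a big ringtone must span 2-4 parts")
    return {
        "type": "big_ringtone",
        "name": name,
        "owner": owner,
        # Nested sub-documents: a fixed relational schema would typically
        # need an extra table (or a migration) to represent this.
        "parts": [make_ringtone(f"{name} (part {i + 1})", p, owner) for i, p in enumerate(parts)],
    }


melody = [(60, 16), (62, 16), (64, 16), (65, 16)] * 10  # 40 notes
big = make_big_ringtone("Scale Loop", melody, owner="user123")
print([len(part["notes"]) for part in big["parts"]])  # [16, 16, 8]
```

Each part stays within the 16-note limit, so it could still be sent to the robot as an ordinary ringtone, while the web app treats the whole thing as one document.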

Deliverables completed this week:

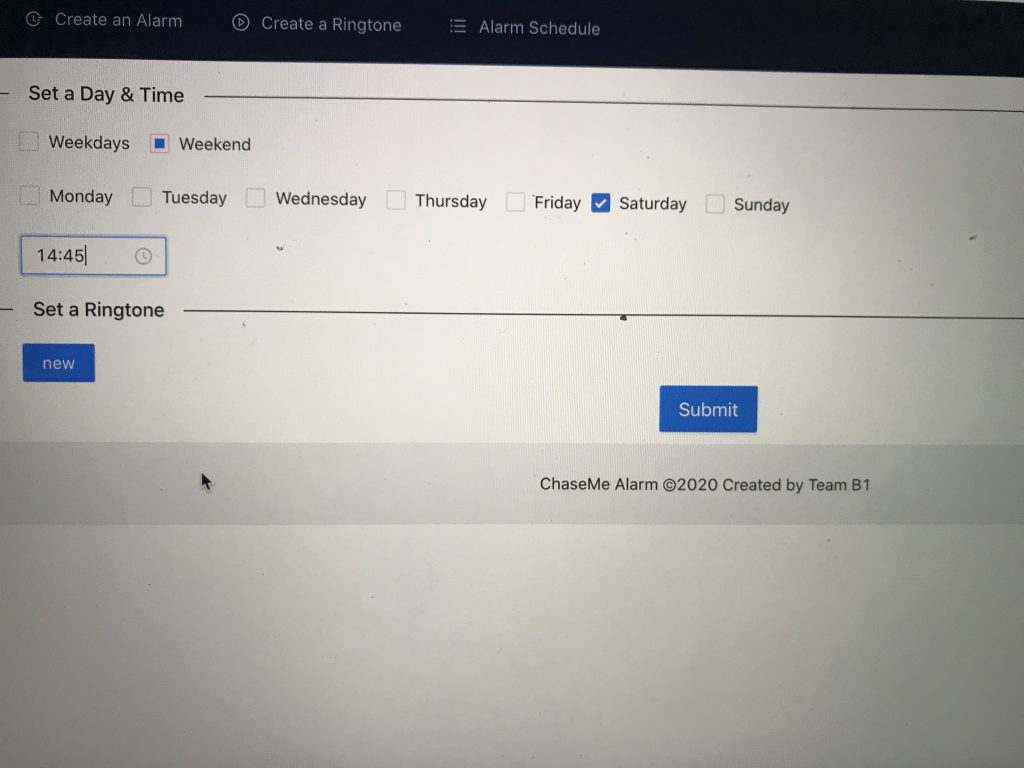

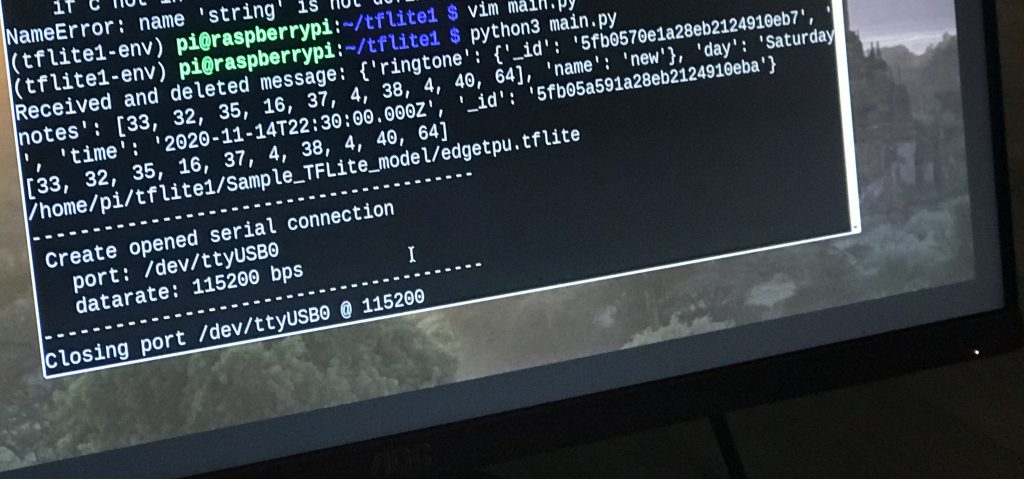

- continued testing communication between the Pi and the web app

- implemented a logging feature for my own use that keeps track of the messages sent from the web app to the Pi

- implemented user login

    - users can now view only their own private alarms, but both public ringtones and their own private ringtones

- implemented alarm deletion

    - tested by validating the logs after manually creating and deleting alarms

- implemented ringtone deletion

- deployed the website using pm2

    - live at http://ec2-3-129-61-132.us-east-2.compute.amazonaws.com:4000/
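The visibility rules for logged-in users (private alarms only for their owner; ringtones visible if public or owned) can be sketched as two filters. This is a minimal in-memory illustration assuming hypothetical document fields `owner` and `public`; in the deployed app the same conditions would more likely be expressed as a database query filter rather than applied in application code.

```python
from typing import Any, Dict, List

Doc = Dict[str, Any]


def visible_alarms(alarms: List[Doc], user_id: str) -> List[Doc]:
    """Alarms are always private: return only those owned by user_id."""
    return [a for a in alarms if a.get("owner") == user_id]


def visible_ringtones(ringtones: List[Doc], user_id: str) -> List[Doc]:
    """Return every public ringtone plus the user's own private ones."""
    return [r for r in ringtones if r.get("public", False) or r.get("owner") == user_id]


alarms = [
    {"id": 1, "owner": "alice", "time": "07:00"},
    {"id": 2, "owner": "bob", "time": "06:30"},
]
ringtones = [
    {"id": "a", "public": True, "owner": "bob"},
    {"id": "b", "public": False, "owner": "alice"},
    {"id": "c", "public": False, "owner": "bob"},
]
print([a["id"] for a in visible_alarms(alarms, "alice")])  # [1]
print([r["id"] for r in visible_ringtones(ringtones, "alice")])  # ['a', 'b']
```

Keeping both rules as simple ownership checks matches the point above that the app never needs more than filtering by user ID.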

Next Week:

- complete the final video and report

- minor UI/UX touch-ups