This week, we gave our last in-class demo and finished testing. Our results against each metric were as follows:

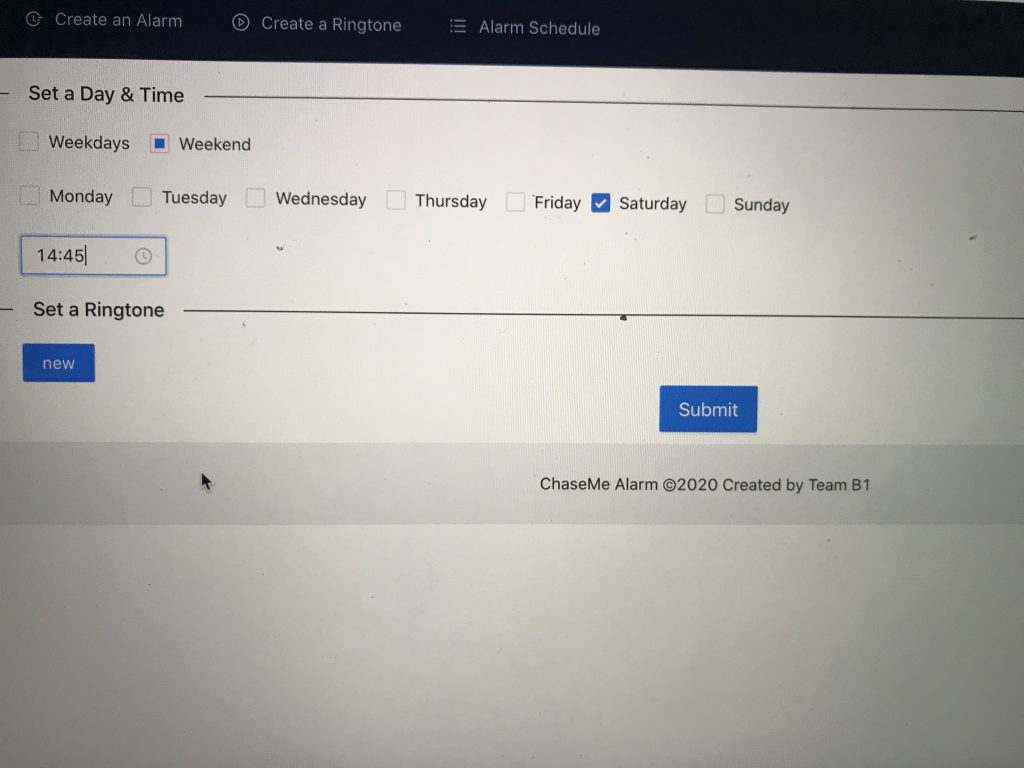

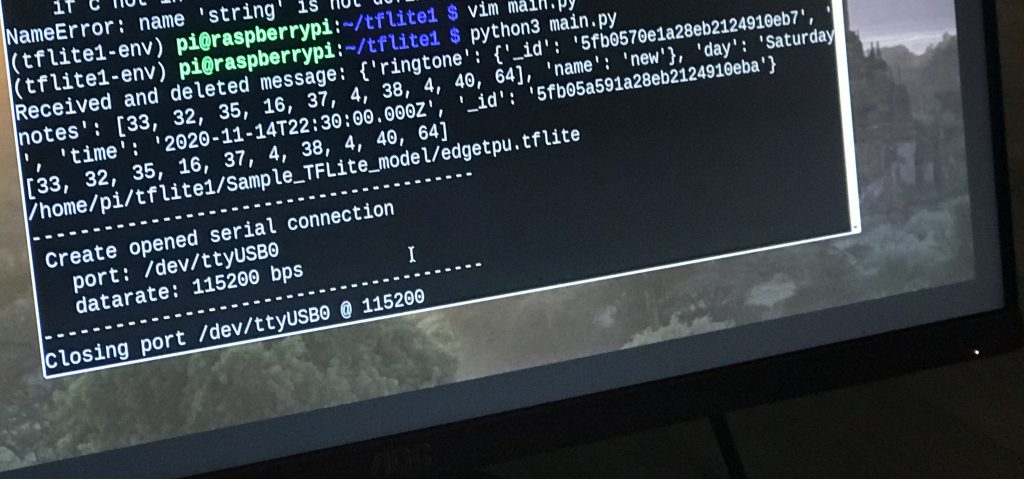

- Delay from the web app sending a signal to the Raspberry Pi receiving it < 1 s: We tested this latency by setting an alarm for 1:00 at 12:59:59 and checking whether the Raspberry Pi received the message within the next second.

- Delay from the robot receiving the message to the robot activating < 5 s: Not met. Initializing the camera stream and CV algorithm on the Raspberry Pi takes around 8.3 s.

- Delay from facial recognition to the chase starting < 0.25 s: Achieved.

- Fast image processing, ML pipeline > 10 FPS: Achieved. Our algorithm runs at around 13 FPS on the Raspberry Pi.

- Accurate human detection, false positives: None occurred during testing.

- Accurate human detection, false negatives: These occurred under poor lighting conditions.

- Effective chase duration > 30 s and overall linear chase distance > 5 m: Achieved.
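One way to reproduce an FPS figure like the one above is to push a fixed batch of frames through the pipeline and divide the frame count by the elapsed wall-clock time. The sketch below is a minimal illustration under that assumption, not our actual test code: `measure_fps`, `process_frame`, `meets_fps_target`, and the injectable `clock` are hypothetical names, and a real run would feed frames from the Pi camera into the CV pipeline instead of the dummy frames used here.

```python
import time
from typing import Callable, Iterable

# Hypothetical threshold taken from our metric: ML pipeline FPS > 10.
FPS_TARGET = 10.0


def measure_fps(process_frame: Callable[[object], object],
                frames: Iterable[object],
                clock: Callable[[], float] = time.perf_counter) -> float:
    """Return the average frames per second process_frame achieves over frames.

    `clock` is injectable (hypothetical design choice) so the timing can be
    tested deterministically; by default it uses time.perf_counter.
    """
    count = 0
    start = clock()
    for frame in frames:
        process_frame(frame)  # run one frame through the (assumed) CV pipeline
        count += 1
    elapsed = clock() - start
    if count == 0 or elapsed <= 0:
        raise ValueError("need at least one frame and a positive elapsed time")
    return count / elapsed


def meets_fps_target(fps: float, target: float = FPS_TARGET) -> bool:
    """Check the strict 'FPS > target' condition from our metric."""
    return fps > target


if __name__ == "__main__":
    # Dummy pipeline stand-in: a real test would call the detection model here.
    def dummy_process(frame: object) -> object:
        return frame

    fps = measure_fps(dummy_process, range(100))
    print(f"{fps:.1f} FPS, meets target: {meets_fps_target(fps)}")
```

Averaging over many frames, rather than timing a single one, smooths out per-frame jitter from the camera and OS scheduling on the Pi.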

We also settled the schedule and division of work for the final video, and filmed many runs of people interacting with the robot.

Next Week:

Finish the final video and start writing the final paper.