Author: peizhiy

Team Status Update for 12/5

Echo & Page:

- Finished testing our completed version of the project against our metrics.

- Filmed footage for the final video

- Decided on the division of labor for the final video

We finished the project on time and have started working on the final video and report.

Yuhan:

- implemented a logging feature

- implemented user login: users can now view only their own private alarms, but can view both public ringtones and their own private ringtones

- implemented alarm & ringtone deletion

- deployed the website using pm2
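The visibility rule above can be sketched as a pair of filters. This is a minimal Python sketch, not the actual backend (which is written in JavaScript on MongoDB); the `Item` record and its `owner` and `public` fields are hypothetical stand-ins for whatever the real schema uses.

```python
from dataclasses import dataclass


@dataclass
class Item:
    owner: str            # hypothetical: username of the creator
    public: bool = False  # hypothetical: whether other users may see it
    name: str = ""


def visible_alarms(alarms, user):
    """Alarms are private: a user sees only the alarms they own."""
    return [a for a in alarms if a.owner == user]


def visible_ringtones(ringtones, user):
    """A user sees every public ringtone plus their own private ones."""
    return [r for r in ringtones if r.public or r.owner == user]
```

In MongoDB itself, the ringtone rule would presumably become an `$or` query over the same two conditions.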

I am currently on schedule.

The website is currently live at http://ec2-3-129-61-132.us-east-2.compute.amazonaws.com:4000/

Peizhi Yu’s Status Update for 12/5

This week, we gave our last in-class demo and finished testing. Our results against each metric are as follows:

- Delay from the web app sending a signal to the Raspberry Pi receiving it < 1 s: We tested this latency by setting, at 12:59:59, an alarm for 1:00 and checking whether the Raspberry Pi received the message within the next second.

- Delay from the robot receiving the message to the robot activating < 5 s: Not met. Initializing the camera stream and CV algorithm on the Raspberry Pi takes around 8.3 s.

- Delay from facial recognition to chase starting < 0.25s: Achieved

- Fast image processing: ML pipeline FPS > 10: Achieved around 13 FPS running our algorithm on our Raspberry Pi.

- Accurate human detection (false positives): none occurred during testing.

- Accurate human detection (false negatives): these occur under poor lighting conditions.

- Effective chase duration > 30s, chase overall linear distance > 5m: Achieved.
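A check for the first metric could look like the following minimal sketch. It assumes the Pi records the time it receives each alarm message and compares it with the scheduled alarm time; the function name and the choice to count an early arrival as a failure are our assumptions, not part of the original test.

```python
from datetime import datetime, timedelta

# Metric from the report: web app -> Raspberry Pi delay must be under 1 s.
LATENCY_BUDGET = timedelta(seconds=1)


def within_budget(scheduled: datetime, received: datetime,
                  budget: timedelta = LATENCY_BUDGET) -> bool:
    """True if the message arrived no earlier than the scheduled alarm
    time (assumption) and strictly less than `budget` after it."""
    delay = received - scheduled
    return timedelta(0) <= delay < budget
```

For the test described above, `scheduled` would be 1:00 and `received` the timestamp the Pi logged for that message.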

We also settled on the schedule and division of work for the final video, and filmed many runs of people interacting with the robot.

Next Week:

Finish the final video, start writing the final paper.

Peizhi Yu’s Status Update for 11/21

This week, I exhaustively tested the success rate and the limitations of our obstacle-dodging algorithm:

- Due to limitations of the sensors on the robotic base, our algorithm cannot handle transparent obstacles such as a water bottle.

- It has an 80% success rate at going around an obstacle without touching it. The obstacle must have a diameter of less than 15 cm; otherwise, it is recognized as a wall.

I identified the following problems:

- The robot uses reflection-based optical sensors, so transparent obstacles are not detected.

- The algorithm essentially makes the robot turn when it comes close to an object, and keep moving forward when none of the sensors has a reading. The problem with this approach is that the 6 sensors cover only about 120 degrees in front of the robot. Although I added 0.25 s of extra turning, the larger the obstacle, the more likely the robot is to scrape it.

- Simply increasing the extra rotation time will not fix the problem, because it makes our robot look kind of silly.
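The turn-then-forward rule, including the 0.25 s of extra turning, can be sketched as a small controller. The `Dodger` class and the reading threshold of 50 are hypothetical; the turn direction and the actual drive commands for the Create 2 are left out, and the clock is injectable so the timing can be tested.

```python
import time

THRESHOLD = 50        # hypothetical: a reading above this means "object close"
EXTRA_TURN_S = 0.25   # extra turning after the front clears (from the report)


class Dodger:
    """Turn while any of the 6 front sensors sees an object; once all read
    clear, keep turning for `extra_turn_s` seconds, then drive forward."""

    def __init__(self, threshold=THRESHOLD, extra_turn_s=EXTRA_TURN_S,
                 clock=time.monotonic):
        self.threshold = threshold
        self.extra_turn_s = extra_turn_s
        self.clock = clock
        self.turning = False
        self.clear_since = None  # when the sensors first read clear after a turn

    def step(self, readings):
        now = self.clock()
        if any(r > self.threshold for r in readings):
            # Something is in front: (keep) turning, reset the extra-turn timer.
            self.turning = True
            self.clear_since = None
            return "turn"
        if self.turning:
            if self.clear_since is None:
                self.clear_since = now
            if now - self.clear_since < self.extra_turn_s:
                return "turn"  # extra turning to compensate for the ~120 degree field
            self.turning = False
            self.clear_since = None
        return "forward"
```

Because the sensors only see the front of the robot, the extra turn is a fixed guess; a larger obstacle needs more rotation than any single constant provides, which is the limitation described above.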

Team Status Update for 11/14

Echo & Page

Implemented a script on the Raspberry Pi that communicates with the back end of the web application. The Raspberry Pi now receives a message from the back end at the user-specified alarm time, and after activation it also receives and plays the user-specified ringtone.

Implemented wall detection in our final script. After detecting a wall using our own algorithm, the robot halts and waits for the next request from the server.

We tested a portable power supply and confirmed that it works, so we can now put everything onto the robot and start final optimization and testing.

We are on schedule.

Yuhan

This week (week of 11/9 – 11/15):

- prepared for interim demo on Wednesday

- cleared and prepared sample alarm and schedule data

- deployed the current implementation to EC2 (Ubuntu)

- tested code snippet on Pi

- supported Echo and Peizhi in the integration of the code snippet into the main program running on Pi

- set up access for Echo and Peizhi for easier debugging

Next week (week of 11/16 – 11/22):

- continue testing communication between Pi and web app

- modify the communication code snippet on the RPi so it writes to files instead of printing to stdout (to keep a record of messages and when they are received, and so validate the reliability of alarm scheduling)

- implement alarm and ringtone deletion

- research and implement the database change
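Writing received messages to a file with timestamps could be done with Python's standard `logging` module, as in this minimal sketch. The logger name, file path and message format are placeholders, not the team's actual code.

```python
import logging


def make_message_logger(path, name="alarm_messages"):
    """Return a logger that appends timestamped lines to `path`."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep these records out of the root logger / stdout
    if not logger.handlers:   # avoid duplicate handlers if called twice
        handler = logging.FileHandler(path, encoding="utf-8")
        # asctime records when each message was received on the Pi.
        handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
        logger.addHandler(handler)
    return logger
```

Usage on the Pi would be along the lines of `log = make_message_logger("messages.log")` once at startup, then `log.info("received alarm %s", body)` wherever the snippet currently prints.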

I am on schedule.

Problems: refer to personal update.

Team Status Update for 11/7

Echo & Peizhi

This Week:

- Installed heatsinks on our Raspberry Pi 3 and mounted a 3.5-inch screen in its shroud.

- Implemented our own wall-detection algorithm based on the robot's sensor data

- Optimized the obstacle detection and avoidance algorithm

Problems:

- Purchased the wrong product: we expected a power bank, but it turned out to be a power adapter

- The captured video does not fit on the 3.5-inch screen, even with a 100×150 display window

Next week:

- Integrate the web application and the hardware platform

- Further optimization, and mounting the Pi and camera onto the base

- Purchase a power bank

We are on schedule.

Yuhan

This week:

- implemented backend with MongoDB

- web app data is now pulled from and stored to the cloud

- implemented communication between the web app and the Pi with SQS (message queue)

- messages are sent from the web app backend (JavaScript, scheduled by node-cron), and received and deleted on the Pi (Python)

- not yet tested on the Pi; tested on a local machine instead
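The receive-and-delete step on the Pi might look like this sketch. It uses the boto3 SQS client's `receive_message` and `delete_message` calls; the queue URL and the `poll_once`/`main` helpers are hypothetical. Deleting a message only after the handler returns means that a message which fails to process becomes visible again after the queue's visibility timeout, instead of being lost.

```python
def poll_once(sqs, queue_url, handle, wait_seconds=20):
    """Long-poll the queue once, pass each message body to `handle`,
    and delete each message only after it was handled successfully."""
    resp = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,       # SQS returns at most 10 per call
        WaitTimeSeconds=wait_seconds,  # long polling, at most 20 s
    )
    handled = 0
    for msg in resp.get("Messages", []):  # key is absent when the queue is empty
        handle(msg["Body"])
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=msg["ReceiptHandle"])
        handled += 1
    return handled


def main(queue_url):
    """Hypothetical entry point for the Pi; requires AWS credentials."""
    import boto3
    client = boto3.client("sqs")
    while True:
        poll_once(client, queue_url, print)
```

Passing the client in as a parameter also lets the polling logic be exercised with a fake client on a local machine before running it on the Pi.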

Problems: Refer to my personal update.

Next week:

- prepare for interim demo on Wednesday

- deploy current implementation to EC2

- clear and prepare sample alarm and schedule data

- continue testing communication between Pi and web app

- test code snippet on Pi

- handle connection errors gracefully, especially for receiving messages

- support Echo and Peizhi in the integration of the code snippet into the main program running on Pi

- figure out how to host the web app with minimal downtime, and give Echo and Peizhi secure access to EC2, MongoDB and SQS for easier development and debugging
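One common way to handle connection errors gracefully is to retry with exponential backoff and jitter. The sketch below is generic: `with_retries` is a hypothetical helper, and with boto3 you would pass botocore's connection exception classes (for example `botocore.exceptions.EndpointConnectionError`) in `retry_on` rather than relying only on Python's built-in `ConnectionError`.

```python
import random
import time


def with_retries(fn, attempts=5, base_delay=1.0, max_delay=30.0,
                 retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call fn(); on a connection-type error, wait and retry with exponential
    backoff plus random jitter. Re-raises the last error after `attempts` tries."""
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)  # 1 s, 2 s, 4 s, ...
            sleep(delay + random.uniform(0, delay / 2))        # jitter spreads retries
```

Wrapping the message-receiving call this way keeps a brief network drop on the Pi from crashing the main program.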

I am on schedule. For the plans for the next few weeks, refer to my personal update.

Peizhi Yu’s Status Update for 11/7

This week, we mainly worked on: 1. mounting the hardware pieces together; 2. implementing the stop action for the robot; 3. adjusting the size of the camera display on the LCD screen; 4. fixing the sensor data error we encountered last week.

We started by adding heatsinks to the Raspberry Pi and putting it into a case. One mistake we made was with the power pack: the one we ordered was not what we expected, so we need to reorder, which put us slightly behind our intended schedule. (Without the power pack, the Raspberry Pi cannot be fully mounted on top of the robot, but everything else is in place by now.)

Next, we spent a long time implementing the final stop action for the robot, which took longer than we had planned. When the robot hits a wall, it should turn off. The robot's sensor packet includes a “wall detection signal”, which we thought could be used to detect the wall accurately, but this built-in signal does not work as expected. So we had to find a way for the robot to distinguish between an obstacle and a wall: if an obstacle is detected, the robot should rotate until the obstacle is out of view and then continue moving; if a wall is encountered, the robot should shut down. By looking at the sensor values returned from the robot, we found that when a wall is detected, 4 of the 6 sensor values are greater than 100. The best approach was to take the median of all 6 sensor values: if it is greater than 50, we say that a wall is detected.

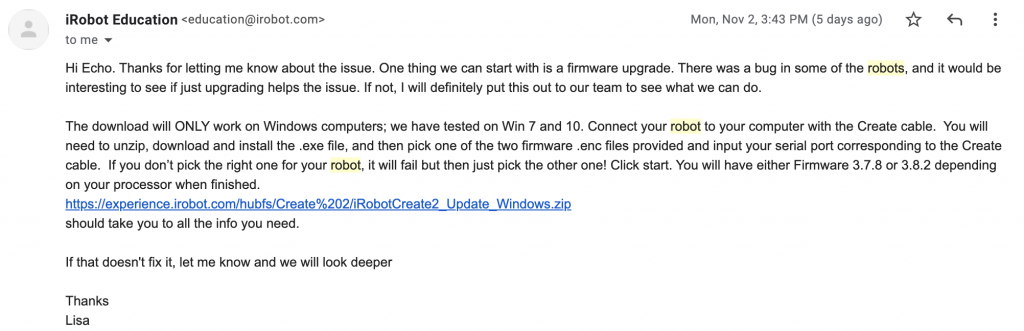
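The median rule takes only a few lines. This sketch assumes the six sensor readings arrive as a list of numbers; `is_wall` and the constant name are hypothetical, while the threshold of 50 comes from the report. The median of six values is the mean of the two middle ones, so it stays low when only one or two sensors see a small obstacle, but exceeds 100 whenever 4 of the 6 sensors read above 100.

```python
from statistics import median

WALL_MEDIAN_THRESHOLD = 50  # from the report: median > 50 means "wall"


def is_wall(readings):
    """Classify six front sensor readings as a wall (True) or not (False)."""
    if len(readings) != 6:
        raise ValueError("expected 6 sensor readings")
    return median(readings) > WALL_MEDIAN_THRESHOLD
```

For example, `[120, 130, 110, 140, 10, 5]` has a median of 115 and is classified as a wall, while a narrow obstacle giving `[0, 0, 200, 180, 0, 0]` has a median of 0.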

Last week's problem was that if we called the get_sensor function too often, the robot sometimes blew up and returned unintended values, which messed up our entire program. We solved this problem by contacting iRobot Create 2's technical support. We followed the instructions they provided, and our robot seems to be working fine now.

Next week, we will have the robot fully assembled, with the Raspberry Pi mounted on top, giving our product a cleaner and more elegant look. We will also coordinate with Yuhan on connecting the Raspberry Pi to her website, so that users can set the alarm time and the Pi can download the user-specified ringtone.

Peizhi’s Status Report for 10/31

Hardware portion almost finished:

After replacing our broken webcam with a USB camera, we continued testing. This week, we combined our obstacle-avoidance algorithm with our human-avoidance algorithm. Our alarm clock robot is now fully functional and meets all of our requirements.

The complete algorithm is as follows: in its idle stage, the robot rotates in place while playing the song, as long as no person is detected through the camera. As soon as a person is detected, the robot starts moving away from him/her while trying to keep the person in the middle of the camera frame. If obstacles are encountered, however, the robot immediately rotates in place and moves in a direction where its sensors detect no obstacles. From that point on, the robot looks at its camera again to find the person. If the person is still in view, it performs the action above; if not, it rotates in place until it finds a person again. In other words, avoiding obstacles takes priority over avoiding the user. The “finish action/alarm turn-off action” will be done next week: when the robot runs into a wall, the entire program finishes.

One problem we encountered is that the distance readings from the iRobot Create 2's sensors, which we use to detect nearby obstacles, sometimes blow up to completely inaccurate numbers. When that happens, our program runs into undefined behavior and crashes. We have not yet found a solution to this problem.

(demo: avoiding both humans and obstacles)

(demo: avoiding humans only)
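The priority order described above (wall, then obstacle, then person, then idle search) can be expressed as one decision function called once per control step. This is a sketch under assumptions: the `Action` names, the horizontal `person_x` coordinate and the 10% dead zone around the frame center are hypothetical, the wall stop is the part still planned for next week, and mapping a person on the left to a forward-left move follows the example given in the 10/17 report.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    STOP = "stop"                  # wall reached: alarm turned off
    AVOID_OBSTACLE = "avoid"       # rotate toward a direction with no sensor hits
    FLEE_LEFT = "flee_left"        # forward left, keeping the person in view
    FLEE_RIGHT = "flee_right"      # forward right, keeping the person in view
    FLEE_STRAIGHT = "flee_straight"
    SEARCH = "search"              # rotate in place looking for a person


def choose_action(wall: bool, obstacle: bool, person_x: Optional[float],
                  frame_width: int, dead_zone: float = 0.1) -> Action:
    """Pick one action per control step, highest priority first."""
    if wall:
        return Action.STOP
    if obstacle:                   # obstacles take priority over the user
        return Action.AVOID_OBSTACLE
    if person_x is None:           # nobody in view: idle rotation
        return Action.SEARCH
    offset = (person_x - frame_width / 2) / frame_width  # range -0.5 .. 0.5
    if offset < -dead_zone:
        return Action.FLEE_LEFT
    if offset > dead_zone:
        return Action.FLEE_RIGHT
    return Action.FLEE_STRAIGHT
```

Checking the conditions in a fixed order makes the priority explicit: a new camera frame can never override an obstacle reading in the same step.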

Integration with Webapp:

We are now starting to work on sockets and on how the Raspberry Pi communicates with the web app on Yuhan's website. Next week, we will work on letting the web app turn our program on and off, and on how the web app sends the ringtone and alarm time to the Raspberry Pi.

Team Status Update for 10/24

Peizhi & Echo

We are about 1 week ahead of our schedule.

This week:

- Obstacle detection & reaction

- Rotation when idle/lost track

Next week:

- Diagnose the issue with the Pi camera (likely burnt out) and purchase a new one if necessary

- Purchase a Raspberry Pi tool kit and mount the Pi + camera on the robotic base

- Integrate the obstacle detection script with our main Computer Vision script

Yuhan

My schedule is unchanged and posted in my individual status update.

There is no significant change to the web app system design either.

Refer to my individual update for screenshots of the web app.

The current priority and risk is integrating the Pi with the web app, which I will focus on from next week onwards. To mitigate this risk, my plan is to go through the following test cases:

- send data for one alarm to the Pi, and check:

  - whether the robot base can be activated within a reasonable latency, i.e. in real time;

  - whether the robot base plays the ringtone sequence correctly.

- schedule one alarm, and check whether the robot base is activated at the scheduled time;

- schedule 3 alarms in a row;

- schedule one alarm 3 times;

- schedule one alarm with a specified interval, and check whether the robot is activated repeatedly and reliably.

Peizhi Yu’s Status Report 10/17

Computer Vision: No change; the Raspberry Pi consistently runs at 10 fps during testing.

Hardware & Communication between raspberry pi and Robot base:

As mentioned in last week’s report, since we found that multithreading does not work on the Raspberry Pi 3, we decided to use a counter to keep track of time. At first, the robot does not move while it is in the idle stage. When it first detects a person in the camera, it starts the move-away action by comparing the person’s position with the center of the camera frame. If the person is on the right side, it goes forward right, so that the camera keeps the person locked in its view while running away; if the person is on the left, it goes forward left. We use a counter in this process: while the robot is performing such a move action, it does not accept new instructions from the Raspberry Pi. (For example, if the person first appears on the left side of the camera and suddenly jumps to the right side in the next frame, the robot will still only perform the forward-left action.) We currently set the counter to 5, which means the robot receives new instructions every 0.5 seconds. The one concern is that the robot now pauses briefly every 0.5 seconds, which is the time it takes to process new instructions. Although this does not cause any issues for now, the pause is quite noticeable and a little distracting.
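The counter scheme can be sketched as a throttle that lets the vision loop propose a command every frame but only passes a new one to the robot every `period` frames. `CommandThrottle` is a hypothetical name; the period of 5 and the frame rate of about 10 fps come from this report, which is where the 0.5 s figure comes from (5 frames / 10 fps).

```python
class CommandThrottle:
    """Accept a new drive command only once every `period` frames; in
    between, keep returning the command already in progress."""

    def __init__(self, period: int = 5):
        self.period = period
        self.counter = 0
        self.current = None

    def update(self, proposed):
        """Call once per camera frame with the command the vision code wants.
        Returns the command the robot should be executing."""
        if self.counter == 0:
            self.current = proposed  # new instruction accepted on this frame
        self.counter = (self.counter + 1) % self.period
        return self.current
```

With `period=5`, a sequence of proposals `left, right, right, right, right, right, left` yields `left` for the first five frames and only switches to `right` on the sixth, which is the behavior described in the example above.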

We also made sure that the robot can keep playing songs while moving, using its built-in function call bot.playSong().

We are now starting to look into how the robot base’s sensors can be used to detect obstacles and walls. The problem we currently face is that when the robot encounters an obstacle, it turns and moves in another direction, yet we need to make sure that after it turns, the camera still stays locked onto the person. We have not yet figured out a way to make the robot both avoid obstacles and move away from the person.

Schedule

We are still ahead of our original schedule, which leaves room for unexpected difficulties.