
Yuhan Xiao’s Status Update for 12/5

Over Thanksgiving break I decided to keep using NoSQL instead of switching to a SQL database. The advantage of NoSQL is that it leaves room for future development. For example, we may want to create a “big ringtone”: one long ringtone consisting of 2-4 original ringtones (a single original ringtone is limited to 16 notes by the Create 2 Open Interface). That would be much harder with a fixed data schema. The dynamic schema also lets us change direction during development. Besides, we don’t need any advanced filtering or querying: the most “advanced” query is probably filtering alarms by user ID to get a user’s private alarms. Everything else is usually just “get all ringtones” or “get all alarms”.
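To make the schema argument concrete, here is a minimal sketch using plain Python dictionaries as stand-ins for NoSQL documents. All field names (`notes`, `parts`, `user_id`) and the helper functions are hypothetical illustrations, not our actual schema or code.

```python
# Hypothetical documents; field names are illustrative, not the real schema.
MAX_NOTES = 16  # per-ringtone note limit from the Create 2 Open Interface

# An original ringtone stores its notes directly.
ringtone = {"_id": "r1", "name": "Morning", "notes": [[60, 16], [62, 16], [64, 32]]}

# A "big ringtone" can live in the same collection with a different shape:
# it references 2-4 original ringtones instead of storing notes itself.
big_ringtone = {"_id": "b1", "name": "Long Morning", "parts": ["r1", "r2", "r3"]}

alarms = [
    {"_id": "a1", "user_id": "yuhan", "time": "07:00", "ringtone": "r1"},
    {"_id": "a2", "user_id": "echo", "time": "08:30", "ringtone": "b1"},
    {"_id": "a3", "user_id": "yuhan", "time": "09:15", "ringtone": "b1"},
]


def is_valid_ringtone(doc):
    """Check either document shape without requiring a fixed schema."""
    if "parts" in doc:
        return 2 <= len(doc["parts"]) <= 4
    return 1 <= len(doc.get("notes", [])) <= MAX_NOTES


def alarms_for_user(all_alarms, user_id):
    """The most 'advanced' query we need: filter alarms by user ID."""
    return [a for a in all_alarms if a.get("user_id") == user_id]
```

With a fixed relational schema, the `parts` field would likely require a schema migration or an extra join table. Here it is just another key on the document.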

Deliverables completed this week:

- continued testing communication between Pi and web app

- implemented a logging feature so I can keep track of the messages sent from the web app to the Pi

- implemented user login

- users can now view only their own private alarms; for ringtones, they can view all public ringtones plus their own private ones

- implemented alarm deletion

- tested by validating the logs after manually creating and deleting alarms

- implemented ringtone deletion

- deployed website using pm2

- live at http://ec2-3-129-61-132.us-east-2.compute.amazonaws.com:4000/
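The ringtone visibility rule from the list above (public ringtones plus the user's own private ones) can be sketched as a single filter. This is an illustrative Python sketch. The `owner` and `public` fields are assumed names, and the real web app may implement the check differently.

```python
def visible_ringtones(ringtones, user_id):
    """Return ringtones that are public or owned by the given user.

    'owner' and 'public' are hypothetical field names for illustration.
    """
    return [r for r in ringtones if r["public"] or r["owner"] == user_id]


sample_ringtones = [
    {"name": "Default", "owner": "echo", "public": True},
    {"name": "Secret", "owner": "echo", "public": False},
    {"name": "Mine", "owner": "yuhan", "public": False},
]
```

For example, `visible_ringtones(sample_ringtones, "yuhan")` keeps "Default" and "Mine" but hides Echo's private "Secret".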

Next Week:

- complete final video + report

- minor UI/UX touch-ups

Echo Gao’s Status Update for 12/5

This week, we did our last in-class demo and finished testing. We got the following results against our metrics:

- Delay from the web app sending a signal to the Raspberry Pi receiving it < 1s: We tested this latency by setting an alarm for 1:00 at 12:59:59 and checking whether the message was received by the Raspberry Pi within the next second.

- Delay from the robot receiving the message to the robot activating < 5s: Initializing the camera stream and CV algorithm on the Raspberry Pi takes around 8.3s, which is above this target.

- Delay from facial recognition to chase starting < 0.25s: Achieved

- Fast image processing: ML pipeline FPS > 10: Achieved around 13 FPS running our algorithm on our Raspberry Pi.

- Accurate human detection: false positive: Never occurs during testing.

- Accurate human detection: false negative: Occurs under poor lighting conditions.

- Effective chase duration > 30s, chase overall linear distance > 5m: Achieved.
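One simple way to obtain an FPS figure like the one in the list above is to time a fixed number of pipeline iterations. The sketch below assumes a hypothetical `process_frame` callable that runs one capture-plus-detection step. It is not necessarily the measurement code we used.

```python
import time


def measure_fps(process_frame, num_frames=50):
    """Run process_frame num_frames times and return average frames per second.

    process_frame is a hypothetical zero-argument callable standing in for
    one iteration of the camera + detection pipeline.
    """
    start = time.perf_counter()
    for _ in range(num_frames):
        process_frame()
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        return float("inf")  # guard against a zero-length measurement
    return num_frames / elapsed
```

Averaging over many frames smooths out per-frame jitter, which matters on a Raspberry Pi where individual frame times can vary.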

We also decided on our schedule and division of work for the final video, and filmed many runs of interaction with the robot.

Next Week:

Finish the final video and start writing the final paper.

Peizhi Yu’s Status Update for 11/7

This week, we mainly worked on: 1. mounting the hardware pieces together; 2. implementing the stop action for the robot; 3. adjusting the size of the camera display on the LCD screen; 4. fixing the sensor data error we encountered last week.

We started off by adding a heat sink to the Raspberry Pi and putting it into a case. One mistake we made was that the power pack we ordered turned out not to be what we expected, so we need to order a new one, which put us a bit behind our intended schedule. (Without the power pack, the Raspberry Pi cannot be fully mounted on top of the robot, but everything else is in place by now.)

Next, we spent a long time implementing the final stop action for the robot, which we had not planned for. The idea is that when the robot hits a wall, it should turn off. There is a “wall detection signal” in the robot's sensor packet, which we thought could be used to accurately detect the wall, yet that built-in function does not work as expected. So we needed to figure out a way to make the robot distinguish between an obstacle and a wall. If an obstacle is detected, the robot should rotate until the obstacle is out of its view and then continue moving. If a wall is encountered, the robot should shut down. By looking at the sensor values returned by the robot, we found that when a wall is detected, 4 out of the 6 sensor values are greater than 100. The best approach was to take the median of all 6 sensor values: if this number is greater than 50, we say that a wall is detected.

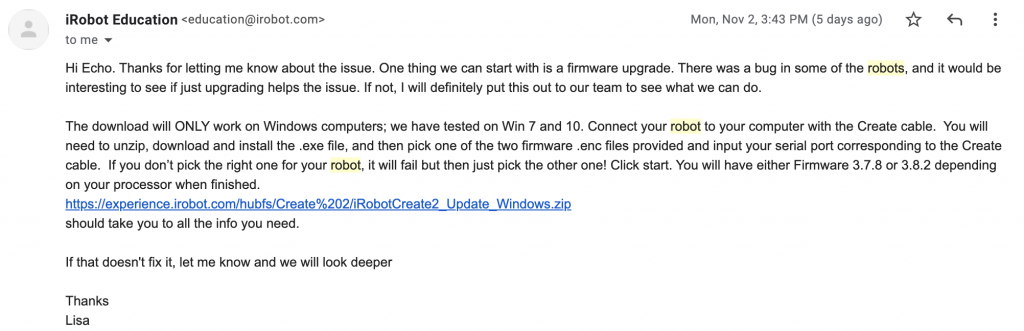
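The median rule for telling a wall from an obstacle can be sketched in a few lines of Python. The function name and the sample readings are made up for illustration, and this is not our exact robot code. Only the 6-sensor median and the threshold of 50 come from the description in this post.

```python
from statistics import median

WALL_THRESHOLD = 50  # threshold on the median of the 6 sensor values
NUM_SENSORS = 6


def is_wall(sensor_values):
    """Return True if the median of the 6 sensor readings suggests a wall.

    A single obstacle usually raises only one or two readings, so the
    median stays low. A wall raises most of them, so the median is high.
    """
    if len(sensor_values) != NUM_SENSORS:
        raise ValueError("expected exactly 6 sensor values")
    return median(sensor_values) > WALL_THRESHOLD


# Illustrative readings only (not measured data).
wall_like = [120, 130, 110, 105, 20, 10]
obstacle_like = [150, 10, 5, 0, 3, 8]
```

With six values the median is the average of the third and fourth smallest readings. If 4 of the 6 readings exceed 100, both of those readings exceed 100, so the median is comfortably above the threshold of 50.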

Last week, the problem we encountered was that calling the get_sensor function too frequently sometimes made the robot misbehave and return unintended values, which broke our entire program. We solved this by contacting iRobot Create 2 technical support and following the instructions they provided. Our robot seems to be working fine now.

Next week, we will have the robot fully assembled, with the Raspberry Pi mounted on top, to give our product a cleaner and more elegant look. We will also coordinate with Yuhan on connecting the Raspberry Pi to her website to set up alarm times and download user-specified ringtones.