Computer Vision: No change; the Raspberry Pi consistently runs at 10 fps in all of our tests.

Hardware & Communication between Raspberry Pi and Robot base:

As mentioned in last week’s report, we found that multithreading does not work on the Raspberry Pi 3, so we decided to use a frame counter to keep track of time instead. The robot stays still in its initial idle state. When it first detects a person in the camera, it starts the move-away action by comparing the person’s position with the center of the camera frame: if the person is on the right side, it moves forward to the right, and if the person is on the left side, it moves forward to the left. This way the camera keeps the person in view while the robot runs away.

The counter controls how often the robot accepts new instructions. While the robot is performing a move action, it does not receive any new instructions from the Raspberry Pi. For example, if the person first appears on the left side of the camera and suddenly jumps to the right side in the next frame, the robot still performs only the forward-left action. We currently set the counter to 5 frames; since the Raspberry Pi processes about 10 frames per second, the robot receives a new instruction every 0.5 seconds. The one remaining concern is that the robot now pauses briefly every 0.5 seconds, which is the time it takes to process a new instruction. The pause does not cause any problems so far, but it is quite noticeable and a little distracting.
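The counter-based gating described above can be sketched as follows. This is a minimal sketch, not our actual code: it assumes a detector that reports the person's horizontal pixel position once per processed frame at about 10 fps, and `choose_action`, `ActionGate`, and the command strings `"forward_left"`/`"forward_right"` are hypothetical names. Sending the command to the robot base is left out.

```python
from typing import Optional

FRAME_RATE_FPS = 10  # frame rate measured on the Raspberry Pi
HOLD_FRAMES = 5      # counter value: 5 frames / 10 fps = 0.5 s per instruction


def choose_action(person_x: float, frame_width: int) -> str:
    """Pick a move-away direction by comparing the person's x-position
    with the horizontal center of the frame (hypothetical command names)."""
    center = frame_width / 2
    # Person on the left half -> forward_left; otherwise forward_right.
    # Assumption: a person exactly at the center is treated as "right".
    return "forward_left" if person_x < center else "forward_right"


class ActionGate:
    """Frame counter that locks in one command for `hold_frames` frames."""

    def __init__(self, hold_frames: int = HOLD_FRAMES) -> None:
        self.hold_frames = hold_frames
        self.remaining = 0                   # frames left in the current move
        self.current: Optional[str] = None   # None means idle

    def step(self, person_x: Optional[float], frame_width: int) -> Optional[str]:
        """Call once per processed frame; returns the command in effect."""
        if self.remaining > 0:
            # A move is in progress: ignore new detections until it finishes.
            self.remaining -= 1
            return self.current
        if person_x is None:
            # Assumption: with no person in view, stay (or return to) idle.
            self.current = None
            return None
        # Accept a new instruction and hold it for hold_frames frames total.
        self.current = choose_action(person_x, frame_width)
        self.remaining = self.hold_frames - 1
        return self.current


# Usage: the person starts on the left, then jumps to the right.
gate = ActionGate()
xs = [100, 500, 500, 500, 500, 500]
print([gate.step(x, 640) for x in xs])
# -> ['forward_left', 'forward_left', 'forward_left', 'forward_left', 'forward_left', 'forward_right']
```

Because the gate counts processed frames rather than wall-clock time, it needs no extra thread; the trade-off is that the 0.5 s interval stretches or shrinks if the frame rate drifts away from 10 fps.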

We also verified that the robot can keep playing songs while moving, using its built-in function call `bot.playSong()`.

We are now starting to look into how the Robot base sensors can be used to detect obstacles and walls. The problem we currently face is that when the robot encounters an obstacle, it turns and moves in another direction, yet we need to make sure that the camera stays locked on the person after the turn. So far, we have not found a way to make the robot both avoid obstacles and keep moving away from the person.

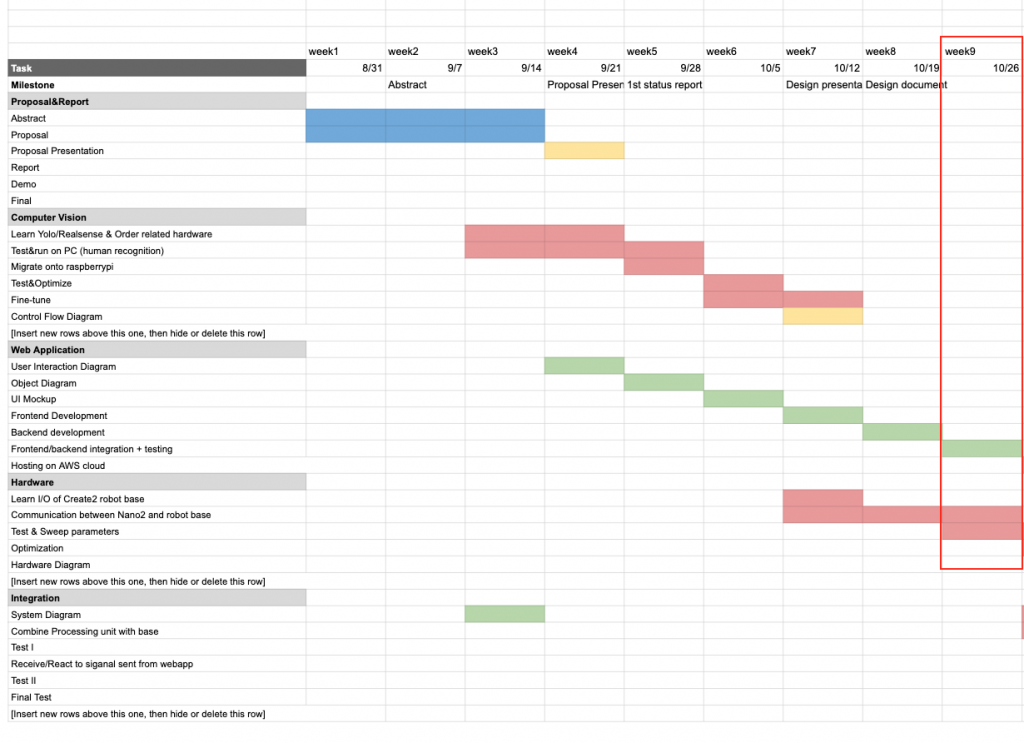

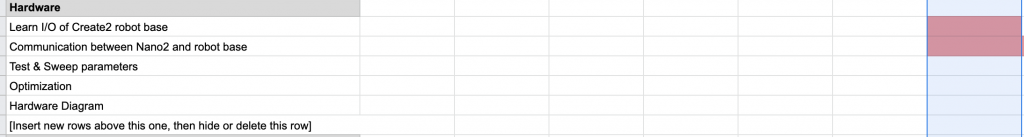

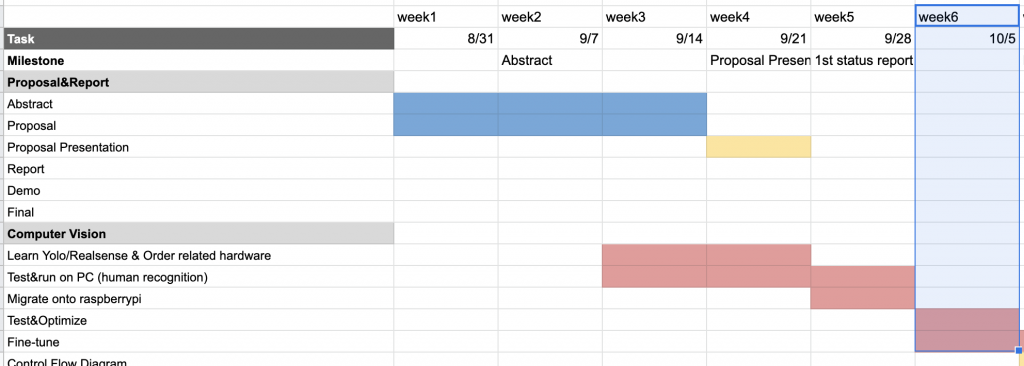

Schedule

We are still ahead of our original schedule, which leaves room for unexpected difficulties.