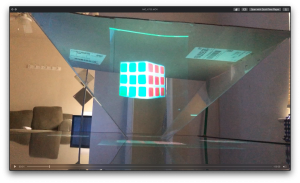

This week, our team gathered at my place to work on the project together. We worked on integrating the entire pipeline, from the camera output to the display. Breyden and I started by setting up the TV and the pyramid together. Because it is a 55-inch TV, this setup required two people.

I worked on adapting the studio to work with four cameras. As mentioned in the team's status report, we ran into wire-length constraints, so we decided to put the FPGA inside our studio. To make room for it, I cut out a platform and placed it on top of the FPGA. I also cut the felt that covers the walls. Each piece had to be sized so that it did not cover the LEDs running along the sides of the studio. We tried four felt colors (black, blue, light green, and dark green), and each color needed five sheets: four for the walls and one for the bottom, for 20 sheets in total. Each sheet also needed a hole for a camera lens to fit through. Grace helped me cut the felt. Inserting the felt into the studio also took several iterations. At first we did not realize that the felt should not fully cover the LED lights, because covering them made the light too diffused, so we had to adjust it.

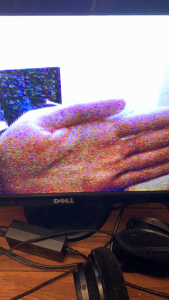

I also cut holes in the studio for routing wires, as well as holes for the four cameras and their cables. I cut directly into the studio and measured the height of each hole so that all the cameras would sit at roughly the same level.

The studio lighting also needed adjustment. I helped cut strips of felt to diffuse specific sections of the LED strip. This took several iterations, since we had to figure out which configuration made the video feed look best.

When we filmed some of the shots, I helped with the setup, cleared out space for the camera, and assisted Breyden with whatever he needed while filming. I also gave feedback on shots we might want to film, and I documented our progress with pictures as we worked.

I printed out the MTF test card so that we could test the setup. This test measures the distortion introduced by the pyramid, and because we already had the card printed, we were able to run it right away.

We are on track with our tasks. We just need to finish testing and put together the video, which is what I will be working on next week.