At the beginning of the week, we worked together on the final presentation slides.

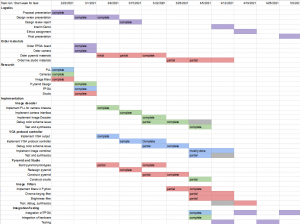

Later this week, we worked on integrating the studio with the four cameras and outputting to the TV on which the pyramid sits. We met as a team at Jullia’s house to work on this. We spent some time mounting the four cameras to the sides of the studio. Because longer wires pick up more electrical noise, and our cameras are especially sensitive to it, the wires must be as short as possible. To keep them short, we placed our FPGA and the Arduino at the bottom inside the studio and added a cardboard platform on top, on which the object is placed. We also cut holes in the studio so that we can reach the FPGA's switches, which let us adjust the chroma key color (discussed later in this status report). These design changes improve our project and do not affect its cost.

We spent the bulk of our time adjusting the cameras' white balance and auto-exposure, as well as the studio lighting, so that the output on each panel of the pyramid looks as similar as possible. This involved swapping out a couple of cameras, since individual cameras vary slightly in sensitivity. We also compared LED strips along the side walls against point lighting. We found that point lighting creates shadow gradients, especially around the object. These shadows make the background extremely difficult to remove, so point lighting is not suitable for our project.

Furthermore, we spent significant time tuning our chroma key to find the best background color. We experimented with several colors of felt for the walls and the platform, trying and comparing dark green, light green, blue, and black, to get the chroma key to remove nearly all of the background. We prepared multiple felt sheets cut to fit the studio, and then adjusted the chroma key to match the color as it appears on the cameras inside the studio under the studio lighting. We adjusted the lighting by dimming the studio overall and by dimming or diffusing specific LEDs on the strip.
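To illustrate why the key color has to match what the camera actually sees rather than the nominal felt color, here is a minimal software sketch of distance-based chroma keying in Python. It is not the FPGA design itself: the function names, the RGB tuple format, the Euclidean-distance test, and the `threshold` parameter are all illustrative assumptions. Pixels within `threshold` of the key color are replaced with black, which we assume shows up as empty space on the pyramid.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def is_background(pixel: Pixel, key: Pixel, threshold: int) -> bool:
    """True if the pixel is within `threshold` of the key color in RGB space."""
    # Compare squared distances to avoid a square root
    dist_sq = sum((p - k) ** 2 for p, k in zip(pixel, key))
    return dist_sq <= threshold * threshold


def chroma_key(frame: List[List[Pixel]], key: Pixel, threshold: int,
               fill: Pixel = (0, 0, 0)) -> List[List[Pixel]]:
    """Replace background pixels with `fill` (black by default) and keep the rest."""
    return [[fill if is_background(px, key, threshold) else px for px in row]
            for row in frame]


if __name__ == "__main__":
    # Hypothetical key: the felt color as measured on camera under studio lighting
    key = (25, 115, 35)
    frame = [[(20, 120, 30), (200, 180, 160)]]  # one felt pixel, one object pixel
    print(chroma_key(frame, key, threshold=40))
    # [[(0, 0, 0), (200, 180, 160)]]
```

A threshold that is too small leaves patches of unevenly lit felt, while one that is too large starts removing parts of the object; this trade-off is one reason the shadow gradients from point lighting are hard to key out.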

We also started testing our HoloPyramid. We measured a delay of about 22 ms between real-time action and the display, far below the 250 ms maximum set by our initial requirement. This latency is low enough to be imperceptible, so viewers perceive the display as real time.
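To put the 22 ms figure in context, the arithmetic below converts a latency into displayed frames and checks it against the 250 ms requirement. The 60 Hz refresh rate is an assumption (a common TV rate), not a measured property of our TV, and the function names are hypothetical.

```python
def frames_of_delay(latency_ms: float, refresh_hz: float) -> float:
    """Convert a latency in milliseconds into a number of displayed frames."""
    frame_period_ms = 1000.0 / refresh_hz  # time one frame stays on screen
    return latency_ms / frame_period_ms


def meets_requirement(measured_ms: float, required_ms: float) -> bool:
    """True if the measured latency is within the required maximum."""
    return measured_ms <= required_ms


if __name__ == "__main__":
    MEASURED_MS = 22.0    # measured HoloPyramid latency
    REQUIRED_MS = 250.0   # initial latency requirement
    ASSUMED_HZ = 60.0     # assumption: a typical TV refresh rate

    print(f"{frames_of_delay(MEASURED_MS, ASSUMED_HZ):.2f} frames")  # 1.32 frames
    print(meets_requirement(MEASURED_MS, REQUIRED_MS))               # True
    print(f"{REQUIRED_MS / MEASURED_MS:.1f}x margin")                # 11.4x margin
```

Under the assumed 60 Hz refresh rate, 22 ms amounts to a little over one frame of delay.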

Our most significant risk is that the cameras have limited dynamic range. When a bright object is placed against a darker background, the camera struggles to auto-expose, so the object is shown with incorrect exposure. To mitigate this risk, we used brighter backgrounds and experimented with the lighting. Another risk is that the wires are very sensitive, and the connection between the cameras and the GPIO pins is not very reliable. When the wires come loose, noise appears on the output and degrades our video feed. To mitigate this risk, whenever noise occurs, we reach into the bottom of the studio, where the FPGA sits, and tighten the wire connections using tweezers.

We also started filming footage of our current project for the final demos.

Images of our project can be found at this link (we ran out of storage space on our website).

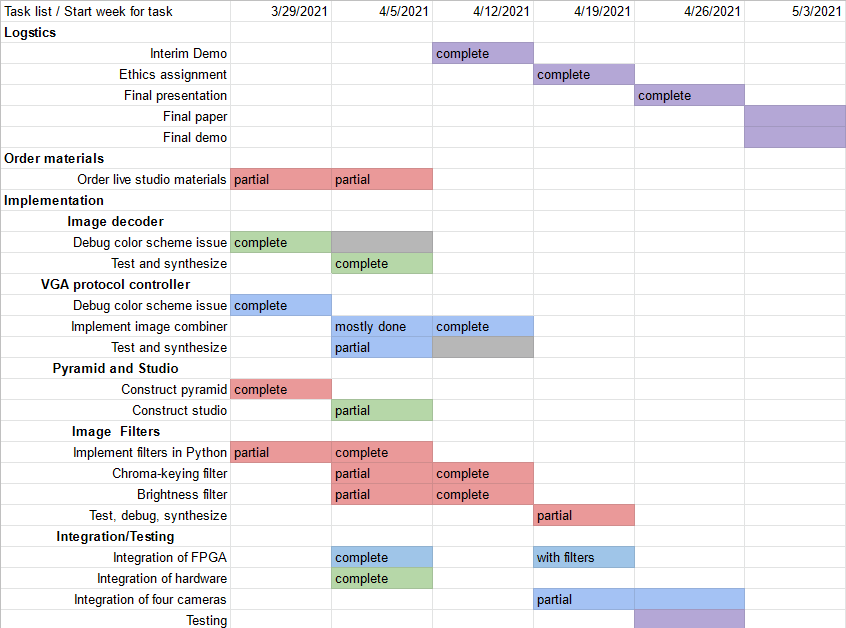

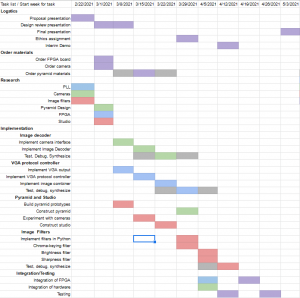

Our Gantt chart can be seen here. Our schedule has not changed; however, we added some tasks at the end to reflect our current integration and testing work in greater detail.