We achieved many milestones in these two weeks:

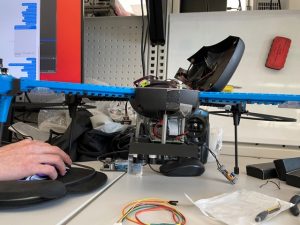

- Successfully conducted manual test flights with a remote control. This means we fixed our connection issues with the drone.

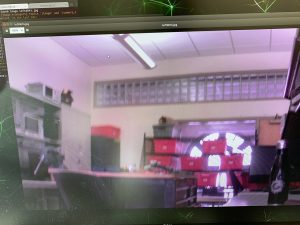

- Made automated scripts that launch on startup for the Raspberry Pi. These automatically start a video stream and publish images over ROS, and shut the video stream down when they receive a simple ROS message.
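The stop-on-message behavior can be sketched roughly as follows. This is a minimal illustration under stated assumptions rather than the actual startup script: the `StreamController` class, the `"stop"` command string, and the sleeping placeholder process are all hypothetical. On the real system the handler would presumably be called from a ROS subscriber callback, and the child process would be the camera streaming command.

```python
import subprocess
import sys


class StreamController:
    """Starts a streaming process and stops it when a stop command arrives."""

    def __init__(self, command):
        self.command = command  # argv list for the streaming program
        self.process = None

    def start(self):
        # Launch the stream only if it is not already running.
        if not self.is_running():
            self.process = subprocess.Popen(self.command)

    def is_running(self):
        # poll() returns None while the child process is still alive.
        return self.process is not None and self.process.poll() is None

    def on_command(self, text):
        # Hypothetical message handler: in a ROS node this would be invoked
        # from a subscriber callback with the message payload.
        if text.strip().lower() == "stop" and self.is_running():
            self.process.terminate()
            self.process.wait(timeout=5)


# Placeholder for the real streaming command (assumption): a child process
# that simply sleeps, so the sketch runs without a camera attached.
PLACEHOLDER_STREAM = [sys.executable, "-c", "import time; time.sleep(60)"]
```

Keeping the process handle inside one object means the same callback can later be extended with a restart command without touching the startup logic.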

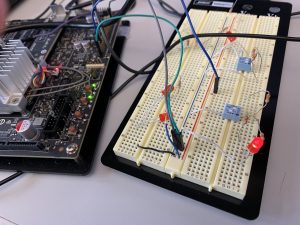

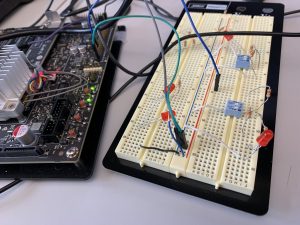

- Created a draft user interface with buttons on a breadboard to change drone flight modes. This involves programming interrupts on the Jetson TX1 that trigger sending ROS messages to the drone.

- Tested a new camera, which finally works as expected, and calibrated both the color filters for target detection and the intrinsic parameters with a chessboard.

- Implemented two different motion planners and verified their performance in simulation.

- Successfully controlled the drone entirely from code today. The code only told the drone to fly up to a certain height, but this means we fixed all the other communication issues and verified that we can both stream commands to the drone and receive pose updates from it fast enough.
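One simple way to check whether a message stream is "fast enough" is to look at the longest gap between consecutive messages rather than the average rate. The sketch below is an illustration, not the check we actually ran: the function names are hypothetical, and the required rate is a parameter you would set from your flight controller's requirements.

```python
def worst_case_rate_hz(timestamps):
    """Return the lowest instantaneous rate (Hz) implied by message timestamps.

    `timestamps` is a list of arrival times in seconds, in increasing order.
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    # Time between each pair of consecutive messages.
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if min(gaps) <= 0:
        raise ValueError("timestamps must be strictly increasing")
    # The longest gap determines the worst-case rate.
    return 1.0 / max(gaps)


def is_fast_enough(timestamps, required_hz):
    """True if even the slowest stretch of the stream meets the required rate."""
    return worst_case_rate_hz(timestamps) >= required_hz
```

The same function can be applied to both directions: once to the timestamps of outgoing commands and once to the timestamps of incoming pose updates.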

TODOs:

- We need some more buttons and a longer cable connecting the TX1 to our user interface board. We then need to integrate this with sending ROS messages.

- Integrate motion planning with the drone.

- Test and verify 3D target estimation on the real system, not just simulation.