- Accomplished this week: This week I first worked on the UI with Adriana, then focused on tweaking the calibration and classification code for our device. For the UI, we first researched integrating a UI toolkit such as tkinter with our OpenCV code, but we realized this may be difficult. So we decided to make buttons by drawing a box and triggering an action when a click occurs inside it. We then designed our very simple UI flow. I also spent some time working on a friendly logo for our device. This is what we designed. Our flow starts at the top left box with our CarMa logo, then goes on to the calibration step below, and then tells the user the device is ready to begin.

Once we designed this, we coded up the connections between pages and connected the UI to our current calibration and main process code. Connecting the calibration took extra work because this step requires real-time video, whereas for the main process we are not planning on showing the user any video; we only sound the alert if required. After we completed the UI, I started working on tweaks to the calibration and classification code for the board. When we ran our code, some small things were off, such as the drawn red ellipse the user is attempting to align their face with. I adjusted the red ellipse dimensions and also made it easier for us to later change the x, y, w, h constants without going through the code to understand the values. Once I completed this, I started working on adjusting our classification to fit the board. I also started working on the final presentation PowerPoint slides.
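
The box-as-button idea can be sketched roughly as follows. This is a minimal illustration, not our actual UI code: the `Button` class and `make_mouse_handler` helper are hypothetical names, and the handler only mirrors the `(event, x, y, flags, param)` callback shape that `cv2.setMouseCallback` expects. The click event code is hard-coded so the sketch runs without OpenCV installed.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Assumed to match cv2.EVENT_LBUTTONDOWN; hard-coded so the sketch has no
# OpenCV dependency.
EVENT_LBUTTONDOWN = 1


@dataclass
class Button:
    """A rectangular on-screen button (hypothetical helper)."""
    label: str
    x: int  # left edge in pixels
    y: int  # top edge in pixels
    w: int  # width in pixels
    h: int  # height in pixels
    action: Callable[[], None]

    def contains(self, px: int, py: int) -> bool:
        """True when the point (px, py) falls inside the box."""
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


def make_mouse_handler(buttons: List[Button]):
    """Build a mouse callback that runs the action of the clicked button."""
    def on_mouse(event, x, y, flags, param) -> Optional[str]:
        if event != EVENT_LBUTTONDOWN:
            return None  # ignore mouse moves, releases, etc.
        for button in buttons:
            if button.contains(x, y):
                button.action()
                return button.label
        return None  # click landed outside every box
    return on_mouse
```

With OpenCV available, the returned handler would be registered on the window that shows the page, and each page of the flow would supply its own list of buttons.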

- Progress: I am currently ahead of our most recent schedule. We planned to have the UI completed this week. We completed this early and started working on auxiliary items.

- Next week I hope to complete the final presentation and potentially work on the thresholding. I suspect the thresholding may be the reason the eye direction classification is not yet accurate. We also need to complete a final demo video.

Jananni’s Status Report for 4/24

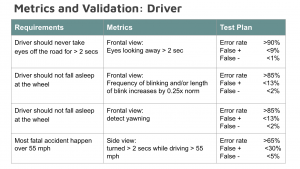

- This week I first worked on adding the classification rule that a driver whose eyes are pointed away from the road for over two seconds is classified as distracted. To do this, I take the computed direction values of the eyes, and if the eyes are pointed up, down, left or right for over two seconds, I send an alert. After committing this code, I worked on changing our verification and testing document. I wanted to incorporate the professor’s recommendation not to take averages of error rates, because this implies we have a lot more precision than we actually have. Instead I recorded the total number of events that occurred and the number of error events. Then I summed the error events across all actions and computed the percentage of total events. Now I am researching how to integrate a simple UI with what we currently have.

- I am currently on track with our schedule. We wrote out a complete schedule this week for the final weeks of school, including the final report and additional testing videos.

- This week I hope to finish implementing the UI for our project with Adriana.
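
The two-second distraction rule described above could look something like the sketch below. The class name, the `"forward"` label, and the choice to treat any switch between away directions (for example left to down) as one continuous look away are all assumptions for illustration, not our committed code.

```python
from typing import Optional

FORWARD = "forward"          # assumed label for eyes on the road
DISTRACTION_SECONDS = 2.0    # threshold from the report


class DistractionTimer:
    """Tracks how long the eyes have been pointed away from the road."""

    def __init__(self, threshold: float = DISTRACTION_SECONDS):
        self.threshold = threshold
        self._away_since: Optional[float] = None

    def update(self, direction: str, now: float) -> bool:
        """Feed one per-frame direction; return True when an alert is due."""
        if direction == FORWARD:
            self._away_since = None  # eyes back on the road, reset the timer
            return False
        if self._away_since is None:
            self._away_since = now   # first frame of this look away
        return (now - self._away_since) > self.threshold
```

Passing the timestamp in as `now` (rather than reading the clock inside the class) keeps the rule easy to test against recorded videos.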

Jananni’s Status Report for 4/10

- This week I worked on finishing up integration with the main process of our project. Once that was completed, I worked on getting more videos for our test suite of various people with various face shapes. Finally, I started researching how to optimize our code for the GPU. This will ideally bring our frame rate above the 5 frames per second in our original requirement. I also worked on putting together material for our demo next week. Here are some important links I have been reading regarding optimizing our code specifically for the GPU: https://forums.developer.nvidia.com/t/how-to-increase-fps-in-nvidia-xavier/80507, and more. The article (similar to many others) talks about using jetson_clocks and changing the power management profiles. Changing the power management profile is meant to maximize performance at the cost of higher energy usage. Then jetson_clocks is used to set the CPU, GPU and EMC clocks to their maximum frequencies, which should ideally improve the frames per second in our testing.

- Currently as a team we are a little ahead of schedule, so we revised our weekly goals and I am on track for those updated goals. I am working with Adriana to improve our optimization.

- By next week, I plan to finish optimizing the code and start working on our stretch goal. After talking to the professor, we are deciding between working on pose detection and lane change detection using the accelerometer.
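
To check whether a change actually moves us past the 5 frames per second requirement, a rolling frame-rate estimate like the one below can be called once per processed frame. This is a hedged sketch; `FpsMeter` is a hypothetical helper and the 30-frame window is an arbitrary choice.

```python
import time
from collections import deque


class FpsMeter:
    """Rolling frames-per-second estimate over the last `window` frames."""

    def __init__(self, window: int = 30):
        self._stamps = deque(maxlen=window)

    def tick(self, now=None) -> float:
        """Record one frame and return the current FPS estimate."""
        self._stamps.append(time.perf_counter() if now is None else now)
        if len(self._stamps) < 2:
            return 0.0  # not enough frames to measure an interval yet
        elapsed = self._stamps[-1] - self._stamps[0]
        # N timestamps span N - 1 frame intervals.
        return (len(self._stamps) - 1) / elapsed if elapsed > 0 else 0.0
```

Calling `tick()` at the end of each loop iteration, before and after applying a clock or power profile change, gives directly comparable numbers.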

Jananni’s Status Report for 4/3

1. This week my primary goal was working through and fleshing out the calibration process, specifically making it more user friendly and putting the pieces together. I first spent some time figuring out the best way to interact with the user. Initially I thought tkinter would be easy and feasible. My initial approach was to have a button the user would click when they want to take their initial calibration pictures. But after some initial research and coding and a lot of bugs, I realized cv2 does not easily fit into tkinter. So instead I decided to work with just cv2 and take the picture for the user once their head is within a drawn ellipse. This will be easier for the user, as they just have to position their face, and it guarantees we get the picture and values we need. Once the calibration process is over, I store the eye aspect ratio and mouth height in a text file. Adriana and I decided to use this file to transfer the data from the calibration process to the main process so that the processes are independent from each other. Here is a screen recording of the progress.

2. Based on our schedule I am on track. I aimed to get the baseline calibration working correctly with a simple user interface.

3. Next week I plan to start working on pose detection and possible code changes in order to be compatible with the board.
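
The "take the picture once the head is within the ellipse" check and the text-file handoff can be sketched as below. The ellipse constants, the function names, the face box format `(x, y, w, h)`, and the two-line file layout are all assumptions made for illustration; the real values and format live in our calibration code.

```python
from pathlib import Path
from typing import Tuple

# Hypothetical guide ellipse: center (x, y) plus full width and height, in pixels.
ELLIPSE_X, ELLIPSE_Y, ELLIPSE_W, ELLIPSE_H = 320, 240, 200, 280


def point_in_ellipse(px: float, py: float) -> bool:
    """Standard ellipse test: (dx/a)^2 + (dy/b)^2 <= 1 with semi-axes a, b."""
    a, b = ELLIPSE_W / 2, ELLIPSE_H / 2
    return ((px - ELLIPSE_X) / a) ** 2 + ((py - ELLIPSE_Y) / b) ** 2 <= 1.0


def face_inside_ellipse(box: Tuple[int, int, int, int]) -> bool:
    """True when all four corners of the face box lie inside the ellipse."""
    x, y, w, h = box
    corners = [(x, y), (x + w, y), (x, y + h), (x + w, y + h)]
    return all(point_in_ellipse(px, py) for px, py in corners)


def save_calibration(path: str, eye_aspect_ratio: float, mouth_height: float) -> None:
    """Write the two calibration values, one per line."""
    Path(path).write_text(f"{eye_aspect_ratio}\n{mouth_height}\n")


def load_calibration(path: str) -> Tuple[float, float]:
    """Read the values back in the main process."""
    ear, mouth = Path(path).read_text().split()
    return float(ear), float(mouth)
```

Keeping the ellipse in four named constants is what makes later adjustments a one-line change, and the plain text file means the main process never has to import the calibration code.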

Jananni’s Status Report for 3/27

1. Accomplished this week: This week I have been focused on building the calibration for our project. This process asks the user to take pictures of their front and side profiles so that our algorithms can be more accurate for the specific user. This week’s goal is to get the user’s eye height (in order to check for blinking) and the user’s mouth height (in order to check for yawning).

I first did research on various calibration code I could potentially use. One potential idea was using this project (https://github.com/rajendra7406-zz/FaceShape), which outputs certain face dimensions.

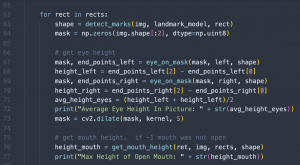

Unfortunately these dimensions were not enough for our use case. So I focused on using the DNN algorithm for a single frame and calculating the dimensions I required from the landmarks.

Then I started working on building the backend for the calibration process. I spent a lot of time understanding the different pieces of code, including the eye tracking and mouth detection. Once I understood this, I worked on integrating the two and retrieving the specific values we need. For the eye height, I calculated the average of the left and right eye heights, and for the mouth I returned the max distance between the outer mouth landmarks.

2. Progress according to schedule: My goal for this week was to build the basics of the calibration process and get the user’s facial dimensions which was accomplished on schedule.

3. Deliverables for next week: Next week I hope to start working on the frontend of the calibration process and the user interface.
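
The two calibration values described in this report can be computed from landmark coordinates roughly as follows. This is only a sketch: the function names are hypothetical, landmarks are plain `(x, y)` tuples, and it assumes the mouth comparison is made between opposing upper and lower outer-lip points, which the report does not spell out.

```python
from math import dist
from typing import Sequence, Tuple

Point = Tuple[float, float]
LidPair = Tuple[Point, Point]  # (upper landmark, lower landmark)


def eye_height(upper_lid: Point, lower_lid: Point) -> float:
    """Distance between an upper and a lower eyelid landmark."""
    return dist(upper_lid, lower_lid)


def average_eye_height(left_eye: LidPair, right_eye: LidPair) -> float:
    """Average of the left and right eye heights."""
    return (eye_height(*left_eye) + eye_height(*right_eye)) / 2.0


def mouth_height(outer_lip_pairs: Sequence[LidPair]) -> float:
    """Largest gap among opposing upper/lower outer-mouth landmark pairs."""
    return max(dist(upper, lower) for upper, lower in outer_lip_pairs)
```

For example, eye heights of 4 and 6 pixels average to 5, and lip gaps of 3, 8 and 5 pixels give a mouth height of 8.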

Jananni’s Status Report for 3/13

This week I focused on finalizing the block diagrams and the research Adriana needed in order to present the Design Review Presentation. I also started working on the Design Report due next week. As a group we worked on the report and began putting the device together to start preliminary testing. We are a little behind on our schedule, but next week we plan to ramp up because we have more time. I plan on completing a rough draft of the Design Report by Monday so that next week we can turn in the final report and begin serious testing. I will also start working on the calibration procedure.

Jananni’s Status Report for 3/6

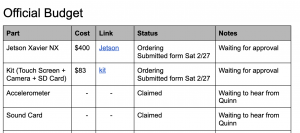

This week we focused on ordering our most essential parts and finalizing our design. First, we put in orders for the Jetson Xavier and the Kit since those are essential. When we placed the order, we realized our TA wanted an official Budget so I created that.

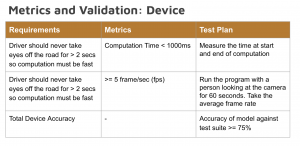

Once the budget was finalized and our orders were approved, I started working on the PowerPoint for the design review presentation. I primarily worked on the metrics slides for the driver and the device, and I tried to incorporate the comments and feedback I received on my Proposal Presentation. Afterward, I helped work on the block diagrams. Specifically, I worked on the System Specification: Hardware and System Specification: Software diagrams.

After that, our parts arrived and we started assembling the device.

We are currently on track with our schedule. Next week we plan to start testing the software we have on the device and hopefully begin some optimization.

Jananni’s Status Report for 2/27

This week I primarily focused on working on the Proposal Presentation. I was prepared to go on Monday, but because the order was randomized I ended up practicing some more before my turn on Wednesday. For the presentation, I made sure to question everything on the slides as the professors would, and asked for the reasoning behind every single requirement so I could explain it thoroughly during my presentation. I also did any additional research required for the introduction and spent some time practicing by myself. After the presentation, I wrote down all the tips and suggestions people had so we could improve our design as needed. I also ordered the main parts we would need (specifically the Jetson Xavier and the camera) so that we could start testing soon. We are currently on schedule, as we planned to order critical material by this week. This next week, I hope to order the remaining material and receive the critical parts. From there we can start testing the facial detection on the board.

Jananni’s Status Report for 2/20

This week our group focused on finalizing our research and solution approach. First, I focused on setting up the basic structure of the website. Then we focused on researching two things: the hardware components and compatible software algorithms for facial recognition and eye detection. Adriana tested the dlib algorithm and I helped her with some debugging. Once most of our research was complete, we worked on fleshing out the details in the Proposal Slides. During this time, I mainly focused on understanding and questioning everything on the slides since I would be presenting next week.