This week, we all worked on our last code changes for the project. For me, this meant implementing the UI and user flow that Jananni and I designed, including the on-click behavior for the buttons on each page. The user first sees the start screen and presses “start” to continue, which takes them to the “calibration” page. Clicking the “start calibration” button begins the calibration process, which asks the user to place their head inside the on-screen circle. Once their head is positioned correctly, the app automatically moves to the page shown when the user is ready to start driving. To launch the CarMa alert program, they press “start”, and the program begins classifying whether the user appears to be distracted. The full process can be seen in this video:
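The page flow above behaves like a small state machine: each button click or calibration result moves the app to the next page, and classification only runs on the final page. The sketch below is a hypothetical illustration of that idea, not our actual UI code; the `Page` names, the event strings, and the split of the calibration page into a "waiting" and a "calibrating" state are all assumptions made for the example.

```python
from enum import Enum, auto


class Page(Enum):
    """Hypothetical names for the screens in the flow described above."""
    START = auto()        # starting screen with the "start" button
    CALIBRATION = auto()  # calibration page, waiting for "start calibration"
    CALIBRATING = auto()  # live video with the guide shape, checking head placement
    READY = auto()        # "ready to start driving" page
    MONITORING = auto()   # CarMa alert program running


# Hypothetical event names: (current page, event) -> next page.
TRANSITIONS = {
    (Page.START, "start"): Page.CALIBRATION,
    (Page.CALIBRATION, "start_calibration"): Page.CALIBRATING,
    (Page.CALIBRATING, "head_aligned"): Page.READY,  # automatic, no click needed
    (Page.READY, "start"): Page.MONITORING,
}


def next_page(page: Page, event: str) -> Page:
    """Return the page to show after `event`; unrecognised events keep the current page."""
    return TRANSITIONS.get((page, event), page)


def is_classifying(page: Page) -> bool:
    """Distraction classification runs only after the user explicitly presses start."""
    return page is Page.MONITORING


if __name__ == "__main__":
    page = Page.START
    for event in ["start", "head_aligned", "start_calibration", "head_aligned", "start"]:
        page = next_page(page, event)
        print(event, "->", page.name, "| classifying:", is_classifying(page))
```

Keeping the transitions in one table makes the "nothing runs until the user presses start" rule easy to check: the only path into `MONITORING` is an explicit `start` event from the ready page.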

https://drive.google.com/file/d/1pIs0zBR5DGlLfyH3uHI5zbVwX-ey5mxl/view?usp=sharing

One key point is that the program only starts when the user clicks the start button. This design came out of the ethics discussion, in which the students and the professor noted that it should be clear when the program is starting and recording, so the user has a clear understanding of what the program is doing. Lastly, we worked on our final presentation, which will take place this upcoming week. We had to make sure we had all the data and information ready for it.

Progress: I am currently on schedule. We finished the UI early and started working on the smaller remaining items. The project is wrapping up nicely, and no sudden hiccups have occurred.

In the upcoming days, I hope to complete the final presentation and the final demo video. The demo video will be filmed in the car so that the program's audio and alerts can be heard and assessed in real time. Lastly, we hope to put together a clean hardware setup that holds the screen and the rest of the materials so everything is packaged nicely in the car.

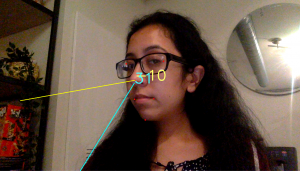

Once we designed this, we coded the connections between pages and hooked the UI up to our existing calibration and main-process code. Connecting the calibration step took more work because it requires the real-time video feed, whereas the main process does not show the user any video; it only sounds an alert when required. After we completed the UI, I started tweaking the calibration and classification code for the board. When we ran our code, a few small things were off, such as the red ellipse the user aligns their face with. I adjusted the ellipse dimensions and also pulled its x, y, w, h values into constants, so we can change them later without having to read through the code to understand each value. After that, I started adjusting our classification to fit the board, and I also began working on the final presentation PowerPoint slides.
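Pulling the ellipse geometry into named constants might look something like the sketch below. The numeric values are placeholders, not our real settings, and it assumes x, y is the ellipse centre and w, h its full width and height. The "face inside the ellipse" check (all four corners of a face bounding box inside the ellipse) is likewise a hypothetical example of how alignment could be tested, not necessarily how our calibration code decides it.

```python
# Guide-ellipse geometry in pixels. Placeholder values (assumed, not the project's
# real numbers); change these to move or resize the ellipse without editing the logic.
ELLIPSE_X = 320  # centre x
ELLIPSE_Y = 240  # centre y
ELLIPSE_W = 200  # full width
ELLIPSE_H = 260  # full height


def point_in_ellipse(px: float, py: float,
                     cx: float = ELLIPSE_X, cy: float = ELLIPSE_Y,
                     w: float = ELLIPSE_W, h: float = ELLIPSE_H) -> bool:
    """True if (px, py) lies inside or on the axis-aligned ellipse centred at (cx, cy)."""
    a, b = w / 2, h / 2  # semi-axes
    return ((px - cx) / a) ** 2 + ((py - cy) / b) ** 2 <= 1.0


def face_inside_guide(fx: int, fy: int, fw: int, fh: int) -> bool:
    """Hypothetical alignment check: every corner of the face box (x, y, w, h) is inside the ellipse."""
    corners = [(fx, fy), (fx + fw, fy), (fx, fy + fh), (fx + fw, fy + fh)]
    return all(point_in_ellipse(px, py) for px, py in corners)


if __name__ == "__main__":
    print(face_inside_guide(270, 170, 100, 140))  # small, centred face box
    print(face_inside_guide(230, 120, 180, 240))  # box too large for the guide
```

If the drawing is done with OpenCV, the same constants can feed `cv2.ellipse(frame, (ELLIPSE_X, ELLIPSE_Y), (ELLIPSE_W // 2, ELLIPSE_H // 2), 0, 0, 360, (0, 0, 255), 2)`; OpenCV expects half-axis lengths and a BGR colour, so `(0, 0, 255)` draws red. Because both the drawing and the check read the same constants, resizing the ellipse keeps them in sync.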
