- One risk that could potentially impact our project at this point is the buzzer integration. If there is an issue with this aspect of the project, we may need to find a different way to alert the user, such as displaying an alert on the screen.

- No, this week there were no changes to the existing design of the system. For testing and verification, we more precisely defined what we meant by 75% accuracy against our test suite.

- This week we hope to complete the integration of the buzzer and the UI of our project. Next week we hope to create a video for the head-turn thresholding to showcase the accuracy and how it changes as you turn your head. We may also need to work on pose detection and record more test videos. Finally, we are going to test our application in a car to obtain real-world data.
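
One way to check a test-suite accuracy target such as the 75% figure above is to pool event counts across all actions instead of averaging per-action error rates, which is the approach Jananni's report describes. The sketch below is only an illustration: the function name, the action names and the counts are made up for the example and are not our measured results.

```python
def pooled_error_rate(counts):
    """Return total error events divided by total events across all actions.

    `counts` maps an action name to an (error_events, total_events) pair.
    """
    total_errors = sum(errors for errors, _ in counts.values())
    total_events = sum(total for _, total in counts.values())
    if total_events == 0:
        raise ValueError("no events recorded")
    return total_errors / total_events


# Made-up example counts, for illustration only.
example_counts = {"blink": (1, 10), "yawn": (12, 30)}
error_rate = pooled_error_rate(example_counts)
accuracy = 1.0 - error_rate
```

Pooling weights every event equally, so an action with only a few recorded events cannot dominate the final figure the way it can when per-action rates are averaged.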

Adriana’s Status Report 04/24

This week, I worked on breaking up the landmarking function into independent pieces to find the bottleneck, that is, which part of the code takes the longest to run. This led me to work on running our landmarking functions, specifically the prediction model that we load from TensorFlow, on the GPU of our Xavier board. We then turned on the appropriate flags and settings on our board to enable tensorflow-GPU.
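
A minimal sketch of how per-stage timing like this can be collected is shown here. The `StageTimer` class and the stage names are hypothetical and are not taken from our code; the idea is simply to wrap each piece of the pipeline and compare accumulated times.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulates wall-clock time spent in named pipeline stages."""

    def __init__(self, clock=time.perf_counter):
        self.clock = clock
        self.totals = defaultdict(float)

    @contextmanager
    def stage(self, name):
        start = self.clock()
        try:
            yield
        finally:
            # Add the elapsed time even if the stage raised an exception.
            self.totals[name] += self.clock() - start

    def slowest(self):
        """Return the name of the stage with the largest total time."""
        return max(self.totals, key=self.totals.get)


# Usage (stage names are placeholders):
# timer = StageTimer()
# with timer.stage("face_detect"):
#     ...
# with timer.stage("landmark_model"):
#     ...
# print(timer.slowest(), dict(timer.totals))
```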

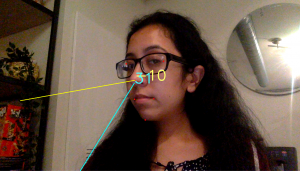

Similarly, I researched how far our face can be turned at an angle while still getting accurate eye and mouth detection. The mouth detection turned out to be very accurate even when the head was turned about 75 degrees from the center of the camera. However, the eye detection began giving inaccurate results once one of the eyes was not visible. This usually happens at around 30 degrees. Therefore we have concluded that the best range in which our application should work is when the user’s head is within +/- 30 degrees of the center of the camera.
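
The thresholds above could be applied as a simple gate on each detector. This is only a sketch: it assumes a head yaw angle in degrees is available from some other part of the pipeline, and the constant and function names are hypothetical.

```python
# Approximate limits observed in our head-turn experiments (degrees from camera center).
EYE_MAX_YAW_DEG = 30.0
MOUTH_MAX_YAW_DEG = 75.0


def trusted_detectors(yaw_deg):
    """Report which detectors are expected to be reliable at this head yaw."""
    yaw = abs(yaw_deg)  # turning left or right is treated the same
    return {
        "eyes": yaw <= EYE_MAX_YAW_DEG,
        "mouth": yaw <= MOUTH_MAX_YAW_DEG,
    }
```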

Lastly, I worked on the user flow for how the user is going to turn on / off the application and what would be less distracting for the user. For next steps, Jananni and I are working on creating the user interface for when the device is turned on / off. This should be completed by Thursday which is when we are putting a “stop” on coding.

I am currently on schedule and our goal is to be completely done with our code by Thursday. We are then going to spend the remainder of our time testing in the car and gathering real-world data.

Evann’s Status Report 4/24

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week I completed the accelerometer integration. I was able to make a physical connection between the accelerometer and board using some spare Arduino wires. After ensuring that the connection was established, I then checked that the board was receiving data from the accelerometer by checking that the memory was mapped to the device. I then used the smbus python library to read the data coming in from the memory associated with the accelerometer. I also completed the sound output. This was accomplished by using the playsound python library. I also worked on implementing pose detection using the facial landmarks we already use for eye detection.
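
As a rough sketch of what reading one axis over I2C can look like: the code below assumes a device that exposes each axis as a big-endian, 16-bit two's-complement value in two consecutive registers. The helper names, the bus number, the device address and the register number are placeholders, not the values for our accelerometer. `read_byte_data` is the smbus call used to fetch a single register.

```python
def to_signed16(high, low):
    """Combine two bytes into a signed 16-bit integer (two's complement)."""
    value = (high << 8) | low
    return value - 0x10000 if value & 0x8000 else value


def read_axis(bus, address, high_register):
    """Read one axis from two consecutive registers (high byte first)."""
    high = bus.read_byte_data(address, high_register)
    low = bus.read_byte_data(address, high_register + 1)
    return to_signed16(high, low)


# Usage on the board (all numbers below are placeholders):
# from smbus import SMBus
# bus = SMBus(1)
# x_raw = read_axis(bus, 0x68, 0x3B)
```

Passing the bus object in as a parameter keeps the conversion logic testable on a laptop without the hardware attached.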

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Our progress on the board is slightly ahead of schedule. We’ve completed all of our major goals and have begun work on stretch goals.

What deliverables do you hope to complete in the next week?

We are planning to focus work next week to get our system working in a vehicle for a demo. I want to finish the pose detection next week as well.

Jananni’s Status Report 4/24

- This week I first worked on adding the classification that a driver is distracted if their eyes are pointed away from the road for over two seconds. To do this, I take the computed direction values of the eyes, and if the eyes are pointed up, down, left or right for over two seconds, I send an alert. After committing this code, I worked on changing our verification and testing document. I wanted to include the professor’s recommendation not to take averages of error rates, because this implies we have a lot more precision than we actually have. Instead, I recorded the total number of events that occurred and the number of error events. Then I took the total number of error events across all actions and computed it as a percentage of the total events. Now I am researching options for our simple UI so that we can integrate it with what we currently have.

- I am currently on track with our schedule. We wrote out a complete schedule this week for the final weeks of school, including the final report and additional testing videos.

- This week I hope to finish implementing the UI for our project with Adriana.
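
The two-second distraction rule described above can be sketched as a small timer. This is a hedged illustration, not our committed code: the class name and direction labels are hypothetical, and it assumes that any off-road direction keeps the timer running (looking left and then right without returning to center does not reset it).

```python
AWAY_DIRECTIONS = {"up", "down", "left", "right"}


class GazeAwayTimer:
    """Flags distraction once the gaze has been off the road too long."""

    def __init__(self, limit_s=2.0):
        self.limit_s = limit_s
        self.away_since = None  # timestamp when the gaze first left the road

    def update(self, direction, now):
        """Feed one gaze sample; return True if an alert should be sent."""
        if direction not in AWAY_DIRECTIONS:
            self.away_since = None  # eyes back on the road, reset the timer
            return False
        if self.away_since is None:
            self.away_since = now
        return (now - self.away_since) > self.limit_s
```

Timestamps are passed in explicitly (for example from `time.monotonic()`), so the same logic works on recorded test videos as well as on live frames.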

Adriana’s Status Report for 4/10

This week I was able to finish writing our video testing script that we are distributing to friends and family in order to gather more data. We have begun testing our algorithm locally using the videos in our test suite to analyze how accurate our program is.

One of our biggest goals is to improve the performance of our algorithm on the board. During our weekly meeting with Professor Savvides, we agreed to prioritize optimizing our program by making it faster and increasing our fps. This week in particular, I looked into running OpenCV’s “Deep Neural Network” (DNN) module on NVIDIA GPUs. We use the dnn module to get inferences on where the best face in the image is located. The tutorial we are following states that running it on the GPU gives >211% faster inference than on the CPU. I have added the code for dnn to run on the GPU, and we are currently getting the dependencies fully working on the board in order to find out what our actual speed increase is, which we will showcase during our demo next week.
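
For reference, asking an OpenCV DNN network to use CUDA comes down to two calls, sketched below. This assumes an OpenCV build (4.2 or later) compiled with CUDA support. The helper name and the model file names in the usage comment are hypothetical, and the `cv2` module is passed in as an argument only so the sketch can be exercised without OpenCV installed.

```python
def use_cuda_backend(net, cv2_module):
    """Request that an OpenCV DNN network run inference on an NVIDIA GPU."""
    net.setPreferableBackend(cv2_module.dnn.DNN_BACKEND_CUDA)
    net.setPreferableTarget(cv2_module.dnn.DNN_TARGET_CUDA)
    return net


# Usage on the board (file names are placeholders):
# import cv2
# net = cv2.dnn.readNetFromCaffe("deploy.prototxt", "face_detector.caffemodel")
# net = use_cuda_backend(net, cv2)
```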

I would say that I am currently on track with our schedule. The software optimization is my biggest task at the moment but it is looking pretty good so far! I am hoping to have some big fps improvements on the board in the upcoming days. This would greatly improve our algorithm and make the application more user friendly.

Source: https://www.pyimagesearch.com/2020/02/03/how-to-use-opencvs-dnn-module-with-nvidia-gpus-cuda-and-cudnn/

Evann’s Status Report for 4/10

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week I spent the majority of my time integrating the updated application onto the board. There was an issue with how some of the calibration code was interacting with the images that were captured using the onboard camera. I also spent time collecting data on performance and working to optimize the landmarking. There are some dependency issues with converting the landmarking model to a tensorrt optimized graph and I am currently working through these.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Our progress on the board is slightly ahead of schedule. We finished integration this week, as well as testing and validation. We are planning to start work on some of our reach goals.

What deliverables do you hope to complete in the next week?

I hope to finish accelerometer input and sound output by next week.

Jananni’s Status Report for 4/10

- This week I worked on finishing up integration with the main process of our project. Once that was completed I worked on getting more videos for our test suite of various people with various face shapes. Finally I started researching how to optimize our code for the GPU. This will ideally bring our frame rate above the 5 frames per second in our original requirement. I also worked on putting together material for our demo next week. Here are some important links I have been reading regarding optimizing our code specifically for the GPU: https://forums.developer.nvidia.com/t/how-to-increase-fps-in-nvidia-xavier/80507, and more. The article (similar to many others) talks about using jetson_clocks and changing the power management profiles. The change in power management profiles tries to maximize performance and energy usage. Then jetson_clocks is used to set the CPU, GPU and EMC clocks to their maximum frequencies, which should ideally improve the frames per second in our testing.

- Currently as a team we are a little ahead of schedule, so we revised our weekly goals and I am on track for those updated goals. I am working with Adriana to improve our optimization.

- By next week, I plan to finish optimizing the code and start working on our stretch goal. After talking to the professor, we are deciding between working on the pose detection or lane change using the accelerometer.

Team Status Report for 4/10

- The most significant risk to our project is not meeting one of our main requirements of at least 5 frames per second. We are working on improving our current rate of 4 frames per second by having some OpenCV algorithms run on the GPU, in particular the dnn module, which detects where a face is in the image. If this optimization does not noticeably increase our performance, then we plan on using our back-up plan of moving some of the computation to AWS so that the board is faster.

- This week we have not made any major changes to our designs or block diagrams.

- In terms of our goals and milestones, we are a little ahead of our original plan. Because of this, we are deciding which stretch goals we should start working on and which of our stretch goals are feasible within the remaining time we have. We are currently deciding between pose detection or phone detection.
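
To track the 5 frames-per-second requirement discussed above, a rolling frame-rate estimate is enough. The sketch below is an assumption about how this could be measured, not our actual instrumentation; the class name and window size are hypothetical.

```python
from collections import deque

REQUIRED_FPS = 5.0


class FpsMeter:
    """Estimates frames per second over the most recent frame timestamps."""

    def __init__(self, window=30):
        self.stamps = deque(maxlen=window)

    def tick(self, now):
        """Record the time (in seconds) at which a frame finished processing."""
        self.stamps.append(now)

    def fps(self):
        if len(self.stamps) < 2:
            return 0.0
        span = self.stamps[-1] - self.stamps[0]
        # N timestamps cover N - 1 frame intervals.
        return (len(self.stamps) - 1) / span if span > 0 else 0.0

    def meets_requirement(self):
        return self.fps() >= REQUIRED_FPS
```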

Adriana’s Status Report for 4/3

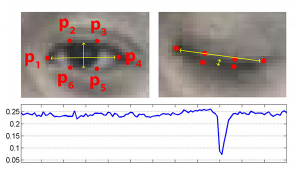

This week I spent a lot of my time on improving the classification for our eye tracking algorithm. Before, one of the major problems that we were having was not being able to accurately detect closed eyes when there was a glare on my glasses. To address this issue, I rewrote our classification code to use the eye aspect ratio instead of the average eye height to determine whether the user is sleeping. In particular, this involved computing the Euclidean distances between the two pairs of vertical eye landmarks and between the horizontal eye landmarks in order to calculate the eye aspect ratio (as shown in the image).
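
A minimal sketch of the eye aspect ratio computation follows. It assumes the common six-point eye landmark ordering (p1 and p4 at the horizontal corners, p2/p6 and p3/p5 as the two vertical pairs); the ordering and function names are assumptions and may differ from our landmark model.

```python
import math


def _distance(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye):
    """Compute the eye aspect ratio from six (x, y) landmarks p1..p6."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = _distance(p2, p6) + _distance(p3, p5)
    horizontal = _distance(p1, p4)
    return vertical / (2.0 * horizontal)
```

Because the ratio is normalized by the eye width, it stays roughly constant while the eye is open and drops toward zero as the eye closes, regardless of how far the face is from the camera.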

Now our program can determine whether a person’s eyes are closed by checking if the Eye Aspect Ratio falls below a certain threshold (calculated at calibration), which is more accurate than just looking at the eye height. Another issue that I solved was removing the frequent notifications to the user when they blink. Before, whenever a user blinked, our program would alert them of falling asleep. I was able to fine-tune that by requiring the eyes to stay closed for a larger number of consecutive frames. This means that the user will ONLY be notified if their eye aspect ratio falls below their normal eye aspect ratio (taken at the calibration stage) AND their eyes stay closed for about 12 frames. Twelve frames works out to just under 2 seconds, which is the time limit in our requirements for notifying users. Lastly, I was able to fully integrate Jananni’s calibration process with my eye + mouth tracking process so that our programs connect seamlessly. After the calibration process is done and writes the necessary information to a file, my part of the program starts, reads that information, and begins classifying the driver.
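
The consecutive-frame rule described above can be sketched as a small counter. The class name is hypothetical, and the threshold value in the usage comment is a placeholder; in our application the threshold comes from calibration.

```python
class ClosedEyeDetector:
    """Alerts only after the eye aspect ratio stays low for enough frames."""

    def __init__(self, ear_threshold, consecutive_frames=12):
        self.ear_threshold = ear_threshold
        self.consecutive_frames = consecutive_frames
        self.closed_count = 0

    def update(self, ear):
        """Feed the eye aspect ratio for one frame; return True to alert."""
        if ear < self.ear_threshold:
            self.closed_count += 1
        else:
            self.closed_count = 0  # a blink ends here and never reaches the limit
        return self.closed_count >= self.consecutive_frames


# Usage (0.2 is a placeholder threshold, not our calibrated value):
# detector = ClosedEyeDetector(ear_threshold=0.2)
# alert = detector.update(current_ear)
```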

https://drive.google.com/file/d/1CdoDlMtzM9gkoprBNvEtFt83u75Ee-EO/view?usp=sharing

[Video of improved Eye tracking w/ glasses]

I would say that I am currently on track with our schedule. The software is looking pretty good on our local machines.

For next week, I intend to spend some of my time being more involved with the board integration so that the code that Jananni and I worked on is functional on the board. As we write code we have to be mindful of efficiency, so I plan to do another code refactoring to see whether parts of the code can be rewritten to be more compatible with the board and to improve performance. Lastly, we are working on growing our test data suite by asking our friends and family to send us videos of themselves blinking and moving their mouth. I am working on writing the “script” of different scenarios that we want to make sure we get on video to be able to test. We are all hoping to get more data to verify our results.

Evann’s Status Report for 4/3

What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

This week I spent the majority of my time integrating the primary application onto the board. The version of Jetson’s SDK was incompatible with the version of TensorFlow that we were using, which required reflashing our SD card with a new OS image. Using Nvidia’s JetPack SDK required us to change some of our dependencies, such as switching to Python 3.6 and TensorFlow 2.4, which in turn required us to refactor our code to handle some deprecated functions. Working through these issues, I was able to get the preliminary code running on the board. A demo is shown below.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Our progress on the board is slightly behind schedule. We intended to finish integration earlier this week; however, issues with dependencies and platform differences caused some unforeseen delays. I will use some of the dedicated slack time to adjust the schedule.

What deliverables do you hope to complete in the next week?

I hope to implement accelerometer input and sound output by next week. I also intend to begin work on pose estimation if time allows.