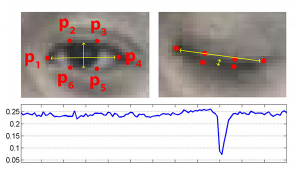

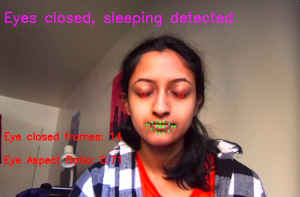

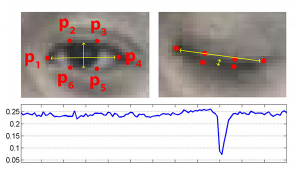

This week I was able to spend a lot of my time improving the classification for our eye tracking algorithm. Before, one of our major problems was that we could not accurately detect closed eyes when there was glare on my glasses. To address this, I rewrote our classification code to use eye aspect ratio instead of average eye height to determine whether the user is sleeping. In particular, this involved computing the Euclidean distances between the two pairs of vertical eye landmarks and between the horizontal eye landmarks, then using them to calculate the eye aspect ratio (as shown in the accompanying image).
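As a rough illustration of the eye aspect ratio calculation described above, here is a minimal sketch in Python. It assumes each eye is given as six (x, y) landmark points ordered p1 to p6 (p1 and p4 at the horizontal corners, p2/p6 and p3/p5 as the two vertical pairs), which is a common convention but not necessarily the exact ordering in our code. The function name and the sample coordinates are made up for the example.

```python
import math


def eye_aspect_ratio(eye):
    """Return the eye aspect ratio for six (x, y) eye landmarks.

    Assumed ordering (hypothetical, for illustration): p1 and p4 are the
    horizontal corners; (p2, p6) and (p3, p5) are the two vertical pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical_a = math.dist(p2, p6)  # first vertical distance
    vertical_b = math.dist(p3, p5)  # second vertical distance
    horizontal = math.dist(p1, p4)  # corner-to-corner distance
    # Sum of the vertical distances over twice the horizontal distance.
    return (vertical_a + vertical_b) / (2.0 * horizontal)


# Made-up landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 3), (2, 5), (4, 5), (6, 3), (4, 1), (2, 1)]
closed_eye = [(0, 3), (2, 3.5), (4, 3.5), (6, 3), (4, 2.5), (2, 2.5)]

open_ear = eye_aspect_ratio(open_eye)
closed_ear = eye_aspect_ratio(closed_eye)
```

Because the ratio is normalized by the eye's width, it drops sharply when the eye closes and is less dependent on how far the face is from the camera than a raw eye height would be.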

Now our program decides that a person’s eyes are closed when the Eye Aspect Ratio falls below a certain threshold (calculated at calibration), which is more accurate than looking at eye height alone.

Another issue that I solved was the frequent notifications to the user when they blink. Before, whenever a user blinked, our program would alert them that they were falling asleep. I was able to fine-tune that by requiring the eyes to stay closed for a larger number of consecutive frames. This means that the user will ONLY be notified if their eye aspect ratio falls below their normal eye aspect ratio (taken at the calibration stage) AND it stays there for about 12 frames. Twelve frames works out to a little under 2 seconds, which is the notification time we set in our requirements.

Lastly, I was able to fully integrate Jananni’s calibration process with my eye + mouth tracking process so that our programs connect seamlessly. After the calibration process finishes and writes the necessary information to a file, my part of the program starts, reads that information, and begins classifying the driver.
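The consecutive-frame check described above can be sketched roughly as follows. This is a simplified illustration, not our actual code: the class name, the method name, and the threshold value of 0.2 are hypothetical, and in the real program the threshold comes from the calibration step.

```python
class ClosedEyeDetector:
    """Alert only when the eye aspect ratio stays low for many frames."""

    def __init__(self, ear_threshold, consec_frames=12):
        self.ear_threshold = ear_threshold  # assumed to come from calibration
        self.consec_frames = consec_frames  # frames the eyes must stay closed
        self.closed_count = 0

    def update(self, ear):
        """Process one frame's eye aspect ratio; return True to alert."""
        if ear < self.ear_threshold:
            self.closed_count += 1
        else:
            # Eyes reopened, so a blink never accumulates enough frames.
            self.closed_count = 0
        return self.closed_count >= self.consec_frames


detector = ClosedEyeDetector(ear_threshold=0.2)

# A short blink (3 closed frames) should not trigger an alert.
blink = [0.3] * 5 + [0.1] * 3 + [0.3] * 5
blink_alerts = [detector.update(ear) for ear in blink]

# Eyes closed for 12 frames in a row should trigger on the 12th frame.
sustained = [0.1] * 12
sustained_alerts = [detector.update(ear) for ear in sustained]
```

Resetting the counter whenever the eyes reopen is what keeps ordinary blinks from adding up to a false alert.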

https://drive.google.com/file/d/1CdoDlMtzM9gkoprBNvEtFt83u75Ee-EO/view?usp=sharing

[Video of improved Eye tracking w/ glasses]

I would say that I am currently on track with our schedule. The software is looking pretty good on our local machines.

Next week, I intend to spend some of my time getting more involved with the board integration so that the code Jananni and I worked on is functional on the board. As we write code we have to be mindful of efficiency, so I plan to do another round of refactoring to see whether parts of the code can be rewritten to be more compatible with the board and to improve performance. Lastly, we are working on growing our test data suite by asking our friends and family to send us videos of themselves blinking and moving their mouths. I am writing the “script” of different scenarios that we want to capture on video for testing. We are all hoping to get more data to verify our results.