- Got the servo code working and attached the servo to the 3D-printed gate. The servo is accurate and powerful enough, with no noticeable jitter, even when fruit makes contact with the gate.

- Spent a long time integrating the different parts of the design (servo + gate mechanism, conveyor belt, cameras). The integration is working: the system runs smoothly and has a high classification accuracy.

- Got started on the poster.

Ishita Kumar’s Status Report for 05/08/21

This week, I prepared for the final presentation. My focus this week was fixing some visibility bugs in our HSV masks and finishing HSV image segmentation for carrots. I also helped my team integrate the gate into our final product, and we now have a fully working system. We focused a lot on testing both our software and our physical components in the past few days. I have also started on the final video by collecting B-roll footage and preparing my script. I am happy that we were able to finish our product. We should be on track for the Monday video and poster submission deadline. Thank you to Professor Savvides and our TA, Uzair, for all your help.
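The HSV masks mentioned above keep only the pixels whose hue, saturation and value all fall inside a chosen range. Below is a minimal NumPy sketch of that in-range test. It assumes an OpenCV-style 8-bit HSV image (hue 0-179, saturation and value 0-255), and the carrot bounds are hypothetical placeholders, not our tuned values. It performs the same per-pixel test as OpenCV's `cv2.inRange`, but returns a boolean array instead of a 0/255 image.

```python
import numpy as np

# Hypothetical bounds for an orange carrot hue; real bounds must be tuned on our images.
CARROT_LOWER = (5, 100, 80)
CARROT_UPPER = (20, 255, 255)


def hsv_in_range(hsv: np.ndarray, lower, upper) -> np.ndarray:
    """Return a boolean (H, W) mask of pixels whose H, S and V all lie in [lower, upper].

    `hsv` is an (H, W, 3) uint8 image using OpenCV's HSV convention.
    """
    lower = np.asarray(lower, dtype=hsv.dtype)
    upper = np.asarray(upper, dtype=hsv.dtype)
    # Compare every channel at once, then require all three channels to pass.
    return np.all((hsv >= lower) & (hsv <= upper), axis=-1)
```

Separate bound pairs (for example, one for healthy colour and one for rotten spots) can be applied to the same image to build the different masks.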

Team Status Report for 05/08/21

This was the last week we could put in work before the video, so it was crucial for us: we had to make sure that at least our MVP was working for the video.

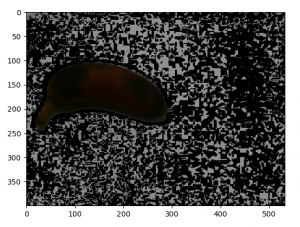

The team worked out how to attach the servo to the diverter we had printed. We then fixed our image segmentation masks to make sure we were detecting the rotten and good parts of the banana correctly. Once we figured that out, we set up our final product and ran several tests to check whether we were meeting the metric requirements we had set. For now, we seem to be meeting the requirements, at least for the MVP. We’re working on image segmentation for carrots so that we can include them in our product, and we’re also developing the AlexNet rottenness classifier so that we can compare its performance to our current classifier and gain insights. We’re now working on the final video and poster.
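One way to turn the good and rotten masks into a decision is to measure what share of the fruit’s pixels the rotten mask flags, then compare that share to a threshold. The sketch below assumes both masks are boolean NumPy arrays of the same shape; the function names and the 0.1 default threshold are hypothetical, not our actual values.

```python
import numpy as np


def rotten_fraction(fruit_mask: np.ndarray, rotten_mask: np.ndarray) -> float:
    """Share of fruit pixels that the rotten-colour mask also flags."""
    fruit_pixels = np.count_nonzero(fruit_mask)
    if fruit_pixels == 0:
        return 0.0  # no fruit detected, so nothing can be rotten
    return float(np.count_nonzero(fruit_mask & rotten_mask) / fruit_pixels)


def is_rotten(fruit_mask: np.ndarray, rotten_mask: np.ndarray, threshold: float = 0.1) -> bool:
    """Hypothetical rule: the fruit is rotten when the rotten share exceeds `threshold`."""
    return rotten_fraction(fruit_mask, rotten_mask) > threshold
```

Raising the threshold makes the classifier more tolerant of dark regions such as shadows, at the cost of missing fruit with only small rotten spots.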

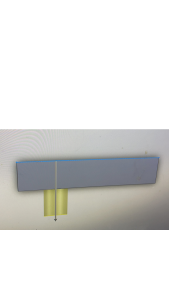

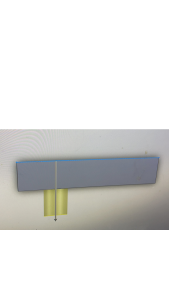

Below is a picture of our final product setup. We would like to thank Prof. Savvides and our TA, Uzair, for all of their help and support throughout the semester!

Ishita Sinha’s Status Report for 05/08/21

This week, my team and I worked on completing the integration of the diverter into our project and testing it. First, we had to figure out how to make the servo drive the diverter. We had earlier thought of drilling the servo arms into the diverter, but that wouldn’t have worked, since the body of the servo would have been left free. After some trial and error, we came up with a solution. After that, I worked on integrating the servo code with the rest of our code. Next, I set everything up so that all of the product’s components are placed as they would be in the final setup. Then, I looked at the camera streams to figure out the right camera positions and placed the cameras accordingly. After that, I updated the rottenness threshold to account for shadows and ran several tests on good and rotten bananas to check that they were being detected, classified, and diverted correctly. On average, the diverter received the diversion signal around 0.08 seconds after the banana image was read, so our entire code path takes about 0.08 seconds per fruit, which comfortably meets our requirements.
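The integrated loop described above (read a frame, classify it, signal the diverter) and the latency measurement can be sketched as follows. This is an illustration under assumptions: `sort_fruit`, the gate angles, and the injected `is_rotten` / `set_gate_angle` callables are hypothetical stand-ins for our camera, classifier, and servo code. Latency is measured from the moment a frame is handed to the classifier until the gate command is issued.

```python
import time
from typing import Callable, Iterable, List

FRESH_ANGLE = 0    # hypothetical gate position for fresh fruit
ROTTEN_ANGLE = 90  # hypothetical gate position for rotten fruit


def sort_fruit(
    frames: Iterable[object],
    is_rotten: Callable[[object], bool],
    set_gate_angle: Callable[[int], None],
) -> List[float]:
    """Classify each frame, move the gate, and return per-frame latencies in seconds."""
    latencies: List[float] = []
    for frame in frames:
        start = time.perf_counter()  # the frame has just been read
        angle = ROTTEN_ANGLE if is_rotten(frame) else FRESH_ANGLE
        set_gate_angle(angle)  # the diversion signal is sent here
        latencies.append(time.perf_counter() - start)
    return latencies


# Usage with stand-in callables instead of real cameras and a real servo:
commands: List[int] = []
latencies = sort_fruit(["fresh", "rotten"], lambda f: f == "rotten", commands.append)
print(commands)  # [0, 90]
print(sum(latencies) / len(latencies))  # average latency per fruit, in seconds
```

Passing the classifier and servo in as functions keeps the loop testable without any hardware attached.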

Currently, my team and I are working on the final video and poster. We’re also working on the image segmentation for carrots, and I’m now working on the AlexNet rottenness classifier to see how well it would have performed and how long it would have taken. We’ll continue with this until Monday and will then start writing our final report from Tuesday onwards. I’m happy it all seems to have worked out for the project so far, and we’re now in the last leg: presenting it!

I would like to thank all of the instructors and TAs, especially Prof. Savvides and Uzair, for all of their help and guidance and for a wonderful semester!

Kushagra’s Status Report for 05/01/21

- Finished integrating the code for the project. We can now place a banana on the belt, take a picture as the fruit is moving, and get an output from the Nano.

- We realized the algorithm was too slow, so we sped it up.

- Almost done getting the servo working; I didn’t realize the PCA9685 board needed a separate power supply. We are waiting on the power bank, but in the meantime, I’m trying to find a battery.

- Designed and 3D printed the gate.
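For context on the servo + PCA9685 setup: the PCA9685 generates PWM with a 12-bit counter (4096 steps per period), and hobby servos are positioned by the width of each pulse, usually at 50 Hz. The sketch below converts a gate angle into the on-time count the board needs. The 1000-2000 µs pulse range over 0-180 degrees is an assumed typical value, not taken from our servo’s datasheet, and `angle_to_counts` is a hypothetical helper; libraries such as Adafruit’s ServoKit perform this kind of mapping internally.

```python
PCA9685_RESOLUTION = 4096  # the PCA9685 uses 12-bit PWM counters


def angle_to_counts(
    angle: float,
    freq_hz: float = 50.0,
    min_pulse_us: float = 1000.0,  # assumed pulse width at 0 degrees
    max_pulse_us: float = 2000.0,  # assumed pulse width at max_angle
    max_angle: float = 180.0,
) -> int:
    """Convert a servo angle to the number of 12-bit counts the output stays high."""
    if not 0 <= angle <= max_angle:
        raise ValueError("angle out of range")
    # Linearly interpolate the pulse width for the requested angle.
    pulse_us = min_pulse_us + (max_pulse_us - min_pulse_us) * angle / max_angle
    period_us = 1_000_000 / freq_hz  # 20,000 us at 50 Hz
    return round(pulse_us / period_us * PCA9685_RESOLUTION)
```

With the defaults, 0, 90 and 180 degrees map to 205, 307 and 410 counts respectively.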

Team Status Report for 05/01/21

This week was very important for our team, as we were about two weeks from the final deadline, so we followed a daily work plan.

Ishita Sinha (IS) and Ishita Kumar (IK) finished the final set-up for the conveyor belt, including positioning its height and stitching the belt in place. Kush integrated the code onto the Jetson Nano. Then, IK and IS did several conveyor belt + software runs. Initially, the algorithm was too slow to meet our conveyor belt speed requirements. However, IS sped up the code from 3 s/fruit to 0.04 s/fruit by switching to Numpy arrays, so we now meet our speed requirements. Some frame analyzer bugs were also fixed, and the software + conveyor belt system works well for the most part. We found that the HSV filter masks used by the rottenness detector sometimes pick up a lot of unrelated noise due to bad lighting conditions, so it is very important for us to obtain better lighting for more consistently successful runs of our system.
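The speedup from switching to Numpy comes from replacing per-pixel Python loops with whole-array operations that run in compiled code. The sketch below is an illustrative example of that kind of change (counting the pixels of one channel that fall in a range), not our actual code; the function names are hypothetical, and both versions return the same count.

```python
import numpy as np


def count_in_range_loop(channel: np.ndarray, lo: int, hi: int) -> int:
    """Pure-Python per-pixel loop: easy to write, but slow on full camera frames."""
    count = 0
    for row in channel:
        for value in row:
            if lo <= value <= hi:
                count += 1
    return count


def count_in_range_numpy(channel: np.ndarray, lo: int, hi: int) -> int:
    """Vectorized version: the comparisons run over the whole array in compiled code."""
    return int(np.count_nonzero((channel >= lo) & (channel <= hi)))
```

On a full camera frame, the loop version performs one interpreted comparison per pixel, which is where most of the time goes; the vectorized version avoids that overhead entirely.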

The final part of the MVP left is the gate. Kush and IK designed the first prototype of the gate based on our original design. Unfortunately, the gate from the original design is too short, so the banana just gets stuck and starts rotating on the conveyor belt. However, we came up with several new prototypes and have CADed a longer version of our gate, which we hope will work.

Having a strict plan this week helped, but we fell behind it by mid-week due to unexpected bugs and our original gate design not working. However, we learnt many important things about our design during integration and will continue to follow strict plans over the next weeks to finish our original MVP design (including the gate) with the addition of the conveyor belt.

Ishita Kumar’s Status Report for 05/01/21

Since this week was very important for the team in terms of testing and integration, I made a daily work plan for the team. I helped finish the final conveyor belt set-up and stitched the belt together. Next, Ishita Sinha and I tested the frame detector on bananas running on the conveyor belt. We had a few bugs to work out in the pixel-count threshold we use to decide that a banana is in the frame; we increased the threshold based on test results, and the frame analyzer now works. Ishita Sinha and I also tested the HSV-mask rottenness detector on bananas on the moving conveyor belt. It works, but it depends heavily on lighting conditions: during testing, the HSV filter masks sometimes picked up too much noise under improper lighting, so we need to obtain better lighting. Next, I helped design the first prototype of our gate in CAD and 3D printed it. Unfortunately, our original design, with the gate blocking the fruit, did not work because the gate was too short. We have come up with a similar design with a much longer gate, which I also designed in CAD, and we are hopeful it works. For now, Ishita Sinha and I are responsible for fixing the lighting issue so that we can do a full software + conveyor belt run without issues. Overall, though, our design is working. According to my plan, we should have had the gate ready by today, but we had some delays because our old design did not work. This is okay for now, as we have the other design ready and have the slack time to get it to work.
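The frame analyzer’s pixel-count check, combined with a border test for fruit that is only partly visible, can be sketched as below. The state names, the `min_pixels` parameter, and the assumption that the belt carries fruit from the left edge of the image to the right edge are all hypothetical; the idea is simply to classify only frames in which the whole fruit is visible.

```python
import numpy as np


def frame_state(fruit_mask: np.ndarray, min_pixels: int) -> str:
    """Classify a boolean (H, W) fruit mask as 'empty', 'entering', 'leaving' or 'full'.

    Assumes the belt carries fruit from left to right across the image.
    """
    if np.count_nonzero(fruit_mask) < min_pixels:
        return "empty"  # too few fruit pixels: treat as no fruit in frame
    if fruit_mask[:, 0].any():
        return "entering"  # fruit still touches the left (entry) border
    if fruit_mask[:, -1].any():
        return "leaving"  # fruit touches the right (exit) border
    return "full"  # whole fruit visible: safe to classify
```

Raising `min_pixels` makes the check ignore small specks of mask noise, which mirrors the threshold increase described above.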

Ishita Sinha’s Status Report for 05/01/21

This week, my team and I have been working on integrating the algorithm with the actual conveyor belt system and getting it to work. To begin with, I switched all of the code to use Numpy, which gave quite a large speedup: the entire algorithm now runs in around 0.04 seconds, compared to 3-4 seconds earlier. Next, I updated the code to better detect when the banana is fully in the frame, versus when it is coming in, going out, or not in the frame at all. The edge detection and frame analysis seem to be performing very well. I also worked on the final product setup; we just need to place the other camera and the diverter. I have also been testing the conveyor belt system extensively with the algorithm to see how well the image segmentation performs. Some issues came up, but they are largely resolved with brighter light, so I’ve written code to increase the brightness of the image, and I am also looking for a brighter light. When the image is bright, the algorithm performs very well with the conveyor belt system. Lastly, I came up with a design for our diverter, which will actually divert the fruit on the belt into either the rotten or the fresh fruit basket. We have the CAD design and should be 3D printing it tomorrow. Our plan is for the diverter to extend a bit further over the conveyor belt so that it can start diverting the fruit well before it reaches the end of the belt. I tested this idea using a book as a stand-in, and it seems to work, so we hope it works out with the 3D printed part!
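Increasing image brightness in software can be as simple as scaling pixel intensities and clipping to the valid 8-bit range. The sketch below assumes a uint8 NumPy image; `brighten` is a hypothetical helper, and its `gain` and `offset` values would need tuning against our lighting. OpenCV’s `cv2.convertScaleAbs` performs a similar scale-and-saturate operation.

```python
import numpy as np


def brighten(image: np.ndarray, gain: float = 1.5, offset: float = 0.0) -> np.ndarray:
    """Scale and shift uint8 pixel intensities, clipping results to 0-255."""
    out = image.astype(np.float32) * gain + offset  # float math avoids uint8 wraparound
    return np.clip(out, 0, 255).astype(np.uint8)
```

Converting to float before scaling matters: multiplying a uint8 array directly can wrap around instead of saturating at 255.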

For future steps, we plan to get the 3D printed diverter and set it up with the servo motor to check that the diversion works well, so I think there’s going to be a lot of testing in the upcoming week. It’s the last leg, so I hope it all works out! We also need to write image segmentation code for cucumbers and carrots, but we plan to look into that after we have the entire system working for a banana, since that’s our MVP. The AlexNet classifier isn’t urgent, since our classification system seems to be meeting the timing and accuracy requirements extremely well, but I could work on it after we have this setup working.

Ishita Sinha’s Status Report for 04/24/21

Over the past few weeks, I worked on developing a final model for fruit detection. I worked with an already-built YOLO model and also tried developing a custom model. However, the custom model was not much more accurate and did not reduce computation time either, so I decided that we’d continue with the YOLO model. I tested this model’s fruit detection accuracy on a Kaggle dataset of around 250 images, chosen because its images are similar to the ones our cameras would capture under our lighting conditions and background. None of the bananas, around 3% of the apples, and around 3% of the oranges were misclassified. Fruit detection was slow, taking around 4 seconds per image. However, based on the speedup the Nano is reported to offer, this should work out for our purposes.
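The per-class misclassification rates above are, for each true fruit label, the share of test images whose predicted label differs from it. A small sketch of that computation follows, assuming the results are available as (true label, predicted label) pairs; the function name and the toy data in the usage line are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def misclassification_rates(pairs: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Per-class share of images whose predicted label differs from the true label."""
    totals: Counter = Counter()
    errors: Counter = Counter()
    for true_label, predicted in pairs:
        totals[true_label] += 1
        if predicted != true_label:
            errors[true_label] += 1
    return {label: errors[label] / totals[label] for label in totals}


# Toy example: one of the two apples is mistaken for an orange.
pairs = [("banana", "banana"), ("apple", "orange"), ("apple", "apple")]
print(misclassification_rates(pairs))  # {'banana': 0.0, 'apple': 0.5}
```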

I also worked on integrating all of the code we had, so we now have code ready for the Nano to read the image, process it, pass it to our fruit detection and classification algorithm, and output whether the fruit is rotten or not. I also integrated the code that analyzes whether the fruit is partially or completely in the frame.

For the apples and oranges, I tested the image segmentation and classification on a very large dataset, but it did not perform very well, so I plan to tune the rottenness threshold I’ve set while Ishita Kumar improves our masks, so that we can improve accuracy.
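Tuning the rottenness threshold can be done as a simple sweep: for each candidate value, count how many labelled images it classifies correctly and keep the best one. The sketch below assumes we already have the rotten-pixel fraction for each image and a ground-truth rotten/fresh label; `best_threshold` and the candidate values are hypothetical.

```python
from typing import Sequence, Tuple


def best_threshold(
    fractions: Sequence[float],
    labels: Sequence[bool],
    candidates: Sequence[float],
) -> Tuple[float, float]:
    """Return (threshold, accuracy) for the candidate with the highest accuracy.

    fractions[i] is the rotten-pixel fraction measured for image i, and labels[i]
    is True if that fruit is actually rotten. A fruit is predicted rotten when its
    fraction exceeds the threshold. Ties keep the earliest candidate.
    """
    best = (candidates[0], -1.0)
    for t in candidates:
        correct = sum((f > t) == y for f, y in zip(fractions, labels))
        accuracy = correct / len(labels)
        if accuracy > best[1]:
            best = (t, accuracy)
    return best
```

The sweep is best run on images kept separate from those used to report final accuracy, so that the chosen threshold is not fitted to the test set.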

As for the integration, we plan to write the code for the Raspberry Pi that controls the gate in the upcoming week. We also plan to set up the conveyor belt soon and do preliminary testing of our code with a temporary physical setup, without the gate for now.

The fruit detector sometimes misidentifies apples as oranges, and in a few instances it fails to detect extremely rotten bananas, so I’ll need to look into whether this can be improved.

As for my next steps, I need to test the integrated code with the Nano and conveyor belt using real fruits. Once that’s working, we will start getting the Raspberry Pi and servo to work. In parallel, I can tune the rottenness classification threshold for apples and oranges. The AlexNet classifier isn’t urgent, since our classification system currently seems to be meeting the timing and accuracy requirements, but I’ll work on implementing it once we’re in good shape with the above items.

Kushagra’s Status Report for 04/24/21

- Started integration testing. So far, we can take pictures from the cameras, pass them to the algorithms, and get a final result.

- Almost done with the servo + shield (should have it running by Monday). After that, we will have the full system integrated (i.e., from taking a picture to controlling the gate via the Jetson Nano). This is the next major step for me.