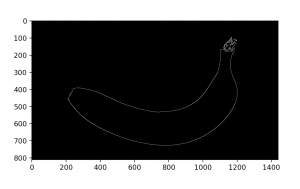

This week, my team and I finished integrating the diverter into our project and tested it. First, we had to figure out how to make the servo drive the diverter. We initially considered screwing the servo's arms directly into the diverter, but that wouldn't have worked because the servo's body would not have been held in place. After some trial and error, we found a solution. I then integrated the servo code with the rest of our code. After that, I set up all of the product's components in the positions they will occupy in the final setup. Next, I looked at the camera streams to work out the right camera positions and mounted the cameras there. Finally, I updated the rottenness threshold to account for shadows and ran several tests on good and rotten bananas to confirm that they were being detected, classified, and diverted correctly. On average, the diverter received the diversion signal about 0.08 seconds after the banana image was read, so our entire pipeline took only around 0.08 seconds, which comfortably meets our requirements.
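As a rough illustration of how an average read-to-diversion latency like the 0.08 seconds above can be measured, here is a minimal Python sketch. It assumes the pipeline can be split into three steps; `read_image`, `classify`, and `send_divert_signal` are hypothetical stand-ins, not our actual function names. The timer starts only after the image has been read, matching how the latency is described above.

```python
import time
from statistics import mean
from typing import Callable, List


def measure_latency(read_image: Callable[[], object],
                    classify: Callable[[object], bool],
                    send_divert_signal: Callable[[bool], None],
                    trials: int = 10) -> List[float]:
    """Return per-trial latency (seconds) from image read to diversion signal.

    All three callables are hypothetical placeholders for the camera,
    classifier, and servo code.
    """
    latencies = []
    for _ in range(trials):
        frame = read_image()
        start = time.perf_counter()   # start timing once the image has been read
        is_rotten = classify(frame)   # detection + rottenness classification
        send_divert_signal(is_rotten) # tell the servo whether to divert
        latencies.append(time.perf_counter() - start)
    return latencies


if __name__ == "__main__":
    # Dummy stand-ins so the sketch runs without hardware (hypothetical).
    samples = measure_latency(lambda: "frame", lambda f: False, lambda r: None, trials=5)
    print(f"mean latency: {mean(samples):.4f} s")
```

Using `time.perf_counter` rather than `time.time` gives a monotonic, high-resolution clock, which suits short intervals like these.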

Currently, my team and I are working on the final video and poster. We're also working on image segmentation for carrots, and I'm building an AlexNet classifier for rottenness to see how well it would have performed and how long it would have taken. We'll continue this work through Monday and start writing our final report on Tuesday. I'm happy that everything seems to have worked out for the project so far, and we're now in the final stretch of presenting it!

I would like to thank all of the instructors and TAs, especially Prof. Savvides and Uzair, for all of their help and guidance and for a wonderful semester!