- Got one camera working with the Jetson Nano and can now take pictures automatically. Next step is to process a livestream, as recommended in the feedback.

- Picked up the rollers for the conveyor belt from Home Depot and tweaked the design for mounting the motor in the rollers (we will now glue a wooden piece to the inside of the pipe and secure it to the shaft of the motor).

- For next week, my main goal is to continue working on the conveyor belt (hopefully have it finished) and to order and install the second USB camera.
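Processing a livestream instead of saving periodic snapshots could look roughly like the minimal sketch below. It assumes frames come from an object whose `read()` method returns `(ok, frame)`, which is the interface of OpenCV's `cv2.VideoCapture`; `frames_from_capture`, `sample_stream` and `every_n` are hypothetical names, not our actual Nano code.

```python
from typing import Iterable, Iterator, Tuple, TypeVar

Frame = TypeVar("Frame")


def frames_from_capture(capture) -> Iterator:
    """Yield frames until the capture object reports failure.

    Assumes a cv2.VideoCapture-style object whose read() returns (ok, frame).
    """
    while True:
        ok, frame = capture.read()
        if not ok:
            return
        yield frame


def sample_stream(frames: Iterable[Frame], every_n: int) -> Iterator[Tuple[int, Frame]]:
    """Yield (index, frame) for every n-th frame so slower processing can keep up."""
    if every_n < 1:
        raise ValueError("every_n must be >= 1")
    for index, frame in enumerate(frames):
        if index % every_n == 0:
            yield index, frame
```

On the Nano, something like `sample_stream(frames_from_capture(cv2.VideoCapture(0)), every_n=5)` would hand every fifth frame to the classifier; the sampling rate is a placeholder to tune against the belt speed.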

Team Status Report for 03/27/21

Our team made good progress this week on various elements of our project. We now have two masks, one for the rotten parts and one for the good parts of a banana, which we will use to calculate the percentage of rotten area in a banana. We have also started researching our secondary ML solution and have looked at AlexNet so far. We had a meaningful discussion with Prof. Tamal and our TA Uzair, who gave us the great idea of using a live feed or burst pictures instead of taking pictures periodically; we discussed this further as a team and decided it would better address the timing concerns on our conveyor belt. We also know that building a conveyor belt is an important part of our testing, so we have started building it. We made good progress today in the woodshop: we found free wood pieces that worked for us and cut all the parts necessary for our conveyor belt, including the wooden base, the wooden side stands, and the PVC rollers. Next, we have to attach the motor to the PVC rollers with woodwork, and we have a few ideas on how to do that. We are on schedule for our project as of now. Kush is also going to work more on the Nano early this coming week, and Ishita K. and Ishita S. should have developed a good mask-based rottenness detector and started on the ML plan. Our conveyor belt should be ready by the middle of the coming week.

Ishita Sinha’s Status Report for 03/27/21

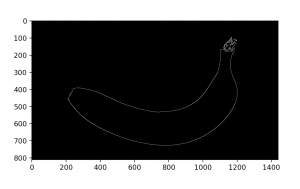

This week, I worked on implementing edge detection for separating the fruit from the background. We may need this when we introduce multiple fruits: separating each fruit from the background lets us detect which fruit it is by examining the colour within the designated area and then run the appropriate algorithms. The image above shows the results of running edge detection on this banana.
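The post doesn't say which edge detector was used. As a hedged illustration of the idea, the sketch below swaps in a plain 3x3 Sobel gradient-magnitude detector on a grayscale image stored as nested lists; in practice OpenCV's `cv2.Canny` would be the more likely choice, and `sobel_edges` and its `threshold` parameter are hypothetical.

```python
import math
from typing import List, Sequence

# 3x3 Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_edges(gray: Sequence[Sequence[float]], threshold: float) -> List[List[int]]:
    """Return a 0/1 edge map: 1 where the Sobel gradient magnitude >= threshold.

    Border pixels are left as 0 because the 3x3 window doesn't fit there.
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = gray[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 1
    return edges
```

The resulting edge map outlines the fruit; the region inside that outline is where the colour analysis would then run.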

I developed the first classifier for our product, the percentage-area rottenness classifier. It considers the good parts of the fruit and the seemingly bad parts and computes the percentage rottenness of the fruit. If the percentage is above a certain threshold, it classifies the fruit as rotten; otherwise, it classifies it as good. Ishita Kumar worked on segmenting the good versus bad parts of the banana, and I pass that result into my classifier so that the image can be classified. Over the next week, I plan to determine an optimal threshold by testing the classifier on a large number of Google Images results, as well as some manually taken images if possible. We have found a Kaggle dataset containing images of rotten versus good bananas, so I plan to use it to determine the threshold.
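A minimal sketch of the percentage-area classifier, and of picking its threshold from labelled examples such as the Kaggle images, is below. It assumes the two masks are disjoint 0/1 grids of the same size and that plain accuracy is the metric to maximise; `rotten_percentage`, `classify` and `best_threshold` are hypothetical names.

```python
from typing import Iterable, Optional, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 0/1 per pixel


def rotten_percentage(rotten_mask: Mask, good_mask: Mask) -> float:
    """Percentage of the fruit's segmented area covered by the rotten mask.

    Assumes the two masks don't overlap, so their sum is the fruit area.
    """
    rotten = sum(sum(row) for row in rotten_mask)
    good = sum(sum(row) for row in good_mask)
    total = rotten + good
    if total == 0:
        raise ValueError("both masks are empty; no fruit found")
    return 100.0 * rotten / total


def classify(percent_rotten: float, threshold: float) -> str:
    """Rotten if the rotten area is above the threshold, else good."""
    return "rotten" if percent_rotten > threshold else "good"


def best_threshold(samples: Sequence[Tuple[float, str]],
                   candidates: Iterable[float]) -> Tuple[Optional[float], float]:
    """Pick the candidate threshold with the highest accuracy on labelled samples.

    samples holds (percent_rotten, true_label) pairs; ties keep the first candidate.
    """
    if not samples:
        raise ValueError("need at least one labelled sample")
    best_t, best_acc = None, -1.0
    for t in candidates:
        correct = sum(classify(p, t) == label for p, label in samples)
        acc = correct / len(samples)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc
```

For example, `best_threshold([(10, "good"), (20, "good"), (60, "rotten"), (80, "rotten")], range(0, 101, 10))` returns `(20, 1.0)`. With a real dataset, holding out part of the images to check the chosen threshold would guard against overfitting it.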

I also looked into AlexNet to develop the second classifier. I have started working on it and plan to have it working in the coming week. I can test it using the Kaggle dataset.

Regarding the use of the 2 classifiers and deciding which one is optimal, I was thinking that instead of choosing one of them, we could first run the percentage rottenness algorithm. If the percentage is above a “rotten” threshold, we classify the fruit as rotten. If it’s below a “good” threshold, we classify it as good. However, if it falls in the gray area between these 2 thresholds, we can classify it using the AlexNet classifier. I still need to discuss this with my teammates and the instructors. This approach would also depend on how well the AlexNet classifier works.
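The proposed two-threshold scheme could be sketched as follows. It assumes the AlexNet model is wrapped in a callable (`cnn_classify` here is a hypothetical stand-in) that only runs for fruit in the gray area; the threshold values used are placeholders.

```python
from typing import Callable, TypeVar

Image = TypeVar("Image")


def cascade_classify(percent_rotten: float, image: Image,
                     good_threshold: float, rotten_threshold: float,
                     cnn_classify: Callable[[Image], str]) -> str:
    """Use the cheap area rule when it is decisive; defer gray-area fruit to the CNN."""
    if not good_threshold < rotten_threshold:
        raise ValueError("good_threshold must be below rotten_threshold")
    if percent_rotten > rotten_threshold:
        return "rotten"
    if percent_rotten < good_threshold:
        return "good"
    # Gray area (including values exactly on a threshold): ask the second classifier.
    return cnn_classify(image)
```

One side benefit of this design is timing: the slower CNN only runs for ambiguous fruit, which matters on a moving belt. Treating values exactly on a threshold as gray-area is an arbitrary choice here.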

As part of the team, we all started working on the conveyor belt this week and plan to have it done by the end of next week. My progress, as well as the team’s progress, is on schedule so far.

Ishita Kumar’s Status Report for 03/27/21

This week I met up with Ishita Sinha to work together on our rottenness detector. I improved the mask for detecting rotten bananas by plotting rotten bananas on an HSV graph and then testing values on images of rotten bananas. My algorithm now has two separate masks, one for the rotten parts and one for the good parts of the banana; previously, I had a single mask for the whole banana. Ishita Sinha and I discussed ways to improve and implement our rottenness classifier, and we decided that using the areas found by our image segmentation masks would be a good start for calculating the percentage of rotten parts in a fruit, so we are implementing that. I also researched AlexNet and double-checked an online Kaggle dataset I found earlier to see if it would fit our needs, and I believe it would as of now. I also worked with my team to build the conveyor belt this week; we made good progress with the woodwork and will continue working on it early next week. I am on schedule for my tasks.
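Splitting one banana mask into separate good and rotten masks could look like the sketch below. It uses Python's standard `colorsys` module (hue converted to degrees, saturation and value in [0, 1]) rather than OpenCV, and the HSV ranges are hypothetical placeholders, not the values found from the HSV plots.

```python
import colorsys
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
Bounds = Tuple[Tuple[float, float], Tuple[float, float], Tuple[float, float]]

# Hypothetical (hue degrees, saturation, value) ranges; real ones come from HSV plots.
GOOD_RANGE: Bounds = ((40.0, 70.0), (0.4, 1.0), (0.5, 1.0))
ROTTEN_RANGE: Bounds = ((0.0, 40.0), (0.2, 1.0), (0.05, 0.5))


def pixel_hsv(rgb: RGB) -> Tuple[float, float, float]:
    """Convert an 8-bit RGB pixel to (hue in degrees, saturation, value)."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    return h * 360.0, s, v


def in_range(hsv: Tuple[float, float, float], bounds: Bounds) -> bool:
    """True if every channel lies inside its inclusive (low, high) bound."""
    return all(lo <= c <= hi for c, (lo, hi) in zip(hsv, bounds))


def make_masks(image: Sequence[Sequence[RGB]]) -> Tuple[List[List[int]], List[List[int]]]:
    """Return (good_mask, rotten_mask) as 0/1 grids; a pixel is never in both."""
    good, rotten = [], []
    for row in image:
        hsvs = [pixel_hsv(p) for p in row]
        good_row = [1 if in_range(h, GOOD_RANGE) else 0 for h in hsvs]
        # Exclude pixels already marked good so the two masks stay disjoint.
        rotten_row = [1 if in_range(h, ROTTEN_RANGE) and not g else 0
                      for h, g in zip(hsvs, good_row)]
        good.append(good_row)
        rotten.append(rotten_row)
    return good, rotten
```

Keeping the masks disjoint means their areas can be added directly to get the fruit's total area when computing the rotten percentage.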

Kushagra’s Status Report for 03/13/21

- Worked on getting the camera to take pictures every 4 s and save them to disk

- Worked more on the design for the conveyor belt. Finalized dimensions, materials (including parts that need to be 3D printed, such as bearings), and how the different parts will fit together. Also sketched different views of the conveyor belt, as advised by the people in the machine shop. Next step is to prototype the design with cardboard and glue.
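Taking a picture every 4 s and saving it to disk could be sketched as below. `capture_frame` is a hypothetical callable returning encoded image bytes (on the Nano it would wrap the camera), and `clock` and `sleep` are injectable so the loop can be tested without actually waiting.

```python
import os
import time
from typing import Callable, List


def capture_periodically(capture_frame: Callable[[], bytes], out_dir: str,
                         interval_s: float = 4.0, count: int = 3,
                         clock: Callable[[], float] = time.monotonic,
                         sleep: Callable[[float], None] = time.sleep) -> List[str]:
    """Save `count` frames to out_dir, one every interval_s seconds; return the paths."""
    os.makedirs(out_dir, exist_ok=True)
    start = clock()
    paths = []
    for i in range(count):
        # Wait until this shot's scheduled time so capture/save time doesn't add drift.
        delay = start + i * interval_s - clock()
        if delay > 0:
            sleep(delay)
        path = os.path.join(out_dir, f"frame_{i:04d}.jpg")
        with open(path, "wb") as f:
            f.write(capture_frame())
        paths.append(path)
    return paths
```

Scheduling each shot against the start time, instead of sleeping a fixed 4 s after each save, keeps the interval steady even if saving a frame takes a noticeable fraction of a second.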

Ishita Sinha’s Status Report for 03/13/21

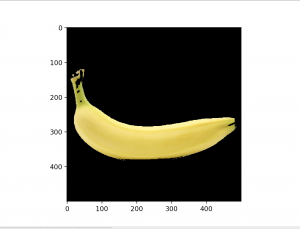

This week, I worked on implementing a colour analysis algorithm that could be used to identify colours in the picture to determine the rottenness of the fruit, along with identifying the fruit itself. Earlier, my algorithm didn’t use the HSV colour space, so it wasn’t working well; after switching to HSV, it’s providing better results. I still need to test whether it works well with images of rotten bananas, since so far I’ve been working only with images of good bananas. Next week, Ishita Kumar and I plan to meet to integrate our code and develop a classifier for rotten v/s fresh bananas. I also plan to work on the Design Review Report in the upcoming week.
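One way to identify colours in HSV is to bin each pixel's hue into coarse colour names and report the share of each, as in the sketch below. It uses Python's standard `colorsys` module; the hue bands and the saturation/value cut-offs are hypothetical, illustrative values rather than the ones in our algorithm.

```python
import colorsys
from collections import Counter
from typing import Dict, Iterable, Tuple

RGB = Tuple[int, int, int]

# Hypothetical hue bands in degrees, covering [0, 360); boundaries are illustrative.
HUE_BANDS = [
    ("red", 0.0, 20.0),
    ("orange/brown", 20.0, 45.0),
    ("yellow", 45.0, 70.0),
    ("green", 70.0, 170.0),
    ("blue/purple", 170.0, 330.0),
    ("red", 330.0, 360.0),
]


def colour_name(rgb: RGB, min_sat: float = 0.15, min_val: float = 0.15) -> str:
    """Map one 8-bit RGB pixel to a coarse colour name via HSV."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    if v < min_val:
        return "black"       # too dark for hue to be meaningful
    if s < min_sat:
        return "grey/white"  # too unsaturated for hue to be meaningful
    degrees = h * 360.0
    for name, lo, hi in HUE_BANDS:
        if lo <= degrees < hi:
            return name
    raise ValueError(f"hue out of range: {degrees}")


def colour_histogram(pixels: Iterable[RGB]) -> Dict[str, float]:
    """Fraction of pixels falling into each coarse colour, most common first."""
    counts = Counter(colour_name(p) for p in pixels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no pixels")
    return {name: n / total for name, n in counts.most_common()}
```

Working in HSV is what makes this simple: hue separates "which colour" from brightness, so a shadowed yellow banana still lands in the yellow band.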

Team Status Report for 03/13/21

The most significant risk we anticipate as of now is building the conveyor belt. None of us has mechanical experience, so this will definitely be challenging. As a contingency plan, we plan to use a treadmill, since the conveyor belt isn’t part of our product: our product is meant to be integrated into existing conveyor belt systems, so if our conveyor belt doesn’t work, it doesn’t jeopardise the product itself.

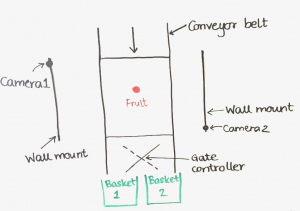

The design of the system was finalised and updated, as has been shown below:

We had to make this change because the earlier design, which used pistons, might not have been able to meet the requirements of the product. We were using the pistons to push an item off the conveyor belt. However, doing that would require a lot of force from the pistons, which may not be feasible. The pistons might also fail to meet the speed requirements, since they operate quite slowly. Thus, we shifted to this gate model. Corresponding to this change, we updated our model to sort fruit into only 2 categories, good v/s rotten, so the gate can rotate in one of 2 directions to push the fruit into the appropriate basket. We updated the block diagram correspondingly.
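The gate model adds a timing constraint that a short calculation makes concrete. The sketch below assumes the roller drives the belt without slip, so belt speed is v = pi * d * rpm / 60, that classification must finish before the gate starts moving, and that the gate needs `actuation_time_s` to swing into place; all distances, speeds and the good-to-left mapping are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class GateCommand:
    direction: str    # which way the gate rotates
    fire_at_s: float  # seconds after the photo was taken


def belt_speed_m_per_s(roller_diameter_m: float, motor_rpm: float) -> float:
    """Surface speed of a belt driven by a roller without slip: v = pi * d * rpm / 60."""
    return math.pi * roller_diameter_m * motor_rpm / 60.0


def plan_gate(label: str, distance_to_gate_m: float, belt_speed: float,
              actuation_time_s: float, processing_time_s: float) -> GateCommand:
    """Decide the gate direction and when to trigger it, measured from photo time."""
    if label not in ("good", "rotten"):
        raise ValueError(f"unknown label: {label!r}")
    travel_time_s = distance_to_gate_m / belt_speed
    fire_at_s = travel_time_s - actuation_time_s
    # Classification must finish before the gate has to start moving.
    if fire_at_s < processing_time_s:
        raise ValueError("not enough time: slow the belt or move the camera upstream")
    direction = "left" if label == "good" else "right"  # hypothetical mapping
    return GateCommand(direction, fire_at_s)
```

For instance, with a 0.5 m camera-to-gate distance, a 0.25 m/s belt, 0.5 s of gate actuation and 1 s of processing, the gate would fire 1.5 s after the photo; the same arithmetic shows why a slow actuator, like the pistons, forces either a slower belt or a longer gap between camera and gate.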

We updated the schedule to reflect a more feasible timeline, given that midterms are currently going on. This past week, Kush worked on understanding the Nano, and Ishita Kumar and Ishita Sinha worked on improving results from their image segmentation and colour analysis. For the upcoming week, they’ll be integrating their code and working on building a good classifier. Besides this, the team will also be working on the Design Review Report.

Ishita Kumar’s Status Report for 03/13/21

I worked on improving my image segmentation algorithm this week. I noticed a significant improvement over last week when using HSV color thresholding, because I graphed the HSV range in one banana image and used that graph to decide my thresholds instead of manually fixing the range through trial and error. This HSV color threshold works well on multiple fresh bananas too; I tested my algorithm on images of bananas in different positions. I now need to work on segmenting rotten bananas as well. Ishita Sinha and I are going to merge our code and then work on classification. I am researching classifiers, which I will discuss with Ishita Sinha. I will also work on the design document due next weekend, and our team is going to meet to discuss it. We want to finish the classification code for rotten vs fresh by next week.
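Deriving thresholds from the graphed HSV values, rather than by trial and error, could be automated roughly as below: take sample pixels from a banana, convert them to HSV, and keep the range between two percentiles per channel. This is a sketch under stated assumptions; the 5th/95th percentiles and the names `percentile` and `hsv_bounds` are hypothetical choices, and the values use the standard `colorsys` scale where every channel is in [0, 1].

```python
import colorsys
import math
from typing import Iterable, List, Sequence, Tuple

RGB = Tuple[int, int, int]


def percentile(sorted_vals: Sequence[float], q: float) -> float:
    """Linearly interpolated percentile of already-sorted values, q in [0, 100]."""
    if not sorted_vals:
        raise ValueError("no values")
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def hsv_bounds(pixels: Iterable[RGB], low_q: float = 5.0,
               high_q: float = 95.0) -> List[Tuple[float, float]]:
    """Per-channel (low, high) HSV bounds covering the central share of sample pixels.

    Hue wraps around at red, so this simple approach only suits hues away
    from 0, such as yellow bananas.
    """
    hsvs = [colorsys.rgb_to_hsv(*(c / 255.0 for c in p)) for p in pixels]
    if not hsvs:
        raise ValueError("no pixels")
    bounds = []
    for channel in zip(*hsvs):
        vals = sorted(channel)
        bounds.append((percentile(vals, low_q), percentile(vals, high_q)))
    return bounds
```

Trimming the extreme percentiles keeps a few stray background or highlight pixels from stretching the range, which is the main risk when thresholds are read off a plot of a single image.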

Kushagra’s Status Report for 03/06/21

- Finalized the conveyor belt design to some extent; 1) a rack and pinion and 2) a treadmill are the contingency plans.

- Ordered the NVIDIA Jetson Nano 2GB and the Raspberry Pi camera. Started playing around with them but haven’t gotten hello world to work yet. Ideally, want to have the camera take pictures and save them to disk within the next week.

- Made design choices for the non-mechanical parts and helped write the design presentation.

Ishita Sinha’s Status Report for 03/06/21

This week, my teammates and I worked on finalizing the parts we’d need for the critical components and ordered some of them so we could start tinkering with them. We also worked on finalizing how our entire design would work and on determining backups for the different components in the design.

I worked on implementing the code for a color analysis algorithm so I could integrate it with Ishita Kumar’s code. She’s working on the image segmentation code for identifying the fruit and for separating it from its background. I would then plug in my algorithm to analyze the colors of the fruit in order to predict the rottenness of the fruit.

In the upcoming week, I plan to finalize the code for the color analysis and integrate the code we have so far, so that we can develop a classifier for ripe v/s rotten bananas over the next 1 to 1.5 weeks. For now, I’m working with Google Images. As a team, I believe we should also start tinkering with the Jetson Nano to see how we can program it and, to begin with, figure out how to program the cameras to take pictures at given intervals.

We are behind the schedule we had planned, but it was quite ambitious, so even though we’re lagging, we’re still doing okay. We’re working on an updated schedule based on our actual progress. We’ll need to speed up work over the next few weeks to have enough time for testing.