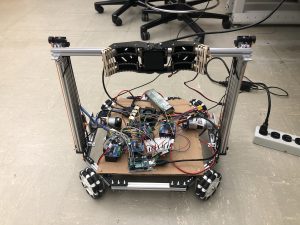

This past week, the team worked together to start making the robot autonomous. We also gave demos during class time, where we showed the subsystems and received feedback.

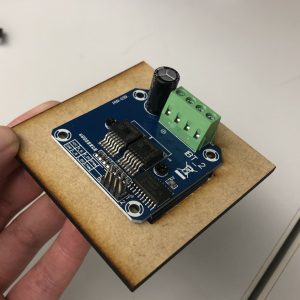

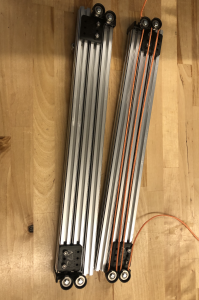

Originally, the code for the chassis, slide, and claw subsystems was separate. When we tried to combine it, some motors stopped moving, and we traced this strange bug to the Arduino Servo library, which disables 2 PWM pins on the board. This meant that we could not run our entire system across 2 boards, since we needed all 12 PWM pins for our 6 motors. We concluded that we needed either a servo shield to connect the servo to the Xavier (the Xavier has GPIO pins, so the servo cannot be connected to it directly) or a larger Arduino board. We were also seeing slight communication delays in the chassis because one motor was on a separate Arduino from the others. Hence, we replaced our 2 Arduino Unos with a single Arduino Mega 2560. Since the Mega has 54 digital pins, 15 of which support PWM, we can run all our subsystems on one board, which also eliminates the communication issues between the 2 boards.
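The pin arithmetic behind this decision can be checked with a short sketch. The numbers come from the paragraph above; the assumption that the Servo library would also cost 2 PWM pins on the Mega is a deliberately pessimistic worst case for illustration, not something we measured.

```python
# PWM pin budget for the motor controllers (numbers from the text above).
MOTORS = 6
PWM_PINS_PER_MOTOR = 2       # 12 PWM pins for 6 motors
SERVO_LIB_PWM_COST = 2       # PWM pins disabled by the Servo library

UNO_PWM_PINS = 6             # 12 PWM pins across 2 Unos
MEGA_PWM_PINS = 15


def pwm_pins_left(total_pwm_pins, servo_cost=SERVO_LIB_PWM_COST):
    """PWM pins still usable for motors once the Servo library is loaded."""
    return total_pwm_pins - servo_cost


pins_needed = MOTORS * PWM_PINS_PER_MOTOR
two_unos = pwm_pins_left(2 * UNO_PWM_PINS)
# Worst-case assumption: the Mega loses 2 PWM pins as well.
one_mega = pwm_pins_left(MEGA_PWM_PINS)

print(f"needed={pins_needed}, two Unos={two_unos}, one Mega={one_mega}")
print("two Unos enough:", two_unos >= pins_needed)
print("one Mega enough:", one_mega >= pins_needed)
```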

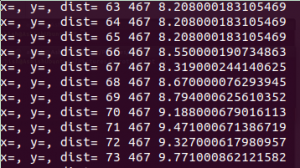

For our navigation code, we first focused on getting the robot to navigate to an AprilTag autonomously. Currently, we rotate and move the robot by powering the chassis motors for a given amount of time. In our tests, running the motors for 1 second consistently produced 0.25 m of movement. However, the translational movement is prone to some drift and acceleration over larger distances. Hence, we plan to keep our movements mostly incremental and to purchase an IMU to help correct the orientation drift.
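A minimal sketch of how a distance could be turned into short timed bursts, assuming the 1 second to 0.25 m calibration holds for each burst. The function name `step_durations` and the 0.5 m cap per burst (`MAX_STEP_M`) are hypothetical values chosen for illustration.

```python
import math

SPEED_M_PER_S = 0.25  # from our tests: 1 s of motor power is about 0.25 m
MAX_STEP_M = 0.5      # hypothetical cap per burst, to limit drift buildup


def step_durations(distance_m, speed=SPEED_M_PER_S, max_step=MAX_STEP_M):
    """Split a translation into equal bursts; return motor-on times in seconds."""
    if distance_m < 0:
        raise ValueError("distance_m must be non-negative")
    if distance_m == 0:
        return []
    n_steps = math.ceil(distance_m / max_step)  # number of bursts needed
    step_m = distance_m / n_steps               # equal length for every burst
    return [step_m / speed] * n_steps


print(step_durations(0.25))  # one burst for a short move
print(step_durations(1.0))   # a longer move is split into several bursts
```

Keeping each burst short leaves room to re-check the robot's position between bursts instead of trusting one long open-loop move.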

Video of 1 second movement to 0.25m translation

Similarly, we found that we could rotate the robot by a given angle fairly consistently by making the motor power-on time proportional to the desired rotation.

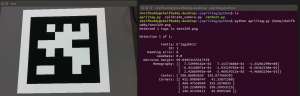

We then took these concepts and got our robot to navigate to an AprilTag in its field of view. The AprilTag detection provides the horizontal and depth distances of the tag from the camera center as well as its yaw angle. Using this information, we wrote an algorithm for our robot to first detect the AprilTag, rotate itself so that it squarely faces the tag, translate horizontally until it is in front of the tag, and then translate depth-wise up to the tag. We still ran into a few drifting issues that we hope to resolve with an IMU, but the results were generally good.
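The rotate, then translate horizontally, then translate depth-wise ordering can be sketched as a function that picks the next timed move from the latest tag pose. This is an illustrative sketch, not our actual code: `TagPose`, `next_move`, the tolerances, the stand-off distance, the sign conventions, and the 45 degrees per second turn rate are all assumed placeholders that would need calibration.

```python
from dataclasses import dataclass

SPEED_M_PER_S = 0.25        # from our tests: 1 s of motor power is about 0.25 m
TURN_RATE_DEG_PER_S = 45.0  # hypothetical placeholder, must be calibrated


@dataclass
class TagPose:
    x_m: float      # horizontal offset of the tag from the camera center
    z_m: float      # depth distance to the tag
    yaw_deg: float  # yaw angle of the tag relative to the camera


def next_move(pose, yaw_tol_deg=2.0, pos_tol_m=0.02, standoff_m=0.25):
    """Return (action, signed_amount, motor_on_seconds), or None when done."""
    # 1. Face the tag squarely before translating.
    if abs(pose.yaw_deg) > yaw_tol_deg:
        return ("rotate", pose.yaw_deg, abs(pose.yaw_deg) / TURN_RATE_DEG_PER_S)
    # 2. Slide sideways until the tag is centered.
    if abs(pose.x_m) > pos_tol_m:
        return ("translate_x", pose.x_m, abs(pose.x_m) / SPEED_M_PER_S)
    # 3. Drive forward, stopping a stand-off distance short of the tag.
    remaining_m = pose.z_m - standoff_m
    if remaining_m > pos_tol_m:
        return ("translate_z", remaining_m, remaining_m / SPEED_M_PER_S)
    return None


print(next_move(TagPose(x_m=0.5, z_m=1.25, yaw_deg=90.0)))
```

Calling `next_move` again with a fresh detection after each move means the offsets are re-measured once the robot has rotated, rather than reused from the first detection.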

Our plan is to place an AprilTag on the shelf and on the basket so that the robot can navigate both to and from the shelf this way.

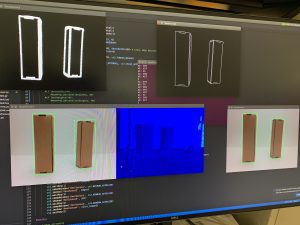

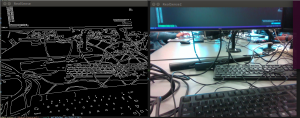

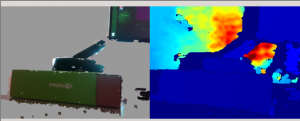

We then focused on scanning a shelf for the laser-pointed object. To do this, the robot uses edge detection to get the bounding boxes of the objects in front of it and runs our laser point detection algorithm. It can then determine which object is being selected and center itself in front of that object for grabbing.
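A minimal sketch of the selection step, assuming the detectors have already produced bounding boxes as `(x, y, w, h)` pixel tuples and a laser point as `(x, y)`. The function names, the tie-break on smallest area, and the 640-pixel image width are hypothetical choices for illustration.

```python
def select_target(boxes, laser_xy):
    """Return the index of the box containing the laser point, or None."""
    lx, ly = laser_xy
    hits = [
        i for i, (x, y, w, h) in enumerate(boxes)
        if x <= lx <= x + w and y <= ly <= y + h
    ]
    if not hits:
        return None
    # If boxes overlap, prefer the smallest one (assumed tie-break).
    return min(hits, key=lambda i: boxes[i][2] * boxes[i][3])


def centering_offset_px(box, image_width):
    """Horizontal distance from the image center to the box center, in pixels."""
    x, _, w, _ = box
    return (x + w / 2) - image_width / 2


boxes = [(10, 50, 100, 120), (150, 50, 100, 120), (300, 50, 100, 120)]
target = select_target(boxes, laser_xy=(350, 100))
if target is not None:
    print(target, centering_offset_px(boxes[target], image_width=640))
```

A positive offset means the selected object sits to the right of the image center under this convention, so the robot would translate until the offset is close to zero.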

We tested this with a setup composed of 2 styrofoam boards found in the lab, arranged to replicate a shelf. We placed one board flat on 2 chairs and the other vertically, at a 90-degree angle, behind it.

Video of centering to laser pointed box (difficult to see in the video but the right-most item has a laser point on it):

Our next steps are to get the robot to actually grab the appropriate object and to combine our algorithms. We also plan on purchasing a few items that we believe will improve our current implementation, such as an IMU for drift-related issues and a battery connector converter to account for the Xavier’s unconventional battery jack port (we have been unable to run the Xavier on a battery because of this issue). The camera is also currently just taped onto the claw since we are still writing our navigation implementation, but we will mount it wherever is most appropriate once the implementation is complete. Finally, we plan to keep improving our implementation and to be fully ready for testing by the end of next week at the latest.