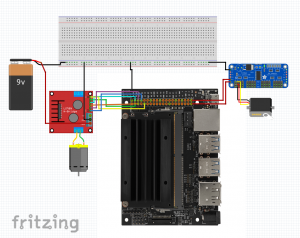

This week I continued my research into the computer vision component of our project. After speaking with Tao, our team decided to use the Jetson Xavier NX rather than the Jetson Nano, because the Xavier NX has 8GB of memory compared to the 2GB in the Jetson Nano. I therefore researched cameras that would be compatible with this new board. I looked into the camera in the ECE inventory list that is made specifically for the Xavier NX, but it has a very low FOV (only 75 degrees). I then did more research into the SainSmart IMX219, which appears to be compatible with the Xavier NX as well and has a wider FOV (160 degrees), so we will most likely go ahead with this camera.

I also talked about depth sensing with Tao, and he suggested that we look into stereo cameras if we want a camera with depth-sensing capabilities. Unfortunately, many of the stereo cameras compatible with the Xavier NX are either quite expensive or sold out. As an alternative, I looked into pairing the camera with a distance sensor, such as an ultrasonic sensor from Adafruit, to help the robot navigate.
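As a rough sketch of how an ultrasonic reading could feed into navigation, the snippet below converts a round-trip echo time into a distance and checks it against a stop threshold. It assumes a sensor that reports echo time in seconds; the function names, the 0.30 m threshold, and the speed-of-sound constant are illustrative assumptions, not values we have chosen for the robot.

```python
# Approximate speed of sound in dry air at about 20 degrees C (assumption).
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_to_distance_m(echo_seconds: float) -> float:
    """Convert a round-trip echo time (seconds) to a one-way distance (meters)."""
    if echo_seconds < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return SPEED_OF_SOUND_M_PER_S * echo_seconds / 2.0


def should_stop(echo_seconds: float, stop_distance_m: float = 0.30) -> bool:
    """Hypothetical helper: True when an obstacle is within stop_distance_m."""
    return echo_to_distance_m(echo_seconds) <= stop_distance_m
```

In practice the echo time would come from timing the sensor's echo pin on the Jetson's GPIO, which is not shown here.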

This week I also looked into the AprilTag detection software, which was originally written in C and Java, and I found a Python tutorial that I followed. I don’t have the software fully operating yet, but I hope that it will be functional by next week. I also worked on the design presentation with my team, and we discussed our progress and project updates.

Next week I plan to continue working on the AprilTag detection code and to try to set up the camera from the ECE inventory with the Jetson Xavier NX.