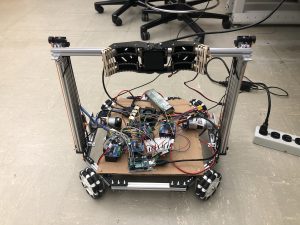

This week I worked mainly on the navigation code and on the design of the middle board that holds the electronic parts. I implemented a program in which the Jetson sends directions to the two Arduinos, and the motors spin according to omnidirectional drive rules. Attached is a video that demonstrates this behavior.
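As a rough illustration of what such omnidirectional rules can look like, here is a minimal sketch that assumes a four-wheel mecanum-style layout. The direction names, the wheel names, and the front/rear split between the two Arduinos are hypothetical and chosen only for illustration; the actual program may be organized differently.

```python
# Hypothetical direction names mapped to (forward, strafe_right, rotate_cw).
DIRECTIONS = {
    "forward": (1, 0, 0),
    "backward": (-1, 0, 0),
    "right": (0, 1, 0),
    "left": (0, -1, 0),
    "rotate_cw": (0, 0, 1),
    "rotate_ccw": (0, 0, -1),
    "stop": (0, 0, 0),
}


def wheel_signs(direction):
    """Return the spin sign of each wheel (+1 forward, -1 reverse, 0 stopped).

    Uses the standard mecanum mixing rule; this wheel layout is an assumption.
    """
    forward, strafe, rotate = DIRECTIONS[direction]
    return {
        "front_left": forward + strafe + rotate,
        "front_right": forward - strafe - rotate,
        "rear_left": forward - strafe + rotate,
        "rear_right": forward + strafe - rotate,
    }


def commands_per_arduino(direction):
    """Group wheel signs by board, assuming one Arduino per wheel pair."""
    signs = wheel_signs(direction)
    return {
        "front": (signs["front_left"], signs["front_right"]),
        "rear": (signs["rear_left"], signs["rear_right"]),
    }
```

For example, `commands_per_arduino("right")` gives opposite signs for the two wheels on each board, which is what produces sideways motion in this kind of layout.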

One promising thing I noticed is that the motors change direction at the same time, even though each motor is controlled by a separate motor driver and the four motors are split across two Arduinos. However, there is a noticeable lag between when the Jetson sends a command to the Arduinos and when the motors respond. This is something to keep in mind once the Jetson sends commands based on camera data, because by the time the motors react, the command may reflect a frame captured slightly earlier.
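One possible way to account for this lag, sketched under assumptions, is to timestamp each frame and ignore commands derived from frames that are too old. The threshold value, the function names, and the choice of "stop" as the fallback are all hypothetical; the threshold in particular would need to be tuned against the measured delay.

```python
import time

# Hypothetical threshold in seconds; should be tuned to the measured lag.
MAX_FRAME_AGE_S = 0.2


def is_fresh(frame_timestamp, now=None, max_age=MAX_FRAME_AGE_S):
    """Return True if the frame is recent enough to act on."""
    if now is None:
        now = time.monotonic()
    return (now - frame_timestamp) <= max_age


def choose_command(direction, frame_timestamp, now=None):
    """Pass the direction through if its frame is fresh, otherwise stop.

    Stopping on stale data is an assumed policy, not the only option.
    """
    return direction if is_fresh(frame_timestamp, now) else "stop"
```

Frame timestamps here are expected to come from `time.monotonic()` at capture time, so that clock adjustments on the Jetson do not affect the age calculation.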

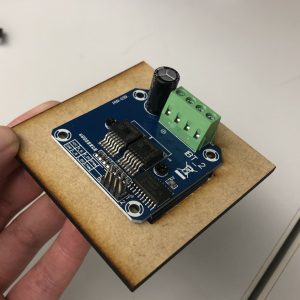

Esther and I also worked on the design of the board that will hold the various electronic parts. We think we don't necessarily have to mount everything on top of the board, but it is useful to create mounting holes for the motor shield, since its heat sink gives it some height. We measured the dimensions of the motor shield. Attached is an image showing how the motor shield would be mounted. I will work on adding the motor shield's dimensions to the design of the larger board, and hopefully have it laser cut on Monday or Wednesday.

To test how the robot currently navigates, I temporarily used a piece of cardboard to hold the various electronics. While testing in the lab room, I ran into the issue that the floor may have too little friction, which causes the wheels to spin at different speeds, so the robot does not move in the intended direction. We plan to add a foam-like layer to the wheels to increase friction. I will also look further into my code and the mechanical structure of the robot to see if there is anything else to troubleshoot.

Next week, I plan to debug the navigation issues, ideally before the demo, laser cut the board that holds the electronic components, and work with Bhumika to integrate computer vision into navigation. Hopefully, the robot will be able to respond to camera data and move accordingly.