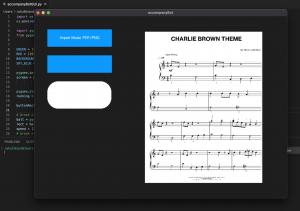

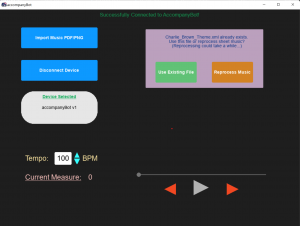

For the final week of classes, I accomplished the tasks I outlined for myself last week. These were a cache prompt in the UI, a mechanism for disconnecting the app from the rest of the system, and disappearing messages that give the user feedback on application events. The cache prompt checks whether the music score the user is inputting has already been processed. If it has, the user is asked whether they would like to use the existing XML or generate a new one (which takes a while). Audiveris takes several seconds to translate each input page of music into XML, so reusing a previous result saves the end user computation time. If no matching XML is found in the cache, the prompt is not displayed and the application goes straight to running an OMR job.
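
The report does not say how an input score is matched against the cache. The following is a minimal Python sketch of the check-then-prompt flow. It assumes the cache is a directory of XML files keyed by a SHA-256 hash of the input file's bytes. The directory name `omr_cache` and the functions `score_key`, `find_cached_xml`, `store_xml`, and `get_xml` are hypothetical. `run_omr` stands in for the Audiveris job and `ask_user_reuse` for the GUI prompt.

```python
import hashlib
from pathlib import Path
from typing import Callable, Optional

CACHE_DIR = Path("omr_cache")  # hypothetical location for cached MusicXML


def score_key(score_path: Path) -> str:
    """Fingerprint a score by hashing its bytes (an assumed matching scheme)."""
    digest = hashlib.sha256()
    with open(score_path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_cached_xml(score_path: Path, cache_dir: Path = CACHE_DIR) -> Optional[Path]:
    """Return the cached XML for this score, or None if it was never processed."""
    candidate = cache_dir / f"{score_key(score_path)}.xml"
    return candidate if candidate.is_file() else None


def store_xml(score_path: Path, xml_text: str, cache_dir: Path = CACHE_DIR) -> Path:
    """Save OMR output under the score's fingerprint for later reuse."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    target = cache_dir / f"{score_key(score_path)}.xml"
    target.write_text(xml_text, encoding="utf-8")
    return target


def get_xml(
    score_path: Path,
    run_omr: Callable[[Path], str],          # slow path, e.g. an Audiveris job
    ask_user_reuse: Callable[[Path], bool],  # the GUI cache prompt
    cache_dir: Path = CACHE_DIR,
) -> str:
    cached = find_cached_xml(score_path, cache_dir)
    # Only prompt when a match exists; otherwise go straight to OMR.
    if cached is not None and ask_user_reuse(cached):
        return cached.read_text(encoding="utf-8")
    xml_text = run_omr(score_path)
    store_xml(score_path, xml_text, cache_dir)
    return xml_text
```

This design hashes the file contents rather than comparing filenames. As a result, a renamed copy of the same score still hits the cache, while an edited file that keeps its old name does not.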

Connecting to the device is done through a large sky-blue button in the app. If something goes wrong with the connection, such as the USB cable coming loose, the application should recognize and report it. Previously, the code printed a message when the device connected and raised Python exceptions when the connection broke. Both have now been replaced by color-coded disappearing messages that appear as an alert at the top of the app. Invalid inputs, such as files that are not PDF/PNG or files that the OMR fails to process, are also reported with this fading alert. Lastly, for completeness, the “connect to device” button now becomes a “disconnect from device” button once a connection is made. This was more complex under the hood. I had to clean up the communication threads each time the USB port was closed, then create new threads on reconnection to avoid reading from or writing to a closed port.
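
A Python `threading.Thread` cannot be started again once it finishes, which is why a reconnection needs fresh threads. The sketch below shows one way to structure this, assuming one reader thread and one writer thread per connection. The `DeviceLink` class, its `open_port` factory, and the `on_alert(message, color)` callback (standing in for the fading GUI alert) are hypothetical names, not the project's actual code. With pyserial, `open_port` might be something like `lambda: serial.Serial("/dev/ttyACM0", 9600, timeout=0.1)`, where the port name is a placeholder.

```python
import queue
import threading
from typing import Any, Callable, List, Optional

Alert = Callable[[str, str], None]  # hypothetical (message, color) callback


class DeviceLink:
    """Hypothetical connect/disconnect wrapper for a serial device."""

    def __init__(self, open_port: Callable[[], Any], on_alert: Alert) -> None:
        self._open_port = open_port  # returns an object with read/write/close
        self._on_alert = on_alert
        self._port: Optional[Any] = None
        self._stop = threading.Event()
        self._threads: List[threading.Thread] = []
        self.outgoing: "queue.Queue[bytes]" = queue.Queue()
        self.incoming: "queue.Queue[bytes]" = queue.Queue()

    @property
    def connected(self) -> bool:
        return self._port is not None

    def connect(self) -> bool:
        if self.connected:
            return True
        try:
            port = self._open_port()
        except (OSError, ValueError) as exc:  # pyserial's SerialException is an OSError
            self._on_alert(f"Could not connect: {exc}", "red")
            return False
        # A finished Thread cannot be restarted, so every connection gets
        # a fresh stop event and fresh worker threads bound to this port.
        stop = threading.Event()
        self._threads = [
            threading.Thread(target=self._reader, args=(port, stop), daemon=True),
            threading.Thread(target=self._writer, args=(port, stop), daemon=True),
        ]
        self._port, self._stop = port, stop
        for t in self._threads:
            t.start()
        self._on_alert("Connected to device", "green")
        return True

    def disconnect(self) -> None:
        """Stop the workers, then close the port. Call this from the GUI
        thread, never from a worker (a thread cannot join itself)."""
        if not self.connected:
            return
        self._stop.set()
        for t in self._threads:
            t.join(timeout=1.0)
        self._port.close()
        self._port, self._threads = None, []
        self._on_alert("Disconnected from device", "gray")

    def _reader(self, port: Any, stop: threading.Event) -> None:
        while not stop.is_set():
            try:
                data = port.read(64)  # returns after the port's read timeout
            except (OSError, ValueError) as exc:  # e.g. USB cable unplugged
                stop.set()
                self._on_alert(f"Connection lost: {exc}", "red")
                return
            if data:
                self.incoming.put(data)

    def _writer(self, port: Any, stop: threading.Event) -> None:
        while not stop.is_set():
            try:
                payload = self.outgoing.get(timeout=0.05)
            except queue.Empty:
                continue  # re-check the stop flag periodically
            try:
                port.write(payload)
            except (OSError, ValueError) as exc:
                stop.set()
                self._on_alert(f"Write failed: {exc}", "red")
                return
```

Each thread receives its own port and stop event as arguments. This means a thread left over from an earlier connection can never touch a port opened by a later one. When the cable is unplugged, the reader reports the error and exits. The GUI can then call `disconnect()` to close the port and reset the button. In most GUI toolkits, `on_alert` should hand the message to the GUI thread rather than update widgets directly from a worker thread.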

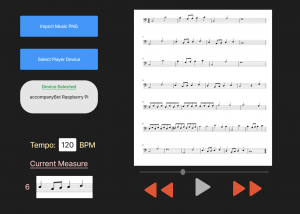

Here is a view of the essentially finished accompanyBot GUI showcasing the new cache message along with a fading alert at the top:

The simplicity of the updated display hides all of the complexity from the end user, which was the intended goal of this design. To verify the new additions, I connected and disconnected an Arduino multiple times. Sometimes I unplugged it, and sometimes I used the software button. Everything ran smoothly in these tests. Performance tests of the integration with the notes scheduler and OMR software were detailed in previous status reports.

That's all for my work on this project. Thanks for reading.