This week I received the Raspberry Pi 4 from ECE Receiving. After picking up the RPi, I worked on setting it up so that we could control and program it. Since we didn’t have a wired keyboard, I installed the VNC Viewer application to connect to the RPi remotely using its IP address. This allowed us to open the RPi’s desktop and type inputs to it. After seeing the full capabilities of the RPi, we considered migrating all of the code, including the UI and OMR Python application, onto the RPi to reduce latency when starting and stopping. However, our current toolchain requires a Windows device to run Audiveris, so we decided that the microseconds to milliseconds we would save by integrating everything on one device were not worth the added effort of setting up a new environment and OS on the RPi.

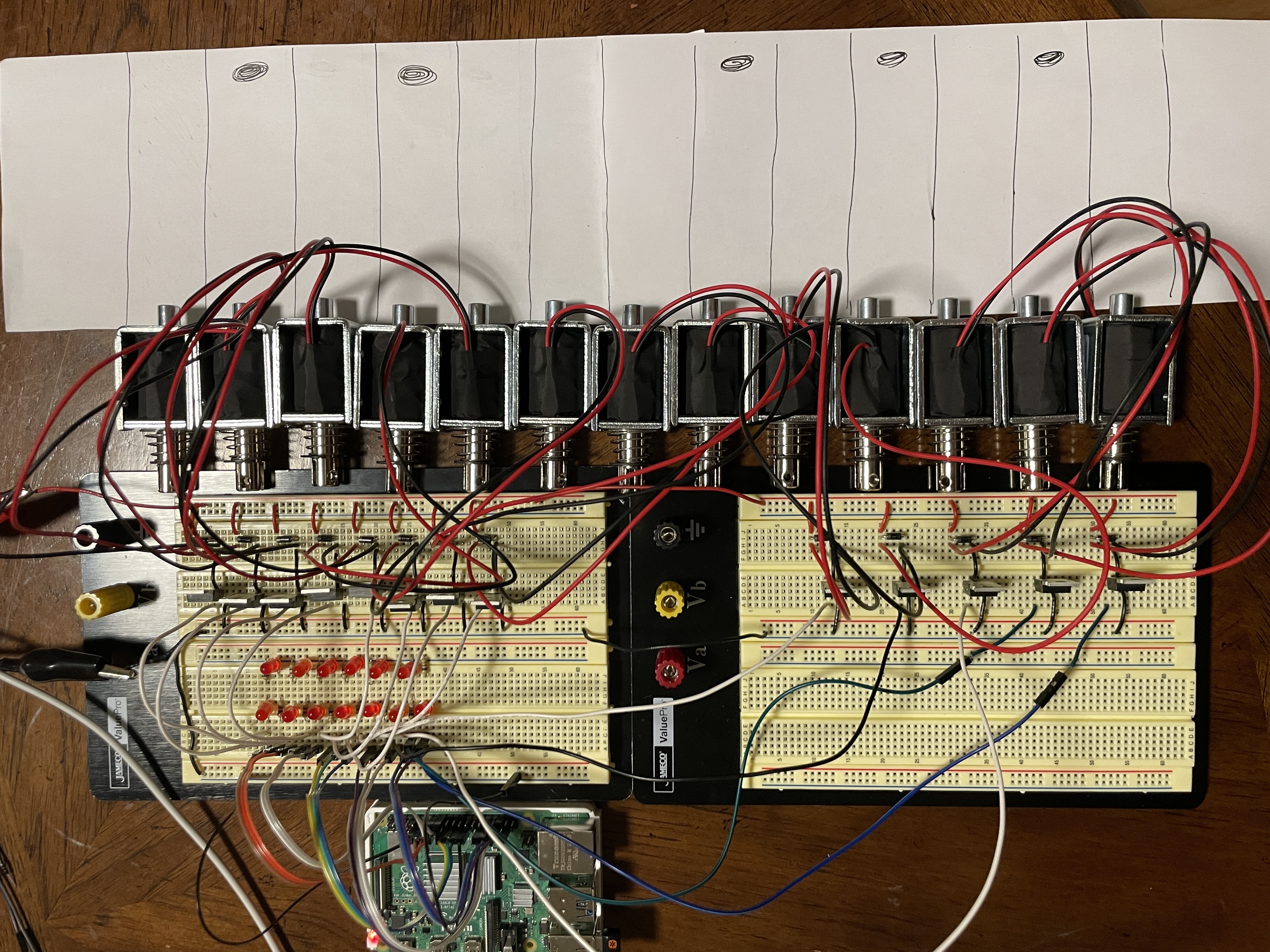

On Saturday, I worked with Aden on testing the exploratory solenoids and transistors that we bought. I wrote a simple Python file using a basic GPIO library to set pins high so that we could test the power switching. The output voltage from the RPi was above the necessary threshold voltage of the MOSFET, so the power switching was quite seamless.

One challenge we encountered when testing the solenoids was that our initial code, which used the sleep function from the time library, could only get the solenoids to depress and retract about four times a second (video linked here), which is below the target frequency of six times a second in our use-case requirements. The sleep function takes its argument in seconds, and although it accepts fractional values, the actual delay can run longer than requested, so one possibility for the bottleneck is the software timing. I will be working on installing and using the pigpio library so that we can have microsecond delays instead of relying on sleep. However, if the bottleneck ends up being the hardware itself, then we will need to rescope that requirement and change the code that limits the max tempo/smallest note value to account for this frequency cap.
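To make the timing budget concrete, here is a rough sketch of how the on/off times for the six-per-second target could be computed, and how much extra delay sleep adds on a given machine. The 50% duty cycle and the helper names (`on_off_times`, `measure_sleep_overshoot`) are my own assumptions for illustration, not something we have settled on.

```python
import time

TARGET_HZ = 6  # target actuations per second from our use-case requirements


def on_off_times(freq_hz, duty=0.5):
    """Return (on_seconds, off_seconds) for one depress/retract cycle.

    duty is the fraction of the cycle the solenoid is energized.
    The 0.5 default is an assumption, not a measured value.
    """
    period = 1.0 / freq_hz
    return period * duty, period * (1.0 - duty)


def measure_sleep_overshoot(delay_s, trials=20):
    """Return the worst observed extra time (in seconds) that
    time.sleep(delay_s) took beyond the requested delay."""
    worst = 0.0
    for _ in range(trials):
        start = time.perf_counter()
        time.sleep(delay_s)
        elapsed = time.perf_counter() - start
        worst = max(worst, elapsed - delay_s)
    return worst


on_s, off_s = on_off_times(TARGET_HZ)
print(f"on: {on_s * 1000:.1f} ms, off: {off_s * 1000:.1f} ms")
print(f"worst sleep overshoot: {measure_sleep_overshoot(on_s, trials=5) * 1000:.3f} ms")
```

If the measured overshoot turns out to be small compared to the roughly 83 ms half-cycle, that would suggest the limit is in the solenoid hardware rather than in sleep.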

One big change we addressed in our team status report was the switch to the music21 library for parsing rather than a custom parsing process. This resulted in me being behind schedule, but the new Gantt chart developed for our Design Review Presentation accounts for this change.

Next week I will be looking more into using music21 to extract the notes from the data and converting them into GPIO high/low signals. I was able to convert the test XML file that Rahul generated into a Stream object, as shown in the image below. However, as you can see, there are several nested streams before the list of notes is accessible, so I will need to work on un-nesting the object if I want to be able to iterate through the notes correctly.
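One possible way to un-nest the Stream is a minimal sketch like the one below, which relies on music21's `flatten()` to collapse the nested streams so that the offsets are measured from the start of the piece. The function names and the `(offset, pitch, duration)` tuple layout are placeholders I made up, and I have not run this against Rahul's file yet.

```python
def extract_note_events(score):
    """Return a list of (offset_beats, pitch_name, duration_beats) tuples.

    score is expected to be a music21 Stream (e.g. the result of
    converter.parse). Chords are split into one tuple per pitch.
    """
    events = []
    # flatten() removes the Part/Measure nesting; .notes skips rests
    for element in score.flatten().notes:
        for pitch in element.pitches:  # a Note has one pitch, a Chord has several
            events.append((
                float(element.offset),
                pitch.nameWithOctave,
                float(element.duration.quarterLength),
            ))
    return events


def events_from_file(path):
    """Parse a MusicXML file and return its note events."""
    # Imported here so extract_note_events can be used without music21 installed
    from music21 import converter
    return extract_note_events(converter.parse(path))
```

Calling `events_from_file` with the path to the test XML file should give a flat list that can be iterated in order of offset.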

As for the actual process for scheduling, I will try to explain the vision here. We can have a count that keeps track of the current “time” unit that we are at in the piece, where an increment of 1 is 1 beat. This corresponds nicely to the offsets from music21 (i.e. the values in the {curly braces}). We can also calculate the duration of each beat (in milliseconds) by calculating 60,000/tempo. So we will iterate through the notes and at a given time, if a note is being played, we’ll set the GPIO pin associated with it to high and we will set the rest of the pins to low (which is easily accomplished with batch setting from the pigpio library). Thus we will also need a mapping function that connects the note pitch to a specific GPIO pin.
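Here is a rough sketch of the scheduling idea described above, written in plain Python so it can be tried without the RPi. The pin numbers in `PITCH_TO_PIN`, the event tuple layout, and the function names are all hypothetical. Each schedule entry is a time in milliseconds plus a bitmask of the pins that should be high from that time until the next entry, which is the shape a batch set would need.

```python
# Hypothetical wiring: note name -> GPIO pin number (placeholder values)
PITCH_TO_PIN = {"C4": 17, "D4": 27, "E4": 22}


def beat_ms(tempo_bpm):
    """Duration of one beat in milliseconds (60,000 / tempo)."""
    return 60_000 / tempo_bpm


def build_schedule(events, tempo_bpm, pitch_to_pin):
    """Turn note events into a list of (time_ms, pin_mask) entries.

    events: iterable of (offset_beats, pitch_name, duration_beats).
    pin_mask has bit N set when GPIO pin N should be high.
    """
    events = list(events)
    ms_per_beat = beat_ms(tempo_bpm)
    # Every instant where some note starts or ends is a point where pins change
    change_points = sorted(
        {offset for offset, _, _ in events}
        | {offset + dur for offset, _, dur in events}
    )
    schedule = []
    for t in change_points:
        mask = 0
        for offset, pitch, dur in events:
            if offset <= t < offset + dur:  # note is sounding at time t
                mask |= 1 << pitch_to_pin[pitch]
        schedule.append((t * ms_per_beat, mask))
    return schedule


demo = [(0.0, "C4", 1.0), (1.0, "E4", 1.0)]
for time_ms, mask in build_schedule(demo, 120, PITCH_TO_PIN):
    print(f"{time_ms:7.1f} ms  mask={mask:#x}")
```

One limitation of this sketch is that a pitch repeated back to back produces the same mask twice, so the solenoid would stay down instead of re-striking. The real version will probably need to release each key slightly before the note's full duration ends.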

Overall, the classes I have taken that have helped me this week are 18-220 and 18-349. Knowledge of transistors and inductors, as well as experience with the lab power supplies that I gained from 18-220, helped me when working on the circuitry. Embedded systems skills from 18-349 were very helpful when looking at documentation and datasheets for the microcontroller and circuit components respectively.