Since there was a gap from the last post, I have completed a good amount of the work for my last two tasks in the updated Gantt chart. This includes finishing the communication protocols between subsystems and running some tests against the project requirements. In my last report, the missing piece of intercommunication was the ssh/scp step for relaying XML files from the user application to the notes scheduler RPi. This step now works with no issues, assuming both devices are powered on and connected to the campus internet. I had to do some research on how to specify a timeout parameter for the scp connection in PowerShell, and incorporated this into the design as well. Otherwise, the application would freeze for far too long while trying to connect over ssh. Additionally, setting up scp required both Nora and me to store the appropriate keys in the ssh_config to avoid manual password entry every time an XML file is sent.

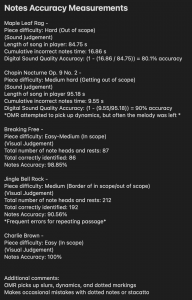
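To illustrate the timeout idea, here is a minimal sketch of an scp transfer with a bounded connection time. It is written in Python rather than the PowerShell used in the app, and the function names, file path, host string, and timeout values are all placeholders I made up for illustration. `ConnectTimeout` and `BatchMode` are standard OpenSSH options; `BatchMode=yes` makes scp fail instead of prompting for a password if the keys are not set up.

```python
import subprocess
from typing import List


def build_scp_command(local_path: str, remote: str, connect_timeout_s: int = 5) -> List[str]:
    """Build an scp command that gives up quickly if the host is unreachable."""
    return [
        "scp",
        "-o", f"ConnectTimeout={connect_timeout_s}",  # bound the connection attempt
        "-o", "BatchMode=yes",                        # never wait on a password prompt
        local_path,
        remote,
    ]


def send_file(local_path: str, remote: str,
              connect_timeout_s: int = 5, overall_timeout_s: int = 15) -> bool:
    """Return True if the copy succeeded, False on failure or timeout."""
    command = build_scp_command(local_path, remote, connect_timeout_s)
    try:
        result = subprocess.run(command, capture_output=True, text=True,
                                timeout=overall_timeout_s)
    except (subprocess.TimeoutExpired, FileNotFoundError):
        # Either the transfer hung past the overall limit or scp is not installed.
        return False
    return result.returncode == 0
```

A call such as `send_file("score.xml", "pi@scheduler:/home/pi/score.xml")` (hypothetical path and host) would then return `False` within a few seconds if the RPi is offline, so the GUI can report the failure instead of freezing.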

Regarding the testing, I am mostly done with the performance tests. I was able to make qualitative and quantitative measurements of the Audiveris accuracy at reading notes. For the quantitative measurements, I counted the actual number of note heads and rests in a piece, generated the XML from the sheet music, converted this XML back into sheet music, counted the number of correctly placed notes and rests, and took the ratio of correct markings to total markings. For the most part, the results meet the design requirement of 95% accuracy on average for pieces of basic to moderate difficulty. This is how the OMR testing results look:

Medium pieces had 4-note chords in one of the two piano staves, and Hard pieces tended to be more of a solo (this is supposed to be the “accompany”Bot). These pieces were tested only to find the limits of the OMR solution. I also tried taking a picture of a sheet of music with my phone in okay lighting, and the OMR seemed to have around 50% accuracy, but this too falls outside the inputs the accompanyBot is meant to receive. Also note that the first two pieces had too many notes for me to count manually, so I instead took the ratio of time during which the song sounded correct when played back through MIDI. Sometimes MIDI playback would expose singing parts generated in the XML where there should only be piano parts. This is not a problem for the solenoid device, as it ignores the type of part, but it is an issue for the sample audio, which Tom suggested I incorporate. I tried correcting the XML by finding and replacing all singing parts with piano, but as the XML file gets longer, this job takes significant time and slows down the app. Ultimately, the digital player is just a secondary feature and plays more of a debugging role for the user in case something goes wrong once the solenoids start playing.
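For reference, one way the singing-to-piano correction could be sketched is shown below. This is not the approach used in the app. It assumes the generated MusicXML declares each part's instrument in `score-part` entries (with `part-name`, `instrument-name`, and `midi-program` children), which I have not verified for every Audiveris output. The function name is hypothetical, and note that Python's `ElementTree` drops the XML declaration and DOCTYPE when serializing.

```python
import xml.etree.ElementTree as ET

# MusicXML midi-program values are 1-based; 1 is Acoustic Grand Piano.
PIANO_PROGRAM = "1"


def force_piano_parts(musicxml_text: str) -> str:
    """Relabel every declared part as piano and return the modified XML."""
    root = ET.fromstring(musicxml_text)
    # Only the score-part declarations are edited; the note data is left as-is.
    for score_part in root.iter("score-part"):
        part_name = score_part.find("part-name")
        if part_name is not None:
            part_name.text = "Piano"
        for instrument_name in score_part.iter("instrument-name"):
            instrument_name.text = "Piano"
        for program in score_part.iter("midi-program"):
            program.text = PIANO_PROGRAM
    return ET.tostring(root, encoding="unicode")
```

Under that assumption, the number of elements edited depends on the number of parts rather than the number of notes, although the whole file still has to be parsed once.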

Additionally, Nora and I ran a latency test from the application to the scheduler and back, sending play and pause commands while the solenoids were active. Across our tests, the full-cycle communication time never exceeded 72 ms, which meets our 100 ms limit.
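A round-trip measurement like the one above could be sketched as follows. The `send_and_wait` callable is a hypothetical stand-in for whatever sends a command to the scheduler and blocks until the acknowledgement comes back; only the 100 ms limit comes from our requirements.

```python
import time
from typing import Callable, Iterable, List

LATENCY_LIMIT_MS = 100.0  # project requirement for a full application-scheduler cycle


def measure_round_trips_ms(send_and_wait: Callable[[str], None],
                           commands: Iterable[str]) -> List[float]:
    """Time each command from send until its reply arrives, in milliseconds."""
    timings = []
    for command in commands:
        start = time.perf_counter()
        send_and_wait(command)  # blocks until the scheduler answers
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def meets_limit(timings_ms: List[float], limit_ms: float = LATENCY_LIMIT_MS) -> bool:
    """The requirement is on the worst case, so compare the maximum."""
    return max(timings_ms) <= limit_ms
```

Checking the maximum rather than the average matches how the requirement is stated: no single play or pause command should take longer than the limit.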

Regarding bug testing, I realized that there were too many print statements in my code for a finalized project, and that the serial port opened by the GUI was never closed at any point. I want to add features to the GUI that replace console logs with more user-friendly text or buttons, as well as a disconnect mechanism in the UI so the user can disconnect without unplugging the device. This is by no means a setback, as I have had plenty of time to work on the app and believe I can develop these features quickly over the final week of classes.
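The disconnect mechanism could look roughly like the sketch below. The class and its `open_port` factory are hypothetical names for illustration; the only thing assumed about the port object is that it has a `close()` method. If the GUI uses pyserial, the factory could be something like `lambda: serial.Serial("COM3", 115200)`, where the port name and baud rate are placeholders.

```python
class DeviceConnection:
    """Owns the serial port so the GUI has a single place to open and close it."""

    def __init__(self, open_port):
        self._open_port = open_port  # callable that returns an object with close()
        self._port = None

    @property
    def connected(self) -> bool:
        return self._port is not None

    def connect(self) -> None:
        # Opening twice would leak the first handle, so ignore repeat calls.
        if self._port is None:
            self._port = self._open_port()

    def disconnect(self) -> None:
        # Safe to call from a button handler and again on window close.
        if self._port is not None:
            try:
                self._port.close()
            finally:
                self._port = None
```

A "Disconnect" button would call `disconnect()`, and the same method could run when the window closes so the port is released even if the user never presses the button.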

Overall I believe my progress is on schedule. I’m looking forward to finishing my last tasks and operating the project on an actual piano.