Jonathan’s Status Post 04/30/2022

This week our group finished the last bits of testing on the whole system, and I spent time getting the web server to work. We also started working on the final presentation poster. We expanded our testing by increasing our footage count from 20 total clips to 36 total clips. We would have liked to test on more data, but it was surprisingly difficult to find. Traffic camera footage is easy to come by, but traffic camera footage of car accidents is rare because those events are much less common. Additionally, as we mentioned at the beginning of the project, many traffic cameras do not record video because they are not “smart” enough to detect crashes, which is a reason for the project to exist in the first place!

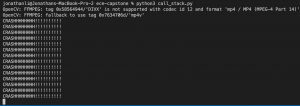

Additionally, I’ve worked on adding more “presentable” graphics for the final presentation and the TechSpark showcase. This included adding metrics to the bounding boxes so viewers can see what is happening, enlarging on-screen indicators such as the directional arrows, and adding other metrics in text form.

We are all very excited to showcase everything that we’ve done this past semester, and we are very grateful for Professor Kim’s guidance and for our entrance into the TechSpark showcase!

Goran Goran’s Status Report – 4/30/22

This week our group finished the last bits of testing on our whole system and made sure our web server is working. We have also started on our final presentation poster. I have spent my time specifically testing our rerouting implementation on different routes with more cars, and using the results to create more graphs for our final implementation. I have also spent quite a bit of time refining the poster so we are ready for our poster session. Super excited to showcase everything we have accomplished!

Arvind Status Report 04/23

This week I have worked on finalizing and fine-tuning the crash detection algorithm. The crash detection module works by deciding how similar the headings of two approaching cars are, as well as how quickly their speed is changing (acceleration). Once the bounding boxes for two cars intersect, we say a crash is likely if the cars are travelling in perpendicular directions (so their paths cross) and if there is a rapid change in speed. Different parameters lead to different success rates in different situations. For example, if we prioritize the angle too much, then we will not detect crashes well when the cars are running parallel, such as fender benders.
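
As a rough illustration of how the angle and acceleration terms can trade off, here is a minimal sketch. It is not our actual module: the function names, the weights, the `accel_scale` normalizer, and the 0.5 threshold are all hypothetical values chosen for the example, and capping the angle term at 90 degrees is an assumption.

```python
import math

def boxes_intersect(a, b):
    """True if two (x_min, y_min, x_max, y_max) boxes overlap."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]

def heading_angle_deg(v1, v2):
    """Angle in degrees between two 2D velocity vectors (0 means parallel)."""
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(v1[0], v1[1]) * math.hypot(v2[0], v2[1])
    if norm == 0:
        return 0.0  # a stationary car has no heading, so no angle evidence
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def crash_score(vel_a, vel_b, accel_a, accel_b,
                angle_weight=0.5, accel_weight=0.5, accel_scale=10.0):
    """Weighted mix of heading difference and speed change, in [0, 1]."""
    # Assumption: anything at or beyond perpendicular counts as maximal.
    angle_score = min(heading_angle_deg(vel_a, vel_b), 90.0) / 90.0
    # Assumption: accel_scale is the speed change that counts as "rapid".
    accel_score = min(max(abs(accel_a), abs(accel_b)) / accel_scale, 1.0)
    return angle_weight * angle_score + accel_weight * accel_score

def crash_likely(box_a, box_b, vel_a, vel_b, accel_a, accel_b,
                 threshold=0.5, **weights):
    """Only score a pair once its bounding boxes intersect."""
    if not boxes_intersect(box_a, box_b):
        return False
    return crash_score(vel_a, vel_b, accel_a, accel_b, **weights) >= threshold
```

With the default weights, two parallel cars with a moderate speed change score below the threshold, while shifting weight from the angle term to the acceleration term (for example `angle_weight=0.2, accel_weight=0.8`) lets the same fender bender through. That is the trade-off described above.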

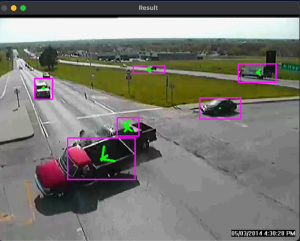

Firstly, in our testing with 20 videos of crashes, we detect the basic head-on intersection crash with a 100% success rate. An example of this kind of crash is shown in the accompanying image.

However, we are seeing variable success rates with other types of crashes. For example, one of the videos shows a rear-end crash in which the cars are not rapidly decelerating: they are slowly coming to a stop at a red light, but one of the cars goes too far and hits the car in front of it. Both cars are travelling in parallel directions, and there is no abnormal change in direction or speed. One potential fix is to add a test for abnormal movement (or lack thereof). After a crash, cars don’t move, so if all the other cars we are tracking are moving while these two cars stay still for an abnormally long period of time, that could indicate a crash. Overall, however, we are very happy with the 100% success rate on the typical case, and we can continue to refine parameters and develop other strategies for the edge cases.
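
The stillness idea could be sketched roughly as follows. This is only a possible shape for the check, not something we have implemented: the track format (a list of per-frame center points), the 90-frame window, and the pixel thresholds are assumptions made for the example.

```python
import math

def net_displacement(track, window):
    """Distance between a track's position `window` frames ago and now.

    `track` is a list of (x, y) center points, one per frame.
    Returns None when there is not enough history to judge.
    """
    if len(track) < window:
        return None
    (x0, y0), (x1, y1) = track[-window], track[-1]
    return math.hypot(x1 - x0, y1 - y0)

def abnormal_stop(pair, others, window=90, still_px=2.0, moving_px=15.0):
    """True if both cars in `pair` are still while every other car moves."""
    for track in pair:
        moved = net_displacement(track, window)
        if moved is None or moved > still_px:
            return False  # at least one car of the pair is still driving
    other_moves = [net_displacement(t, window) for t in others]
    other_moves = [m for m in other_moves if m is not None]
    # Require evidence that traffic is flowing. If other cars are also
    # stopped (or there are none), this may simply be a red light.
    return bool(other_moves) and all(m >= moving_px for m in other_moves)
```

Requiring every other tracked car to be moving is what separates a crash from a normal queue at a light, at the cost of missing crashes that happen while traffic is stopped.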

Finally, I am working on making slides and making sure we are good to go for our presentation next week. (I am not presenting, so I am working on the slides and with the group member who is presenting to make sure he is knowledgeable about the work I’ve done.)

I think we are on track in terms of schedule. By next week I hope to have a completely finalized crash detection algorithm, and I can report how successful it is at tracking different types of crashes with more video data (currently testing on 20 clips, want to test on more). We would also like to finalize what we are showing for the demo.

Jonathan’s Status Report 04/23

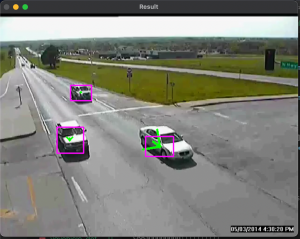

This week I worked with Arvind on further optimizing our crash detection and bounding box algorithms. We followed Professor Kim’s advice to focus on the crash detection part of the project. The first thing we did was add live drawings overlaid on the vehicles to get a better understanding of how our system was interpreting the environment. We added bounding boxes (not shown in the image), tracking objects (the purple/pink boxes in the image), and vector/center points (the green arrows in the image) to all of the cars we picked up.

After doing so we were able to fix some minor bugs relating to how we track objects. At one point we realized we had a small bug in which we swapped the x and y values in the tracking part of our algorithm. The overlays also helped us notice that certain non-car objects were being tracked, which led to false positives in our crash detection.

I remedied this by recalibrating how we implement background detection. Essentially, we train a simple KNN algorithm that reads in frames to build a background mask. In some videos, cars would be stopped for a very long time and the algorithm would end up treating the car as part of the background. When the car finally moved, the road itself would be tracked as an object. To remedy this, we used more frames for the background mask and tried to use frames with no moving objects.
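
To show why a long-stopped car gets absorbed into the background and why a longer history helps, here is a toy sketch. Note that it swaps in a per-pixel median model as a stand-in for the KNN background model we actually use, and that the one-row "frames", intensity values, and threshold are made up for the example.

```python
from statistics import median

def build_background(frames):
    """Per-pixel median over a list of equally sized grayscale rows."""
    return [median(values) for values in zip(*frames)]

def foreground_mask(frame, background, threshold=30):
    """Mark pixels that differ from the background by more than threshold."""
    return [abs(p - b) > threshold for p, b in zip(frame, background)]

ROAD, CAR = 50, 200                      # hypothetical intensities
empty = [ROAD] * 5                       # a row of road with no car
parked = [ROAD, ROAD, CAR, ROAD, ROAD]   # a car waiting at pixel 2

# Short history: the stopped car is present in most of the frames.
short_history = [parked] * 6 + [empty] * 2
# Longer history: the same stop is now a minority of the frames.
long_history = [parked] * 6 + [empty] * 14

bg_short = build_background(short_history)
bg_long = build_background(long_history)

# With the short history the car has become "background", so once it
# drives away the bare road at pixel 2 is flagged as a moving object.
road_flagged = foreground_mask(empty, bg_short)
# With the longer history the empty road is clean and the car is detected.
road_clean = foreground_mask(empty, bg_long)
car_detected = foreground_mask(parked, bg_long)
```

The same reasoning is why seeding the model with frames that contain no moving objects helps: the background estimate starts out as the road itself.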

Finally, we worked on further refining the crash detection modules. Here is a set of screenshots from our example video that we have been using in our status posts.

Goran Goran’s Status Report – 4/23/22

This week our group was again putting the finishing touches on our complete project, but we also have the final presentation coming up next week, so a significant portion of our time has been dedicated to that. On the finishing-touches side, I have finished writing a script for our operator’s view of dozens of cars being rerouted at the same time. I have ported the information for all of the possible routes for the cars and run it through a few simulations: a case where routes are picked entirely randomly, a case where the first route is always picked, and a “best” version where the route with the least overlap is picked. Now I am deciding on the best way to present this data, whether graphically or otherwise. I am also in charge of giving next week’s presentation, so I have been making sure that I have an intimate understanding of all aspects of our project, including the portions I was not directly responsible for. We are now starting to make our PowerPoint and should have it done by Sunday night. Everything seems to be on track, and I am excited to present everything we’ve accomplished so far!
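
The three simulation cases can be sketched as interchangeable strategies. This is a simplified illustration rather than the actual script: routes are assumed to be lists of street names, "overlap" is assumed to mean how many earlier cars already use a route's streets, and the street names in the usage note are invented.

```python
import random
from collections import Counter

def pick_random(candidates, usage, rng):
    """Case 1: pick any candidate route at random."""
    return rng.choice(candidates)

def pick_first(candidates, usage, rng):
    """Case 2: always take the first route offered."""
    return candidates[0]

def pick_least_overlap(candidates, usage, rng):
    """Case 3 ("best"): take the route sharing the fewest streets
    with routes already assigned to earlier cars."""
    return min(candidates, key=lambda route: sum(usage[s] for s in route))

def assign_routes(cars, strategy, seed=0):
    """Assign one route per car and count how many cars use each street.

    `cars` maps a car id to its list of candidate routes.
    """
    rng = random.Random(seed)
    usage = Counter()
    assignment = {}
    for car, candidates in cars.items():
        route = strategy(candidates, usage, rng)
        assignment[car] = route
        usage.update(route)
    return assignment, usage
```

For example, with three cars that can each take `["Main", "Oak"]` or `["Elm", "Pine"]`, `pick_first` puts all three cars on Main and Oak, while `pick_least_overlap` spreads them so that no street carries more than two cars.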

Jonathan’s Status Report 04/16

This week I worked with Arvind on expanding on input given to us during our interim demo and our professor meeting the week after. Arvind and I increased our pool of crash videos together and ended up with ~20 video clips of unique crash situations that we tested our crash detection algorithm on. We were able to test all of these video clips with the algorithm and the results were mostly accurate. Some of the issues we noticed (as stated in previous weeks) were A) large bounding boxes due to shadows, B) multiple vehicles being bounded by one box, C) poor / irregular bounding due to contour detections.

Professor Kim gave us the idea to focus on using more “non detailed” methods and focus more on the crash detection part of the project rather than the vehicle detection part of the project. We are almost at a point where we can exercise these ideas. This week we spent lots of time removing “erratic” behavior from the vehicle bounding boxes to support a larger focus on crash detection. This was achieved by using a center point to track vehicles.

We used the numpy library, which can take an image mask (the vehicle contours create an image mask of the vehicle) and calculate the average midpoint of the mask. With this image mask, we narrowed our focus to reducing noise and erratic behavior in the center points of vehicles. Primarily, we further tested and fully implemented last week’s shadow detection algorithm on other vehicles. With better center tracing, the crash detection suite can focus more on location tracking etc., since the data is more dependable.
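
As a small illustration of the midpoint calculation, the sketch below computes the average position of all non-zero mask pixels. It is written in plain Python so it runs without dependencies; with numpy, `np.argwhere(mask).mean(axis=0)` gives the same (row, column) pair for a non-empty mask. The tiny masks are invented for the example.

```python
def mask_centroid(mask):
    """Return the mean (row, col) of all non-zero pixels in a 2D mask,
    or None if the mask is empty."""
    row_sum = col_sum = count = 0
    for r, line in enumerate(mask):
        for c, value in enumerate(line):
            if value:
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None
    return row_sum / count, col_sum / count

# A 2x2 vehicle blob.
vehicle = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

# The same blob with one extra "shadow" pixel on the right edge.
with_shadow = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]

center = mask_centroid(vehicle)          # (1.5, 1.5)
shifted = mask_centroid(with_shadow)     # (1.6, 1.8)
```

The shadow pixel pushes the right edge of the bounding box out by a full pixel, but moves the center column by only 0.3, which is one reason a center point is a steadier thing to track than the box itself.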

As of now, the current algorithm is good enough for an MVP. We are always looking to improve on the current algorithms and design, which is why we are exploring these newer techniques.

Goran Goran’s Status Report – 4/16/22

This week our group was mainly working on testing and refining our individual components as the deadline fast approaches. For rerouting, that means I have spent this week trying to create an operator’s view of the entire mapped area, showing how multiple cars are being rerouted. For now, our goal is to track approximately 20 cars and their routes with respect to each other, and to show that their updated routing improves their overall experience. In that regard I’ve run into several limitations of the API software: since it limits the number of requests I can make, my hope of creating a constantly updating map showing the routes seems to be a pipe dream. What I have been working on, and continuously tweaking, is an Excel document showing each of the routes taken by the cars. I plan on cleaning up this data and creating visuals in the form of graphs showing changes in travel time and which streets are made more traffic-heavy by the rerouting algorithm. So far everything seems to be on track to make that happen by our Monday meeting.

Team Status Report 4/16

For this week’s status report, we are mainly working on testing and refining our algorithm on the object detection / crash detection side of things. The main problem we are working on is that we are getting a good number of false positives due to larger bounding boxes from two sources: shadows and larger vehicles such as trucks. To address this, we are working on using only the center of the boxes to track the vehicles, along with some kind of area thresholding.

With regard to the rerouting, we are working on better simulating traffic. We are creating a simulation script in which we put in 20 cars with prescribed routes and then compare how long they take to reach their destinations before and after rerouting, assuming a crash is an obstacle on their path.
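
A before/after comparison of this kind could look roughly like the sketch below. It is a toy model and not our simulation script: the road graph, the travel times in minutes, and the fixed 20-minute crash delay are invented, and rerouting is approximated with Dijkstra's shortest-path algorithm over the graph with the crashed segment blocked.

```python
import heapq

def route_time(graph, route, crash_edge=None, crash_delay=0.0):
    """Travel time along a prescribed route, adding a delay if the
    route passes through the crashed road segment."""
    total = 0.0
    for a, b in zip(route, route[1:]):
        total += graph[a][b]
        if (a, b) == crash_edge:
            total += crash_delay
    return total

def shortest_time(graph, start, goal, blocked=frozenset()):
    """Dijkstra over {node: {neighbor: minutes}}, skipping blocked edges.
    Returns None if the goal cannot be reached."""
    best = {start: 0.0}
    queue = [(0.0, start)]
    while queue:
        time, node = heapq.heappop(queue)
        if node == goal:
            return time
        if time > best.get(node, float("inf")):
            continue  # stale queue entry
        for nxt, minutes in graph.get(node, {}).items():
            if (node, nxt) in blocked:
                continue
            candidate = time + minutes
            if candidate < best.get(nxt, float("inf")):
                best[nxt] = candidate
                heapq.heappush(queue, (candidate, nxt))
    return None

# Hypothetical road network: travel times in minutes.
graph = {
    "A": {"B": 5.0, "C": 7.0},
    "B": {"D": 5.0},
    "C": {"D": 6.0},
    "D": {},
}
crash = ("B", "D")

before = route_time(graph, ["A", "B", "D"], crash_edge=crash, crash_delay=20.0)
after = shortest_time(graph, "A", "D", blocked={crash})
saved = before - after
```

Here the car that stays on its prescribed route takes 30 minutes, the rerouted car takes 13, and repeating the comparison per car would give the per-route travel time changes to graph.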

Arvind Status Report 04/16

This week I continued to work on the advice given to us at our demo. The main thing I worked on was expanding the testing of our algorithm to more videos. Before, we had 3 video clips that we tested our algorithm on. Now we have a set of 20 video clips of crashes that we have collected and tested our crash detection software on. They all work reasonably well: the crash is detected in every clip. However, there are false positives caused by oversized bounding boxes in certain video clips. For example, shadows and larger vehicles such as trucks lead to larger bounding boxes for the tracked objects, so they easily hit other bounding boxes and trigger a false positive incident. To improve accuracy, we are working on switching the model to consider only the central point and to include some kind of area threshold when determining intersections and crashes. We think the algorithm we have presently works well enough for an MVP, but we would like to improve upon our current design based on our test results on the video clips with shadows and larger vehicles.
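
One possible reading of the center point plus area threshold idea is sketched below. We have not settled on the details, so the box format, the distance limit, and the area limit are all placeholder assumptions.

```python
import math

def box_center(box):
    """Center of an (x_min, y_min, x_max, y_max) box."""
    return (box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0

def box_area(box):
    """Area of an (x_min, y_min, x_max, y_max) box in square pixels."""
    return (box[2] - box[0]) * (box[3] - box[1])

def possible_collision(box_a, box_b, max_center_dist=40.0, max_area=20000.0):
    """Flag a candidate collision from center points instead of box overlap.

    Boxes larger than `max_area` are skipped on the assumption that they
    were inflated by a shadow or merged from several vehicles.
    """
    if box_area(box_a) > max_area or box_area(box_b) > max_area:
        return False
    (ax, ay), (bx, by) = box_center(box_a), box_center(box_b)
    return math.hypot(bx - ax, by - ay) <= max_center_dist
```

With these placeholder values, a box stretched by a shadow can still overlap a neighbor without raising a flag, because its center stays far from the neighbor's center. The cost is that a real crash involving a very large vehicle would be skipped unless the area limit is tuned per camera.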