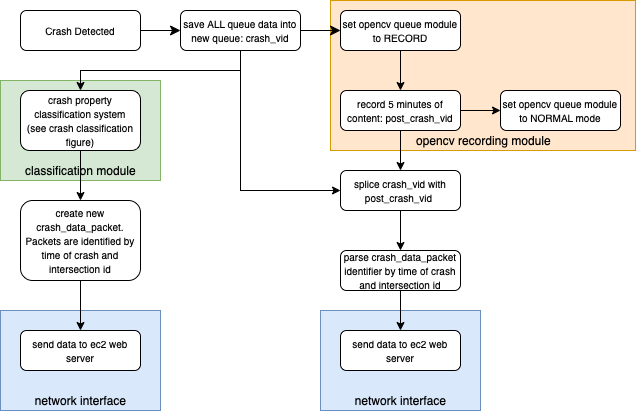

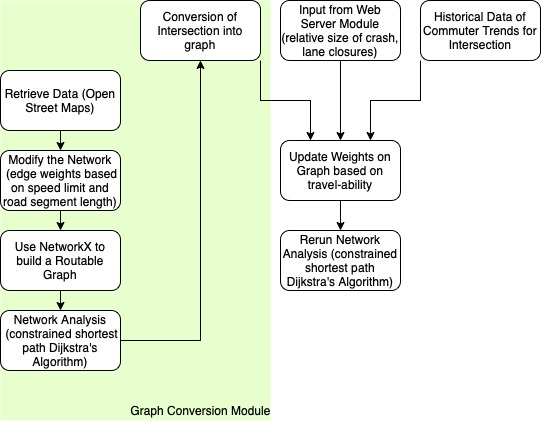

This week our team spent most of its time detailing the specifics of our project: constraints, requirements, behaviors, algorithms for implementation, and so on. We documented these details and created a set of block diagrams showing how the parts of the project (modules) interact with one another, which makes the requirements and dependencies clear. Shown below are the four main modules.

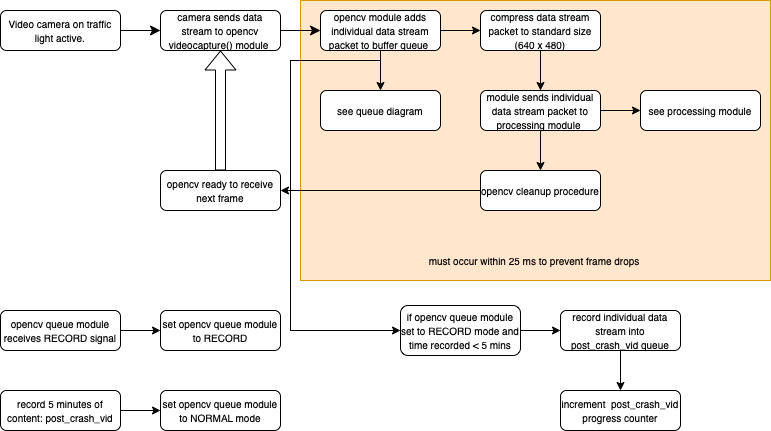

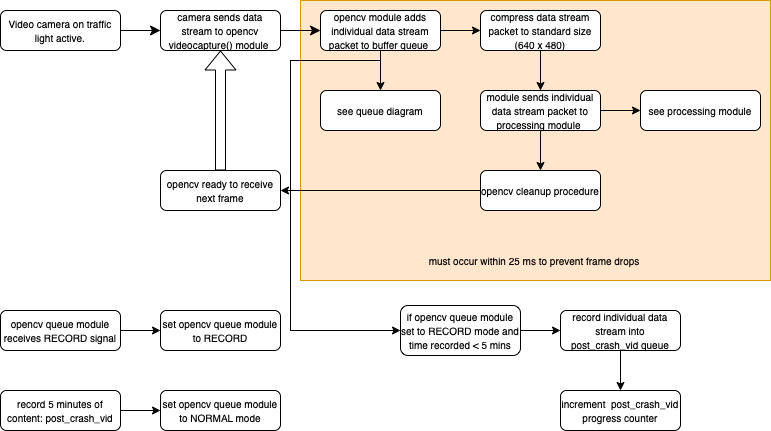

Video Capturing Module

- Notable Implementation Updates: Most of the “normal” operations are working as of this week. The remaining focus is on handling the RECORD signal.

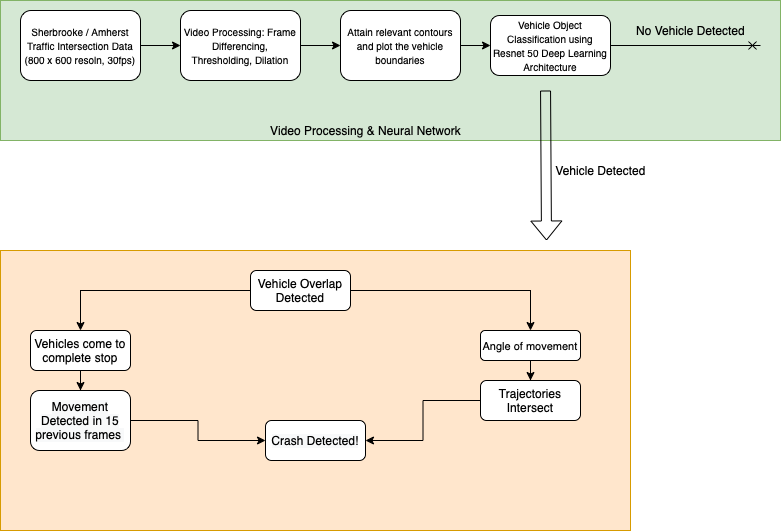

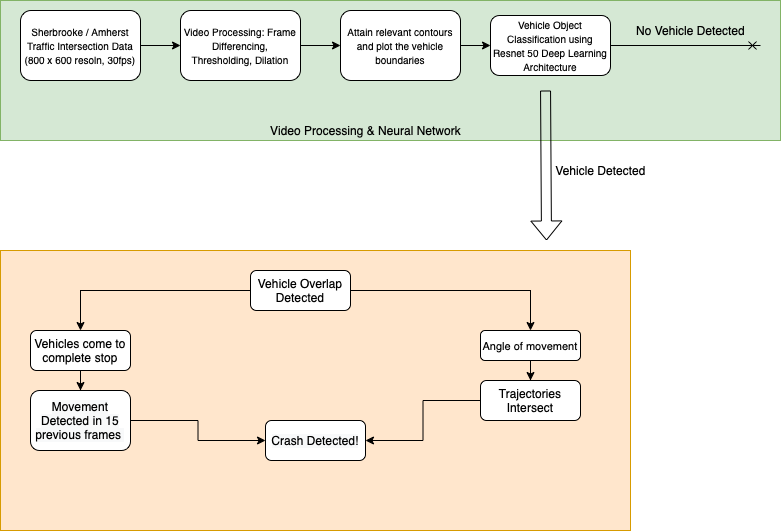

Crash Detection / Classification Module

- Notable Implementation Updates: A proof of concept for moving object detection was demonstrated this week. We have also explored deep learning architectures for object classification (car classification) and have narrowed the choice to either ResNet or MobileNet.
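The post does not say which technique the moving object detection proof of concept uses. As a rough illustration only, here is a minimal frame-differencing sketch, assuming grayscale frames stored as 2D lists of 0 to 255 intensities. The function name `detect_motion` and its `threshold` and `min_pixels` parameters are hypothetical, not part of our codebase.

```python
from typing import List, Optional, Tuple

# Hypothetical frame representation: a grayscale image as rows of 0-255 ints.
Frame = List[List[int]]


def detect_motion(prev: Frame, curr: Frame, threshold: int = 25,
                  min_pixels: int = 1) -> Optional[Tuple[int, int, int, int]]:
    """Frame differencing: flag pixels whose intensity changed by more than
    `threshold` between two frames and return their bounding box as
    (top, left, bottom, right), or None if fewer than `min_pixels` changed."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    rows: List[int] = []
    cols: List[int] = []
    for r, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(row_prev, row_curr)):
            # A large absolute change suggests something moved at this pixel.
            if abs(q - p) > threshold:
                rows.append(r)
                cols.append(c)
    if len(rows) < min_pixels:
        return None
    return (min(rows), min(cols), max(rows), max(cols))


if __name__ == "__main__":
    background = [[0] * 5 for _ in range(4)]
    moved = [row[:] for row in background]
    moved[1][2] = 200  # simulate an object appearing in two pixels
    moved[2][3] = 200
    print(detect_motion(background, moved))  # (1, 2, 2, 3)
```

Plain frame differencing is sensitive to camera motion and lighting changes; a library such as OpenCV provides more robust background subtractors (for example `cv2.createBackgroundSubtractorMOG2`), and the bounding box found here would be the crop handed to the car classifier.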

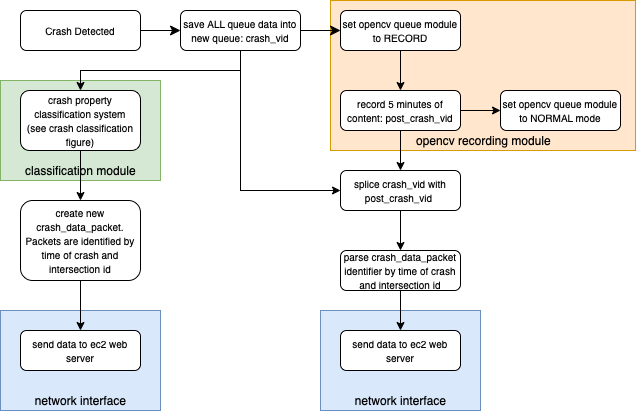

Post Crash Detection Module

- Notable Implementation Updates: None yet. Our effort has gone into the other modules; so far, work on this part of the project has been limited to defining its constraints, requirements, and behaviors.

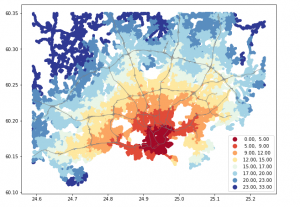

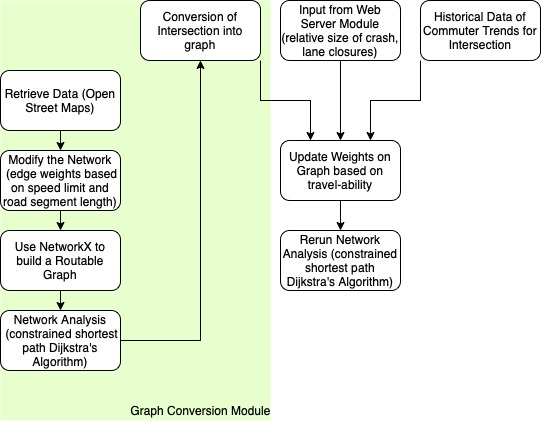

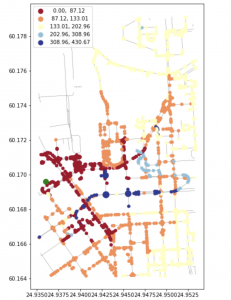

Rerouting Module

- Notable Implementation Updates: The rerouting algorithms have been worked out, and a proof of concept has been demonstrated.
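The post does not name the rerouting algorithm. A minimal sketch of one common approach follows, assuming the road network is a weighted directed graph and that a crash closes specific road segments: run Dijkstra's shortest-path algorithm while skipping the blocked edges. The names `shortest_route`, `roads`, and `blocked` are hypothetical illustrations, not our implementation.

```python
import heapq
from typing import AbstractSet, Dict, List, Optional, Tuple

# Hypothetical road network: node -> {neighbor: travel cost}. Edges are
# directed, so a two-way road needs an entry in each direction.
Graph = Dict[str, Dict[str, float]]


def shortest_route(graph: Graph, start: str, goal: str,
                   blocked: AbstractSet[Tuple[str, str]] = frozenset()
                   ) -> Optional[Tuple[List[str], float]]:
    """Dijkstra's algorithm that ignores edges listed in `blocked`
    (for example, road segments closed by a crash). Returns (path, cost),
    or None if the goal is unreachable."""
    dist: Dict[str, float] = {start: 0.0}
    prev: Dict[str, str] = {}
    heap: List[Tuple[float, str]] = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry; a shorter distance was already settled
        visited.add(node)
        if node == goal:
            break
        for nbr, weight in graph.get(node, {}).items():
            if (node, nbr) in blocked:
                continue  # closed segment: route around it
            nd = d + weight
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if goal not in visited:
        return None
    # Walk predecessor links back from the goal to rebuild the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path, dist[goal]


if __name__ == "__main__":
    roads = {"A": {"B": 1, "C": 4}, "B": {"C": 1, "D": 5}, "C": {"D": 1}, "D": {}}
    print(shortest_route(roads, "A", "D"))                       # (['A', 'B', 'C', 'D'], 3.0)
    print(shortest_route(roads, "A", "D", blocked={("B", "C")}))  # (['A', 'C', 'D'], 5.0)
```

Treating a crash as a set of removed edges keeps the search itself unchanged; the crash detection output would only need to be mapped to the affected segments.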

—————————————————————-

This week has been quite productive so far. We are still in the phase where each module can be developed separately, so there is little “integration” to do at this point in the project.