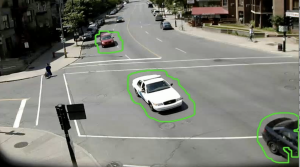

This week I helped Jon with his new algorithm to isolate moving objects in a frame. The algorithm first smooths the image with a Gaussian blur kernel and then subtracts a background frame to isolate the objects we want to track.
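As a rough illustration of the blur-then-subtract step, here is a dependency-free Python sketch that works on small grayscale images stored as lists of rows. The function names, kernel size, sigma, and threshold are hypothetical values chosen for illustration, not the ones Jon's algorithm uses. With OpenCV, the same steps would normally be done with `cv2.GaussianBlur`, `cv2.absdiff`, and `cv2.threshold`.

```python
import math


def gaussian_kernel(size, sigma):
    """Build a normalized 2D Gaussian kernel (size must be odd)."""
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def blur(image, kernel):
    """Convolve a grayscale image with the kernel, clamping at the borders."""
    h, w = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for kr in range(-half, half + 1):
                for kc in range(-half, half + 1):
                    # Clamp coordinates so edge pixels reuse the nearest valid pixel.
                    rr = min(max(r + kr, 0), h - 1)
                    cc = min(max(c + kc, 0), w - 1)
                    acc += image[rr][cc] * kernel[kr + half][kc + half]
            out[r][c] = acc
    return out


def foreground_mask(frame, background, threshold=25, size=5, sigma=1.0):
    """Blur both frames, take the absolute difference, and threshold it.

    Returns a mask with 1 for "moving object" pixels and 0 for background.
    The threshold, size, and sigma defaults are assumptions, not tuned values.
    """
    k = gaussian_kernel(size, sigma)
    f = blur(frame, k)
    b = blur(background, k)
    return [[1 if abs(fv - bv) > threshold else 0 for fv, bv in zip(fr, br)]
            for fr, br in zip(f, b)]


# Example: a flat background with a bright 3x3 "object" in the current frame.
background = [[50] * 10 for _ in range(10)]
frame = [row[:] for row in background]
for r in range(4, 7):
    for c in range(4, 7):
        frame[r][c] = 200
mask = foreground_mask(frame, background)
```

Blurring both frames before subtracting suppresses pixel-level noise, so small flickers are less likely to survive the threshold and the remaining blobs produce cleaner contours.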

We are now creating better contours and can apply the tracking algorithms from last week more reliably. From these contours we create bounding boxes that correctly track the moving object blobs. When two bounding boxes get too close together, we detect a crash. One thing we are working on is keeping track of direction. For example, if two boxes are too close together or intersecting, so that a crash would be flagged, but they are traveling in parallel, it should not count as a crash: they are probably two cars in parallel lanes that only look close together because of the camera's perspective.

The image below shows an example of two stacked vehicles that show up as a single contour. Shadows are another issue, and they are also visible in the image.
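The direction check could look something like the sketch below. It assumes each tracked box carries a velocity estimated from its positions in previous frames; the `Track` class, the 5-pixel margin, and the 15-degree angle tolerance are hypothetical choices for illustration. Two boxes count as a crash only if they are close and their headings differ by more than the tolerance.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """A tracked bounding box (hypothetical structure for this sketch)."""
    x: float   # top-left corner, pixels
    y: float
    w: float   # width and height, pixels
    h: float
    vx: float  # velocity estimated from previous frames, pixels/frame
    vy: float


def boxes_close(a, b, margin=0.0):
    """True if the boxes overlap once each is expanded by `margin` pixels."""
    return (a.x - margin < b.x + b.w + margin and
            b.x - margin < a.x + a.w + margin and
            a.y - margin < b.y + b.h + margin and
            b.y - margin < a.y + a.h + margin)


def moving_in_parallel(a, b, max_angle_deg=15.0):
    """True if both tracks head in roughly the same direction."""
    na = math.hypot(a.vx, a.vy)
    nb = math.hypot(b.vx, b.vy)
    if na == 0 or nb == 0:
        return False  # a stopped vehicle has no heading to compare
    cos_angle = (a.vx * b.vx + a.vy * b.vy) / (na * nb)
    return cos_angle >= math.cos(math.radians(max_angle_deg))


def is_crash(a, b, margin=5.0):
    """Flag a crash only for close boxes that are not traveling in parallel."""
    return boxes_close(a, b, margin) and not moving_in_parallel(a, b)


# Two cars side by side, same heading: close, but treated as parallel lanes.
left_lane = Track(0, 0, 10, 10, 1, 0)
right_lane = Track(12, 0, 10, 10, 1, 0)
# A car crossing at a right angle right next to the first one.
crossing = Track(12, 0, 10, 10, 0, 1)
# A car far away.
far_away = Track(100, 100, 10, 10, 0, 1)
```

Only same-direction motion is treated as parallel here, so two oncoming cars that get close are still flagged. A rear-end collision, however, involves two boxes heading the same way, so this check alone would not flag it; comparing speeds as well might help.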