Earlier this week, I presented our design review, which focused on the implementation and testing details that will help us meet the metrics and requirements for a successful project.

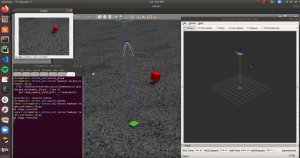

On the implementation side, I focused on connecting the simulator to the drone’s flight controller API. I was able to send motion commands to the simulated drone and watch the results. This will be useful because any code tested in this simulation can run on the physical drone without modification; the only change is the communication port.
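One way to keep the "only the port changes" guarantee is to isolate the link settings in a single place that the rest of the control code reads from. The sketch below assumes a MAVLink-style flight controller, and every value in it is a hypothetical placeholder rather than our actual setup: `udp:127.0.0.1:14550` stands in for the SITL endpoint, and `/dev/ttyACM0` at 57600 baud stands in for the serial link to the real drone. The names `LinkConfig` and `link_config` are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical port settings; substitute the values from the real setup.
SITL_ADDRESS = "udp:127.0.0.1:14550"   # assumed SITL endpoint
HARDWARE_ADDRESS = "/dev/ttyACM0"      # assumed serial device on the physical drone
HARDWARE_BAUD = 57600                  # assumed serial baud rate


@dataclass(frozen=True)
class LinkConfig:
    """Everything that differs between simulation and the physical drone."""
    address: str
    baud: Optional[int] = None  # only meaningful for serial links


def link_config(use_sim: bool) -> LinkConfig:
    """Return the communication settings for SITL or for the real flight controller.

    Control code that depends only on this object can send the same
    motion commands unchanged in both environments.
    """
    if use_sim:
        return LinkConfig(address=SITL_ADDRESS)
    return LinkConfig(address=HARDWARE_ADDRESS, baud=HARDWARE_BAUD)
```

The resulting object would then be handed to whichever connection call the flight controller API provides; that call is deliberately left out here rather than guessed.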

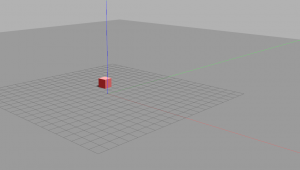

Unfortunately, our original simulator of choice (AirSim) proved incompatible with the flight controller API. I could get the simulator and the controller’s Software-in-the-Loop (SITL) framework to communicate about half the time; the rest of the time, the simulator crashed for no apparent reason. After searching extensively through online forums, it became clear that this was an open AirSim bug with no fix yet available, so rather than fight it, I switched to a more stable simulator, Gazebo. The image above shows a colored cube that we will treat as the simulated human target to test the integration of motion planning and target tracking.

Next week, our priority is to begin integration and get the drone in the air with target tracking. I will focus on establishing a well-tested procedure for arming the drone and having it take off to a height of 20 feet, and on implementing a very basic motion plan that simply attempts to follow the target’s 2D direction.
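A basic "follow the 2D direction" plan can be sketched as a small function that turns the target’s horizontal offset into a velocity command. This is a minimal sketch under stated assumptions: it assumes the tracker reports the target’s position relative to the drone in meters in a horizontal frame (for example north and east), and the speed cap and deadband are hypothetical tuning values. It also converts the 20 ft takeoff height to meters, since MAVLink commands express altitude in meters.

```python
import math
from typing import Tuple

FEET_TO_METERS = 0.3048
TAKEOFF_ALTITUDE_M = 20 * FEET_TO_METERS  # 20 ft is about 6.1 m

# Hypothetical tuning values, not measured on our drone.
MAX_SPEED_MPS = 2.0  # fixed horizontal speed while following
DEADBAND_M = 0.5     # ignore offsets smaller than this to avoid jitter


def follow_velocity(dx: float, dy: float) -> Tuple[float, float]:
    """Map the target's horizontal offset (meters, drone-relative) to a velocity.

    The drone moves along the 2D direction of the target at a fixed speed,
    and hovers (zero velocity) when the target is within the deadband.
    """
    distance = math.hypot(dx, dy)
    if distance < DEADBAND_M:
        return (0.0, 0.0)
    scale = MAX_SPEED_MPS / distance  # normalize the offset, then scale to speed
    return (dx * scale, dy * scale)
```

For example, a target 3 m north and 4 m east yields a command of roughly (1.2, 1.6) m/s, which points straight at the target at the capped speed. A fixed-speed, direction-only rule matches the "very basic" goal; slowing down as the target gets closer would be a natural refinement.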