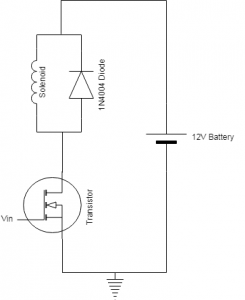

I ran into servo problems this week. I started by trying to get the servo to turn using an Arduino, but it kept jittering. I tried replacing Vin and GND with a power supply from the 220 lab, but still no luck. I suspected the PWM signal from the Arduino, but when Ronit lent me a mini servo from 18-349, the Arduino drove it fine. I’m also confused because the servo drew close to 1 A while it was jittering. I’m not sure whether the problem is in how I’m wiring or controlling the motor, or whether the motor itself is faulty. I asked around and someone suggested looking into stepper motors. Their advantage is continuous rotation, so I wouldn’t need to worry about the wheel diameter. However, stepper motors are power drains, since they draw current even when holding still and doing no work at all.

contour

con⋅tour | /ˈkänˌto͝or/

(noun) A curve joining all the continuous points along a boundary that have the same color or intensity.

I did achieve my goal of implementing image cropping. The current frame is smoothed and converted to grayscale, and then the absolute difference is taken between it and the reference frame. A binary threshold is applied to this frame delta: if a pixel’s intensity is above a set threshold, the pixel is set to maximum intensity; otherwise it is set to zero.
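To make the frame delta and threshold steps concrete, here is a minimal sketch in plain Python on nested lists of grayscale values. It is not the actual script: the function names `frame_delta` and `binary_threshold` and the default maximum of 255 are assumptions for illustration, and the smoothing step is left out. In OpenCV, the same two steps are usually done with `cv2.absdiff` and `cv2.threshold`.

```python
def frame_delta(current, reference):
    """Per-pixel absolute difference between two same-sized grayscale frames."""
    return [
        [abs(c - r) for c, r in zip(cur_row, ref_row)]
        for cur_row, ref_row in zip(current, reference)
    ]


def binary_threshold(delta, thresh, max_value=255):
    """Set pixels above `thresh` to `max_value` and all others to 0."""
    return [[max_value if p > thresh else 0 for p in row] for row in delta]


# Hypothetical 3x3 frames: only the center pixel changes noticeably.
reference = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
current = [[12, 10, 9], [10, 200, 10], [10, 10, 11]]

delta = frame_delta(current, reference)
mask = binary_threshold(delta, thresh=25)
```

Small differences such as sensor noise fall below the threshold and vanish from the mask, which is why the threshold value is one of the parameters that needs tuning.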

For each contour in the frame delta, if the contour area is greater than the minimum contour area parameter, the contour is considered to be actual motion. The image is cropped around all significant contours. To track where to crop, a bounding rectangle is drawn around each contour, and the outermost corners across all of these rectangles set the cropping region. The original and cropped frames are written to a file, and the smoothed grayscale current frame is saved as the new reference frame.
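The cropping logic can be sketched roughly as follows, again in plain Python rather than the real script. The names `crop_region` and `crop` are hypothetical, and the sketch assumes each contour has already been reduced to a bounding rectangle `(x, y, w, h)` and an area (in OpenCV these would typically come from `cv2.boundingRect` and `cv2.contourArea`).

```python
def crop_region(rects, areas, min_area):
    """Return (x0, y0, x1, y1) enclosing every rectangle whose contour
    area exceeds `min_area`, or None if no contour is significant."""
    kept = [rect for rect, area in zip(rects, areas) if area > min_area]
    if not kept:
        return None
    x0 = min(x for x, y, w, h in kept)
    y0 = min(y for x, y, w, h in kept)
    x1 = max(x + w for x, y, w, h in kept)
    y1 = max(y + h for x, y, w, h in kept)
    return (x0, y0, x1, y1)


def crop(frame, region):
    """Slice a frame (list of pixel rows) down to the given region."""
    x0, y0, x1, y1 = region
    return [row[x0:x1] for row in frame[y0:y1]]


# Hypothetical contours: the third is too small to count as motion.
rects = [(2, 3, 4, 5), (10, 1, 3, 3), (0, 0, 1, 1)]
areas = [20, 9, 1]
region = crop_region(rects, areas, min_area=5)

frame = [[x + 100 * y for x in range(16)] for y in range(10)]
cropped = crop(frame, region)
```

Returning `None` when nothing passes the area filter lets the caller skip writing a cropped frame when there is no real motion.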

This week was high in discouragement and low in progress. Next week, I will ask Sam and Cyrus for advice at the beginning of the week, and have new parts picked and an order in by Tuesday. I will also have the parameters for the motion detection script tuned and tested as it is integrated with Jing’s ML.