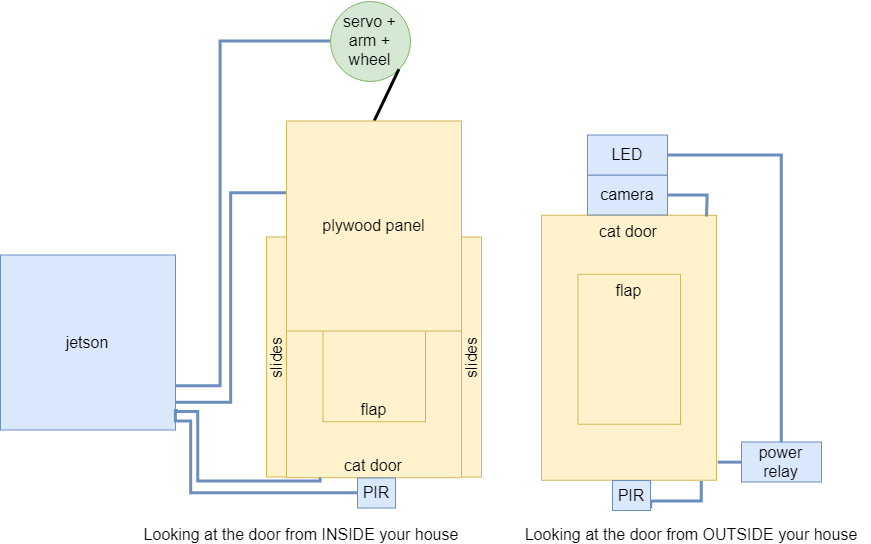

I laser cut all the door parts with the help of my friends Alfred Chang and Christian Manaog. Two identical rectangles, the two sides of the hollow door, were laser cut from the 2ft x 2ft plywood. Professor Nace helped me cut 1-inch cube spacers on the band saw and taught me how to use the power drill. We used bolts to keep the two plywood sides aligned and the cubes to keep them evenly spaced. I installed the pet door, and here is the result:

Here is the installation guide for the drawer slides: https://images.homedepot-static.com/catalog/pdfImages/d5/d57bf3c8-71fe-4ed0-af53-a427049d4421.pdf. It will be adapted to fit our needs. A 23cm x 21cm plywood panel has already been cut. #8-32 machine screws will be used to secure the panel to the drawer members. Two 12in x 1in x 1in rectangular prisms will be cut using a band saw. Wood screws will be used to attach the cabinet members to the rectangular prisms, and #8-32 machine screws will be used to attach the prisms to the plywood. The solenoid will be secured using #4-40 machine screws and the servo will be secured using #6-32 machine screws.

Since the panel is 21cm high and the servo rotates 180 degrees, the wheel diameter needs to be about 13cm: if the panel is lifted by a cord winding onto the wheel, a half turn takes up half the wheel's circumference, so πd/2 = 21cm gives d = 42/π ≈ 13.4cm. The bottom of the wheel needs to be 21cm higher than the top of the panel. There is not enough space above the pet door, so I will need to elevate the servo. I plan to laser cut and band saw a few more parts to build an extension mount. We don't have time to order more parts, re-cut the plywood, and rebuild the door. Since this is a prototype, that is acceptable, and because the servo extension mount is removable, the door is also easier to store.
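The wheel sizing can be checked with a few lines of arithmetic. This is a minimal sketch, assuming the panel hangs from a cord that winds onto the servo wheel (arc length s = rθ) and ignoring cord thickness; the function names are hypothetical. It computes the diameter needed for a full 21cm lift and how far a 13cm wheel would actually lift the panel.

```python
import math

# Assumption: the panel is raised by a cord winding onto a wheel on the servo,
# so rotating by theta radians takes up r * theta of cord (cord thickness ignored).
PANEL_TRAVEL_CM = 21.0   # panel height from the log; it must be lifted fully
SERVO_SWEEP_DEG = 180.0  # servo rotation range from the log


def wheel_diameter_cm(travel_cm: float, sweep_deg: float) -> float:
    """Diameter at which one full servo sweep winds up travel_cm of cord."""
    radius = travel_cm / math.radians(sweep_deg)  # from s = r * theta
    return 2 * radius


def lift_cm(diameter_cm: float, sweep_deg: float) -> float:
    """Cord taken up (panel lift) by a wheel of the given diameter over one sweep."""
    return (diameter_cm / 2) * math.radians(sweep_deg)


d = wheel_diameter_cm(PANEL_TRAVEL_CM, SERVO_SWEEP_DEG)
print(f"required diameter: {d:.1f} cm")                                # 13.4 cm
print(f"lift with 13cm wheel: {lift_cm(13.0, SERVO_SWEEP_DEG):.1f} cm")  # 20.4 cm
```

Under these assumptions, a 13cm wheel lifts the panel roughly 20.4cm, a few millimetres short of 21cm, so rounding the diameter up to about 13.5cm would leave a small margin.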

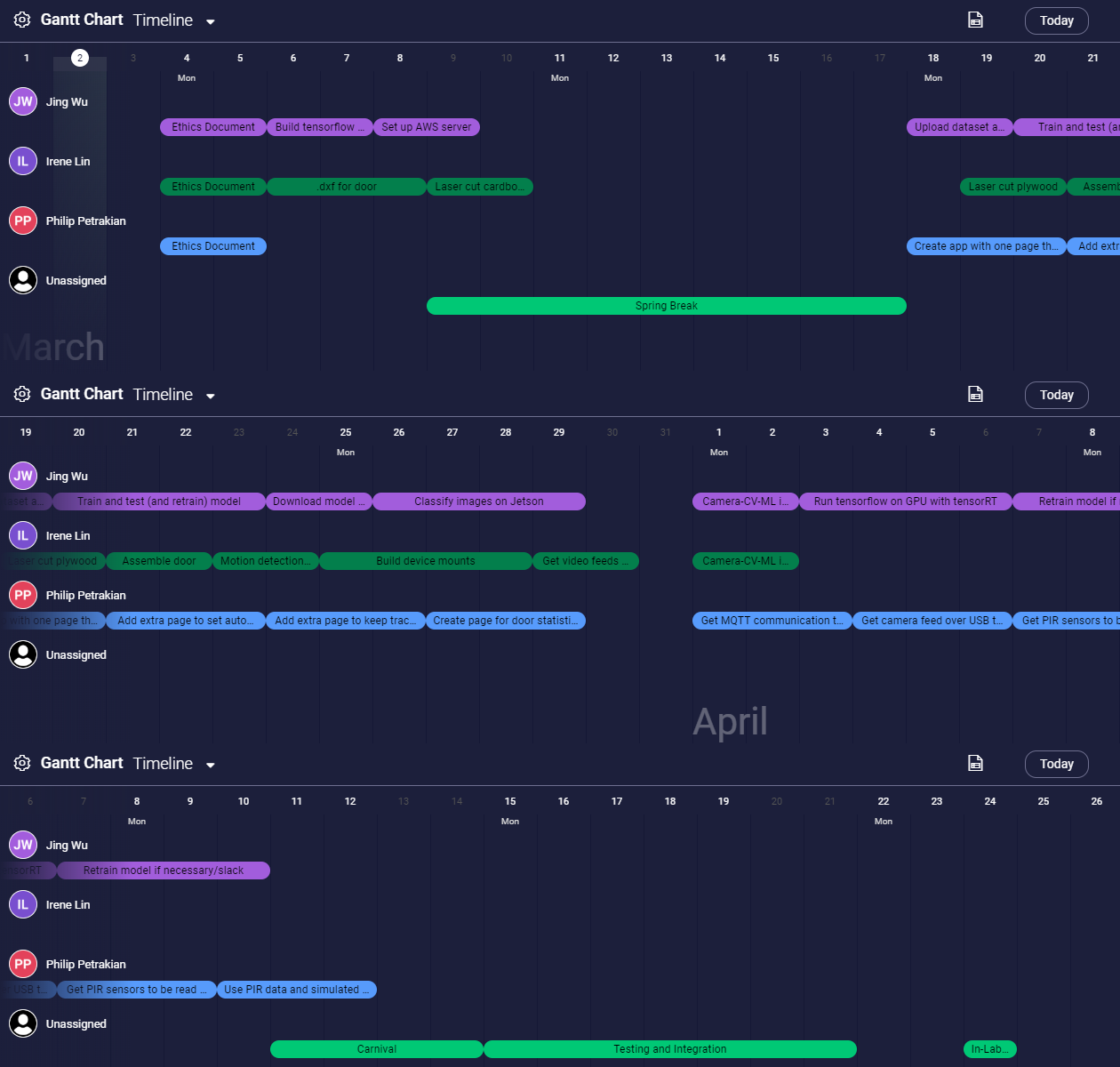

Parts have arrived, and I am on schedule to complete the door by March 23 and the computer vision script by March 30, which keeps me on track to be ready for integration on April 1.

I can use the power drill myself to install the drawer slides and panel, and will only need help with the band saw. The deliverable for March 23 is the completed door.