Jerry:

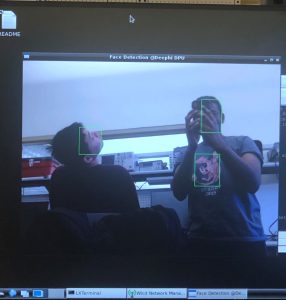

I successfully quantized and compiled the trained YOLOv3-tiny network, so it now runs on the Ultra96 board as a demo that takes in a camera feed and shows bounding boxes. Right now the DNNDK version of the network doesn’t match the behavior of the original (so the bounding boxes are wrong), but I believe I know the reason for the discrepancy. We should have a working version of this demo soon.

Once this is done, I will return to implementing the lower-power modes.

Karthik:

Because all of the materials for the project have arrived, I started and finished assembling the pan-tilt servo mount. The mount lets us move the camera, once attached, both horizontally and vertically as needed to track a person. There were some complications with the screws on the servo motors, but we eventually got them off, even if our methods were less than conventional, to say the least. Once Jerry finishes getting the neural network loaded, we will start working on the control algorithms for moving the servos.
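As a rough illustration of what such a control algorithm could look like, here is a minimal sketch of a proportional tracking step: it nudges the pan and tilt angles toward the center of a detected bounding box. Nothing here is our actual implementation. The names `PanTilt` and `track_step`, the 0 to 180 degree limits, the gain, and the sign conventions (positive pan moves right, positive tilt moves down) are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PanTilt:
    """Hypothetical container for the current mount angles, in degrees."""
    pan_deg: float = 90.0
    tilt_deg: float = 90.0


def clamp(value, low, high):
    """Keep value inside [low, high]."""
    return max(low, min(high, value))


def track_step(state, box, frame_w, frame_h, gain_deg=10.0, limits=(0.0, 180.0)):
    """Return new angles that move the camera toward the box center.

    box is (x_min, y_min, x_max, y_max) in pixels.
    gain_deg is the correction applied when the box sits at the frame edge
    (an assumed tuning value, not a measured one).
    """
    cx = (box[0] + box[2]) / 2.0
    cy = (box[1] + box[3]) / 2.0
    # Normalized error in [-1, 1]; 0 means the box is already centered.
    err_x = (cx - frame_w / 2.0) / (frame_w / 2.0)
    err_y = (cy - frame_h / 2.0) / (frame_h / 2.0)
    # Assumed sign convention: increasing pan moves right, increasing tilt moves down.
    pan = clamp(state.pan_deg + gain_deg * err_x, *limits)
    tilt = clamp(state.tilt_deg + gain_deg * err_y, *limits)
    return PanTilt(pan, tilt)
```

Calling `track_step(PanTilt(), (480, 200, 640, 280), 640, 480)` would shift the pan angle toward the right side of the frame while leaving tilt unchanged, since that box is vertically centered. On real hardware the signs and gain would have to be checked against how the servos are actually mounted.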

To help this process go faster, I will start working on a hello-world program that proves we can interface with the servo motor. Overall I think we are making good progress, but we still need to pick up the pace if we want to finish on time.

Nathan:

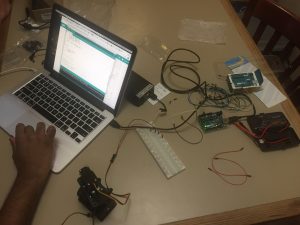

While the latter part of my week was taken up by a Model UN conference that I had to spend significant time hosting, earlier in the week I got access to the servo hardware and a chance to try out some basic servo functionality. I started with some basic code from the Arduino Servo library, as that is one of the most ubiquitous control schemes and serves as a good proof of concept before trying CircuitPython. Unfortunately, I discovered that there seems to be a problem or incompatibility with either our servo or the Arduino ecosystem, as the servo refused to do anything other than turn counter-clockwise until it hit the limit of its range of motion. Controlling the analog pin output manually did nothing to address this issue. To illustrate the complication involved, we found that even when we were not nominally transmitting a PWM signal to the servo (so it should have been at rest), it consistently moved by ~10 degrees when a stove-top igniter ~4 m away sparked. This is troubling to say the least, and we are investigating the cause as a group. I am still somewhat behind schedule, though the coming week should be lighter than past ones. I will focus on servo/motor control and finish the soldering needed to get the Feather boards functional.
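For reference while debugging, here is a small sketch of the PWM signal a typical hobby servo expects, so that the numbers can be compared against what the board actually outputs. It assumes the common convention of a 50 Hz signal with pulse widths of roughly 1000 to 2000 µs across a 180 degree range; our servo's real limits may differ and should be taken from its datasheet. The function names are made up for this example, and the 16-bit duty value is included because CircuitPython expresses PWM duty cycle as a 16-bit number.

```python
def angle_to_pulse_us(angle_deg, min_us=1000.0, max_us=2000.0, range_deg=180.0):
    """Map a target angle to a pulse width in microseconds.

    min_us, max_us, and range_deg are assumed typical values, not
    measurements from our servo.
    """
    if not 0.0 <= angle_deg <= range_deg:
        raise ValueError("angle outside the servo's assumed range")
    return min_us + (max_us - min_us) * angle_deg / range_deg


def pulse_to_duty_u16(pulse_us, freq_hz=50.0):
    """Convert a pulse width to a 16-bit duty-cycle value at the given frequency."""
    period_us = 1_000_000.0 / freq_hz  # 20000 us per cycle at 50 Hz
    return round(pulse_us / period_us * 65535)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        pulse = angle_to_pulse_us(angle)
        print(angle, pulse, pulse_to_duty_u16(pulse))
```

Under these assumptions the midpoint of the range corresponds to a 1500 µs pulse, which is a 7.5% duty cycle at 50 Hz. A servo that drives to one end stop regardless of the commanded angle may be receiving pulses outside its valid range, so checking the real output against these values on an oscilloscope could be a useful first step.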

Team:

In addition to the individual contributions described above, Karthik and Jerry completely assembled the servo mount, which is now ready for a camera attachment to enable full pan and tilt functionality. Towards the end of the week we also worked together to try to diagnose the servo problems, with a picture of our setup shown below. Note that using the battery as our power source serves as a good proof of concept for how the servos will be powered in the final system, control dynamics aside.

Overall, we are roughly on track, but we could fall slightly behind depending on how long it takes us to resolve the issues we saw this week.