Jerry:

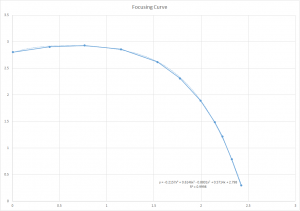

As mentioned in the team report, we worked hard to port all of our code to the Arduino library. In that version of the code, the focus and zoom controls moved independently of one another, so focus was lost while the zoom was changing. The zoom controls were also quite slow. I realized that the slow zoom was caused by a bottleneck on the I2C bus, and that the bus clock could be configured to run as much as 10 times faster than the default. Over the week, I modified the Arduino code to adjust zoom and focus simultaneously, so the camera never loses focus while zooming in, and enabled the faster I2C clock so that zooming takes much less time.
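To illustrate the idea of moving zoom and focus together, here is a minimal Python sketch (not our actual Arduino code). It assumes a hypothetical calibration table, `CALIBRATION`, that maps zoom motor positions to the focus position that keeps the image sharp; the table values, the function names, and the `chunk` size are all made up for illustration.

```python
from bisect import bisect_right

# Hypothetical calibration table: (zoom steps, focus steps that keep the image sharp).
# Real values would have to be measured on the lens.
CALIBRATION = [(0, 0), (1000, 350), (2000, 900), (3000, 1800)]


def focus_for_zoom(zoom_steps):
    """Linearly interpolate the in-focus focus position for a zoom position."""
    zooms = [z for z, _ in CALIBRATION]
    # Clamp to the calibrated range so we never extrapolate.
    zoom_steps = max(zooms[0], min(zooms[-1], zoom_steps))
    # Index of the upper calibration point of the segment containing zoom_steps.
    i = min(bisect_right(zooms, zoom_steps), len(zooms) - 1)
    (z0, f0), (z1, f1) = CALIBRATION[i - 1], CALIBRATION[i]
    return round(f0 + (f1 - f0) * (zoom_steps - z0) / (z1 - z0))


def lockstep_moves(start_zoom, target_zoom, chunk=250):
    """Yield (zoom, focus) targets in small chunks so both motors advance together."""
    step = chunk if target_zoom >= start_zoom else -chunk
    zoom = start_zoom
    while zoom != target_zoom:
        if step > 0:
            zoom = min(zoom + step, target_zoom)
        else:
            zoom = max(zoom + step, target_zoom)
        yield zoom, focus_for_zoom(zoom)
```

Each yielded pair would be sent to the two motors before the next chunk starts, so the focus never lags far behind the zoom. A smaller `chunk` keeps the image sharper during the move at the cost of more bus traffic.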

When that was done, I tried to improve the YOLOv3 neural network by getting a larger data set with clearer images and by performing data augmentation on it to improve the network’s ability to handle bounding boxes that extend outside the image. However, the network trained on the new data set appeared to perform worse. It turned out that the data set I used (Google’s Open Images data set) had many missing and inaccurate bounding boxes, making it much lower quality than COCO and the Caltech Pedestrian dataset despite its larger size and higher-quality images. I think I will still try to re-train with just data augmentation.
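One common way to produce training examples with boxes that run off the edge of the image is to crop the image and clip the labels to the crop window. The sketch below shows that idea in plain Python; it is an assumption about how such an augmentation could work, not the exact pipeline I used, and `crop_boxes` and `min_visible` are hypothetical names.

```python
def crop_boxes(boxes, crop, min_visible=0.3):
    """Map (x1, y1, x2, y2) boxes into a crop window and clip them to its edges.

    Boxes whose visible area fraction inside the crop is below min_visible
    are dropped, since a sliver of a person is a poor training label.
    """
    cx1, cy1, cx2, cy2 = crop
    out = []
    for x1, y1, x2, y2 in boxes:
        # Intersection of the box with the crop window.
        ix1, iy1 = max(x1, cx1), max(y1, cy1)
        ix2, iy2 = min(x2, cx2), min(y2, cy2)
        if ix2 <= ix1 or iy2 <= iy1:
            continue  # box lies entirely outside the crop
        visible = (ix2 - ix1) * (iy2 - iy1) / ((x2 - x1) * (y2 - y1))
        if visible >= min_visible:
            # Shift into the crop's coordinate frame.
            out.append((ix1 - cx1, iy1 - cy1, ix2 - cx1, iy2 - cy1))
    return out


boxes = [(10, 10, 50, 90), (80, 20, 120, 100), (150, 150, 180, 180)]
clipped = crop_boxes(boxes, crop=(0, 0, 100, 100))
```

Here the second box is cut in half by the right edge of the crop and is kept in clipped form, while the third falls outside the crop and is dropped.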

I have also implemented a mechanism to switch between multiple targets in the Ultra96 code, locking onto one person for a set period of time before switching to another. This is one of the last items left on our checklist of features to implement.
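The switching logic can be sketched roughly as follows. This is a simplified Python illustration rather than the Ultra96 code itself; it assumes the detector hands us stable integer ids for each visible person, and the `TargetSwitcher` class and its `dwell_s` parameter are hypothetical.

```python
class TargetSwitcher:
    """Lock onto one target for dwell_s seconds, then rotate to the next one."""

    def __init__(self, dwell_s=5.0):
        self.dwell_s = dwell_s
        self.current = None
        self.locked_at = None

    def update(self, visible_ids, now):
        """Return the id to track, given the visible ids and a timestamp in seconds."""
        if not visible_ids:
            self.current = None
            self.locked_at = None
            return None
        ids = sorted(visible_ids)
        if self.current not in ids:
            # No lock yet, or the locked target left the frame: pick a new one.
            self.current = ids[0]
            self.locked_at = now
        elif now - self.locked_at >= self.dwell_s and len(ids) > 1:
            # Dwell time is up and someone else is visible: move to the next id.
            self.current = ids[(ids.index(self.current) + 1) % len(ids)]
            self.locked_at = now
        return self.current
```

Calling `update` once per frame with the current detections keeps the camera on one person until the dwell time expires, and falls back to another visible person immediately if the locked target disappears.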

Karthik Natarajan:

This week I have been working on saving the video stream to the SD card on the board so we can review the footage when necessary. I initially tried to use GStreamer so we could take the compressed video directly from the camera. However, this approach resulted in a lot of compilation errors due to “omxh264dec”, “h264parse”, or some other package not being installed. To fix that, I even tried installing all of the plugin packages available for GStreamer, but to no avail :'(. After toiling with this for a fairly long time, I moved over to OpenCV, which, after some changes to the Makefile, started saving uncompressed video on the board. However, if we try to compress the frames with OpenCV, our code runs slowly and drops frames. As of right now, I am therefore looking into both GStreamer and OpenCV to figure out which option would better solve this problem. Earlier in the week, I also helped port the CircuitPython code to Arduino C, as mentioned in the team report.

Nathan:

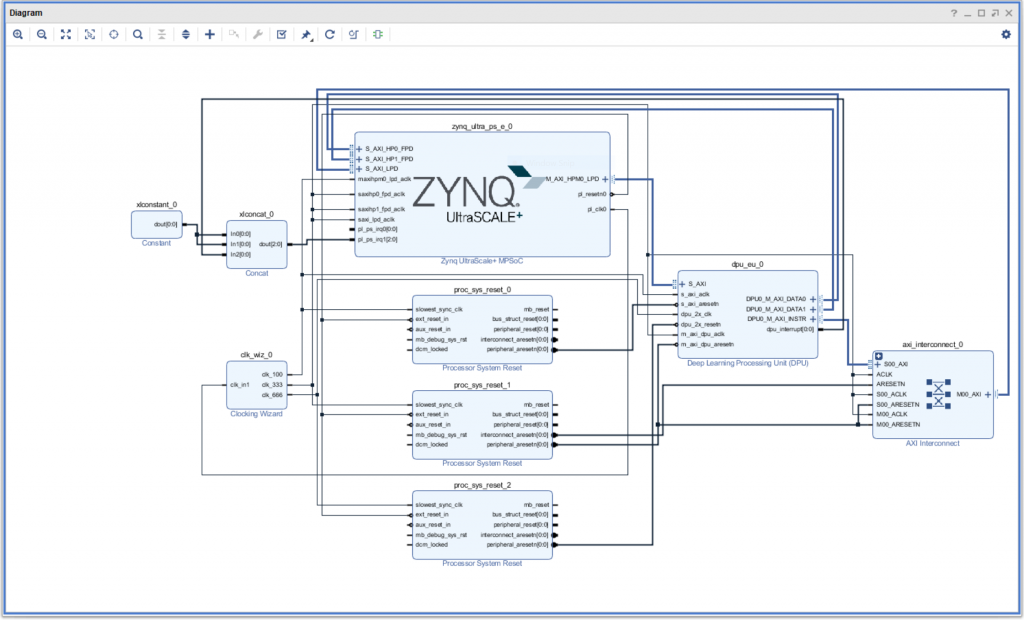

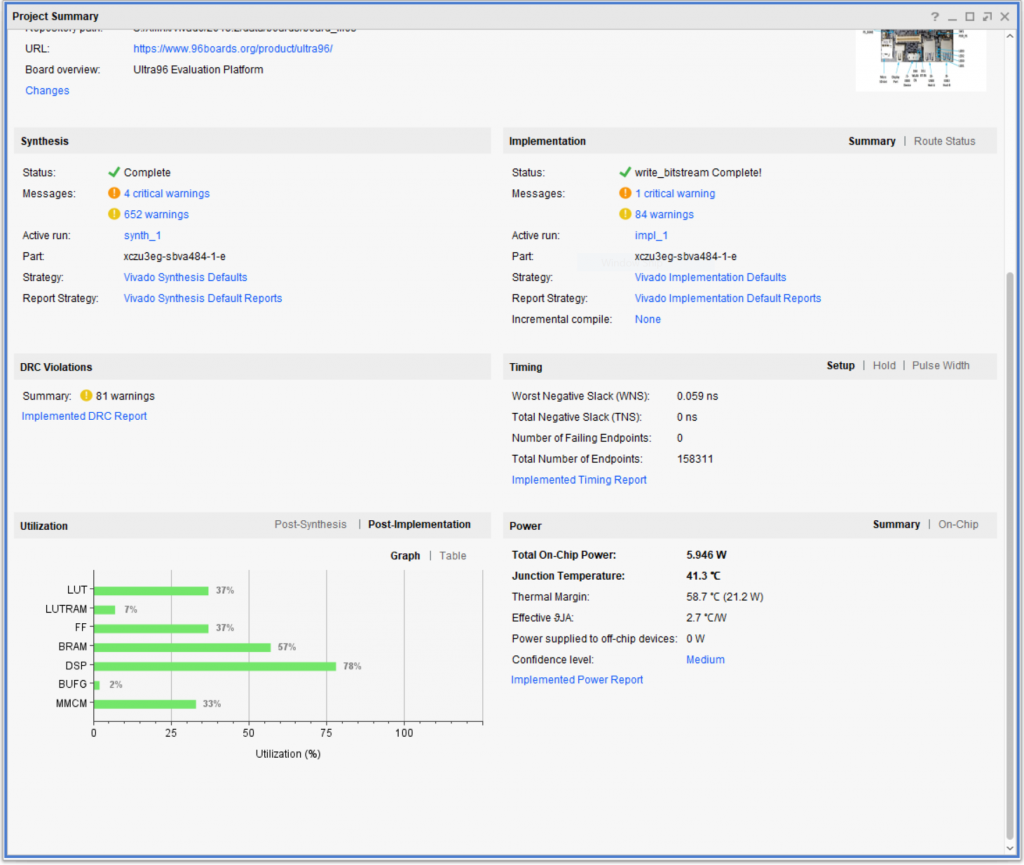

After the Monday demo (see Team section for details), I spent my time gathering measurement data and preparing the presentation for this upcoming Monday or Wednesday. The main topic of the presentation will be the evaluation of our system, including power and identification accuracy measurements, so I’ve been focusing on measuring the power draw of the system and making organized comparisons to our original requirements. To that end, I’ve also been testing different DPU configurations so that we can choose the optimal one for our final product, based primarily on framerate data from running our neural net, though I’m also experimenting with some DeePhi profiling tools. I’m also updating past diagrams for the presentation, including noting all the additional customizations we’ve made since the design review. Once this presentation is done, I will move on to working on the poster and wrapping up the construction of the enclosure, for which I picked up the internal components earlier this week.

Team:

It’s crunch time for the project, but while there are still a number of tasks to accomplish before the end, we feel generally positive going into the final stretch. All of the major components are functional, and the remaining tasks (integration of the motion detection, tuning of power, tuning of tracking, and enclosure) are either simple or just polishing existing aspects of the system.

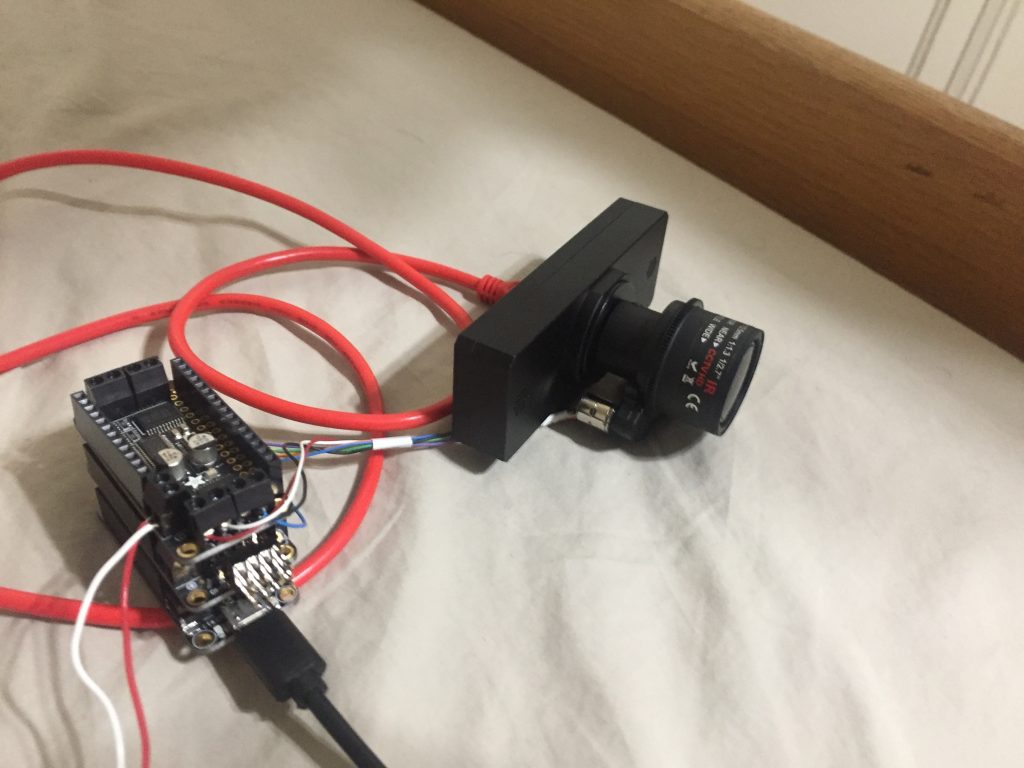

We had our last in-lab demo earlier in the week, and for that we finalized the integration of the pan, tilt, and zoom systems. We unfortunately had to convert our code from CircuitPython to Arduino C to fit within the Feather M0’s RAM limit (32 KB), but that only temporarily slowed us down. We had a few bugs regarding the zoom behavior, but those have since been fixed, and we’ve sped up the motion system’s performance dramatically by tuning the I2C bus used for communication between the Featherboard and Featherwings.
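As a rough back-of-the-envelope check on why the I2C clock matters, the Python sketch below estimates the time for a single I2C write. It assumes the Arduino default of 100 kHz and a 1 MHz fast setting (10 times the default), counts 9 clock cycles per byte (8 data bits plus the ACK bit), and approximates the START and STOP conditions as one clock each; the 4-byte payload is a made-up example, and clock stretching and software overhead are ignored.

```python
def i2c_write_time_us(payload_bytes, clock_hz):
    """Estimate the duration of one I2C write transaction in microseconds."""
    # Address byte + payload bytes, 9 clocks each (8 data bits + ACK),
    # plus roughly one clock each for the START and STOP conditions.
    clocks = 9 * (1 + payload_bytes) + 2
    return clocks * 1_000_000 / clock_hz


slow = i2c_write_time_us(4, 100_000)    # assumed default clock
fast = i2c_write_time_us(4, 1_000_000)  # assumed 10x faster clock
speedup = slow / fast
```

Because every motor command goes over the bus, the time per command scales inversely with the clock rate in this simple model, which is consistent with the large speedup we saw.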

The outstanding risks are the integration/completion of the remaining components (listed above), but there is no longer anything that fundamentally threatens the integrity of the project. By the end of this coming week, the remaining tasks should be complete, and we should be essentially ready for the final demo. Accordingly, the design of the system and Gantt chart remain unchanged from last week.