Karthik Natarajan:

This week I spent most of my time writing code to interact with the servos and the motion sensor. Specifically, on Monday and Wednesday, I worked on getting the servo libraries working so we could move our board. Initially, I thought the problem was with my code, so I kept looking for the error there. However, after looking at the signal on the oscilloscope with both Jerry and Nathan, we realized that the pulse we were sending was not what we assumed. Instead of a 1 ms pulse, we were getting a distorted spike. To fix this, we tied the ground of the Arduino we were using to the ground of the power source so that both shared a common reference. With that fixed, the code swept both servos on the pan-tilt configuration. After that, with a little modification to the code, I was able to make the servos move only when the sensor detected motion. Through this we learned that the motion sensor is active low. Overall we should be on schedule for both the demo and the final project deadline. Most of what is left is integration. Next, I will probably work on code that interfaces with the neural network.
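The servo timing and the active-low sensor logic described above can be sketched in plain C++ as follows. This is only an illustration, not our actual Arduino sketch: the 1000 to 2000 µs pulse range, the 10 degree step, and the names `angleToPulseUs`, `motionDetected`, and `Sweep` are all assumptions made for the example.

```cpp
// Assumed hobby-servo timing: 1000 us at 0 degrees, 2000 us at 180 degrees.
// Real servos vary, so check the datasheet before relying on these numbers.
constexpr int kMinPulseUs = 1000;
constexpr int kMaxPulseUs = 2000;
constexpr int kMaxAngleDeg = 180;

// Convert an angle in degrees to a pulse width in microseconds,
// clamping out-of-range angles.
int angleToPulseUs(int angleDeg) {
    if (angleDeg < 0) angleDeg = 0;
    if (angleDeg > kMaxAngleDeg) angleDeg = kMaxAngleDeg;
    return kMinPulseUs + (kMaxPulseUs - kMinPulseUs) * angleDeg / kMaxAngleDeg;
}

// The motion sensor is active low: a LOW pin level means motion was detected.
bool motionDetected(bool pinLevelHigh) {
    return !pinLevelHigh;
}

// Back-and-forth sweep that only advances while motion is detected.
struct Sweep {
    int angleDeg = 0;
    int stepDeg = 10;  // hypothetical step size

    // Takes the raw sensor pin level and returns the pulse width to send.
    int update(bool pinLevelHigh) {
        if (motionDetected(pinLevelHigh)) {
            int next = angleDeg + stepDeg;
            if (next > kMaxAngleDeg || next < 0) {
                stepDeg = -stepDeg;          // reverse direction at either end
                next = angleDeg + stepDeg;
            }
            angleDeg = next;
        }
        return angleToPulseUs(angleDeg);
    }
};
```

On the Arduino, the value returned by `update` would be passed to something like the Servo library's `writeMicroseconds`, and the pin level would come from `digitalRead` on the sensor pin.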

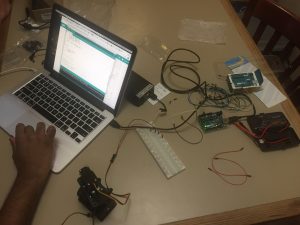

Nathan Levin:

This week I soldered the Adafruit boards together and started working with them. I have a basic proof of concept for UART communication working on the Feather’s side (see attached image), though it is currently buggy: nothing past the first character is displayed. I think a little more tweaking of the serial settings should fix this. I’m currently working on getting communication and control working between the Adafruit board and the Ultra96 for controlling the servos, which is the last major component needed before the demo on Monday.
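As a rough sketch of what the Feather side of the servo control link could look like, the plain C++ below buffers received bytes until a newline and then parses a pan/tilt command. The `P<pan>,T<tilt>` message format and the names `PanTilt` and `CommandReader` are hypothetical, chosen only for this example; we have not settled on a protocol, and this is not a diagnosis of the current display bug.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>

// Hypothetical command format: "P90,T45\n" (pan and tilt in degrees).
struct PanTilt {
    int panDeg;
    int tiltDeg;
};

class CommandReader {
public:
    // Feed one received byte. Returns true and fills `out` once a complete,
    // well-formed line has arrived; otherwise keeps buffering or drops the
    // malformed line.
    bool feed(char byte, PanTilt& out) {
        if (byte == '\r') return false;  // ignore carriage returns
        if (byte != '\n') {
            if (line_.size() < kMaxLine) line_.push_back(byte);
            return false;
        }
        int pan = 0;
        int tilt = 0;
        char trailing = 0;
        // Exactly two fields must match; a third match means trailing junk.
        bool ok = std::sscanf(line_.c_str(), "P%d,T%d%c", &pan, &tilt, &trailing) == 2
                  && pan >= 0 && pan <= 180 && tilt >= 0 && tilt <= 180;
        line_.clear();
        if (ok) {
            out.panDeg = pan;
            out.tiltDeg = tilt;
        }
        return ok;
    }

private:
    static constexpr std::size_t kMaxLine = 32;  // cap on buffered bytes
    std::string line_;
};
```

In an Arduino sketch, `feed` would be called once for each byte returned by `Serial1.read()` while `Serial1.available()` is nonzero. Waiting for the terminating newline before acting means a partially received message never moves the servos.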

Relatedly, it looks like our Feather board has too little storage to hold all of the CircuitPython libraries we’d want, so we will use the Arduino ecosystem for now. This is a slight pain, but since we caught it now, it should not prove much of a long-term setback.

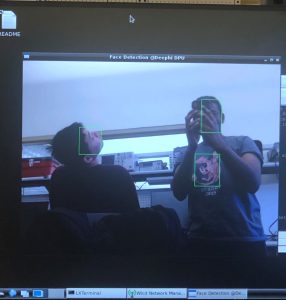

Jerry:

I’ve looked into turning off the fan for the board, which involves controlling one of the programmable logic I/O pins. There are some tutorials on how to route the pin to a custom datapath / IP block, but the main problem is that these steps typically occur before getting the boot image. Since we already have the final boot image, this may be more difficult. I have created a forum topic on Xilinx’s webpage and am waiting for responses; if controlling the fan isn’t possible with the existing boot image, we may fall back to wiring the fan differently so that it can be turned off with a hardware switch.

I have also learned how to create a new UART device on the Ultra96, which we can use to communicate with the FeatherBoard M0. Once the FeatherBoard side is set up, everything should be ready for a demo of the camera tracking a person in view. The motion sensor also works now, so the next step will be to wake the board with the motion sensor.

Team:

As a team, we worked together on some of the UART debugging and communication. Our immediate goal for this weekend is to get a functioning demo working for lab on Monday. We have individual subsystems working, but the key now is to integrate them so that the camera follows someone, with the motion subsystem moving it as directed by the software’s analysis.