For the past two weeks, my team and I worked on our project's design review to finalize the application structure and implementation design. We wrote the design review document together and discussed how each subcomponent of the project should work. My part was finalizing the user interface section.

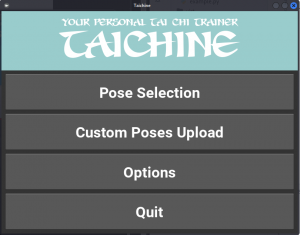

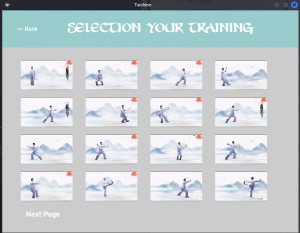

To complete the user interface section, I shifted my focus from tkinter to Kivy, the new package we chose for implementing our application interface. I learned the basics of creating and configuring widgets and wrote kv language files to simplify the layout design. Using these skills, I created prototypes of the main menu and the pose selection page, which are shown below. (Consider this my answer to the ABET question.)

For the application to run as expected, I need to get comfortable with Kivy's screen manager (the `ScreenManager` class), which handles switching between pages. I am working on it now and plan to have a functional application frame by next week. I also need to look into embedding a live camera feed in the training page, and I will start on that once the screen-switching work is mostly complete.

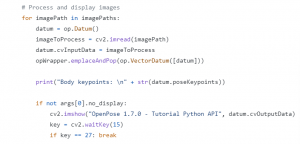

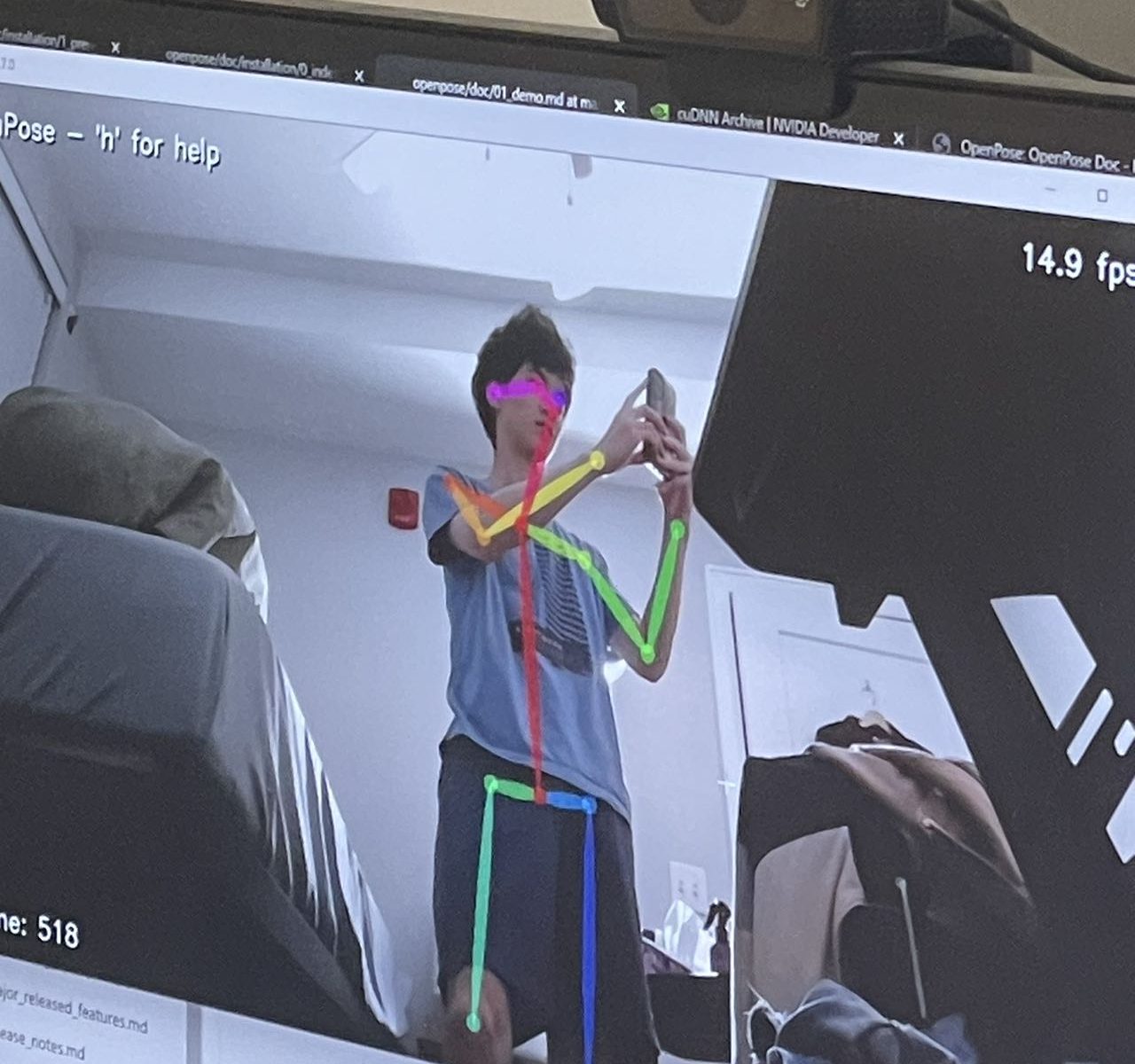

I am still trying to build and configure OpenPose on Windows. If necessary, I will ask Eric about the build process so that OpenPose runs on my computer by next week.

Overall, I am on schedule this week. Starting next week, however, I need to pay more attention to coordinating with my teammates, since integration of our application's components should begin soon.