This week, we met with Professor Kim and Ryan during the regular lab meetings. We discussed our goals for the interim demo and our current progress on the project. We also briefly talked about the mid-semester grades and the feedback that came with them.

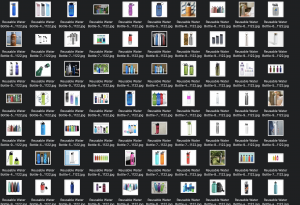

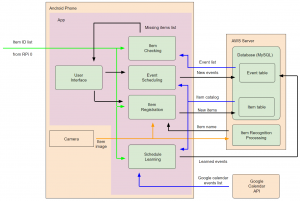

For the item recognition part, in addition to the training dataset I had already collected (250 images per item), I collected a separate test set of 150 images per item, none of which appear in the training set. The IDT library was useful for this purpose as well, because it supports separating training data from test data, which prevents the model from training on test images and skewing the test results. I also augmented the images by applying transformations, reflections, and distortions, generating many real-world-feasible, label-preserving images from each original image. Finally, I wrote Python and PyTorch code to build the CNN model.
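To illustrate the idea of label-preserving augmentation, here is a minimal sketch in plain Python. It is not the code I used for the project and does not use the IDT library: images are represented as small nested lists, and the helper names (`horizontal_flip`, `shift_right`, `augment`, `build_training_set`) are hypothetical. In practice the same idea would more likely be expressed with an image library such as torchvision's transforms.

```python
import random


def horizontal_flip(image):
    """Mirror each row; reflecting an item does not change its label."""
    return [row[::-1] for row in image]


def shift_right(image, pixels, fill=0):
    """Translate the image right by `pixels`, padding the left edge with `fill`."""
    width = len(image[0])
    pixels = max(0, min(pixels, width))
    return [[fill] * pixels + row[:width - pixels] for row in image]


def augment(image, label, rng, copies=3, max_shift=1):
    """Return `copies` randomly transformed (image, label) pairs."""
    samples = []
    for _ in range(copies):
        out = image
        if rng.random() < 0.5:
            out = horizontal_flip(out)
        out = shift_right(out, rng.randint(0, max_shift))
        samples.append((out, label))  # the label is carried over unchanged
    return samples


def build_training_set(train_items, rng):
    """Augment training items only; test images are never passed in here."""
    augmented = []
    for image, label in train_items:
        augmented.append((image, label))
        augmented.extend(augment(image, label, rng))
    return augmented
```

Only the training images go through `build_training_set`; the separately collected test images stay untouched, so the test results reflect images the model has never seen in any form.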

My progress is slightly behind schedule because training the CNN model took longer than I expected due to the large number of images. However, I am confident that I can integrate the item recognition part into the web application that Janet is working on, so that our group can demonstrate the feature at the interim demo.

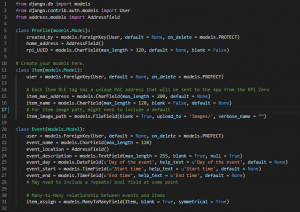

Next week, I plan to fully train the CNN model on the training dataset and evaluate it on the test dataset I collected this week. I will also look into integrating the CNN model into the web application, which is built with the Django framework, and into hosting the machine learning model on the AWS server.